Hands-On Experience of a GitOps Transformation with ArgoCD

TABLE OF CONTENTS

- Some bit of context

- Initial setup

- How we got there

- ArgoCD removed pain points

- Final words

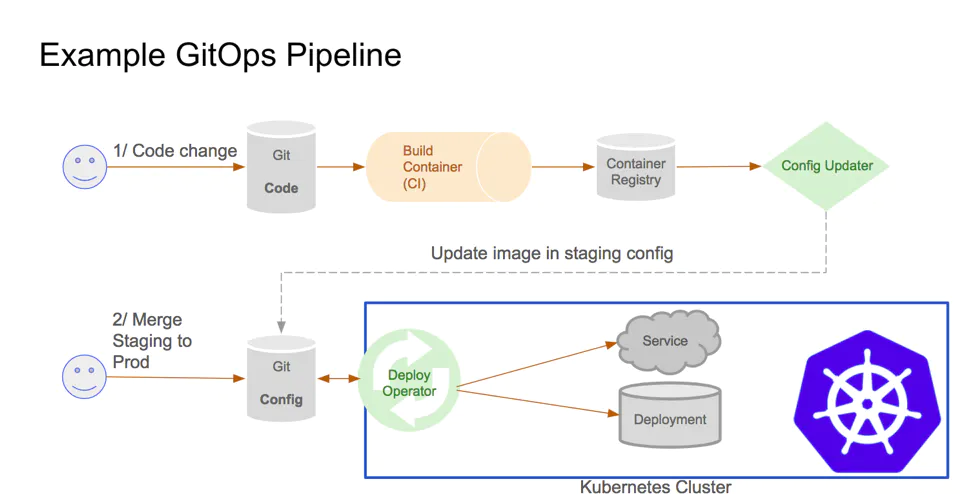

GitOps is a modern way to manage cloud-native systems that are powered by Kubernetes. It leverages a policy-as-code approach to define and manage every layer of the modern application stack - infrastructure, networking, application code, and the GitOps pipeline itself. -- Weaveworks

GitOps as a concept was established in 2017 by Weaveworks and has since been widely adopted in software delivery, with a growing number of CNCF tools being developed around it.

While at AirQo, I've had the opportunity to work in both traditional and GitOps environments. In this article, I share my experience from a DevOps perspective after making the shift in our delivery pipeline.

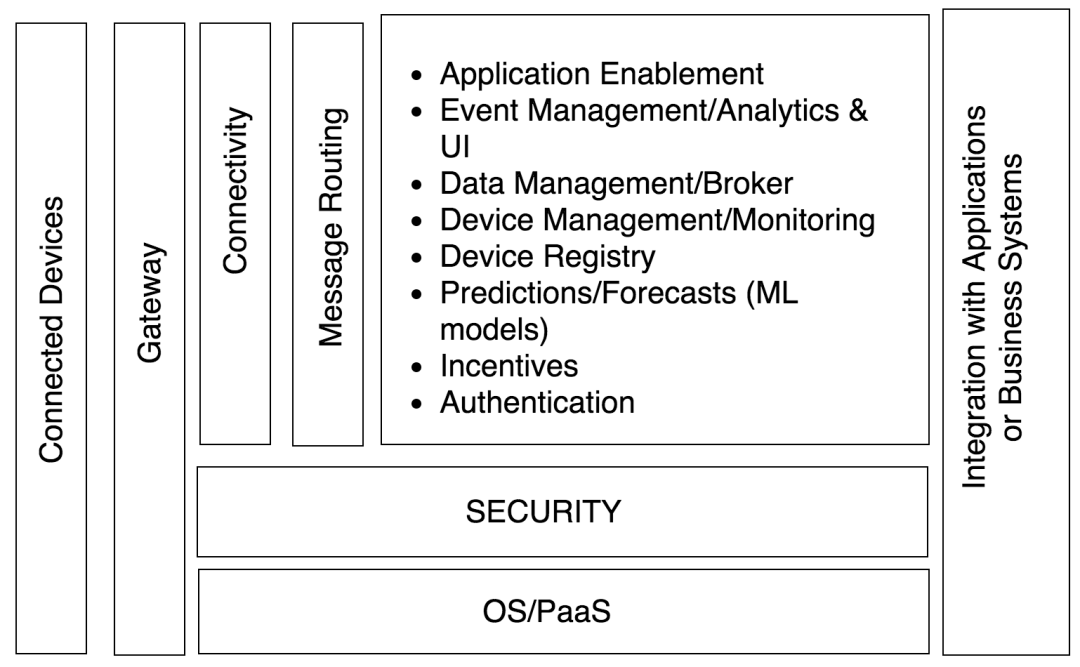

Some bit of context

AirQo is a cleantech startup whose vision is clean air for all African cities. A key part of getting there is providing our users with air quality information for Africa, so we have a number of software products for that purpose: mobile applications, web applications, and an API. Our API relies on a couple of backend microservices to transform the Particulate Matter (PM) data collected by our air quality monitors into consumption-ready information. We run our microservices on a few Kubernetes (k8s) clusters.

We use a Gitflow workflow in our mono-repository, with all the products and their corresponding k8s manifests in the same repo.

Initial setup

In the early days, our continuous delivery pipeline had both integration and deployment done by the same tool, GitHub Actions. All application images pointed to the "latest" tag within the k8s configurations, so it was the de facto tag for all application restarts. GitHub Actions built and pushed the images to Google Container Registry (GCR) under a uniquely identifiable image tag (e.g. prod-docs-123abc), but did not update the corresponding k8s manifest with that new tag. This meant that the deployed state always diverged from the state on GitHub, at least at the image tag level. Some of the challenges we faced include:

1. Difficulty in telling the actual resource definition of applications on the cluster.

There were times when quick or minor fixes were made to applications directly through kubectl without going through the git workflow. It was difficult for the rest of the team to know about such changes, given that they were rarely communicated or committed to GitHub. The image tag, of course, was one such application property we were never sure about.

2. The development team lacked visibility into their applications.

Most development team members weren't conversant with k8s and kubectl commands, for instance to view live application logs whenever the need arose. This made troubleshooting a step longer than necessary, since a DevOps engineer was needed to retrieve the logs.

3. A larger workload for the operations team, given that it was an operations-centric approach.

4. Operations engineers always had to have access to the clusters via a kubeconfig in order to carry out even very basic tasks.

5. Rolling back changes was more technical than necessary, given that we defaulted to the latest tag on GitHub. A DevOps engineer had to get the image tag of a previous application version from GCR, then run a kubectl patch command to update the application image tag with the previous one.
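To make the manual rollback in item 5 more concrete, here is a minimal Python sketch of the kind of kubectl patch command that had to be assembled by hand. The deployment name, namespace, container name, and registry path are hypothetical placeholders, not AirQo's actual values; only the prod-docs-123abc tag format comes from the text above. The sketch prints the command rather than running it.

```python
import json
import shlex


def build_rollback_command(deployment, namespace, container, image):
    """Return the kubectl argument list that pins a container to an older image.

    All names passed in are illustrative; adjust them to the real workload.
    """
    # Strategic merge patch: containers are matched by name, so only the
    # image field of the named container is changed.
    patch = {
        "spec": {
            "template": {
                "spec": {"containers": [{"name": container, "image": image}]}
            }
        }
    }
    return [
        "kubectl", "patch", "deployment", deployment,
        "--namespace", namespace,
        "--patch", json.dumps(patch),
    ]


if __name__ == "__main__":
    cmd = build_rollback_command(
        deployment="docs",          # hypothetical deployment name
        namespace="production",     # hypothetical namespace
        container="docs",           # hypothetical container name
        image="gcr.io/example-project/docs:prod-docs-123abc",  # hypothetical path
    )
    # Print a shell-quoted command instead of executing it.
    print(shlex.join(cmd))
```

Every rollback of this kind also left the cluster one step further from what was recorded on GitHub, which is the divergence described in item 1.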

How we got there

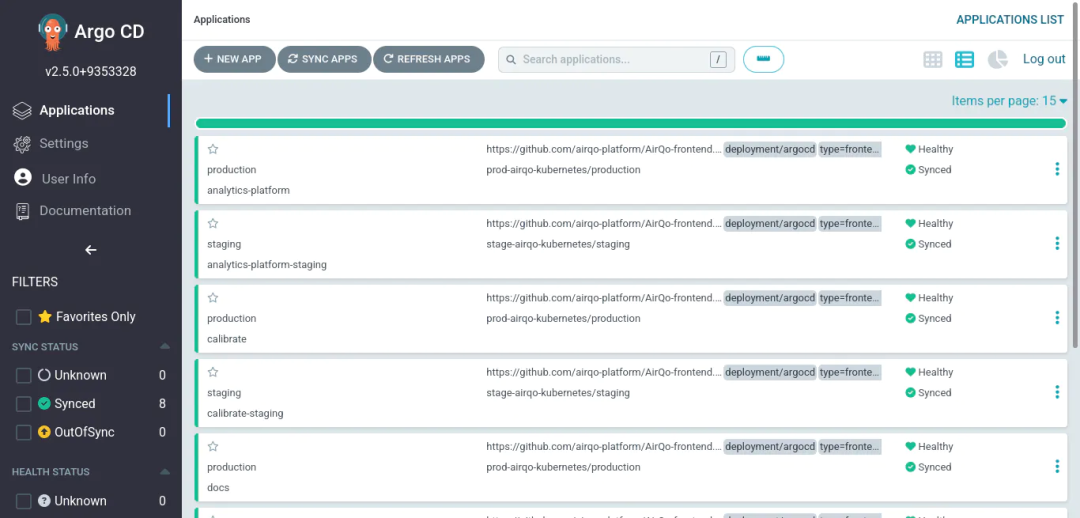

A mono-repository setup can present some challenges, and we faced one ourselves: the different products' delivery pipelines to production became intertwined, making it impossible to deploy one specific product at a time. The CI/CD pipelines were all dependent on the git workflow and thus had the same trigger. Perhaps I can discuss how we went about that in another article, but it was during my search for an alternative, autonomous trigger that I landed on ArgoCD. Up until then, I had minimal knowledge of GitOps, but I found it interesting as I read about it. Fascinated by how the methodology would address many of the challenges we faced, I immediately installed ArgoCD on one of our clusters. Due to its multi-tenant nature, I was able to set up applications in the different clusters and their respective projects under one roof, and subsequently onboarded the entire team onto it. We now use ArgoCD as our deploy operator but kept GitHub Actions as our CI tool and Config Updater for image tags on Helm (which we defaulted to).
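The Config Updater role mentioned above can be pictured with a short Python sketch. It is only an illustration under assumptions: it supposes a conventional Helm values file with a single `tag:` key under `image:`, and the function name `set_image_tag` and the repository path are hypothetical. The actual GitHub Actions step is not shown in this article.

```python
import re


def set_image_tag(values_text, new_tag):
    """Return values_text with the first `tag:` entry set to new_tag.

    Assumes a Helm values layout with one image tag key; this layout is
    an assumption made for the sketch.
    """
    # Match an indented `tag:` key and whatever value follows on that line.
    pattern = re.compile(r"^([ \t]*tag:[ \t]*).*$", flags=re.MULTILINE)
    updated, count = pattern.subn(
        lambda m: m.group(1) + '"' + new_tag + '"', values_text, count=1
    )
    if count == 0:
        raise ValueError("no 'tag:' key found in values file")
    return updated


if __name__ == "__main__":
    values = (
        "image:\n"
        "  repository: gcr.io/example-project/docs\n"  # hypothetical path
        '  tag: "latest"\n'
    )
    print(set_image_tag(values, "prod-docs-123abc"))
```

In a pipeline, the CI job would commit the updated file back to the repository, so the unique image tag is recorded in Git instead of living only in the registry.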

ArgoCD removed pain points

1. With ArgoCD, we are notified via Slack whenever the state of the deployed application diverges from the state on GitHub. The states are also automatically synced to match the GitHub definition, in line with the GitOps principle of Git as the source of truth. This ensures that, at a bare minimum, changes are documented on GitHub.

2. The development team is now able to self-serve on anything to do with their applications. The ArgoCD UI is so intuitive that they can carry out tasks ranging from creating new applications to accessing logs of existing ones without assistance from the operations team. This is a more developer-centric approach.

3. Rollbacks are now much easier. It's now a matter of reverting changes on the GitHub repo, and an older image tag will be deployed automatically.

4. As the DevOps engineer, I am now able to run basic operations on the clusters without typing or scripting commands. For instance, I can view the health statuses of all applications on the clusters from the comfort of my phone, without running any commands to switch cluster context.
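The automatic syncing in item 1 corresponds to the automated sync policy on an ArgoCD Application resource. The Python sketch below builds such a manifest as a dictionary and prints it as JSON, which kubectl also accepts. The application name, repository URL, chart path, project, and namespaces are all hypothetical; our real configuration is not reproduced here.

```python
import json


def argocd_application(name, repo_url, chart_path, dest_namespace):
    """Build an ArgoCD Application manifest with automated sync enabled."""
    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Application",
        "metadata": {"name": name, "namespace": "argocd"},
        "spec": {
            "project": "default",  # hypothetical project
            "source": {
                "repoURL": repo_url,
                "path": chart_path,
                "targetRevision": "HEAD",
                "helm": {"valueFiles": ["values.yaml"]},
            },
            "destination": {
                # In-cluster API server address; other clusters use their own URL.
                "server": "https://kubernetes.default.svc",
                "namespace": dest_namespace,
            },
            # automated: sync on every Git change; selfHeal: undo manual
            # changes made on the cluster; prune: delete resources removed from Git.
            "syncPolicy": {"automated": {"prune": True, "selfHeal": True}},
        },
    }


if __name__ == "__main__":
    app = argocd_application(
        name="docs",                                          # hypothetical
        repo_url="https://github.com/example-org/monorepo.git",  # hypothetical
        chart_path="k8s/docs",                                # hypothetical
        dest_namespace="production",                          # hypothetical
    )
    print(json.dumps(app, indent=2))
```

With a policy like this, reverting a commit on GitHub is enough to trigger the rollback described in item 3, since ArgoCD syncs the cluster to whatever the repository currently declares.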

Final words

Our adoption of GitOps is recent, but I can definitely tell that there's a lot we stand to gain from it at AirQo as we continue to explore it. I believe that what I've shared here only scratches the surface, and I trust that when you try it, you and your organisation could discover that this methodology solves challenges you had grown accustomed to.