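[Most Comprehensive Summary] Offline Reinforcement Learning (Offline RL): Datasets, Benchmarks, Classic Algorithms, Software, Competitions, Real-World Applications, and Core Algorithm Reviews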


Deep Reinforcement Learning Lab

Published 2022-12-31 09:38:35


Source: https://offlinerl.ai/

Supported by: Nanjing University and Polixir

Layout: OpenDeepRL

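Offline reinforcement learning was originally called Batch Reinforcement Learning. Sergey Levine et al. later used the term Offline Reinforcement Learning (Offline RL) in their 2020 survey, and that name is now the standard one. Offline RL can be defined as a data-driven form of the reinforcement learning problem: the agent (i.e., its policy) learns from previously collected trajectories, without interacting with the environment, with the goal of maximizing the expected return. The difference between offline and online RL is illustrated in the following figure: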

An illustration of offline RL. A key component of offline RL is the static dataset, which contains experience from past interactions. This experience can come from various sources: datasets are usually collected by experts, medium-level players, scripted policies, or human demonstrators. In the second phase, a policy is trained with an offline reinforcement learning algorithm. Finally, the learned policy is deployed directly in the real world.
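The three phases in the caption (collect a static dataset, train offline, deploy) can be sketched on a toy problem. This is a minimal sketch under stated assumptions, not a reference implementation: the 5-state chain MDP is hypothetical, a uniformly random behavior policy plays the role of the data collector, and tabular fitted Q-iteration (in the spirit of the FQI entry in the algorithm list) is the offline learner. All function names are illustrative and do not come from any library.

```python
import random
from collections import defaultdict

# Hypothetical toy MDP: a 5-state chain. Action 1 moves right, action 0 moves left.
# Reaching the last state yields reward 1 and ends the episode.
N_STATES, ACTIONS, GAMMA = 5, (0, 1), 0.9


def step(s, a):
    s_next = min(s + 1, N_STATES - 1) if a == 1 else max(s - 1, 0)
    done = s_next == N_STATES - 1
    return s_next, (1.0 if done else 0.0), done


def collect_dataset(n_episodes=200, max_len=20, seed=0):
    """Phase 1: log transitions from a uniformly random behavior policy."""
    rng = random.Random(seed)
    data = []
    for _ in range(n_episodes):
        s = 0
        for _ in range(max_len):
            a = rng.choice(ACTIONS)
            s_next, r, done = step(s, a)
            data.append((s, a, r, s_next, done))
            if done:
                break
            s = s_next
    return data


def offline_q_iteration(data, n_iters=100):
    """Phase 2: repeated Bellman backups that read only the logged transitions."""
    q = defaultdict(float)
    for _ in range(n_iters):
        targets = defaultdict(list)
        for s, a, r, s_next, done in data:
            bootstrap = 0.0 if done else max(q[(s_next, b)] for b in ACTIONS)
            targets[(s, a)].append(r + GAMMA * bootstrap)
        # Tabular "regression": each Q(s, a) becomes the mean of its targets.
        q = defaultdict(float, {k: sum(v) / len(v) for k, v in targets.items()})
    return q


def greedy_policy(q):
    """Phase 3: the policy that would be deployed without further interaction."""
    return {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)}


dataset = collect_dataset()
policy = greedy_policy(offline_q_iteration(dataset))
print(policy)
```

The learner only ever reads `dataset`; `step` is called during data collection and never during training. A random behavior policy covers every state-action pair of this tiny chain, which is the easy case; real datasets rarely have such coverage, which is what the pessimistic and constrained methods below are designed to handle.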

Dataset

D4RL (continuous control): https://github.com/rail-berkeley/d4rl

NeoRL (near real world): https://github.com/siemens/industrialbenchmark/tree/master/industrial_benchmark_python

Visuomotor Affordance Learning (VAL) robot interaction dataset (vision, robotics): https://drive.google.com/drive/folders/1kD9kyP7-RlIrSnuN7rpEASAGWp5qnNov?usp=sharing

Benchmarks

rl_unplugged (RL Unplugged: A Collection of Benchmarks for Offline Reinforcement Learning): https://github.com/deepmind/deepmind-research/tree/master/rl_unplugged

d3rlpy (d3rlpy: An Offline Deep Reinforcement Learning Library): https://github.com/takuseno/d3rlpy

Software

offlinerl: https://github.com/polixir/OfflineRL

tianshou: https://github.com/thu-ml/tianshou

revive: https://revive.cn/

Reading list (Survey/Tutorial)

- Offline Reinforcement Learning: Tutorial, Survey and Perspectives on Open Problems, Levine, Kumar, Tucker, Fu, 2020.

- Policy Gradient and Actor-Critic Learning in Continuous Time and Space: Theory and Algorithms, Jia, Zhou, 2021.

Algorithms

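Note: WeChat Official Accounts cannot render Markdown links; for the PDF links, open the original article via "Read the original" at the end of the post.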

1. Model-free

- Least-Squares Policy Iteration, Lagoudakis et al, 2003. JMLR. Algorithm: LSPI.

- Tree-Based Batch Mode Reinforcement Learning, Ernst et al, 2005. JMLR. Algorithm: FQI.

- Neural Fitted Q Iteration – First Experiences with a Data Efficient Neural Reinforcement Learning Method, Riedmiller, 2005. Algorithm: NFQ.

- Off-Policy Actor-Critic, Degris et al, 2012. CoRR. Algorithm: Off-Policy Actor-Critic.

- Guided Policy Search, Levine et al, 2013. ICML. Algorithm: GPS.

- Safe Policy Improvement by Minimizing Robust Baseline Regret, Petrik et al, 2016. NIPS. Algorithm: RMDP, Approximate Robust Baseline Regret Minimization.

- Doubly Robust Off-Policy Value Evaluation for Reinforcement Learning, Jiang et al, 2016. ICML. Algorithm: DR.

- Breaking the Curse of Horizon: Infinite-Horizon Off-Policy Estimation, Liu et al, 2018. NIPS. Algorithm: Stationary State Density Ratio Estimation.

- Safe Policy Improvement with Baseline Bootstrapping, Laroche et al, 2018. ICML. Algorithm: SPIBB.

- Constrained Policy Improvement for Safe and Efficient Reinforcement Learning, Sarafian et al, 2018. IJCAI. Algorithm: RBI.

- Off-Policy Deep Reinforcement Learning without Exploration, Fujimoto et al, 2019. ICML. Algorithm: BCQ, VAE-BC.

- Stabilizing Off-Policy Q-Learning via Bootstrapping Error Reduction, Kumar et al, 2019. NIPS. Algorithm: BEAR-QL.

- DualDICE: Behavior-Agnostic Estimation of Discounted Stationary Distribution Corrections, Nachum et al, 2019. NIPS. Algorithm: DualDICE.

- AlgaeDICE: Policy Gradient from Arbitrary Experience, Nachum et al, 2019. arXiv. Algorithm: ALGAE.

- Advantage-Weighted Regression: Simple and Scalable Off-Policy Reinforcement Learning, Peng et al, 2019. arXiv. Algorithm: AWR.

- Off-Policy Policy Gradient Algorithms by Constraining the State Distribution Shift, Islam et al, 2019. arXiv. Algorithm: StateKL.

- Behavior Regularized Offline Reinforcement Learning, Wu et al, 2019. CoRR. Algorithm: BRAC (vp_pr).

- Off-Policy Policy Gradient with State Distribution Correction, Liu et al, 2019. CoRR. Algorithm: OPPOSD.

- From Importance Sampling to Doubly Robust Policy Gradient, Huang et al, 2020. ICML. Algorithm: DR-PG.

- Keep Doing What Worked: Behavior Modelling Priors for Offline Reinforcement Learning, Siegel et al, 2020. ICLR. Algorithm: Behavior Extraction Priors.

- GenDICE: Generalized Offline Estimation of Stationary Values, Zhang et al, 2020. ICLR. Algorithm: GenDICE.

- GradientDICE: Rethinking Generalized Offline Estimation of Stationary Values, Zhang et al, 2020. ICML. Algorithm: GradientDICE.

- Batch Stationary Distribution Estimation, Wen et al, 2020. ICML. Algorithm: Variational Power Method.

- BRPO: Batch Residual Policy Optimization, Sohn et al, 2020. IJCAI. Algorithm: BRPO.

- On Reward-Free Reinforcement Learning with Linear Function Approximation, Wang et al, 2020. NIPS. Algorithm: Exploration & Planning Phase Reward-Free RL.

- AWAC: Accelerating Online Reinforcement Learning with Offline Datasets, Nair et al, 2020. arXiv. Algorithm: AWAC.

- Doubly Robust Off-Policy Value and Gradient Estimation for Deterministic Policies, Kallus et al, 2020. NIPS. Algorithm: Deterministic DR.

- Efficient Evaluation of Natural Stochastic Policies in Offline Reinforcement Learning, Kallus et al, 2020. arXiv. Algorithm: Efficient Off-Policy Evaluation for Natural Stochastic Policies.

- Conservative Q-Learning for Offline Reinforcement Learning, Kumar et al, 2020. NIPS. Algorithm: CQL.

- Provably Good Batch Reinforcement Learning Without Great Exploration, Liu et al, 2020. NIPS. Algorithm: PQI.

- Critic Regularized Regression, Wang et al, 2020. NIPS. Algorithm: CRR.

- EMaQ: Expected-Max Q-Learning Operator for Simple Yet Effective Offline and Online RL, Ghasemipour et al, 2020. ICML. Algorithm: EMaQ.

- Batch Reinforcement Learning Through Continuation Method, Guo et al, 2021. ICLR. Algorithm: Soft Policy Iteration through Continuation Method.

- Offline Reinforcement Learning with Fisher Divergence Critic Regularization, Kostrikov et al, 2021. ICML. Algorithm: Fisher-BRC.

- Offline-to-Online Reinforcement Learning via Balanced Replay and Pessimistic Q-Ensemble, Lee et al, 2021. arXiv. Algorithm: Balanced Replay, Pessimistic Q-Ensemble.

- You Only Evaluate Once: a Simple Baseline Algorithm for Offline RL, Wonjoon Goo and Scott Niekum, 2021. CoRL. Algorithm: YOEO.

- Causal Reinforcement Learning using Observational and Interventional Data, Gasse et al, 2021. arXiv. Algorithm: augmented POMDP.

- Dealing with the Unknown: Pessimistic Offline Reinforcement Learning, Li et al, 2021. CoRL. Algorithm: PessORL.

- Uncertainty-Based Offline Reinforcement Learning with Diversified Q-Ensemble, An et al, 2021. NIPS. Algorithm: EDAC.

- Offline Reinforcement Learning with Implicit Q-Learning, Kostrikov et al, 2021. arXiv. Algorithm: IQL.

- Value Penalized Q-Learning for Recommender Systems, Gao et al, 2021. arXiv. Algorithm: VPQ.

- Offline Reinforcement Learning with Pseudometric Learning, Dadashi et al, 2021. ICML. Algorithm: PLOFF.

- OptiDICE: Offline Policy Optimization via Stationary Distribution Correction Estimation, Lee et al, 2021. ICML. Algorithm: OptiDICE.

- Offline RL Without Off-Policy Evaluation, Brandfonbrener et al, 2021. NIPS. Algorithm: One-step algorithm.

- Offline Reinforcement Learning with Soft Behavior Regularization, Xu et al, 2021. arXiv. Algorithm: SBAC.
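As one concrete example of how the model-free methods above keep learning close to the data, CQL (Kumar et al, 2020) adds a conservative term to the ordinary TD loss. For discrete actions (the CQL(H) variant) the term is α·(log Σ_a exp Q(s, a) - Q(s, a_data)), averaged over dataset samples: it pushes Q-values down on all actions through a soft maximum and pushes up the action that actually appears in the data. The sketch below only evaluates this loss for a tabular Q stored in a dict; the optimizer step, target network, and neural function approximator are omitted, and the names `cql_loss` and `logsumexp` are illustrative rather than taken from any CQL codebase.

```python
import math


def logsumexp(values):
    """Numerically stable log(sum(exp(v))) over a list of floats."""
    m = max(values)
    return m + math.log(sum(math.exp(v - m) for v in values))


def cql_loss(q, batch, n_actions, gamma=0.99, alpha=1.0):
    """Return (total loss, mean conservative penalty) for a hypothetical tabular Q.

    q: dict mapping (state, action) -> float.
    batch: list of (s, a, r, s_next, done) transitions from the static dataset.
    """
    td, penalty = 0.0, 0.0
    for s, a, r, s_next, done in batch:
        q_sa = q[(s, a)]
        # Standard TD target, treated as a constant (as a target network would be).
        if done:
            target = r
        else:
            target = r + gamma * max(q[(s_next, b)] for b in range(n_actions))
        td += 0.5 * (q_sa - target) ** 2
        # Conservative term: soft-max over all actions minus the dataset action.
        penalty += logsumexp([q[(s, b)] for b in range(n_actions)]) - q_sa
    n = len(batch)
    return td / n + alpha * penalty / n, penalty / n


# Usage: with equal Q-values for both actions, the penalty equals log(2).
q = {(0, 0): 0.0, (0, 1): 0.0, (1, 0): 0.0, (1, 1): 0.0}
batch = [(0, 1, 1.0, 1, True)]
loss, penalty = cql_loss(q, batch, n_actions=2)
print(loss, penalty)
```

Because log Σ_a exp Q(s, a) ≥ max_a Q(s, a) ≥ Q(s, a_data), the penalty is never negative; it approaches zero only when the dataset action clearly dominates the others, so minimizing it lowers the value of actions the data does not support.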

2. Model-based

- MOReL: Model-Based Offline Reinforcement Learning, Kidambi et al, 2020. NIPS. Algorithm: MOReL.

- MOPO: Model-based Offline Policy Optimization, Yu et al, 2020. NIPS. Algorithm: MOPO.

- Deployment-Efficient Reinforcement Learning via Model-Based Offline Optimization, Matsushima et al, 2020. ICLR. Algorithm: BREMEN.

- Overcoming Model Bias for Robust Offline Deep Reinforcement Learning, Swazinna et al, 2020. arXiv. Algorithm: MOOSE.

- Model-Based Offline Planning, Argenson et al, 2020. arXiv. Algorithm: MBOP.

- DeepAveragers: Offline Reinforcement Learning by Solving Derived Non-Parametric MDPs, Shrestha et al, 2020. ICLR. Algorithm: DAC-MDP.

- Causality and Batch Reinforcement Learning: Complementary Approaches to Planning in Unknown Domains, Bannon et al, 2020. arXiv. Algorithm: Counterfactual Policy Evaluation.

- Counterfactual Data Augmentation using Locally Factored Dynamics, Pitis et al, 2020. NIPS. Algorithm: CoDA.

- Offline Reinforcement Learning from Images with Latent Space Model, Rafailov et al, 2020. arXiv. Algorithm: LOMPO.

- Model-Based Visual Planning with Self-Supervised Functional Distances, Tian et al, 2020. ICLR. Algorithm: MBOLD.

- Augmented World Models Facilitate Zero-Shot Dynamics Generalization From a Single Offline Environment, Ball et al, 2021. ICML. Algorithm: AugWM.

- Vector Quantized Models for Planning, Ozair et al, 2021. ICML. Algorithm: VQVAE.

- PerSim: Data-Efficient Offline Reinforcement Learning with Heterogeneous Agents via Personalized Simulators, Agarwal et al, 2021. NIPS. Algorithm: PerSim.

- COMBO: Conservative Offline Model-Based Policy Optimization, Yu et al, 2021. NIPS. Algorithm: COMBO.

- Offline Model-based Adaptable Policy Learning, Chen et al, 2021. NIPS. Algorithm: MAPLE.

- Online and Offline Reinforcement Learning by Planning with a Learned Model, Schrittwieser et al, 2021. NIPS. Algorithm: MuZero Unplugged.

- Representation Matters: Offline Pretraining for Sequential Decision Making, Yang et al, 2021. ICML. Algorithm: representation learning via contrastive self-prediction.

- Decision Transformer: Reinforcement Learning via Sequence Modeling, Chen et al, 2021. arXiv. Algorithm: DT.

- Offline Reinforcement Learning as One Big Sequence Modeling Problem, Janner et al, 2021. NIPS. Algorithm: Trajectory Transformer.

- StARformer: Transformer with State-Action-Reward Representations, Shang et al, 2021. arXiv. Algorithm: StARformer.

- Behavioral Priors and Dynamics Models: Improving Performance and Domain Transfer in Offline RL, Cang et al, 2021. arXiv. Algorithm: MABE.

- Offline Reinforcement Learning with Reverse Model-based Imagination, Wang et al, 2021. NIPS. Algorithm: ROMI.

- Koopman Q-learning: Offline Reinforcement Learning via Symmetries of Dynamics, Weissenbacher et al, 2021. arXiv. Algorithm: KFC.

- Generalized Decision Transformer for Offline Hindsight Information Matching, Furuta et al, 2021. arXiv. Algorithm: DT-X, CDT, BDT.

- UMBRELLA: Uncertainty-Aware Model-Based Offline Reinforcement Learning Leveraging Planning, Diehl et al, 2021. arXiv. Algorithm: UMBRELLA.
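Many of the model-based methods above share one idea: trust the learned dynamics model only where it is likely to be accurate. MOPO (Yu et al, 2020) subtracts an uncertainty estimate u(s, a) from the model reward, r_tilde(s, a) = r_hat(s, a) - λ·u(s, a), and trains the policy on short model rollouts labeled with r_tilde. MOPO's own u is derived from the predicted variances of a model ensemble; the minimal sketch below swaps in a simpler disagreement measure, the maximum pairwise distance between the next-state predictions of ensemble members (the quantity MOReL uses to flag unknown state-action pairs). The ensemble predictions are supplied by hand, no model training is shown, and the function names are hypothetical.

```python
import math
from itertools import combinations


def max_pairwise_disagreement(predictions):
    """Largest Euclidean distance between any two ensemble members' next-state
    predictions for one (s, a). Stand-in for MOPO's variance-based u(s, a)."""
    return max(
        (math.dist(p, q) for p, q in combinations(predictions, 2)),
        default=0.0,
    )


def penalized_reward(reward, predictions, lam=1.0):
    """MOPO-style pessimistic reward: r_tilde = r_hat - lam * u(s, a)."""
    return reward - lam * max_pairwise_disagreement(predictions)


# Usage: three hypothetical ensemble members; one disagrees by a distance of 5.
preds = [[0.0, 0.0], [3.0, 4.0], [0.0, 0.0]]
print(penalized_reward(1.0, preds, lam=0.1))
```

When all members agree, the reward is left unchanged; the further the members diverge (typically in regions the dataset does not cover), the more the rollout reward is reduced, which discourages the policy from exploiting model errors. λ trades off return against this risk and is a tuning parameter.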

3. Theory

- Hyperparameter Selection for Offline Reinforcement Learning, Paine et al, 2020. arXiv.

- Batch Exploration with Examples for Scalable Robotic Reinforcement Learning, Chen et al, 2020. arXiv.

- Recovery RL: Safe Reinforcement Learning with Learned Recovery Zones, Thananjeyan et al, 2020. arXiv.

- Batch Value-function Approximation with Only Realizability, Xie et al, 2020. arXiv.

- Sparse Feature Selection Makes Batch Reinforcement Learning More Sample Efficient, Hao et al, 2020. arXiv.

- What are the Statistical Limits of Offline RL with Linear Function Approximation?, Wang et al, 2020. RL Theory Seminar 2021.

- A Variant of the Wang-Foster-Kakade Lower Bound for the Discounted Setting, Amortila et al, 2020. arXiv.

- Sample-Efficient Reinforcement Learning via Counterfactual-Based Data Augmentation, Lu et al, 2020. arXiv.

- Exponential Lower Bounds for Batch Reinforcement Learning: Batch RL can be Exponentially Harder than Online RL, Zanette et al, 2020. RL Theory Seminar 2021.

- A Workflow for Offline Model-Free Robotic Reinforcement Learning, Kumar et al, 2021. CoRL.

- S4RL: Surprisingly Simple Self-Supervision for Offline Reinforcement Learning in Robotics, Sinha et al, 2021. CoRL.

- Instabilities of Offline RL with Pre-Trained Neural Representation, Wang et al, 2021. ICML.

- Risk Bounds and Rademacher Complexity in Batch Reinforcement Learning, Duan et al, 2021. ICML.

- Offline Contextual Bandits with Overparameterized Models, Brandfonbrener et al, 2021. ICML.

- Is Pessimism Provably Efficient for Offline RL?, Jin et al, 2021. RL Theory Seminar 2021.

- Near-Optimal Offline Reinforcement Learning via Double Variance Reduction, Yin et al, 2021. NIPS.

- Bridging Offline Reinforcement Learning and Imitation Learning: A Tale of Pessimism, Rashidinejad et al, 2021. RL Theory Seminar 2021.

- Nearly Horizon-Free Offline Reinforcement Learning, Ren et al, 2021. NIPS.

- Bellman-consistent Pessimism for Offline Reinforcement Learning, Xie et al, 2021. RL Theory Seminar 2021.

- Policy Finetuning: Bridging Sample-Efficient Offline and Online Reinforcement Learning, Xie et al, 2021. NIPS.

- The Difficulty of Passive Learning in Deep Reinforcement Learning, Ostrovski et al, 2021. NIPS.
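Several of the theory papers above (for example Jin et al, 2021 and Rashidinejad et al, 2021) analyze pessimism: act on a lower confidence bound of the value rather than on the point estimate. The tabular sketch below illustrates the idea under simplifying assumptions: rewards lie in [0, 1], value iteration runs on the empirical model built from counts, and each backup subtracts a penalty β/√n(s, a). The published algorithms use more elaborate penalties with logarithmic factors; the function name, β, and the clipping at 0 are illustrative choices, not the papers' exact procedures.

```python
import math
from collections import defaultdict


def pessimistic_value_iteration(data, n_states, n_actions, gamma=0.9, beta=0.5, n_iters=200):
    """Value iteration on the empirical model, minus a count-based penalty.

    data: list of (s, a, r, s_next) transitions with rewards in [0, 1].
    Returns (q, policy) where policy[s] is the pessimistic greedy action.
    """
    counts = defaultdict(int)
    reward_sum = defaultdict(float)
    next_counts = defaultdict(lambda: defaultdict(int))
    for s, a, r, s_next in data:
        counts[(s, a)] += 1
        reward_sum[(s, a)] += r
        next_counts[(s, a)][s_next] += 1

    v_min = 0.0  # lowest possible value when rewards are in [0, 1]
    v = [0.0] * n_states
    q = {}
    for _ in range(n_iters):
        for s in range(n_states):
            for a in range(n_actions):
                n = counts[(s, a)]
                if n == 0:
                    q[(s, a)] = v_min  # unseen pairs get the worst-case value
                    continue
                r_hat = reward_sum[(s, a)] / n
                expected_v = sum(c * v[s2] for s2, c in next_counts[(s, a)].items()) / n
                # Lower confidence bound: the penalty shrinks as 1/sqrt(n).
                q[(s, a)] = max(v_min, r_hat + gamma * expected_v - beta / math.sqrt(n))
        v = [max(q[(s, a)] for a in range(n_actions)) for s in range(n_states)]
    policy = [max(range(n_actions), key=lambda a: q[(s, a)]) for s in range(n_states)]
    return q, policy


# Usage: action 0 is seen 100 times (reward 0.5), action 1 once (reward 0.6).
# Both lead to state 1, which has no data and therefore value 0.
data = [(0, 0, 0.5, 1)] * 100 + [(0, 1, 0.6, 1)]
q, policy = pessimistic_value_iteration(data, n_states=2, n_actions=2)
print(policy[0])
```

A greedy learner would pick action 1 from its single, higher reward; the pessimistic backup instead prefers action 0, whose estimate is supported by far more data. This is the behavior the pessimism results formalize: performance guarantees then depend on how well the data covers a good policy rather than on covering every action.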

4. Other related settings

1) Multi-Task, Goal-Conditioned RL

- Multi-Task Batch Reinforcement Learning with Metric Learning, Li et al, 2020. NIPS. Algorithm: MBML.

- Offline Meta Learning of Exploration, Dorfman et al, 2020. arXiv. Algorithm: BOReL.

- Offline Meta-Reinforcement Learning with Advantage Weighting, Mitchell et al, 2020. ICML. Algorithm: MACAW.

- Goal-Conditioned Batch Reinforcement Learning for Rotation Invariant Locomotion, Mavalankar et al, 2020. arXiv. Algorithm: Enforcing equivalence.

- Exploration by Maximizing Renyi Entropy for Reward-Free RL Framework, Zhang et al, 2020. AAAI. Algorithm: MaxRenyi.

- Reset-Free Lifelong Learning with Skill-Space Planning, Lu et al, 2021. ICLR. Algorithm: LiSP.

- Efficient Fully-Offline Meta-Reinforcement Learning via Distance Metric Learning and Behavior Regularization, Li et al, 2021. ICLR. Algorithm: FOCAL.

- Actionable Models: Unsupervised Offline Reinforcement Learning of Robotic Skills, Chebotar et al, 2021. ICML. Algorithm: Actionable Models.

- Conservative Data Sharing for Multi-Task Offline Reinforcement Learning, Yu et al, 2021. NIPS. Algorithm: CDS.

- Offline Meta Reinforcement Learning -- Identifiability Challenges and Effective Data Collection Strategies, Dorfman et al, 2021. NIPS. Algorithm: BOReL.

- Improving Zero-shot Generalization in Offline Reinforcement Learning using Generalized Similarity Functions, Mazoure et al, 2021. arXiv. Algorithm: GSF.

- Offline Meta-Reinforcement Learning with Online Self-Supervision, Pong et al, 2021. arXiv. Algorithm: Semi-Supervised Meta Actor-Critic.

- Lifelong Robotic Reinforcement Learning by Retaining Experiences, Xie et al, 2021. arXiv. Algorithm: Lifelong RL by Retaining Experiences.

2) Safety

- Risk-Averse Offline Reinforcement Learning, Urpi et al, 2021. ICLR. Algorithm: O-RAAC.

- Multi-Objective SPIBB: Seldonian Offline Policy Improvement with Safety Constraints in Finite MDPs, Satija et al, 2021. NIPS. Algorithm: Multi-Objective SPIBB.

- Safely Bridging Offline and Online Reinforcement Learning, Xu et al, 2021. arXiv. Algorithm: Safe UCBVI.

- Expert-Supervised Reinforcement Learning for Offline Policy Learning and Evaluation, Sonabend et al, 2020. NIPS. Algorithm: ESRL.

Competitions

A list of competitions of interest to the community (sorted by start date, most recent first).

- Real-world Reinforcement Learning Challenge: Learning to make fair and incentive coupon decisions for sales promotion from data, organized by Polixir, Dec. 25, 2021 – Feb. 27, 2022 (Ongoing)

- MineRL BASALT Challenge, NeurIPS 2021 Competition: Learning from Human Feedback in Minecraft, organized by C.H.A.I., UC Berkeley, Jul. 7, 2021 – Dec. 14, 2021

- MineRL Diamond Challenge, NeurIPS 2021 Competition: Training Sample-Efficient Agents in Minecraft, organized by MineRL Labs, Carnegie Mellon University, Jun. 9, 2021 – Dec. 2021

- Tactile Games Playtest Agent: Level Difficulty Prediction of Lily’s Garden Levels, at the 3rd IEEE Conference on Games (2021), organized by Tactile Games

- Real Robot Challenge 2021, organized by the Empirical Inference Department, Max Planck Institute for Intelligent Systems, May 28, 2021 – Sep. 16, 2021

- Real Robot Challenge 2020, organized by the Empirical Inference Department, Max Planck Institute for Intelligent Systems, Aug. 10, 2020 – Dec. 14, 2020

- MineRL NeurIPS 2020 Competition: Sample-efficient reinforcement learning in Minecraft, organized by MineRL Labs, Carnegie Mellon University, Jul. 1, 2020 – Dec. 5, 2020

- MineRL NeurIPS 2019 Competition: Sample-efficient reinforcement learning in Minecraft, organized by MineRL Labs, Carnegie Mellon University, May 10, 2019 – Dec. 14, 2019

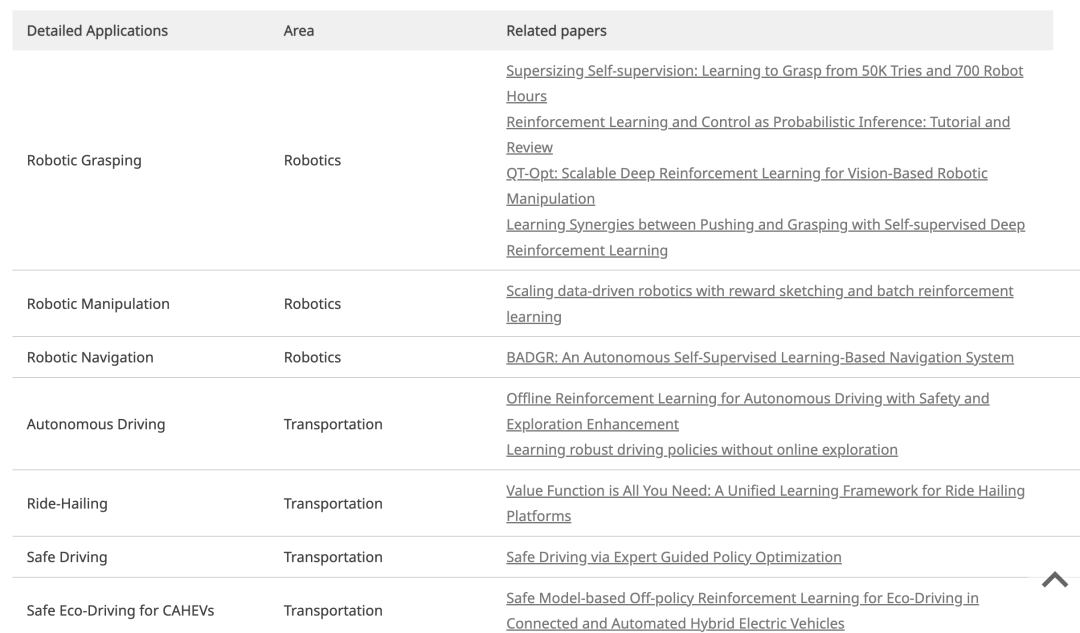

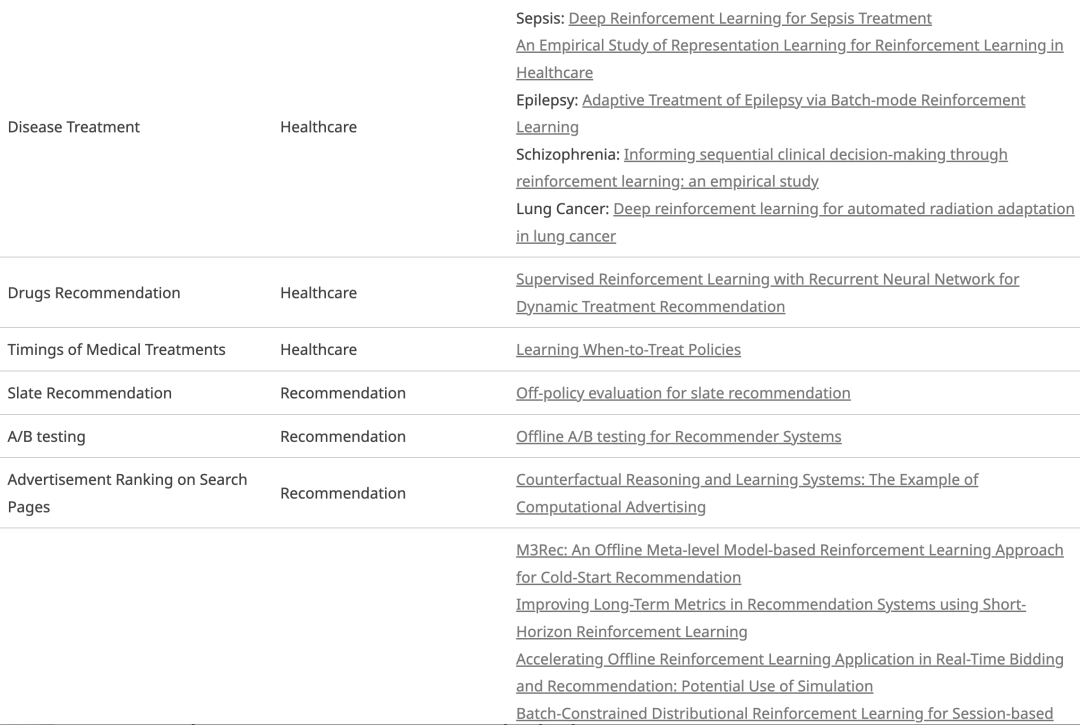

Applications or related news

In many applications, including safety-critical domains such as driving and human-interactive domains such as dialogue systems, online learning is prohibitively costly in terms of time, money, and safety. Developing a new generation of data-driven reinforcement learning methods may therefore usher in a new era of progress in reinforcement learning. The applications list highlights specific domains where offline reinforcement learning has already made an impact.

This article is reposted from https://offlinerl.ai/. Thanks to Nanjing University and Polixir for their contributions to offline reinforcement learning.

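This article is part of the Tencent Cloud self-media syndication program and is shared from a WeChat Official Account.

Originally published: 2022-11-14. In case of infringement, please contact cloudcommunity@tencent.com for removal.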
