Exploring the Lustre client mount implementation


用户4700054

Published on 2023-02-26 14:42:37


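Definition of the lustre filesystem type

lustre/llite/super25.c defines lustre_fs_type, which binds the filesystem name "lustre" to its mount entry point (lustre_mount) and to lustre_kill_super, the superblock teardown hook that runs on unmount or after a failed mount. The same mount implementation serves both backend targets and clients.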

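    static struct file_system_type lustre_fs_type = {
        .owner    = THIS_MODULE,
        // name of the Lustre filesystem type
        .name     = "lustre",
        // mount entry point
        .mount    = lustre_mount,
        .kill_sb  = lustre_kill_super,
        .fs_flags = FS_RENAME_DOES_D_MOVE,
    };

Per-mount Lustre state hangs off the superblock: struct lustre_sb_info is stored in sb->s_fs_info (reachable from an inode as inode->i_sb->s_fs_info).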

    /****************** superblock additional info *********************/
    struct ll_sb_info;
    struct kobject;

    struct lustre_sb_info {
        int                       lsi_flags;
        // obd_device of the client-side MGC
        struct obd_device        *lsi_mgc;
        // mount options parsed from the mount command
        struct lustre_mount_data *lsi_lmd;
        // client-only: records the state of the filesystem
        struct ll_sb_info        *lsi_llsbi;
        // device used to access the backend filesystem
        struct dt_device         *lsi_dt_dev;  /* dt device to access disk fs*/
        atomic_t                  lsi_mounts;  /* references to the srv_mnt */
        struct kobject           *lsi_kobj;
        char                      lsi_svname[MTI_NAME_MAXLEN];
        /* lsi_osd_obdname format = 'lsi->ls_svname'-osd */
        char                      lsi_osd_obdname[MTI_NAME_MAXLEN + 4];
        /* lsi_osd_uuid format = 'lsi->ls_osd_obdname'_UUID */
        char                      lsi_osd_uuid[MTI_NAME_MAXLEN + 9];
        struct obd_export        *lsi_osd_exp;
        char                      lsi_osd_type[16];
        char                      lsi_fstype[16];
        /* each client mountpoint needs own backing_dev_info */
        struct backing_dev_info   lsi_bdi;
        /* protect lsi_lwp_list */
        struct mutex              lsi_lwp_mutex;
        struct list_head          lsi_lwp_list;
        unsigned long             lsi_lwp_started:1,
                                  lsi_server_started:1;
    #ifdef CONFIG_LL_ENCRYPTION
        const struct llcrypt_operations *lsi_cop;
        struct key               *lsi_master_keys; /* master crypto keys used */
    #endif
    };

Client mount implementation

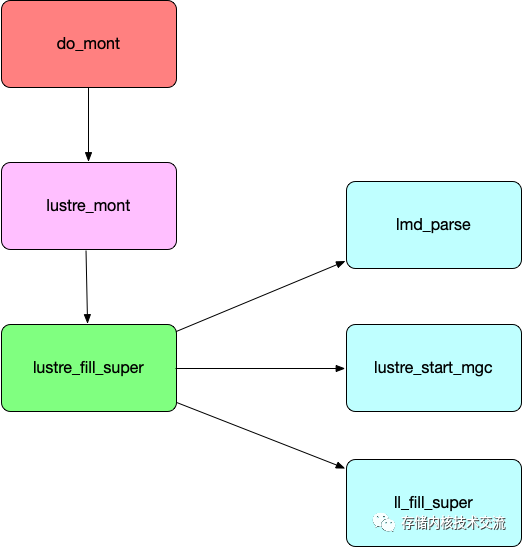

lustre_mount function

lustre_mount is the .mount callback that the VFS invokes on the do_mount path. Running mount on a client ends up here, and the same entry point is used when a backend OST is mounted.

    static struct dentry *lustre_mount(struct file_system_type *fs_type, int flags,
                                       const char *devname, void *data)
    {
        return mount_nodev(fs_type, flags, data, lustre_fill_super);
    }

lustre_fill_super function

The first key step in lustre_fill_super is the call to lmd_parse.

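    // Abridged: declarations and error handling are omitted
    static int lustre_fill_super(struct super_block *sb, void *lmd2_data,
                                 int silent)
    {
        /*
         * lmd_parse parses the mount options so that later stages of the
         * mount can use them. The result is stored in
         * struct lustre_mount_data *lmd, for example:
         *   lmd->lmd_profile = bigfs-client
         *   lmd->lmd_dev     = 10.211.55.5@tcp:/bigfs
         *   lmd->lmd_flags   = 2
         */
        lmd_parse(lmd2_data, lmd);

        // lustre_start_mgc uses the mount options to start the MGC, the client of the MGS
        lustre_start_mgc(sb);

        // set up the remaining OBDs; once this returns the mount is complete
        ll_fill_super(sb);
    }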
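To make the lmd_parse comment concrete, here is a minimal userspace sketch of how a device string such as 10.211.55.5@tcp:/bigfs could be split into the MGS NID, the filesystem name, and the client profile name. It is not the kernel's lmd_parse: struct mount_info_demo and parse_mount_device are hypothetical names, and the sketch assumes the plain `<nid>:/<fsname>` form without mount options or a subdirectory.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* Hypothetical userspace mirror of the values shown in the comment above;
 * the real struct lustre_mount_data has many more fields. */
struct mount_info_demo {
	char mgs_nid[64];	/* e.g. "10.211.55.5@tcp" */
	char fsname[32];	/* e.g. "bigfs" */
	char profile[48];	/* e.g. "bigfs-client" */
};

/* Split "<mgsnid>:/<fsname>" and derive "<fsname>-client".
 * Returns 0 on success, -1 if the string is malformed or too long. */
static int parse_mount_device(const char *dev, struct mount_info_demo *out)
{
	const char *sep;
	size_t nid_len, fs_len;

	assert(dev != NULL && out != NULL);

	sep = strstr(dev, ":/");	/* boundary between NID part and fsname */
	if (sep == NULL)
		return -1;

	nid_len = (size_t)(sep - dev);
	fs_len = strlen(sep + 2);
	if (nid_len == 0 || nid_len >= sizeof(out->mgs_nid))
		return -1;
	if (fs_len == 0 || fs_len >= sizeof(out->fsname))
		return -1;

	memcpy(out->mgs_nid, dev, nid_len);
	out->mgs_nid[nid_len] = '\0';
	memcpy(out->fsname, sep + 2, fs_len + 1);	/* includes the NUL */
	snprintf(out->profile, sizeof(out->profile), "%s-client", out->fsname);
	return 0;
}
```

Calling parse_mount_device("10.211.55.5@tcp:/bigfs", &mi) fills mi.profile with bigfs-client, the name of the config log that the client later requests from the MGS.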

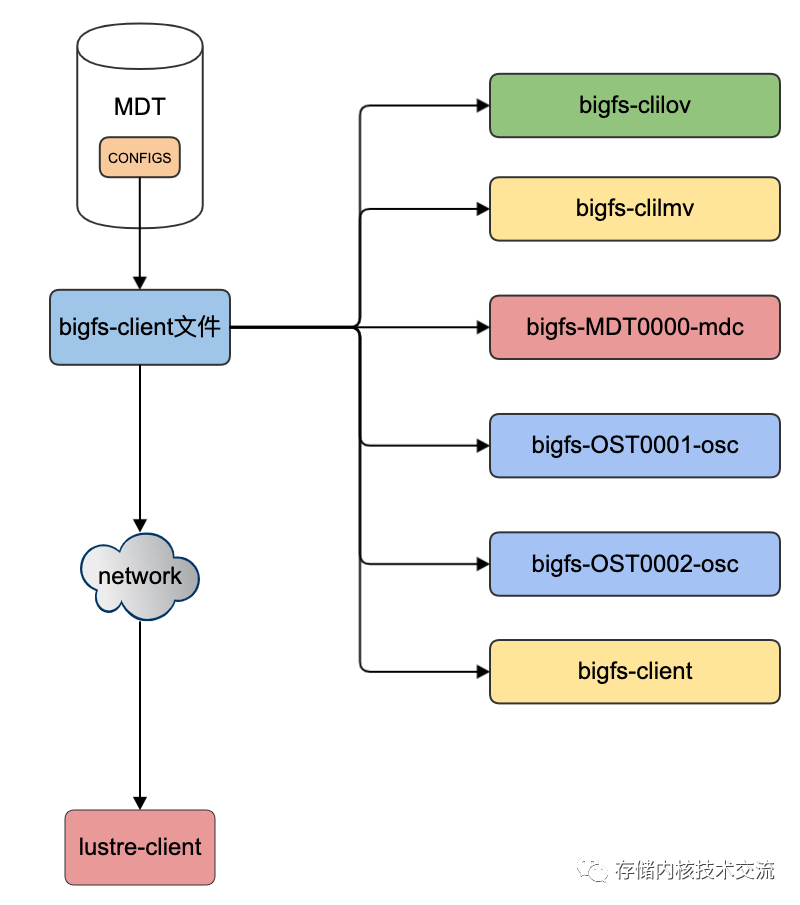

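lmd_profile in lustre_mount_data names the configuration profile that the MGS keeps for clients. The profile lists the OBDs a client must set up, in addition to the ones the client creates on its own. Reading {MDT mount directory}/CONFIGS/{filesystem name}-client on the MGT with llog_reader (here /mnt/mgt_mdt/CONFIGS/bigfs-client) shows that the client receives records for clilov, clilmv, mdc, and osc devices.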

    [root@CentOS-Lustre-Server /mnt/mgt_mdt/CONFIGS]$ ls -l bigfs-client
    -rw-r--r-- 1 root root 13024 Dec 31 1969 bigfs-client
    [root@CentOS-Lustre-Server /mnt/mgt_mdt/CONFIGS]$ llog_reader bigfs-client
    Number of records: 29 cat_idx: 0 last_idx: 29
    Target uuid : config_uuid
    -----------------------
    #01 (224)marker 4 (flags=0x01, v2.15.0.0) bigfs-clilov 'lov setup' Tue Sep 20 22:21:25 2022-
    #02 (120)attach 0:bigfs-clilov 1:lov 2:bigfs-clilov_UUID
    #03 (168)lov_setup 0:bigfs-clilov 1:(struct lov_desc)
        uuid=bigfs-clilov_UUID stripe:cnt=1 size=1048576 offset=18446744073709551615 pattern=0x1
    #04 (224)END marker 4 (flags=0x02, v2.15.0.0) bigfs-clilov 'lov setup' Tue Sep 20 22:21:25 2022-
    #05 (224)marker 5 (flags=0x01, v2.15.0.0) bigfs-clilmv 'lmv setup' Tue Sep 20 22:21:25 2022-
    #06 (120)attach 0:bigfs-clilmv 1:lmv 2:bigfs-clilmv_UUID
    #07 (168)lov_setup 0:bigfs-clilmv 1:(struct lov_desc)
        uuid=bigfs-clilmv_UUID stripe:cnt=0 size=0 offset=0 pattern=0
    #08 (224)END marker 5 (flags=0x02, v2.15.0.0) bigfs-clilmv 'lmv setup' Tue Sep 20 22:21:25 2022-
    #09 (224)marker 6 (flags=0x01, v2.15.0.0) bigfs-MDT0000 'add mdc' Tue Sep 20 22:21:25 2022-
    #10 (080)add_uuid nid=10.211.55.5@tcp(0x200000ad33705) 0: 1:10.211.55.5@tcp
    #11 (128)attach 0:bigfs-MDT0000-mdc 1:mdc 2:bigfs-clilmv_UUID
    #12 (136)setup 0:bigfs-MDT0000-mdc 1:bigfs-MDT0000_UUID 2:10.211.55.5@tcp
    #13 (160)modify_mdc_tgts add 0:bigfs-clilmv 1:bigfs-MDT0000_UUID 2:0 3:1 4:bigfs-MDT0000-mdc_UUID
    #14 (224)END marker 6 (flags=0x02, v2.15.0.0) bigfs-MDT0000 'add mdc' Tue Sep 20 22:21:25 2022-
    #15 (224)marker 7 (flags=0x01, v2.15.0.0) bigfs-client 'mount opts' Tue Sep 20 22:21:25 2022-
    #16 (120)mount_option 0: 1:bigfs-client 2:bigfs-clilov 3:bigfs-clilmv
    #17 (224)END marker 7 (flags=0x02, v2.15.0.0) bigfs-client 'mount opts' Tue Sep 20 22:21:25 2022-
    #18 (224)marker 10 (flags=0x01, v2.15.0.0) bigfs-OST0001 'add osc' Tue Sep 20 22:21:25 2022-
    #19 (080)add_uuid nid=10.211.55.5@tcp(0x200000ad33705) 0: 1:10.211.55.5@tcp
    #20 (128)attach 0:bigfs-OST0001-osc 1:osc 2:bigfs-clilov_UUID
    #21 (136)setup 0:bigfs-OST0001-osc 1:bigfs-OST0001_UUID 2:10.211.55.5@tcp
    #22 (128)lov_modify_tgts add 0:bigfs-clilov 1:bigfs-OST0001_UUID 2:1 3:1
    #23 (224)END marker 10 (flags=0x02, v2.15.0.0) bigfs-OST0001 'add osc' Tue Sep 20 22:21:25 2022-
    #24 (224)marker 13 (flags=0x01, v2.15.0.0) bigfs-OST0002 'add osc' Tue Sep 20 22:21:26 2022-
    #25 (080)add_uuid nid=10.211.55.5@tcp(0x200000ad33705) 0: 1:10.211.55.5@tcp
    #26 (128)attach 0:bigfs-OST0002-osc 1:osc 2:bigfs-clilov_UUID
    #27 (136)setup 0:bigfs-OST0002-osc 1:bigfs-OST0002_UUID 2:10.211.55.5@tcp
    #28 (128)lov_modify_tgts add 0:bigfs-clilov 1:bigfs-OST0002_UUID 2:2 3:1
    #29 (224)END marker 13 (flags=0x02, v2.15.0.0) bigfs-OST0002 'add osc' Tue Sep 20 22:21:26 2022-

lustre_start_mgc function

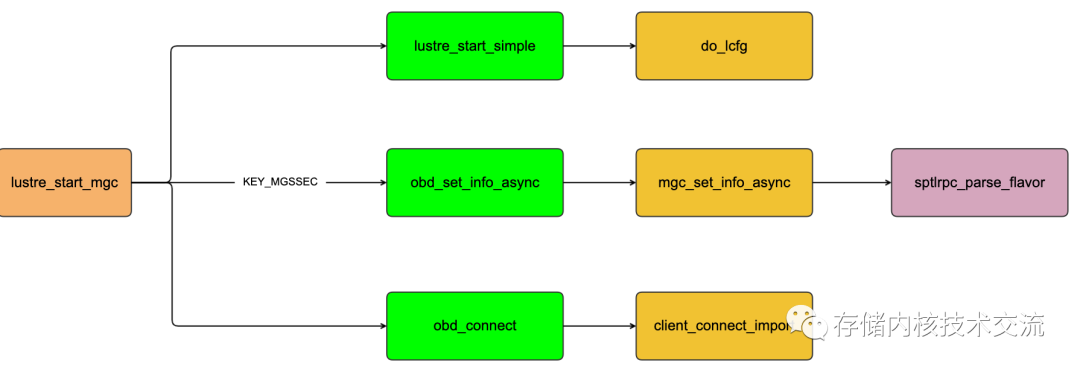

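lustre_start_mgc starts the MGC (management client), which receives the configuration logs published by the MGS. Most of the work that lustre_start_mgc triggers is carried out by the methods registered in mgc_obd_ops.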
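The dispatch pattern behind an ops table such as mgc_obd_ops can be sketched in plain userspace C. Everything prefixed demo_ is hypothetical and heavily reduced; the sketch only shows the idea that a generic wrapper (obd_setup, obd_connect in Lustre) looks up a function pointer in the device's method table and calls it, under the assumption that a missing method is reported as an error.

```c
#include <assert.h>
#include <errno.h>
#include <stddef.h>

struct demo_obd;

/* Hypothetical, reduced method table in the spirit of struct obd_ops. */
struct demo_obd_ops {
	int (*o_setup)(struct demo_obd *obd);
	int (*o_connect)(struct demo_obd *obd);
};

struct demo_obd {
	const char *name;
	const struct demo_obd_ops *ops;
	int set_up;
	int connected;
};

/* Type-specific methods, standing in for mgc_setup and client_connect_import. */
static int demo_mgc_setup(struct demo_obd *obd)
{
	obd->set_up = 1;
	return 0;
}

static int demo_mgc_connect(struct demo_obd *obd)
{
	if (!obd->set_up)
		return -ENODEV;		/* connecting requires a prior setup */
	obd->connected = 1;
	return 0;
}

static const struct demo_obd_ops demo_mgc_ops = {
	.o_setup   = demo_mgc_setup,
	.o_connect = demo_mgc_connect,
};

/* Generic wrappers: callers never name the type-specific function directly. */
static int demo_obd_setup(struct demo_obd *obd)
{
	assert(obd != NULL && obd->ops != NULL);
	if (obd->ops->o_setup == NULL)
		return -EOPNOTSUPP;
	return obd->ops->o_setup(obd);
}

static int demo_obd_connect(struct demo_obd *obd)
{
	assert(obd != NULL && obd->ops != NULL);
	if (obd->ops->o_connect == NULL)
		return -EOPNOTSUPP;
	return obd->ops->o_connect(obd);
}
```

The same indirection is why the later sections can say that obd_connect "actually calls" client_connect_import for the MGC, lmv_connect for the lmv device, and lov_connect for the lov device.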

    // method table of the MGC OBD
    static const struct obd_ops mgc_obd_ops = {
        .o_owner          = THIS_MODULE,
        .o_setup          = mgc_setup,
        .o_precleanup     = mgc_precleanup,
        .o_cleanup        = mgc_cleanup,
        .o_add_conn       = client_import_add_conn,
        .o_del_conn       = client_import_del_conn,
        .o_connect        = client_connect_import,
        .o_disconnect     = client_disconnect_export,
        .o_set_info_async = mgc_set_info_async,
        .o_get_info       = mgc_get_info,
        .o_import_event   = mgc_import_event,
        .o_process_config = mgc_process_config,
    };

    // Only the core calls on the client path are shown
    int lustre_start_mgc(struct super_block *sb)
    {
        // parse the MGS NID out of the mount options
        class_parse_nid(ptr, &nid, &ptr);

        // attach and set up the MGC OBD
        rc = lustre_start_simple(mgcname, LUSTRE_MGC_NAME, (char *)uuid->uuid,
                                 LUSTRE_MGS_OBDNAME, niduuid, NULL, NULL);

        rc = obd_set_info_async(NULL, obd->obd_self_export,
                                strlen(KEY_MGSSEC), KEY_MGSSEC,
                                strlen(mgssec), mgssec, NULL);

        rc = obd_connect(NULL, &exp, obd, uuid, data, NULL);
    }

lustre_start_simple attaches and sets up the MGC OBD. Along the way it creates the obd_import and obd_export that carry outgoing and incoming traffic, and it starts a kernel thread running mgc_requeue_thread to handle configuration changes coming from the MGS. At this point the MGC has not yet connected to the MGS; only the data structures that the connection needs have been initialized.

    // method table for llog handling on the Lustre client
    const struct llog_operations llog_client_ops = {
        .lop_next_block  = llog_client_next_block,
        .lop_prev_block  = llog_client_prev_block,
        .lop_read_header = llog_client_read_header,
        .lop_open        = llog_client_open,
        .lop_close       = llog_client_close,
    };

int lustre_start_simple(char *obdname, char *type, char *uuid,

char *s1, char *s2, char *s3, char *s4)

{

int rc;

// mgc的obd的信息为MGC10.211.55.5@tcp (typ=mgc)

rc = do_lcfg(obdname, 0, LCFG_ATTACH, type, uuid, NULL, NULL)

{

// mgc的的配置处理和初始化,这里走的是LCFG_ATTACH逻辑

class_process_config(lcfg)

{

// mgc的obd_device创建

class_attach(struct lustre_cfg *lcfg)

{

// obd的创建,这里的MGC10.211.55.5@tcp

struct obd_device *obd = class_newdev(typename, name, uuid);

// 创建mgc obd的数据接口连接结构

struct obd_export *exp=class_new_export_self(obd, &obd->obd_uuid);

// obd的注册到客户端本地内核中

rc = class_register_device(obd);

}

}

}

// 开始设置mgc obd

rc = do_lcfg(obdname, 0, LCFG_SETUP, s1, s2, s3, s4)

{

// mgc的的配置处理和初始化,这里走的是LCFG_SETUP逻辑

class_process_config(lcfg)

{

class_setup(obd, lcfg)

{

// obd_setup实际调用的是mgc_setup

obd_setup(obd, lcfg)

{

mgc_setup(obd,lcfg)

{

// 设置mgc obd的obd_import的链接

client_obd_setup(obd, lcfg)

{

// obd_import初始化

imp = class_new_import(obd);

// connection MGC10.211.55.5@tcp_0 初始化

rc = client_import_add_conn(imp, &server_uuid, 1);

}

// lustre log上下文初始化

mgc_llog_init(NULL, obd)

{

// 设置lustre 客户端log处理函数

llog_setup(env, obd, &obd->obd_olg,LLOG_CONFIG_REPL_CTXT, obd,&llog_client_ops);

}

rc = mgc_tunables_init(obd);

// 运行内核线程轮训是否有新的log推送到客户端

kthread_run(mgc_requeue_thread, NULL, "ll_cfg_requeue");

}

}

}

}

}

return rc;

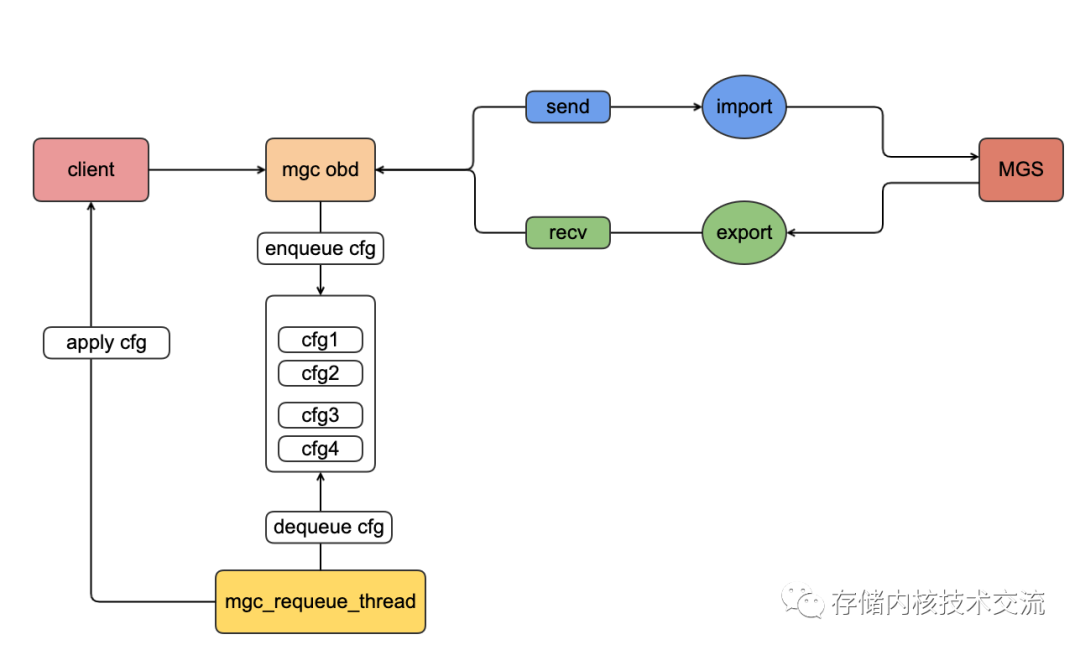

}mgc_requeue_thread是内核线程轮训判断mgs是否有变更日志推送过来,其中启动内核线程轮训监听来自mgs的日志,插入到队列中调用do_requeue函数进行处理。这里可以想到如果lustre文件系统有节点状态发生改变或者添加新的节点,mgs会把数据推送到客户端,客户端在很短时间内会了解当前集群的变化。
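The randomized sleep interval of the requeue thread can be illustrated with a few lines of userspace C. This is only a sketch of the arithmetic: requeue_delay_seconds is a hypothetical helper, it works in whole seconds rather than jiffies, and the caller supplies the random value that the kernel code obtains from prandom_u32_max.

```c
#include <assert.h>

/*
 * Hypothetical helper mirroring the delay arithmetic of the requeue loop:
 * wait at least timeout_min seconds, plus a random extra in
 * [0, max(timeout_min, 1)) seconds so that many clients do not all
 * hit the MGS at the same moment.
 *
 * rnd stands in for a random 32-bit value supplied by the caller.
 */
static unsigned int requeue_delay_seconds(unsigned int timeout_min,
					  unsigned int rnd)
{
	/* a zero minimum still gets a one-second randomization window */
	unsigned int spread = timeout_min == 0 ? 1 : timeout_min;

	assert(spread > 0);
	return timeout_min + rnd % spread;
}
```

With timeout_min = 5 the result always falls in the range 5 to 9 seconds, so the wait is never shorter than the configured minimum and never reaches twice that value.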

// 这里省去了大部分逻辑,保留和最核心的函数

static int mgc_requeue_thread(void *data)

{

rq_state |= RQ_RUNNING;

while (!(rq_state & RQ_STOP)) {

struct config_llog_data *cld, *cld_prev;

rq_state &= ~RQ_PRECLEANUP;

// 等待一会再执行后面的例如日志的数据

to = mgc_requeue_timeout_min == 0 ? 1 : mgc_requeue_timeout_min;

to = cfs_time_seconds(mgc_requeue_timeout_min) +

prandom_u32_max(cfs_time_seconds(to));

wait_event_idle_timeout(rq_waitq,

rq_state & (RQ_STOP | RQ_PRECLEANUP), to);

// 遍历日志配置的链表,遍历是否有数据变更

list_for_each_entry(cld, &config_llog_list,

cld_list_chain) {

if (!cld->cld_lostlock || cld->cld_stopping)

continue;

config_log_get(cld);

cld->cld_lostlock = 0;

spin_unlock(&config_list_lock);

config_log_put(cld_prev);

cld_prev = cld;

if (likely(!(rq_state & RQ_STOP))) {

// mgs log的处理逻辑

do_requeue(cld);

spin_lock(&config_list_lock);

} else {

spin_lock(&config_list_lock);

break;

}

}

}

RETURN(rc);

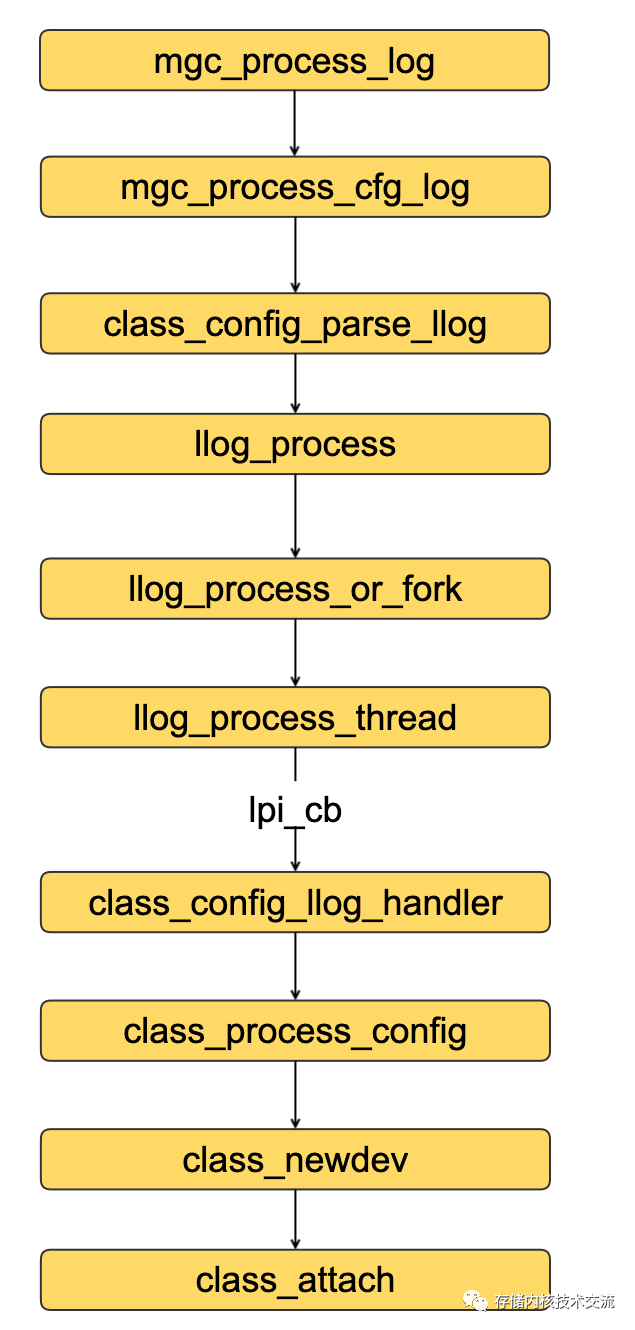

}do_requeue被用来处理mgs lustre log函数.这个函数在MGS和客户端都会执行,走的不同的逻辑(代码里会判断是否是后端服务)。do_requeue函数最核心的是调用class_config_parse_llog函数来处理mgs的lustre log
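class_config_parse_llog, listed below, carries a comment saying that processing continues "from where we last stopped". The following userspace sketch shows that resume idea in isolation. The names demo_cfg_instance, demo_rec_cb, and demo_parse_log are hypothetical, records are plain strings, and the exact index convention of the kernel code is not reproduced; the sketch simply assumes that last_idx is the index of the last record already handled.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical stand-in for the resume position kept in cfg_last_idx. */
struct demo_cfg_instance {
	int last_idx;	/* index of the last record already processed */
};

typedef int (*demo_rec_cb)(int idx, const char *rec, void *data);

/*
 * Feed every record after cfg->last_idx to the callback. Records are
 * numbered from 1, like the #01..#29 entries printed by llog_reader.
 * Stops at the first callback error and keeps last_idx at the last
 * record that succeeded, so a later call resumes from there.
 */
static int demo_parse_log(const char *const *recs, int nrecs,
			  struct demo_cfg_instance *cfg,
			  demo_rec_cb cb, void *data)
{
	int idx, rc;

	assert(recs != NULL && cfg != NULL && cb != NULL);

	for (idx = cfg->last_idx + 1; idx <= nrecs; idx++) {
		rc = cb(idx, recs[idx - 1], data);
		if (rc != 0)
			return rc;
		cfg->last_idx = idx;
	}
	return 0;
}

/* Example callback: count how many records were delivered. */
static int demo_count_cb(int idx, const char *rec, void *data)
{
	(void)idx;
	(void)rec;
	(*(int *)data)++;
	return 0;
}
```

When the log grows from two to four records between two calls, the second call delivers only records 3 and 4, which is the behavior a requeue relies on to avoid replaying configuration that is already in effect.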

    // process a config log obtained from the MGS
    static void do_requeue(struct config_llog_data *cld)
    {
        // MGC-side processing of the Lustre log
        rc = mgc_process_log(cld->cld_mgcexp->exp_obd, cld)
        {
            rc = mgc_process_cfg_log(mgc, cld, rcl != 0)
            {
                // client path: fetch the Lustre log and process it
                class_config_parse_llog(env, ctxt, cld->cld_logname, &cld->cld_cfg)
            }
        }
    }

    // parse a Lustre config log
    int class_config_parse_llog(const struct lu_env *env, struct llog_ctxt *ctxt,
                                char *name, struct config_llog_instance *cfg)
    {
        struct llog_process_cat_data cd = {
            .lpcd_first_idx = 0,
        };
        struct llog_handle *llh;
        llog_cb_t callback;
        int rc;
        ENTRY;

        // llog_open opens the log, llog_init_handle prepares the handle
        rc = llog_open(env, ctxt, &llh, NULL, name, LLOG_OPEN_EXISTS);
        rc = llog_init_handle(env, llh, LLOG_F_IS_PLAIN, NULL);

        /* continue processing from where we last stopped to end-of-log */
        if (cfg) {
            cd.lpcd_first_idx = cfg->cfg_last_idx;
            callback = cfg->cfg_callback;
            LASSERT(callback != NULL);
        } else {
            callback = class_config_llog_handler;
        }
        cd.lpcd_last_idx = 0;

        // llog_process may hand the work to a kernel thread
        rc = llog_process(env, llh, callback, cfg, &cd)
        {
            task = kthread_run(llog_process_thread_daemonize, lpi,
                               "llog_process_thread");
        }
        RETURN(rc);
    }

obd_connect dispatches to client_connect_import, which is where the MGC actually connects to the MGS. By then mgc_requeue_thread is already in place to receive and process the logs pushed by the MGS, so once the connection is up the MGS can send Lustre logs to the client.

// mgc obd 的import连接到MGS

int client_connect_import(const struct lu_env *env,

struct obd_export **exp,

struct obd_device *obd, struct obd_uuid *cluuid,

struct obd_connect_data *data, void *localdata)

{

// 底层RPC,mgc obd 连接到MGS

rc = ptlrpc_connect_import(imp);

ptlrpc_pinger_add_import(imp);

return rc;

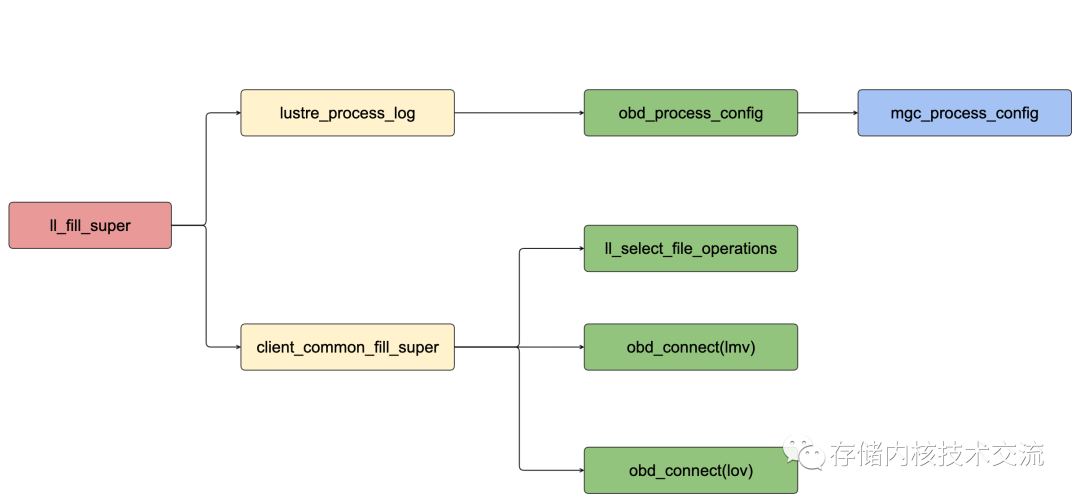

}ll_fill_super函数

ll_fill_super函数是设置好MGC的OBD
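The profile that ll_fill_super looks up comes from the mount_option record in the llog_reader output shown earlier (record #16). As an illustration only, the userspace sketch below extracts the three names from such a printed line. struct demo_profile and demo_parse_mount_option are hypothetical; the kernel works on binary lustre_cfg records, not on llog_reader text, and the assignment of field 2 to the data side (lov) and field 3 to the metadata side (lmv) follows the author's comment "mdc=bigfs-clilmv osc=bigfs-clilov".

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* Hypothetical result type: profile name plus the two aggregate devices. */
struct demo_profile {
	char name[64];	/* e.g. "bigfs-client" */
	char dt[64];	/* data side, e.g. "bigfs-clilov" */
	char md[64];	/* metadata side, e.g. "bigfs-clilmv" */
};

/*
 * Parse one line of llog_reader output of the form
 *   "#16 (120)mount_option 0: 1:<profile> 2:<lov> 3:<lmv>"
 * Returns 0 on success, -1 if the line is not a mount_option record.
 */
static int demo_parse_mount_option(const char *line, struct demo_profile *p)
{
	const char *rec;

	assert(line != NULL && p != NULL);

	rec = strstr(line, "mount_option");
	if (rec == NULL)
		return -1;

	/* %63s stops at whitespace and leaves room for the terminating NUL */
	if (sscanf(rec, "mount_option 0: 1:%63s 2:%63s 3:%63s",
		   p->name, p->dt, p->md) != 3)
		return -1;
	return 0;
}
```

For record #16 this yields name = bigfs-client, dt = bigfs-clilov, and md = bigfs-clilmv, which are the device names that ll_fill_super passes on to client_common_fill_super as dt and md.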

    int ll_fill_super(struct super_block *sb)
    {
        // class_config_llog_handler handles each record of the Lustre log
        cfg->cfg_callback = class_config_llog_handler;

        // lustre_process_log fetches the Lustre log from the MGS and processes it;
        // the call goes through obd_process_config -> mgc_process_config
        err = lustre_process_log(sb, profilenm, cfg);

        // the mount_option record in that log registers the profile, so the
        // lookup follows the log processing; here it yields
        // bigfs-client: mdc=bigfs-clilmv osc=bigfs-clilov
        lprof = class_get_profile(profilenm);

        // client_common_fill_super selects the file_operations table and
        // connects to the MDS/OSS services
        err = client_common_fill_super(sb, md, dt);
    }

lustre_process_log asks the MGC to fetch the named log from the MGS. do_config_log_add first adds the log to the watch list, then mgc_process_log processes it; the first pass instantiates the devices that the log describes. The call chain is: mgc_process_log -> mgc_process_cfg_log -> class_config_parse_llog -> llog_process -> llog_process_or_fork -> llog_process_thread -> class_config_llog_handler -> class_process_config -> class_attach -> class_newdev. At the end of this chain, the records pushed by the MGS have become OBDs on the client.
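How a sequence of log records turns into devices can be sketched in userspace as a small record handler. This is a simplified model, not the kernel's class_config_llog_handler: all demo_ names are hypothetical, only attach and setup records are modeled, and devices live in a fixed-size table.

```c
#include <assert.h>
#include <string.h>

enum demo_cmd { DEMO_ATTACH, DEMO_SETUP };

/* One simplified config record: command, device name, device type. */
struct demo_rec {
	enum demo_cmd cmd;
	const char *name;
	const char *type;	/* only used by DEMO_ATTACH */
};

struct demo_dev {
	char name[48];
	char type[8];
	int set_up;
};

struct demo_dev_table {
	struct demo_dev devs[16];
	int count;
};

static struct demo_dev *demo_find(struct demo_dev_table *t, const char *name)
{
	for (int i = 0; i < t->count; i++)
		if (strcmp(t->devs[i].name, name) == 0)
			return &t->devs[i];
	return NULL;
}

/* Apply one record: attach creates a device, setup marks it ready. */
static int demo_handle_rec(struct demo_dev_table *t, const struct demo_rec *r)
{
	struct demo_dev *d;

	assert(t != NULL && r != NULL && r->name != NULL);

	switch (r->cmd) {
	case DEMO_ATTACH:
		if (r->type == NULL || demo_find(t, r->name) != NULL ||
		    t->count == 16)
			return -1;
		if (strlen(r->name) >= sizeof(d->name) ||
		    strlen(r->type) >= sizeof(d->type))
			return -1;
		d = &t->devs[t->count++];
		strcpy(d->name, r->name);
		strcpy(d->type, r->type);
		d->set_up = 0;
		return 0;
	case DEMO_SETUP:
		d = demo_find(t, r->name);
		if (d == NULL)
			return -1;	/* setup before attach is an error */
		d->set_up = 1;
		return 0;
	}
	return -1;
}
```

Feeding it the attach and setup pairs for bigfs-clilov and bigfs-MDT0000-mdc from the llog_reader output leaves two ready devices in the table, which mirrors on a small scale what the client ends up with after the first pass over bigfs-client.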

int lustre_process_log(struct super_block *sb, char *logname,

struct config_llog_instance *cfg)

{

struct lustre_cfg *lcfg;

struct lustre_cfg_bufs *bufs;

struct lustre_sb_info *lsi = s2lsi(sb);

struct obd_device *mgc = lsi->lsi_mgc;

int rc;

OBD_ALLOC(lcfg, lustre_cfg_len(bufs->lcfg_bufcount, bufs->lcfg_buflen));

lustre_cfg_init(lcfg, LCFG_LOG_START, bufs);

// lustre处理config

rc = obd_process_config(mgc, sizeof(*lcfg), lcfg)

{

// 添加到队列中进行Watch变更

struct config_llog_data cld = config_log_add(obd, logname, cfg, sb)

{

do_config_log_add(obd, logname, MGS_CFG_T_CONFIG, cfg, sb);

}

// mgs端的日志处理

rc = mgc_process_log(obd, cld);

}

RETURN(rc);

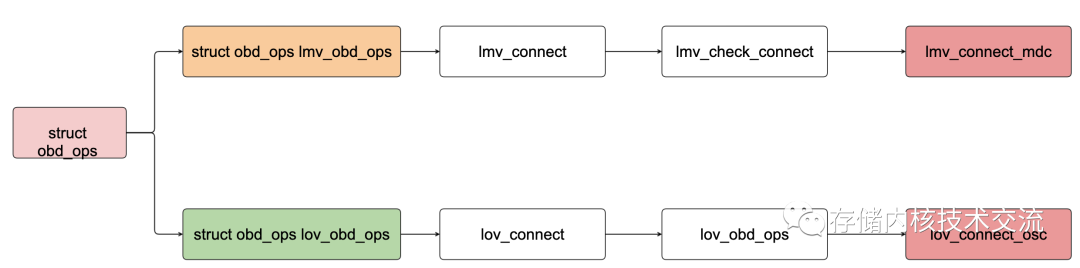

}client_common_fill_super函数核心逻辑连接mds/oss同时设置struct file_operations.lmv是聚合多个mds的抽象层,lov是聚合osc的抽象层

    // method table defined by lmv
    static const struct obd_ops lmv_obd_ops = {
        .o_owner          = THIS_MODULE,
        .o_setup          = lmv_setup,
        .o_cleanup        = lmv_cleanup,
        .o_precleanup     = lmv_precleanup,
        .o_process_config = lmv_process_config,
        .o_connect        = lmv_connect,
        .o_disconnect     = lmv_disconnect,
        .o_statfs         = lmv_statfs,
        .o_get_info       = lmv_get_info,
        .o_set_info_async = lmv_set_info_async,
        .o_notify         = lmv_notify,
        .o_get_uuid       = lmv_get_uuid,
        .o_fid_alloc      = lmv_fid_alloc,
        .o_iocontrol      = lmv_iocontrol,
        .o_quotactl       = lmv_quotactl
    };

    // method table defined by lov
    static const struct obd_ops lov_obd_ops = {
        .o_owner          = THIS_MODULE,
        .o_setup          = lov_setup,
        .o_cleanup        = lov_cleanup,
        .o_connect        = lov_connect,
        .o_disconnect     = lov_disconnect,
        .o_statfs         = lov_statfs,
        .o_iocontrol      = lov_iocontrol,
        .o_get_info       = lov_get_info,
        .o_set_info_async = lov_set_info_async,
        .o_notify         = lov_notify,
        .o_pool_new       = lov_pool_new,
        .o_pool_rem       = lov_pool_remove,
        .o_pool_add       = lov_pool_add,
        .o_pool_del       = lov_pool_del,
        .o_quotactl       = lov_quotactl,
    };

    // Abridged call tree: a brace block after a call shows what the callee does
    static int client_common_fill_super(struct super_block *sb, char *md, char *dt)
    {
        sbi->ll_fop = ll_select_file_operations(sbi);

        // connect the mdc devices behind lmv; this dispatches to
        // lmv_obd_ops.o_connect, i.e. lmv_connect
        obd_connect(NULL, &sbi->ll_md_exp, sbi->ll_md_obd, &sbi->ll_sb_uuid,
                    data, sbi->ll_cache)
        {
            lmv_connect()
            {
                lmv_check_connect(obd)
                {
                    lmv_mdt0_inited(lmv)
                    lmv_foreach_tgt(lmv, tgt) {
                        lmv_connect_mdc(obd, tgt);
                    }
                }
            }
        }

        // initialize FID allocation
        obd_fid_init(sbi->ll_md_exp->exp_obd, sbi->ll_md_exp,
                     LUSTRE_SEQ_METADATA);

        // connect to the OSS behind lov; this dispatches to
        // lov_obd_ops.o_connect, i.e. lov_connect
        obd_connect(NULL, &sbi->ll_dt_exp, sbi->ll_dt_obd, &sbi->ll_sb_uuid,
                    data, sbi->ll_cache)
        {
            lov_connect() {
                for (i = 0; i < lov->desc.ld_tgt_count; i++) {
                    lov_connect_osc(obd, i, tgt->ltd_activate, &lov->lov_ocd);
                }
            }
        }
    }

// 设置file的操作函数

const struct file_operations *ll_select_file_operations(struct ll_sb_info *sbi)

{

const struct file_operations *fops = &ll_file_operations_noflock;

if (test_bit(LL_SBI_FLOCK, sbi->ll_flags))

fops = &ll_file_operations_flock;

else if (test_bit(LL_SBI_LOCALFLOCK, sbi->ll_flags))

fops = &ll_file_operations;

return fops;

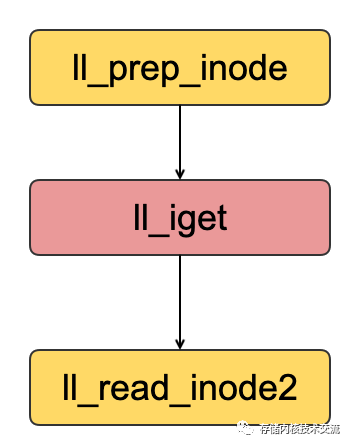

}lustre针对元数据和数据操作定义了file_operations和ll_dir_inode_operations.其中涉及到元数据的操作的inode通过ll_iget设置操作函数。

    // VFS-level method table for file reads and writes
    static const struct file_operations ll_file_operations = {
    #ifdef HAVE_FILE_OPERATIONS_READ_WRITE_ITER
    # ifdef HAVE_SYNC_READ_WRITE
        .read           = new_sync_read,
        .write          = new_sync_write,
    # endif
        .read_iter      = ll_file_read_iter,
        .write_iter     = ll_file_write_iter,
    #else /* !HAVE_FILE_OPERATIONS_READ_WRITE_ITER */
        .read           = ll_file_read,
        .aio_read       = ll_file_aio_read,
        .write          = ll_file_write,
        .aio_write      = ll_file_aio_write,
    #endif /* HAVE_FILE_OPERATIONS_READ_WRITE_ITER */
        .unlocked_ioctl = ll_file_ioctl,
        .open           = ll_file_open,
        .release        = ll_file_release,
        .mmap           = ll_file_mmap,
        .llseek         = ll_file_seek,
    #ifndef HAVE_DEFAULT_FILE_SPLICE_READ_EXPORT
        .splice_read    = generic_file_splice_read,
    #else
        .splice_read    = pcc_file_splice_read,
    #endif
        .fsync          = ll_fsync,
        .flush          = ll_flush,
        .fallocate      = ll_fallocate,
    };

    // VFS-level method table for attributes and permissions of regular files
    const struct inode_operations ll_file_inode_operations = {
        .setattr     = ll_setattr,
        .getattr     = ll_getattr,
        .permission  = ll_inode_permission,
    #ifdef HAVE_IOP_XATTR
        .setxattr    = ll_setxattr,
        .getxattr    = ll_getxattr,
        .removexattr = ll_removexattr,
    #endif
        .listxattr   = ll_listxattr,
        .fiemap      = ll_fiemap,
        .get_acl     = ll_get_acl,
    #ifdef HAVE_IOP_SET_ACL
        .set_acl     = ll_set_acl,
    #endif
    };

    // method table for metadata (directory) operations
    const struct inode_operations ll_dir_inode_operations = {
        .mknod       = ll_mknod,
        .atomic_open = ll_atomic_open,
        .lookup      = ll_lookup_nd,
        .create      = ll_create_nd,
        /* We need all these non-raw things for NFSD, to not patch it. */
        .unlink      = ll_unlink,
        .mkdir       = ll_mkdir,
        .rmdir       = ll_rmdir,
        .symlink     = ll_symlink,
        .link        = ll_link,
        .rename      = ll_rename,
        .setattr     = ll_setattr,
        .getattr     = ll_getattr,
        .permission  = ll_inode_permission,
    #ifdef HAVE_IOP_XATTR
        .setxattr    = ll_setxattr,
        .getxattr    = ll_getxattr,
        .removexattr = ll_removexattr,
    #endif
        .listxattr   = ll_listxattr,
        .get_acl     = ll_get_acl,
    #ifdef HAVE_IOP_SET_ACL
        .set_acl     = ll_set_acl,
    #endif
    };

    const struct inode_operations ll_special_inode_operations = {
        .setattr     = ll_setattr,
        .getattr     = ll_getattr,
        .permission  = ll_inode_permission,
    #ifdef HAVE_IOP_XATTR
        .setxattr    = ll_setxattr,
        .getxattr    = ll_getxattr,
        .removexattr = ll_removexattr,
    #endif
        .listxattr   = ll_listxattr,
        .get_acl     = ll_get_acl,
    #ifdef HAVE_IOP_SET_ACL
        .set_acl     = ll_set_acl,
    #endif
    };

    // assign the operation tables of an inode according to its type
    int ll_read_inode2(struct inode *inode, void *opaque)
    {
        struct lustre_md *md = opaque;
        struct ll_inode_info *lli = ll_i2info(inode);
        int rc;

        if (S_ISREG(inode->i_mode)) {
            struct ll_sb_info *sbi = ll_i2sbi(inode);

            inode->i_op = &ll_file_inode_operations;
            inode->i_fop = sbi->ll_fop;
            inode->i_mapping->a_ops = (struct address_space_operations *)&ll_aops;
            EXIT;
        } else if (S_ISDIR(inode->i_mode)) {
            inode->i_op = &ll_dir_inode_operations;
            inode->i_fop = &ll_dir_operations;
            EXIT;
        } else if (S_ISLNK(inode->i_mode)) {
            inode->i_op = &ll_fast_symlink_inode_operations;
            EXIT;
        } else {
            inode->i_op = &ll_special_inode_operations;
            init_special_inode(inode, inode->i_mode,
                               inode->i_rdev);
            EXIT;
        }
        return 0;
    }
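The type-based dispatch in ll_read_inode2 can be tried out in user space with the same S_ISREG/S_ISDIR/S_ISLNK macros. The helper below is hypothetical: instead of assigning kernel operation tables it only returns the name of the table that ll_read_inode2 would pick for a given mode.

```c
#define _DEFAULT_SOURCE		/* make S_IFLNK and friends visible on glibc */
#include <assert.h>
#include <string.h>
#include <sys/stat.h>

/*
 * Hypothetical helper: map a file mode to the name of the
 * inode_operations table chosen in ll_read_inode2.
 */
static const char *demo_inode_ops_for_mode(mode_t mode)
{
	if (S_ISREG(mode))
		return "ll_file_inode_operations";
	if (S_ISDIR(mode))
		return "ll_dir_inode_operations";
	if (S_ISLNK(mode))
		return "ll_fast_symlink_inode_operations";
	/* devices, FIFOs and sockets share the "special" table */
	return "ll_special_inode_operations";
}
```

A mode of S_IFDIR | 0755 maps to ll_dir_inode_operations, while a character device such as S_IFCHR | 0600 falls through to ll_special_inode_operations, matching the final else branch of the kernel function.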

Originally published: 2022-10-14.
