Attention Is All You Need


YoungTimes

Published 2023-09-01 08:57:00


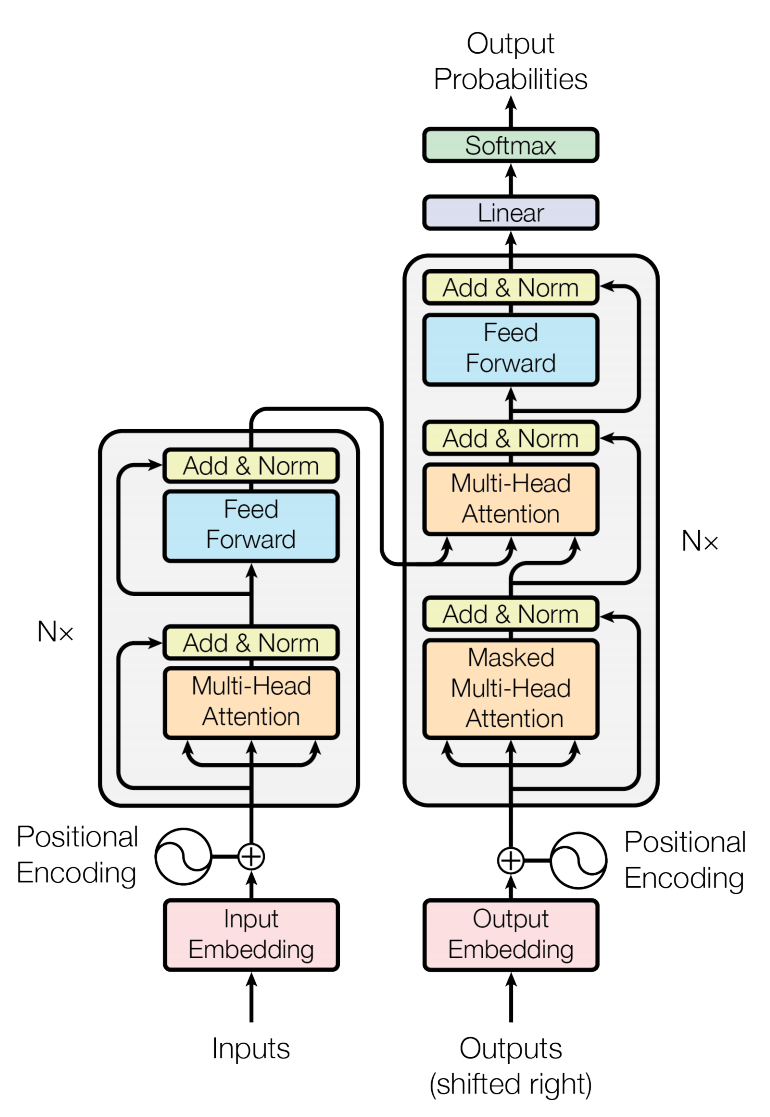

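Attention addresses two weaknesses of RNN-style models: long-range dependencies across a sequence and the lack of parallelism in computation. Multi-Head Attention provides an effect similar to the multiple channels of a CNN. The overall Transformer architecture is as follows: both the Encoder and the Decoder use stacked self-attention and point-wise, fully connected layers (MLP).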

Encoder

The Encoder consists of 6 layers, each with 2 sub-layers: Sub-Layer-1 is Multi-Head Self-Attention and Sub-Layer-2 is an MLP. Each sub-layer is also wrapped with a Residual Connection and Layer Normalization.

Decoder

The Decoder consists of 6 layers, each with 3 sub-layers. Sub-Layer-1 is Masked Multi-Head Self-Attention; the mask ensures that "predictions for position $i$ can depend only on the known outputs at positions less than $i$". Sub-Layer-2 is Multi-Head Attention over the output of the Encoder stack, and Sub-Layer-3 is an MLP. Each sub-layer is also wrapped with a Residual Connection and Layer Normalization.
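For reference, the way a sub-layer is combined with its Residual Connection and Layer Normalization can be written compactly. In the paper's notation, where $\mathrm{Sublayer}(x)$ stands for the function computed by the sub-layer itself (attention or MLP), the output of each sub-layer is:

$$\mathrm{LayerNorm}(x + \mathrm{Sublayer}(x))$$

The addition requires the sub-layer output to have the same dimension as its input, which is why all sub-layers and the embedding layers share the output dimension $d_{model}$.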

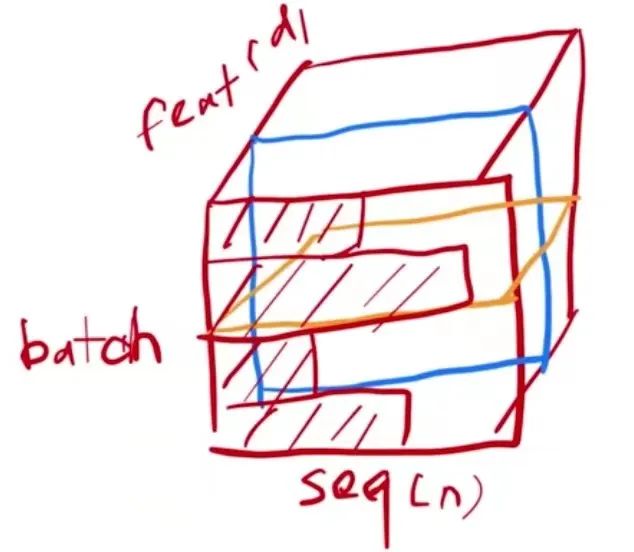

Layer Normalization

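In machine translation, input sequences usually differ in length. Batch Normalization normalizes across the samples in a batch, whereas Layer Normalization normalizes within a single sample, so Layer Normalization is the better fit here.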

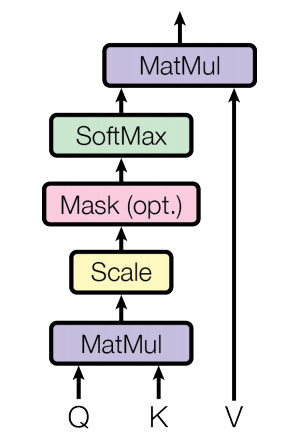
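To make the per-sample idea concrete, here is a minimal sketch of Layer Normalization in plain Python. It is an illustration under simplifying assumptions, not the paper's implementation: the learnable gain and bias are omitted, the `eps` value is an arbitrary choice, and the toy sequences are invented for the example.

```python
import math

def layer_norm(x, eps=1e-5):
    """Normalize one token's feature vector to zero mean and (roughly) unit variance.

    Simplified: no learnable gain/bias; eps is an assumed small constant.
    """
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]

# Two toy sequences of different lengths (1 token vs 3 tokens, 4 features each).
short_seq = [[1.0, 2.0, 3.0, 4.0]]
long_seq = [[2.0, 4.0, 6.0, 8.0], [0.0, 1.0, 0.0, 1.0], [5.0, 5.0, 5.0, 9.0]]

# Each token is normalized using only its own features, so the statistics
# never depend on sequence length or on other samples in the batch.
normed_short = [layer_norm(tok) for tok in short_seq]
normed_long = [layer_norm(tok) for tok in long_seq]
```

Because the statistics are computed per token, padding or unequal lengths in a batch do not distort them, which is the property the text above relies on.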

Scaled Dot-Product Attention


Attention is essentially a weighted-sum mechanism. It is computed as follows:

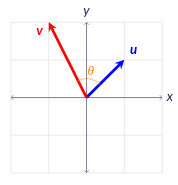
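The paper's formula is $\mathrm{Attention}(Q, K, V) = \mathrm{softmax}(QK^T / \sqrt{d_k})\,V$. The sketch below spells it out with plain Python lists so each step is visible; the helper names and the toy matrices are assumptions made for illustration, and a real implementation would use batched tensor operations.

```python
import math

def softmax(row):
    """Numerically stable softmax over a list of floats."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(Q, K, V):
    """Q: n x d_k, K: m x d_k, V: m x d_v (lists of lists). Returns n x d_v."""
    d_k = len(K[0])
    output = []
    for q in Q:
        # Similarity of this query with every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights = softmax(scores)  # non-negative and sums to 1
        # Weighted sum of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, V)) for j in range(len(V[0]))]
        output.append(out)
    return output

# Toy example: each query matches one key more closely than the other.
Q = [[1.0, 0.0], [0.0, 1.0]]
K = [[1.0, 0.0], [0.0, 1.0]]
V = [[10.0, 0.0], [0.0, 10.0]]
out = scaled_dot_product_attention(Q, K, V)
```

Each output row is a convex combination of the rows of `V`, weighted toward the value whose key is most similar to the query.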

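- Why does the inner product of Q and K measure their similarity?

Source: https://charlieleee.github.io/post/cmc/

The similarity of two vectors is measured by the cosine of the angle between them: the smaller the angle, the larger the cosine and the more similar the vectors. The inner product equals this cosine multiplied by the two vector norms, $q \cdot k = \lVert q \rVert \lVert k \rVert \cos\theta$.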
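A small numeric check may help here. The sketch below compares the raw dot product with the cosine for a few hand-picked vectors; the vectors and helper names are invented for illustration.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cosine(a, b):
    # cos(theta) = (a . b) / (|a| * |b|)
    return dot(a, b) / (math.sqrt(dot(a, a)) * math.sqrt(dot(b, b)))

q = [1.0, 2.0]
k_aligned = [2.0, 4.0]       # same direction as q
k_orthogonal = [-2.0, 1.0]   # perpendicular to q
k_opposite = [-1.0, -2.0]    # opposite direction to q

cos_aligned = cosine(q, k_aligned)        # close to 1
cos_orthogonal = cosine(q, k_orthogonal)  # close to 0
cos_opposite = cosine(q, k_opposite)      # close to -1

# The raw dot product follows the same ordering but also grows with vector length.
dots = [dot(q, k) for k in (k_aligned, k_orthogonal, k_opposite)]
```

Note that the dot product used in attention is not normalized by the vector lengths, so it reflects both direction and magnitude.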

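- Why divide by $\sqrt{d_k}$?

“While for small values of $d_k$ the two mechanisms perform similarly, additive attention outperforms dot product attention without scaling for larger values of $d_k$. We suspect that for large values of $d_k$, the dot products grow large in magnitude, pushing the softmax function into regions where it has extremely small gradients.”

Put simply, when $d_k$ is large in Dot-Product Attention, the dot products can become very large or very small, which widens the gaps between scores. After softmax, the large values move toward 1 and the small ones toward 0, so the gradients become tiny and the network is hard to train. $d_k$ in the Transformer is fairly large, so dividing by $\sqrt{d_k}$ is a good choice.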
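The effect can be observed numerically. The following sketch draws random queries and keys (an assumption: components sampled from a standard normal distribution, with an arbitrary seed and $d_k = 64$) and compares the softmax output with and without the $\sqrt{d_k}$ scaling.

```python
import math
import random

def softmax(row):
    """Numerically stable softmax over a list of floats."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]

random.seed(0)   # arbitrary seed, only for reproducibility
d_k = 64         # key dimension used for this illustration
n_keys = 8       # hypothetical number of keys

q = [random.gauss(0.0, 1.0) for _ in range(d_k)]
keys = [[random.gauss(0.0, 1.0) for _ in range(d_k)] for _ in range(n_keys)]

# With unit-variance components, q . k has variance of about d_k.
raw_scores = [sum(a * b for a, b in zip(q, k)) for k in keys]
scaled_scores = [s / math.sqrt(d_k) for s in raw_scores]

p_raw = softmax(raw_scores)
p_scaled = softmax(scaled_scores)

# Diagonal of the softmax Jacobian is p_i * (1 - p_i); it vanishes
# when the distribution collapses onto a single key.
grad_raw = max(p * (1.0 - p) for p in p_raw)
grad_scaled = max(p * (1.0 - p) for p in p_scaled)

print("max weight, unscaled:", max(p_raw), " scaled:", max(p_scaled))
print("max p(1-p), unscaled:", grad_raw, " scaled:", grad_scaled)
```

Dividing the scores by a constant greater than 1 can only flatten the softmax distribution, so the largest weight after scaling is never larger than the unscaled one.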

- What is the Mask?

The mask prevents the network from seeing inputs that come after the current time step.
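One common way to realize such a mask is to set the scores of future positions to negative infinity before the softmax, so that their weights become exactly zero. The sketch below shows this with plain Python lists; the function names and score values are invented for illustration.

```python
import math

def softmax(row):
    """Numerically stable softmax; entries equal to -inf get weight 0."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]

def causal_mask(n):
    """mask[i][j] is True when position i may attend to position j (j <= i)."""
    return [[j <= i for j in range(n)] for i in range(n)]

def masked_softmax(scores, mask):
    neg_inf = float("-inf")
    masked = [
        [s if allowed else neg_inf for s, allowed in zip(row, mask_row)]
        for row, mask_row in zip(scores, mask)
    ]
    return [softmax(row) for row in masked]

# Toy attention scores for a sequence of 3 positions.
scores = [
    [0.5, 1.2, -0.3],
    [0.1, 0.4, 2.0],
    [1.0, 0.0, 0.5],
]
weights = masked_softmax(scores, causal_mask(3))
# Position 0 can only attend to itself, so weights[0] is [1.0, 0.0, 0.0].
```

The diagonal is always allowed, so every row keeps at least one finite score and the softmax stays well defined.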

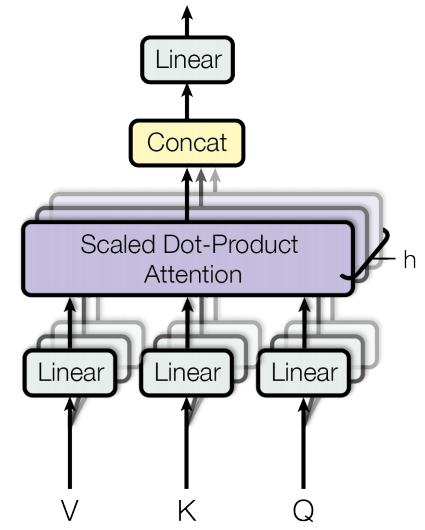

Multi-Head Attention


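In Multi-Head Attention, Q, K and V first pass through Linear layers. Like the multi-channel mechanism of a CNN, this gives the network h separate projections to learn, so it has a chance to find better ones.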

In the paper's setup:

“In this work we employ h = 8 parallel attention layers, or heads. For each of these we use $d_k = d_v = d_{model}/h = 64$. Due to the reduced dimension of each head, the total computational cost is similar to that of single-head attention with full dimensionality.”
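The projection, per-head attention, concatenation and output projection can be sketched as follows. This is a toy illustration under stated assumptions: the sizes ($d_{model} = 8$, $h = 2$) are shrunk from the paper's $d_{model} = 512$, $h = 8$, the weights are random rather than learned, and all helper names are hypothetical.

```python
import math
import random

def matmul(A, B):
    """Multiply an n x p matrix by a p x m matrix (lists of lists)."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def softmax(row):
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]

def attention(Q, K, V):
    """Scaled dot-product attention on lists of lists."""
    d_k = len(K[0])
    K_t = [list(col) for col in zip(*K)]
    scores = matmul(Q, K_t)
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, V)

def random_matrix(rows, cols, rng):
    """Random stand-in for learned weights (assumption for this sketch)."""
    return [[rng.gauss(0.0, 1.0 / math.sqrt(rows)) for _ in range(cols)] for _ in range(rows)]

def multi_head_attention(X, params):
    """Self-attention case: queries, keys and values all come from X."""
    heads = []
    for W_q, W_k, W_v in params["heads"]:
        # Each head projects X into its own lower-dimensional subspace.
        heads.append(attention(matmul(X, W_q), matmul(X, W_k), matmul(X, W_v)))
    # Concatenate the head outputs per token: seq_len x (h * d_v).
    concat = [sum((head[i] for head in heads), []) for i in range(len(X))]
    return matmul(concat, params["W_o"])

rng = random.Random(0)       # arbitrary seed
d_model, h = 8, 2            # toy sizes
d_k = d_model // h           # per-head dimension, as in d_k = d_model / h
params = {
    "heads": [tuple(random_matrix(d_model, d_k, rng) for _ in range(3)) for _ in range(h)],
    "W_o": random_matrix(h * d_k, d_model, rng),
}
X = random_matrix(5, d_model, rng)   # 5 tokens
Y = multi_head_attention(X, params)  # 5 x d_model
```

Because each head works in dimension $d_{model}/h$, the h heads together cost roughly as much as one head at full dimension, which matches the remark in the quote.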

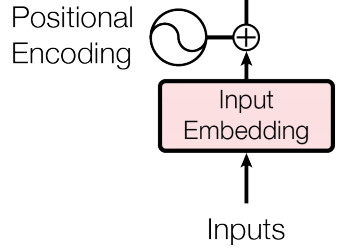

Embedding & Positional Encoding

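Input Embedding maps each input token to a vector of dimension $d_{model}$. The embedding weights are multiplied by $\sqrt{d_{model}}$ so that the scale of the Input Embedding roughly matches that of the Positional Encoding.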

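Positional Encoding uses sine and cosine functions of different frequencies, chosen so that relative positions are easy to express:

$$PE_{(pos, 2i)} = \sin\left(pos / 10000^{2i/d_{model}}\right), \quad PE_{(pos, 2i+1)} = \cos\left(pos / 10000^{2i/d_{model}}\right)$$

“where pos is the position and i is the dimension. That is, each dimension of the positional encoding corresponds to a sinusoid. The wavelengths form a geometric progression from 2π to 10000 · 2π. We chose this function because we hypothesized it would allow the model to easily learn to attend by relative positions, since for any fixed offset k, $PE_{pos+k}$ can be represented as a linear function of $PE_{pos}$.”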
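The linearity claim can be checked directly. The sketch below builds the sinusoidal table (sine on even dimensions, cosine on odd ones, with angle $pos / 10000^{2i/d_{model}}$ as in the paper) and then recovers the encoding at position pos + k from the encoding at pos using a rotation that depends only on k. The function names, table size and chosen positions are assumptions for illustration.

```python
import math

def positional_encoding(max_len, d_model):
    """Return a max_len x d_model table of sinusoidal encodings (d_model even)."""
    pe = [[0.0] * d_model for _ in range(max_len)]
    for pos in range(max_len):
        for i in range(d_model // 2):
            angle = pos / (10000 ** (2 * i / d_model))
            pe[pos][2 * i] = math.sin(angle)
            pe[pos][2 * i + 1] = math.cos(angle)
    return pe

def shift(pe_pos, k, d_model):
    """Compute the encoding at pos + k from the encoding at pos.

    Uses the angle-addition identities, i.e. a 2 x 2 rotation per
    (sin, cos) pair whose entries depend only on the offset k.
    """
    out = [0.0] * d_model
    for i in range(d_model // 2):
        w = 1.0 / (10000 ** (2 * i / d_model))
        s, c = pe_pos[2 * i], pe_pos[2 * i + 1]
        out[2 * i] = s * math.cos(w * k) + c * math.sin(w * k)
        out[2 * i + 1] = c * math.cos(w * k) - s * math.sin(w * k)
    return out

d_model = 16                       # toy size
pe = positional_encoding(50, d_model)
predicted = shift(pe[7], 5, d_model)
max_error = max(abs(a - b) for a, b in zip(predicted, pe[12]))
print("max error between shifted PE[7] and PE[12]:", max_error)
```

The same `shift` works for any starting position, which is what makes a fixed offset expressible as a linear map on the encoding.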


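This article is part of the Tencent Cloud self-media syndication program and is shared from a WeChat official account.

Originally published: 2023-05-14. In case of infringement, contact cloudcommunity@tencent.com for removal.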
