基于Pytorch构建ResNet网络对cifar-10进行分类

基于Pytorch构建ResNet网络对cifar-10进行分类

python与大数据分析

发布于 2023-09-03 21:23:16

发布于 2023-09-03 21:23:16

何凯明等人在2015年提出的ResNet,在ImageNet比赛classification任务上获得第一名,获评CVPR2016最佳论文。

自从深度神经网络在ImageNet大放异彩之后,后来问世的深度神经网络就朝着网络层数越来越深的方向发展,从LeNet、AlexNet、VGG-Net、GoogLeNet。直觉上我们不难得出结论:增加网络深度后,网络可以进行更加复杂的特征提取,因此更深的模型可以取得更好的结果。

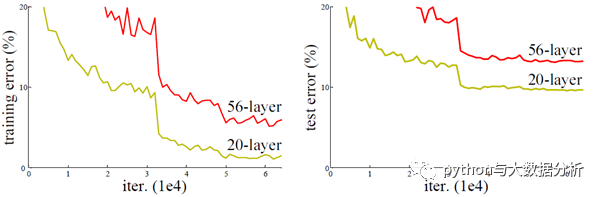

但事实并非如此,人们发现随着网络深度的增加,模型精度并不总是提升,并且这个问题显然不是由过拟合(overfitting)造成的,因为网络加深后不仅测试误差变高了,它的训练误差竟然也变高了。作者提出,这可能是因为更深的网络会伴随梯度消失/爆炸问题,从而阻碍网络的收敛。将这种加深网络深度但网络性能却下降的现象称为退化问题。例如传统神经网络的层数从20增加为56时,网络的训练误差和测试误差均出现了明显的增长。

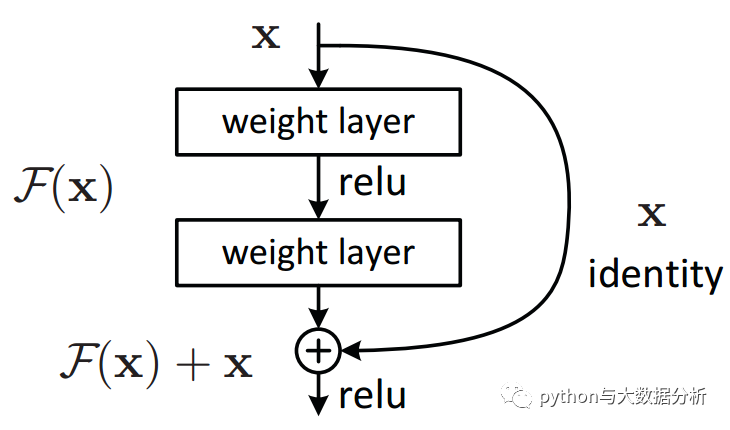

何博士灵感爆发,他提出了残差学习来解决退化问题。对于一个堆积层结构(几层堆积而成)当输入为 时其学习到的特征记为 H(x) ,现在我们希望其可以学习到残差 F(x)=H(x)-x ,这样其实原始的学习特征是 F(x)+x 。之所以这样是因为残差学习相比原始特征直接学习更容易。当残差为0时,此时堆积层仅仅做了恒等映射,至少网络性能不会下降,实际上残差不会为0,这也会使得堆积层在输入特征基础上学习到新的特征,从而拥有更好的性能。残差学习的结构如图4所示。这有点类似与电路中的“短路”,所以是一种短路连接

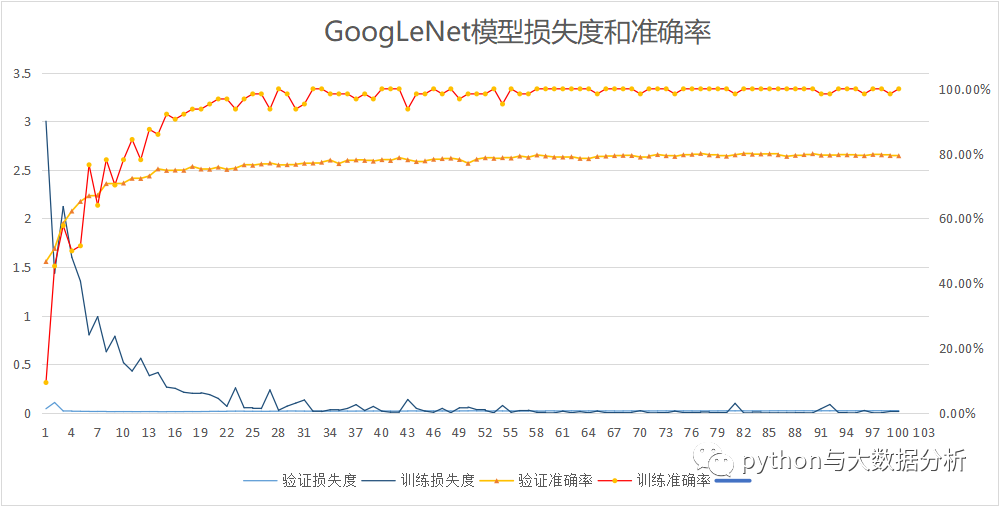

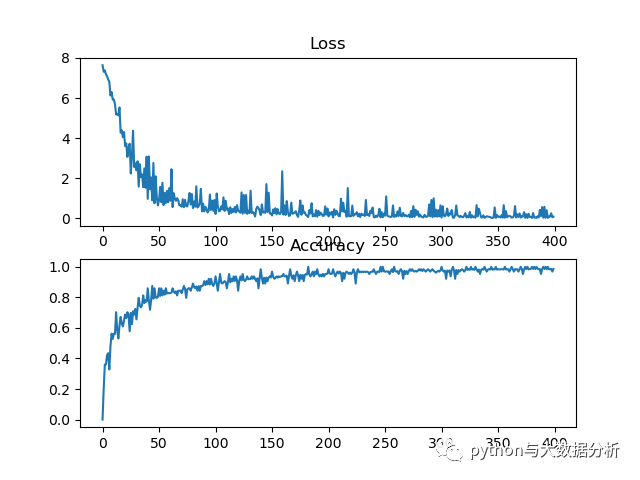

而且网络的训练,也并不是越多轮次越好,这一点可以参考一下GoogLeNet网络的训练和验证数据,基本上到了60轮次,就出现上下波动了。

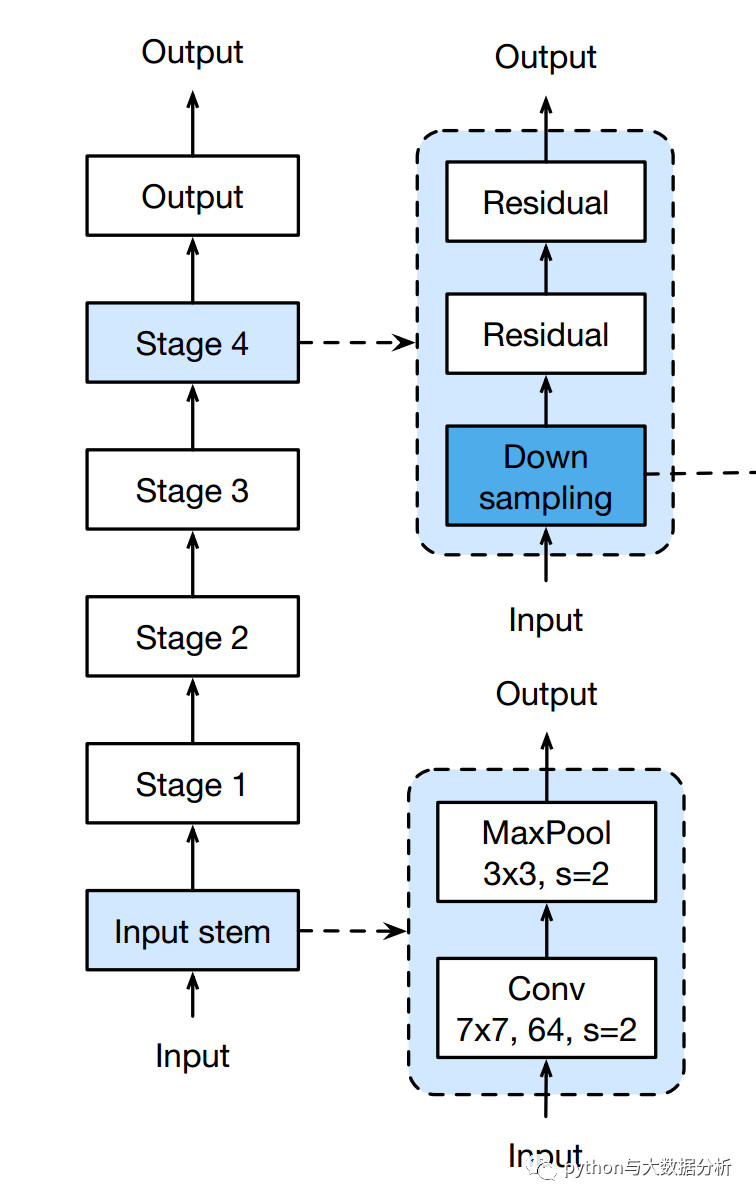

ResNet主要有五种主要形式:Res18,Res34,Res50,Res101,Res152;

如下图所示,每个网络都包括三个主要部分:输入部分、输出部分和中间卷积部分(中间卷积部分包括如图所示的Stage1到Stage4共计四个stage)。尽管ResNet的变种形式丰富,但都遵循上述的结构特点,网络之间的不同主要在于中间卷积部分的block参数和个数存在差异。

具体代码如下:

#定义ResNet网络# 3x3卷积定义def conv3x3(in_channels, out_channels, kernel_size = 3,stride=1, padding=1):return nn.Conv2d(in_channels, out_channels, kernel_size=kernel_size,stride=stride, padding=padding, bias=False)# Resnet_50中的残差块classResidualBlock(nn.Module):def __init__(self, in_channels, out_channels, stride=1, downsample=None):super(ResidualBlock, self).__init__()self.mid_channels = out_channels//4self.conv1 = conv3x3(in_channels, self.mid_channels, kernel_size=1, stride=stride, padding=0)#Resnet50中,从第二个残差块开始每个layer的第一个残差块都需要一次downsampleself.bn1 = nn.BatchNorm2d(self.mid_channels)self.relu = nn.ReLU(inplace=True)self.conv2 = conv3x3(self.mid_channels, self.mid_channels)self.bn2 = nn.BatchNorm2d(self.mid_channels)self.conv3 = conv3x3(self.mid_channels, out_channels,kernel_size=1,padding=0)self.bn3 = nn.BatchNorm2d(out_channels)self.downsample_0 = conv3x3(in_channels,out_channels,kernel_size=1,stride=1,padding=0)self.downsample = downsampledef forward(self, x):residual = xout = self.conv1(x)out = self.bn1(out)out = self.relu(out)out = self.conv2(out)out = self.bn2(out)out = self.conv3(out)out = self.bn3(out)if self.downsample:residual = self.downsample(x)else:residual = self.downsample_0(x)out += residualout = self.bn3(out)out = self.relu(out)return out# ResNet定义classResNet(nn.Module):def __init__(self, block, layers, num_classes=10):super(ResNet, self).__init__()self.conv = conv3x3(3, 64,kernel_size=7,stride=2)self.bn = nn.BatchNorm2d(64)self.max_pool = nn.MaxPool2d(3,2,padding=1)self.layer1 = self.make_layer(block, 64, 256, layers[0])self.layer2 = self.make_layer(block, 256, 512, layers[1], 2)self.layer3 = self.make_layer(block, 512, 1024, layers[2], 2)self.layer4 = self.make_layer(block, 1024, 2048, layers[3], 2)self.avg_pool = nn.AvgPool2d(3,stride=1,padding=1)self.fc = nn.Linear(math.ceil(img_height/32)*math.ceil(img_width/32)*2048, num_classes)def make_layer(self, block, in_channels, out_channels, blocks, stride=1):downsample = Noneif(stride != 1):downsample = nn.Sequential(conv3x3(in_channels, out_channels, kernel_size=3,stride=stride),nn.BatchNorm2d(out_channels))layers = []layers.append(block(in_channels, out_channels, stride, downsample))for i in range(1, blocks):layers.append(block(out_channels, out_channels))return nn.Sequential(*layers)def forward(self, x):out = self.conv(x)out = self.bn(out)out = self.max_pool(out)out = self.layer1(out)out = self.layer2(out)out = self.layer3(out)out = self.layer4(out)out = self.avg_pool(out)out = out.view( -1,math.ceil(img_height/32)*math.ceil(img_width/32)*2048)return out# 定义模型输出模式,GPU和CPU均可#Resnet-503-4-6-3总计(3+4+6+3)*3=48个conv层 加上开头的两个Conv一共50层model = ResNet(ResidualBlock, [3, 4, 6, 3]).to(DEVICE)

输出ResNet网络架构

ResNet((conv): Conv2d(3, 64, kernel_size=(7, 7), stride=(2, 2), padding=(1, 1), bias=False)(bn): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(max_pool): MaxPool2d(kernel_size=3, stride=2, padding=1, dilation=1, ceil_mode=False)(layer1): Sequential((0): ResidualBlock((conv1): Conv2d(64, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)(bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu): ReLU(inplace=True)(conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv3): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)(bn3): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(downsample_0): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False))(1): ResidualBlock((conv1): Conv2d(256, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)(bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu): ReLU(inplace=True)(conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv3): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)(bn3): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(downsample_0): Conv2d(256, 256, kernel_size=(1, 1), stride=(1, 1), bias=False))(2): ResidualBlock((conv1): Conv2d(256, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)(bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu): ReLU(inplace=True)(conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv3): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)(bn3): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(downsample_0): Conv2d(256, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)))(layer2): Sequential((0): ResidualBlock((conv1): Conv2d(256, 128, kernel_size=(1, 1), stride=(2, 2), bias=False)(bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu): ReLU(inplace=True)(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv3): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)(bn3): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(downsample_0): Conv2d(256, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)(downsample): Sequential((0): Conv2d(256, 512, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)(1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)))(1): ResidualBlock((conv1): Conv2d(512, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu): ReLU(inplace=True)(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv3): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)(bn3): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(downsample_0): Conv2d(512, 512, kernel_size=(1, 1), stride=(1, 1), bias=False))(2): ResidualBlock((conv1): Conv2d(512, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu): ReLU(inplace=True)(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv3): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)(bn3): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(downsample_0): Conv2d(512, 512, kernel_size=(1, 1), stride=(1, 1), bias=False))(3): ResidualBlock((conv1): Conv2d(512, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu): ReLU(inplace=True)(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv3): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)(bn3): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(downsample_0): Conv2d(512, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)))(layer3): Sequential((0): ResidualBlock((conv1): Conv2d(512, 256, kernel_size=(1, 1), stride=(2, 2), bias=False)(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu): ReLU(inplace=True)(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(downsample_0): Conv2d(512, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)(downsample): Sequential((0): Conv2d(512, 1024, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)(1): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)))(1): ResidualBlock((conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu): ReLU(inplace=True)(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(downsample_0): Conv2d(1024, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False))(2): ResidualBlock((conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu): ReLU(inplace=True)(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(downsample_0): Conv2d(1024, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False))(3): ResidualBlock((conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu): ReLU(inplace=True)(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(downsample_0): Conv2d(1024, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False))(4): ResidualBlock((conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu): ReLU(inplace=True)(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(downsample_0): Conv2d(1024, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False))(5): ResidualBlock((conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu): ReLU(inplace=True)(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(downsample_0): Conv2d(1024, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)))(layer4): Sequential((0): ResidualBlock((conv1): Conv2d(1024, 512, kernel_size=(1, 1), stride=(2, 2), bias=False)(bn1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu): ReLU(inplace=True)(conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv3): Conv2d(512, 2048, kernel_size=(1, 1), stride=(1, 1), bias=False)(bn3): BatchNorm2d(2048, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(downsample_0): Conv2d(1024, 2048, kernel_size=(1, 1), stride=(1, 1), bias=False)(downsample): Sequential((0): Conv2d(1024, 2048, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)(1): BatchNorm2d(2048, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)))(1): ResidualBlock((conv1): Conv2d(2048, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)(bn1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu): ReLU(inplace=True)(conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv3): Conv2d(512, 2048, kernel_size=(1, 1), stride=(1, 1), bias=False)(bn3): BatchNorm2d(2048, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(downsample_0): Conv2d(2048, 2048, kernel_size=(1, 1), stride=(1, 1), bias=False))(2): ResidualBlock((conv1): Conv2d(2048, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)(bn1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu): ReLU(inplace=True)(conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv3): Conv2d(512, 2048, kernel_size=(1, 1), stride=(1, 1), bias=False)(bn3): BatchNorm2d(2048, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(downsample_0): Conv2d(2048, 2048, kernel_size=(1, 1), stride=(1, 1), bias=False)))(avg_pool): AvgPool2d(kernel_size=3, stride=1, padding=1)(fc): Linear(in_features=2048, out_features=10, bias=True))

ResNet的损失率和准确率变化

说实话,到了ResNet基本上已经无法理解其数学原理了,只知道增加了残差网络,降低深度网络学习退化问题。

本文参与 腾讯云自媒体同步曝光计划,分享自微信公众号。

原始发表:2023-08-20,如有侵权请联系 cloudcommunity@tencent.com 删除

本文分享自 python与大数据分析 微信公众号,前往查看

如有侵权,请联系 cloudcommunity@tencent.com 删除。

本文参与 腾讯云自媒体同步曝光计划 ,欢迎热爱写作的你一起参与!

评论

登录后参与评论

推荐阅读

目录