System Design Interview 9 设计网络爬虫

System Design Interview 9 设计网络爬虫

s09g

发布于 2024-04-10 14:10:14

发布于 2024-04-10 14:10:14

In this chapter, we focus on web crawler design: an interesting and classic system design interview question.

在本章,我们重点讨论网络爬虫的设计,这也是一个有趣且经典的系统设计面试问题。

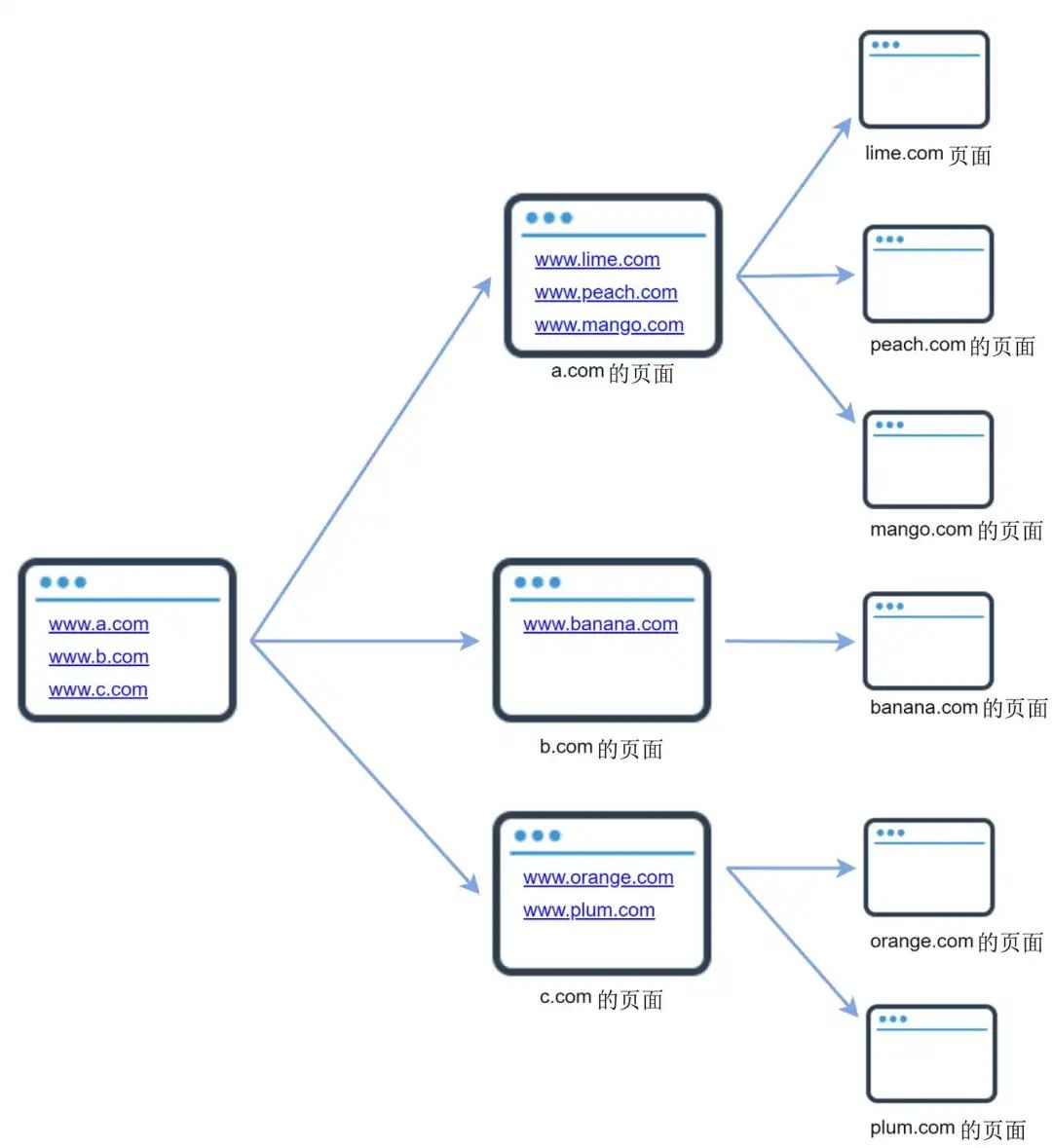

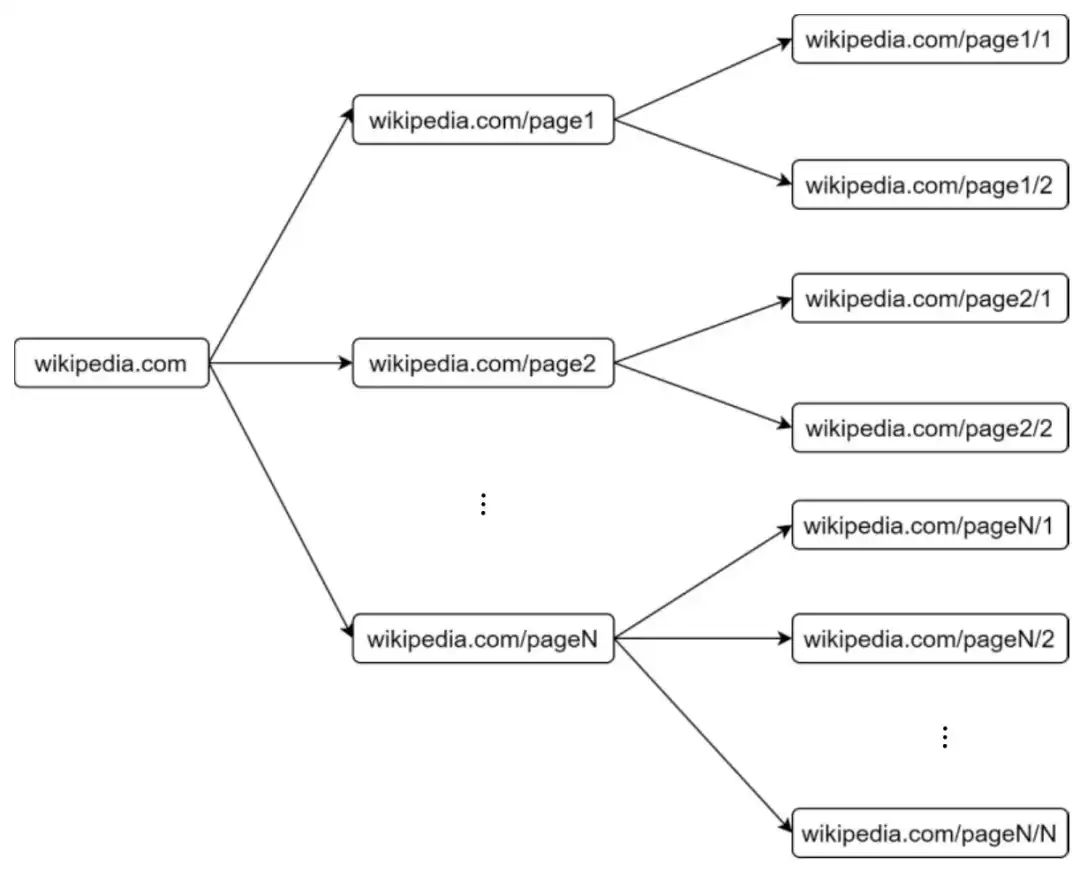

A web crawler is known as a robot or spider. It is widely used by search engines to discover new or updated content on the web. Content can be a web page, an image, a video, a PDF file, etc. A web crawler starts by collecting a few web pages and then follows links on those pages to collect new content. Figure 1 shows a visual example of the crawl process.

网络爬虫(Web Crawler,下文简称为“爬虫”)也称为机器人(Bot)或者蜘蛛(Spider),被搜索引擎广泛地用于发现网络上的新内容或者更新的内容。这些内容可以是网页、图片、视频、PDF文件等。爬虫从收集网页开始,然后顺着这些网页上的链接收集新的内容。图1展示了爬虫爬取页面的示例。

A crawler is used for many purposes:

爬虫有很多用途。

- Search engine indexing: This is the most common use case. A crawler collects web pages to create a local index for search engines. For example, Googlebot is the web crawler behind the Google search engine. 搜索引擎索引:这是最常见的使用场景。爬虫收集网页并为搜索引擎创建本地索引。例如,Googlebot就是谷歌搜索引擎背后的爬虫。

- Web archiving: This is the process of collecting information from the web to preserve data for future uses. For instance, many national libraries run crawlers to archive web sites. Notable examples are the US Library of Congress and the EU web archive. 网页存档:这是指从网上收集信息并保存起来以备未来使用的过程。很多国家图书馆运行爬虫来存档网站,比如美国国会图书馆和欧盟网页存档。

- Web mining: The explosive growth of the web presents an unprecedented opportunity for data mining. Web mining helps to discover useful knowledge from the internet. For example, top financial firms use crawlers to download shareholder meetings and annual reports to learn key company initiatives. 网络挖掘:互联网的迅猛发展为数据挖掘提供了前所未有的机会。网络挖掘帮助我们从互联网上发现有用的信息。比如,顶级金融公司使用爬虫来获取关键公司的股东会议信息和年报,从而了解它们的动向。

- Web monitoring. The crawlers help to monitor copyright and trademark infringements over the Internet. For example, Digimarc utilizes crawlers to discover pirated works and reports. 网络监控:爬虫可以帮助监控互联网上的版权和商标侵权行为。例如,Digimarc公司利用爬虫发现盗版作品并上报。

The complexity of developing a web crawler depends on the scale we intend to support. It could be either a small school project, which takes only a few hours to complete or a gigantic project that requires continuous improvement from a dedicated engineering team. Thus, we will explore the scale and features to support below.

爬虫开发的复杂性取决于我们想要支持的爬虫规模。它可以是一个小的学校项目,只需要几小时就可以完成,也可以是一个需要专业开发团队持续优化的巨型项目。因此,下面我们会先确定我们需要支持的爬虫规模和特性。

1 Step 1 - Understand the problem and establish design scope 第一步 理解问题并确定设计的边界

The basic algorithm of a web crawler is simple:

爬虫的基本算法很简单。

- Given a set of URLs, download all the web pages addressed by the URLs. 给定一组URL,下载这些URL对应的所有网页。

- Extract URLs from these web pages 从这些网页中提取URL。

- Add new URLs to the list of URLs to be downloaded. Repeat these 3 steps. 将新的URL添加到需要下载的URL列表里。然后重复执行这3个步骤。

Does a web crawler work truly as simple as this basic algorithm? Not exactly. Designing a vastly scalable web crawler is an extremely complex task. It is unlikely for anyone to design a massive web crawler within the interview duration. Before jumping into the design, we must ask questions to understand the requirements and establish design scope:

爬虫的工作真的像基本算法所述的这样简单吗?并不完全是。设计大规模的可扩展爬虫是一个极度复杂的任务。在面试时间内,没有人能设计出一个巨型爬虫。在着手设计之前,我们必须通过提问来理解需求并确定设计的边界。

Candidate: What is the main purpose of the crawler? Is it used for search engine indexing, data mining, or something else? Interviewer: Search engine indexing.

候选人:爬虫的主要目的是什么?是用于搜索引擎索引、数据挖掘还是其他什么?

面试官:搜索引擎索引。

Candidate: How many web pages does the web crawler collect per month? Interviewer: 1 billion pages.

候选人:爬虫每个月收集多少网页?

面试官:10亿个。

Candidate: What content types are included? HTML only or other content types such as PDFs and images as well? Interviewer: HTML only.

候选人:包括哪些内容类型?是只有HTML页面,还是也包括其他内容类型,比如PDF文件、图片等?

面试官:仅包括HTML页面。

Candidate: Shall we consider newly added or edited web pages? Interviewer: Yes, we should consider the newly added or edited web pages.

候选人:我们需要考虑新增的或者有更新的网页吗?

面试官:是的,我们应该要考虑新增的或者有更新的网页。

Candidate: Do we need to store HTML pages crawled from the web? Interviewer: Yes, up to 5 years

候选人:我们需要保存从网络上爬取的HTML页面吗?

面试官:是的,最多要保存5年。

Candidate: How do we handle web pages with duplicate content? Interviewer: Pages with duplicate content should be ignored.

候选人:我们如何处理有重复内容的网页?

面试官:忽略有重复内容的网页。

Above are some of the sample questions that you can ask your interviewer. It is important to understand the requirements and clarify ambiguities. Even if you are asked to design a straightforward product like a web crawler, you and your interviewer might not have the same assumptions.

以上是你可以向面试官提出的问题的一些示例。理解和厘清需求是很重要的。即使你被要求设计一个像爬虫这样简单的产品,你和面试官也可能会有不一样的假设。

Beside functionalities to clarify with your interviewer, it is also important to note down the following characteristics of a good web crawler:

除了要与面试官厘清功能需求,还要确定爬虫是否具备如下特性,它们都是一个好的爬虫应该具备的。

- Scalability: The web is very large. There are billions of web pages out there. Web crawling should be extremely efficient using parallelization. 可扩展性:互联网很庞大,存在数十亿的网页。爬虫需要通过并行化来高效爬取信息。

- Robustness: The web is full of traps. Bad HTML, unresponsive servers, crashes, malicious links, etc. are all common. The crawler must handle all those edge cases. 健壮性:网络上充满了陷阱。糟糕的HTML页面、无响应的服务器、宕机、恶意链接等都很常见。爬虫必须应对所有这些极端场景。

- Politeness: The crawler should not make too many requests to a website within a short time interval. 礼貌性:爬虫不应该在很短的时间间隔内对一个网站发送太多请求。

- Extensibility: The system is flexible so that minimal changes are needed to support new content types. For example, if we want to crawl image files in the future, we should not need to redesign the entire system. 可扩展性:系统应该具有灵活性,只需要做最少的更改就能支持新的内容类型。举个例子,如果我们将来想要爬取图片,应该不需要重新设计整个系统。

Back of the envelope estimation 封底估算

The following estimations are based on many assumptions, and it is important to communicate with the interviewer to be on the same page.

下面的估算基于很多假设,与面试官交流并达成共识很重要。

- Assume 1 billion web pages are downloaded every month. 假设每个月要下载10亿个网页。

- QPS: 1,000,000,000 / 30 days / 24 hours / 3600 seconds = ~400 pages per second. QPS:1,000,000,000÷30÷24÷3600≈400,即每秒约400个网页。

- Peak QPS = 2 * QPS = 800 峰值QPS=2×QPS=800。

- Assume the average web page size is 500k. 假设平均每个网页的大小是500KB。

- 1-billion-page x 500k = 500 TB storage per month. If you are unclear about digital storage units, go through “Power of 2” section in the "Back-of-the-envelope Estimation" chapter again. 每月需要存储1,000,000,000×500KB=500TB。如果你不太熟悉存储单位的含义,请重新阅读第2章的2.1节。

- Assuming data are stored for five years, 500 TB * 12 months * 5 years = 30 PB. A 30 PB storage is needed to store five-year content. 假设数据要保存5年,则500TB×12×5=30PB,即需要30PB的存储空间来保存5年的内容。

2 Step 2 - Propose high-level design and get buy-in 第二步 提议高层级的设计并获得认同

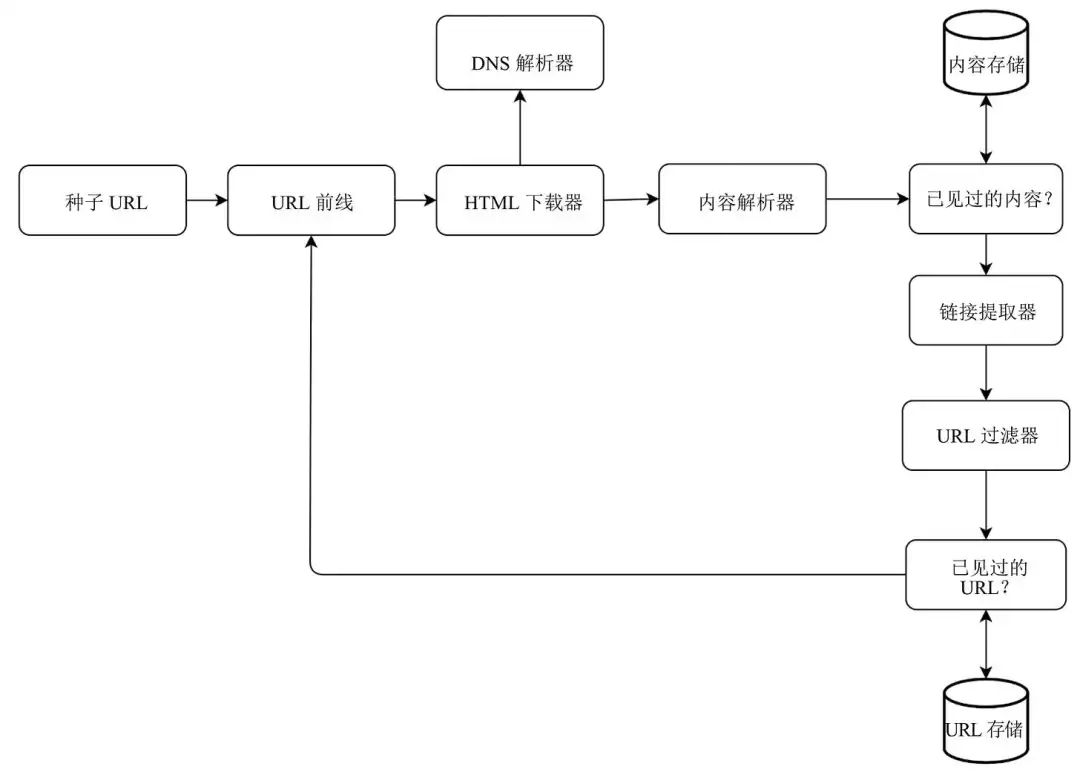

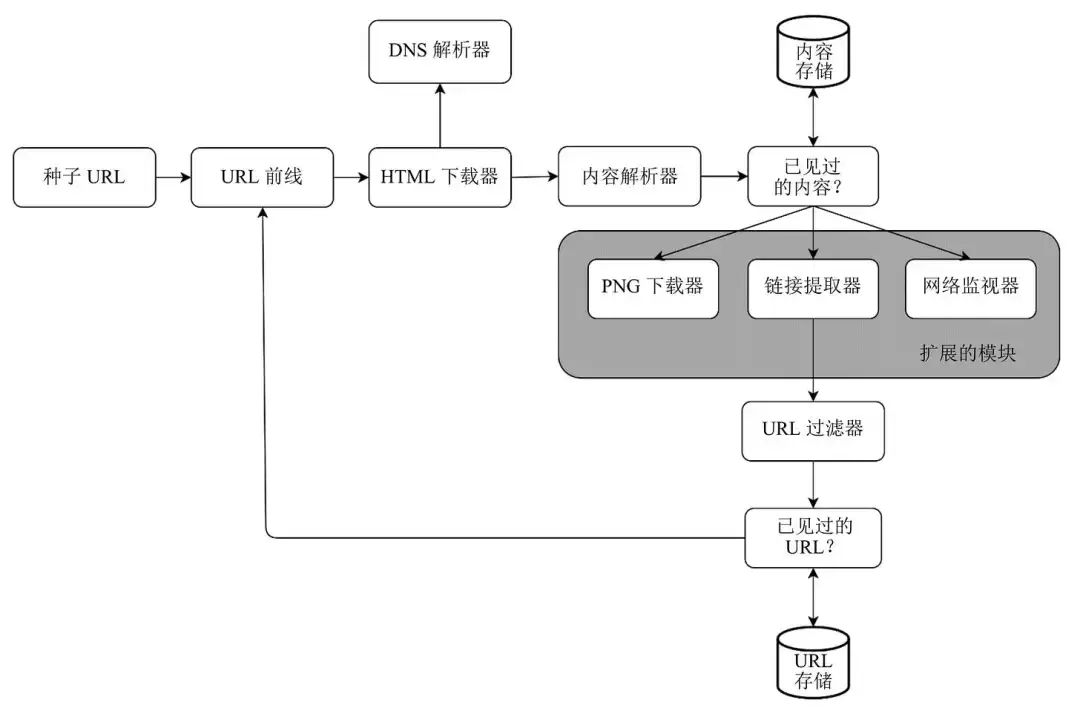

Once the requirements are clear, we move on to the high-level design. Inspired by previous studies on web crawling, we propose a high-level design as shown in Figure 2.

一旦明确了需求,我们就可以考虑高层级设计了。受前人关于爬虫研究的启发,我们提出如图2所示的高层级设计。

First, we explore each design component to understand their functionalities. Then, we examine the crawler workflow step-by-step.

首先,我们探索每个组件以了解它们的功能,然后一步步分析这个爬虫的工作流程。

Seed URLs

种子URL

A web crawler uses seed URLs as a starting point for the crawl process. For example, to crawl all web pages from a university’s website, an intuitive way to select seed URLs is to use the university’s domain name.

爬虫使用种子URL作为爬取的起点。例如,要爬取一所大学网站上的所有网页,直观的方法是用该大学的域名作为种子URL。

To crawl the entire web, we need to be creative in selecting seed URLs. A good seed URL serves as a good starting point that a crawler can utilize to traverse as many links as possible. The general strategy is to divide the entire URL space into smaller ones. The first proposed approach is based on locality as different countries may have different popular websites. Another way is to choose seed URLs based on topics; for example, we can divide URL space into shopping, sports, healthcare, etc. Seed URL selection is an open-ended question. You are not expected to give the perfect answer. Just think out loud.

为了爬取整个网络,我们需要有创意地选择种子URL。好的种子URL让我们有一个好的起点,爬虫可以利用它来遍历尽可能多的链接。一般的策略是把整个URL空间分成若干小块。因为不同国家可能有不同的热门网站,所以我们提议的第一个方法是基于物理位置来划分。另一个方法是基于话题来选择种子URL,比如我们可以把URL空间分为购物、体育、健康等部分。种子URL的选择是一个开放式问题。你不必给出完美的答案。只要边想边说出来就好。

URL Frontier

URL前线

Most modern web crawlers split the crawl state into two: to be downloaded and already downloaded. The component that stores URLs to be downloaded is called the URL Frontier. You can refer to this as a First-in-First-out (FIFO) queue. For detailed information about the URL Frontier, refer to the deep dive.

大部分现代爬虫把爬取状态分为两种:即将下载和已经下载。用来存储即将下载的URL的组件叫URL前线。你可以把它看作一个先进先出(FIFO)的队列。关于URL前线的详细信息,将在3.2节讲解。

HTML Downloader

HTML下载器

The HTML downloader downloads web pages from the internet. Those URLs are provided by the URL Frontier.

HTML下载器从互联网上下载网页。要下载的URL由URL前线来提供。

DNS Resolver

DNS解析器

To download a web page, a URL must be translated into an IP address. The HTML Downloader calls the DNS Resolver to get the corresponding IP address for the URL. For instance, URL www.wikipedia.org is converted to IP address 198.35.26.96 as of 3/5/2019.

要下载网页,必须将URL转换成IP地址。HTML下载器请求DNS解析器来获取URL对应的IP地址。举个例子,截至2019年5月3日,www.wikipedia.org会被转换成198.35.26.96这个IP地址。

Content Parser

内容解析器

After a web page is downloaded, it must be parsed and validated because malformed web pages could provoke problems and waste storage space. Implementing a content parser in a crawl server will slow down the crawling process. Thus, the content parser is a separate component.

网页被下载以后,必须进行解析和校验,因为有问题的网页可能会引发问题且浪费存储空间。在爬虫服务器中实现内容解析器会减慢爬虫的速度。因此,内容解析器是一个独立的组件。

Content Seen?

已见过的内容?

Online research reveals that 29% of the web pages are duplicated contents, which may cause the same content to be stored multiple times. We introduce the “Content Seen?” data structure to eliminate data redundancy and shorten processing time. It helps to detect new content previously stored in the system. To compare two HTML documents, we can compare them character by character. However, this method is slow and time-consuming, especially when billions of web pages are involved. An efficient way to accomplish this task is to compare the hash values of the two web pages.

有研究显示,29%的网页是重复内容,这可能导致同样的内容被多次存储。我们引入了“已见过的内容?”数据结构,来消除数据冗余和缩短处理时间。它帮助检测内容是否已存储在系统中。在比较两个HTML文件时,我们可以逐字符地比较。但是这个方法太慢且耗时,特别是有数十亿的网页要比较时。完成这个任务的一个高效方法是比较两个网页的哈希值。

Content Storage

内容存储

It is a storage system for storing HTML content. The choice of storage system depends on factors such as data type, data size, access frequency, life span, etc. Both disk and memory are used.

它是存储HTML内容的存储系统。选择什么样的存储系统,取决于数据的类型、大小、访问频率、生命周期等因素。硬盘和内存都被用到。

- Most of the content is stored on disk because the data set is too big to fit in memory. 大部分内容存储在硬盘中,因为数据集太大,内存装不下。

- Popular content is kept in memory to reduce latency. 热门内容被存储在内存中以降低延时。

URL Extractor

URL提取器

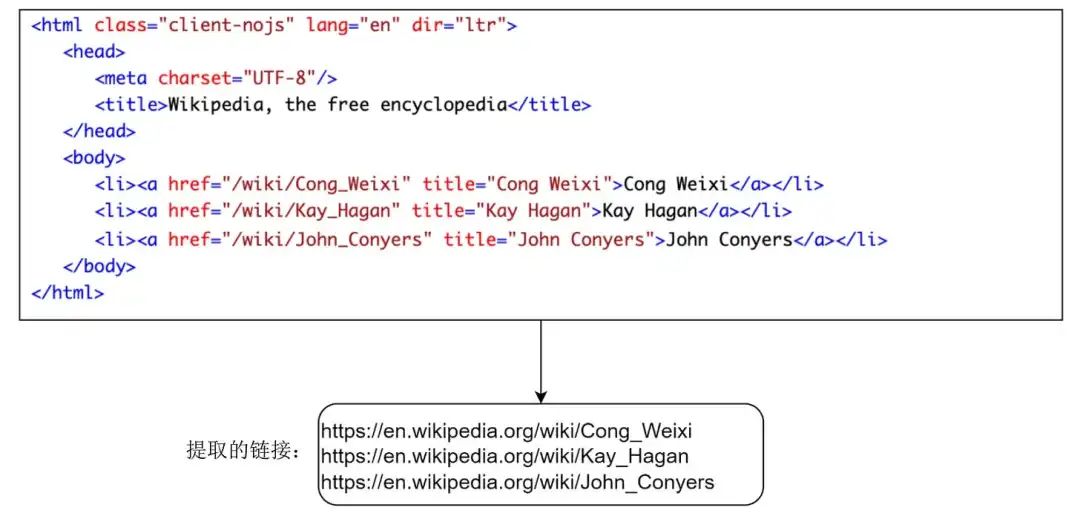

URL Extractor parses and extracts links from HTML pages. Figure 3 shows an example of a link extraction process. Relative paths are converted to absolute URLs by adding the “https://en.wikipedia.org” prefix.

URL提取器从HTML页面中解析和提取链接。图3展示了链接提取过程。通过添加前缀“https://en.wikipedia.org”,相对路径被转换成绝对URL。

URL Filter

URL过滤器

The URL filter excludes certain content types, file extensions, error links and URLs in “blacklisted” sites.

URL过滤器用于排除特定内容类型、文件扩展名、问题链接和“黑名单”网站的URL。

URL Seen?

已见过的URL?

“URL Seen?” is a data structure that keeps track of URLs that are visited before or already in the Frontier. “URL Seen?” helps to avoid adding the same URL multiple times as this can increase server load and cause potential infinite loops.

“已见过的URL?”是一种数据结构,可以用来追踪记录已访问过的或者已经在URL前线里的URL。“已见过的URL?”可以帮我们避免多次添加同一个URL,重复添加URL会增加服务器负载并导致潜在的无限循环。

Bloom filter and hash table are common techniques to implement the “URL Seen?” component. We will not cover the detailed implementation of the bloom filter and hash table here. For more information, refer to the reference materials.

布隆过滤器和哈希表都是实现“已见过的URL?”组件的常见技术。在这里我们不会介绍布隆过滤器和哈希表的详细实现细节。如果你感兴趣,请参考Burton H.Bloom的文章“Space/Time Trade-Offs in Hash Coding with Allowable Errors”,以及Allan Heydon与Marc Najork合著的文章“Mercator:A Scalable,Extensible Web Crawler”。

URL Storage

URL存储

URL Storage stores already visited URLs.

URL存储用于保存已访问过的URL。

So far, we have discussed every system component. Next, we put them together to explain the workflow.

到目前为止,我们讨论了所有系统组件。接下来,我们把它们组合在一起来解释爬虫的工作流程。

Web crawler workflow

爬虫工作流程

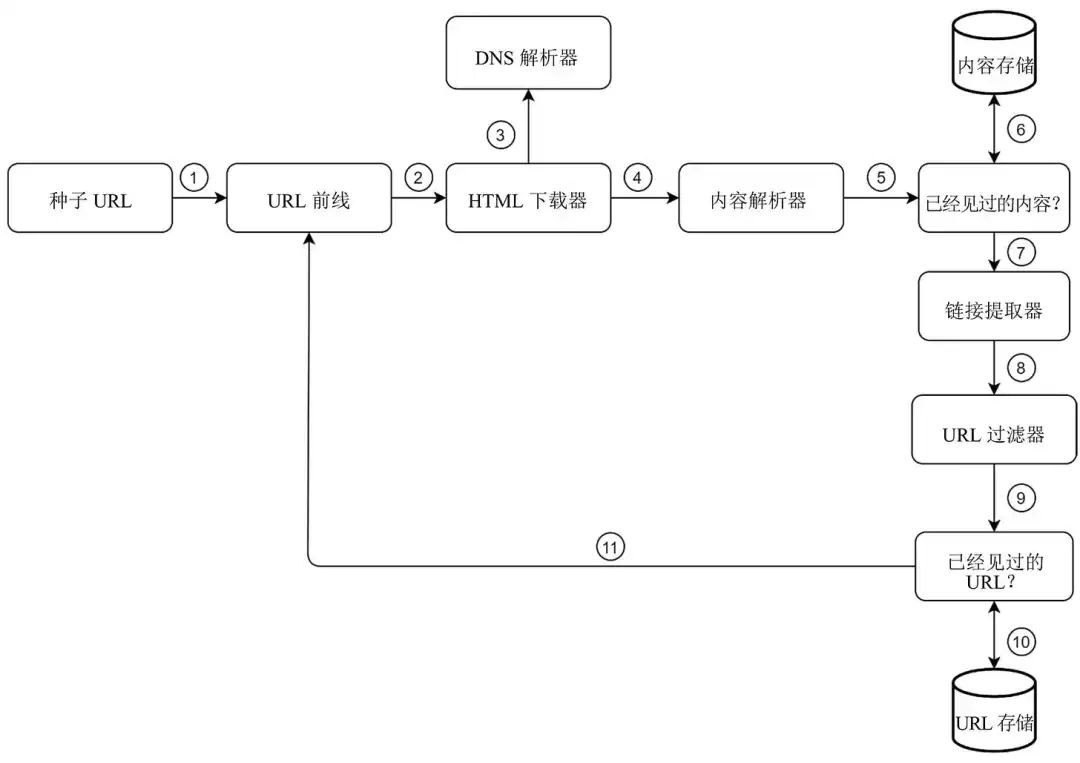

To better explain the workflow step-by-step, sequence numbers are added in the design diagram as shown in Figure 4.

为了更好地分步骤解释爬虫工作流程,我们在设计图里加了序号,如图4所示。

Step 1: Add seed URLs to the URL Frontier

第1步:将种子URL添加到URL前线中。

Step 2: HTML Downloader fetches a list of URLs from URL Frontier.

第2步:HTML下载器从URL前线中获取URL列表。

Step 3: HTML Downloader gets IP addresses of URLs from DNS resolver and starts downloading.

第3步:HTML下载器从DNS解析器中获取URL对应的IP地址并开始下载。

Step 4: Content Parser parses HTML pages and checks if pages are malformed.

第4步:内容解析器解析HTML页面并检查页面是否有问题。

Step 5: After content is parsed and validated, it is passed to the “Content Seen?” component.

第5步:内容解析器将解析和验证后的内容传给“已见过的内容?”组件。

Step 6: “Content Seen” component checks if a HTML page is already in the storage.

第6步:“已见过的内容?”组件检查这个HTML页面是否已经在数据库中。

- If it is in the storage, this means the same content in a different URL has already been processed. In this case, the HTML page is discarded. 如果页面已经在数据库中,意味着包含同样的内容的不同URL已经被处理过。在这种情况下,这个HTML页面会被丢弃。

- If it is not in the storage, the system has not processed the same content before. The content is passed to Link Extractor. 如果页面不在数据库中,表示系统还没有处理过相同的内容。该页面将被传递给链接提取器。

Step 7: Link extractor extracts links from HTML pages.

第7步:链接提取器从HTML页面中提取链接。

Step 8: Extracted links are passed to the URL filter.

第8步:提取出来的链接被传递给URL过滤器进行筛选。

Step 9: After links are filtered, they are passed to the “URL Seen?” component.

第9步:经过筛选的链接被传递给“已见过的URL?”组件。

Step 10: “URL Seen” component checks if a URL is already in the storage, if yes, it is processed before, and nothing needs to be done.

第10步:“已见过的URL?”组件检查这个URL是否已经在数据库中,如果是,则意味着它之前被处理过,无须再做处理。

Step 11: If a URL has not been processed before, it is added to the URL Frontier.

第11步:如果这个URL之前没有被处理过,就将被添加到URL前线中。

3 Step 3 - Design deep dive 第三步 设计继续深入

Up until now, we have discussed the high-level design. Next, we will discuss the most important building components and techniques in depth:

到目前为止,我们已经讨论过高层级的设计。接下来,我们将深入讨论几个最重要的构建组件和技术。

- Depth-first search (DFS) vs Breadth-first search (BFS) 深度优先搜索(DFS)与广度优先搜索(BFS)

- URL frontier URL前线

- HTML Downloader HTML下载器

- Robustness 健壮性

- Extensibility 可扩展性

- Detect and avoid problematic content 检测和避免有问题的内容

3.1 DFS vs BFS DFS vs.BFS

You can think of the web as a directed graph where web pages serve as nodes and hyperlinks (URLs) as edges. The crawl process can be seen as traversing a directed graph from one web page to others. Two common graph traversal algorithms are DFS and BFS. However, DFS is usually not a good choice because the depth of DFS can be very deep.

你可以把网络想成一个有向图,其中网页就是节点,超链接(URL)是边。爬虫在网络上爬行的过程可以看作是从一个网页到其他网页的有向图遍历。常见的两种图遍历算法是DFS和BFS。但是,因为DFS的深度可能非常深,所以它通常不是一个好的选择。

BFS is commonly used by web crawlers and is implemented by a first-in-first-out (FIFO) queue. In a FIFO queue, URLs are dequeued in the order they are enqueued. However, this implementation has two problems:

BFS是爬虫常用的方法,通过先进先出(FIFO)队列来实现。在一个FIFO队列中,URL按照它们入列的顺序出列。尽管如此,这种实现方式还有以下两个问题。

- Most links from the same web page are linked back to the same host. In Figure 5, all the links in wikipedia.com are internal links, making the crawler busy processing URLs from the same host (wikipedia.com). When the crawler tries to download web pages in parallel, Wikipedia servers will be flooded with requests. This is considered as “impolite”. 同一个网页的大部分链接都指向同一个主机。如图5所示,wikipedia.com中的所有链接都是内部链接,这使得爬虫忙于处理来自同一个主机(wikipedia.com)的URL。当爬虫尝试并行下载网页时,维基百科的服务器会被大量请求“淹没”。这样做被认为是“不礼貌”的。

- Standard BFS does not take the priority of a URL into consideration. The web is large and not every page has the same level of quality and importance. Therefore, we may want to prioritize URLs according to their page ranks, web traffic, update frequency, etc. 标准的BFS并没有考虑URL的优先级。互联网很大,不是每个网页都有同样水平的质量和同等重要性。因此,我们可能想要基于网页的排名、网络流量、更新频率等对URL进行排序,以便优先处理某些网页。

3.2 URL frontier URL前线

URL frontier helps to address these problems. A URL frontier is a data structure that stores URLs to be downloaded. The URL frontier is an important component to ensure politeness, URL prioritization, and freshness. A few noteworthy papers on URL frontier are mentioned in the reference materials. The findings from these papers are as follows:

URL前线帮我们解决了这些问题。URL前线是一个重要组件,它是一个存储待下载URL的数据结构,能确保爬虫礼貌地访问网页,确定URL优先级并保证内容新鲜度。关于URL前线,建议细读Christopher Olston与Marc Najork合著的文章“Web Crawling”。这篇文章给出了如下结论。

Politeness 礼貌性

Generally, a web crawler should avoid sending too many requests to the same hosting server within a short period. Sending too many requests is considered as “impolite” or even treated as denial-of-service (DOS) attack. For example, without any constraint, the crawler can send thousands of requests every second to the same website. This can overwhelm the web servers.

一般来说,爬虫应该避免在短时间内对同一个服务器发送太多的请求。发送过多请求会被认为“不礼貌”,甚至可能被视为拒绝服务攻击(DoS)。举个例子,如果没有任何限制,爬虫可以对同一个网站每秒发送数千个请求。但这可能会让Web服务器不堪重负。

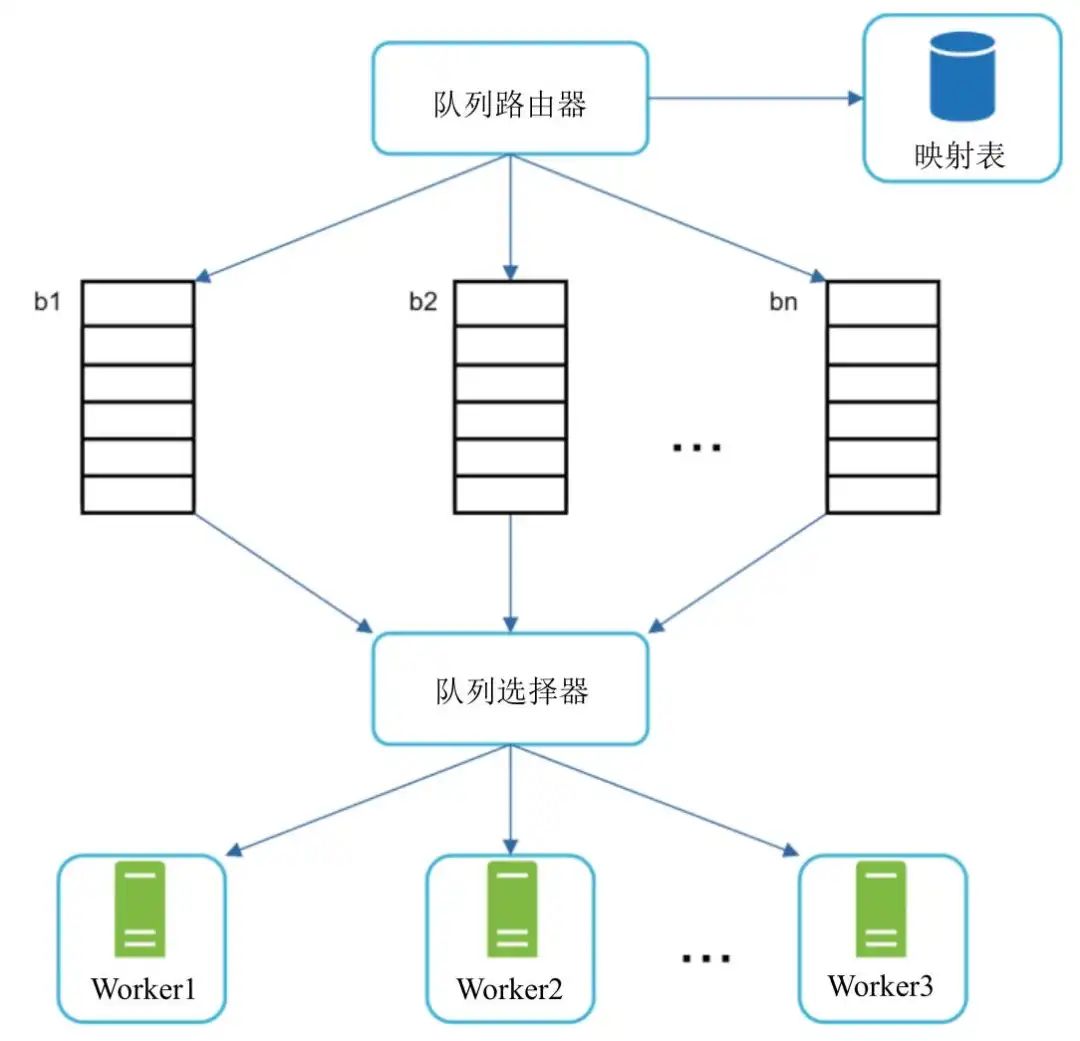

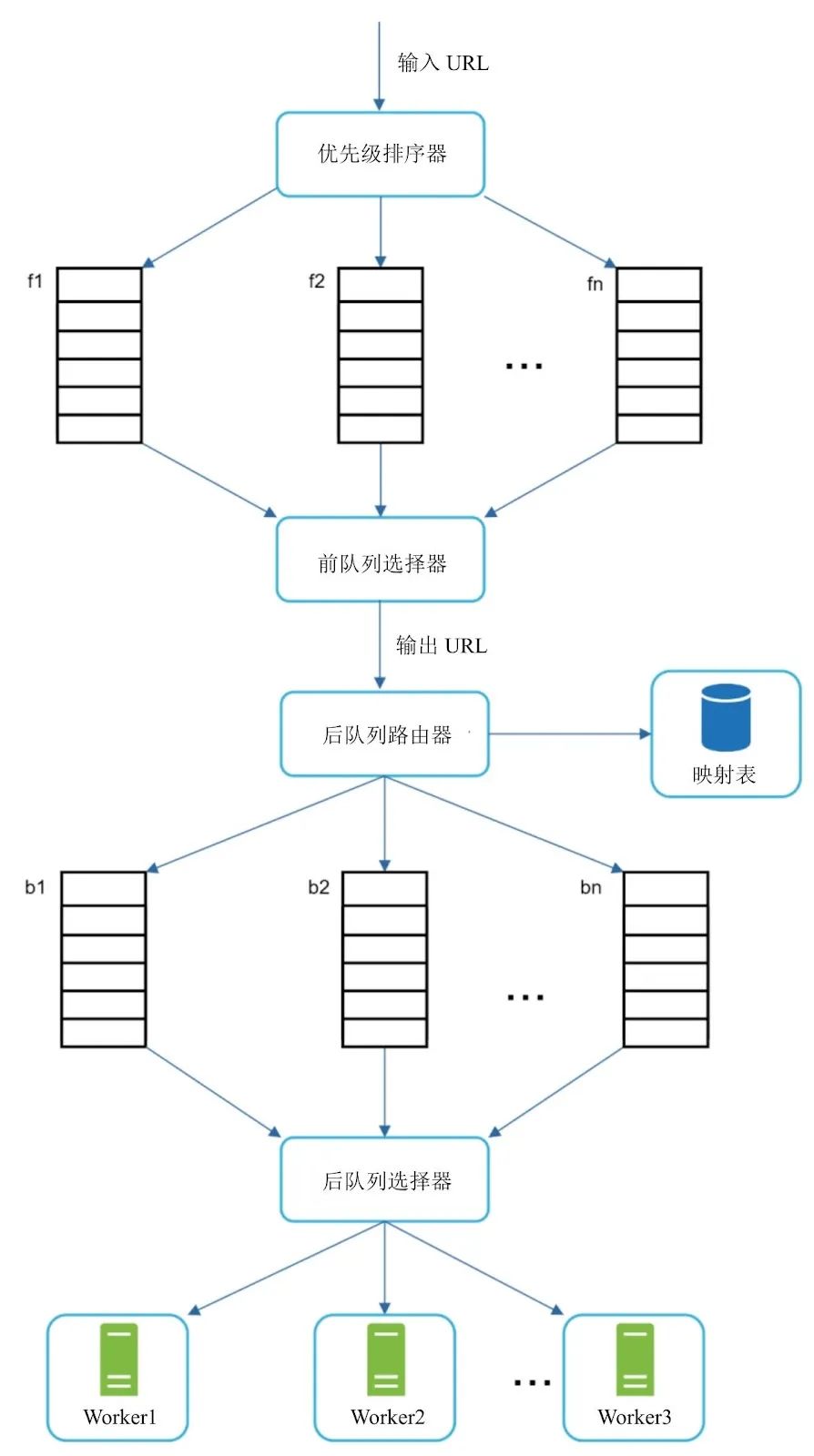

The general idea of enforcing politeness is to download one page at a time from the same host. A delay can be added between two download tasks. The politeness constraint is implemented by maintain a mapping from website hostnames to download (worker) threads. Each downloader thread has a separate FIFO queue and only downloads URLs obtained from that queue. Figure 6 shows the design that manages politeness.

确保礼貌性的大致思路是,从同一个主机每次只下载一个网页。可以在两次下载任务之间加入一定的延时。礼貌性约束是通过维护网站主机名和下载线程(Worker)的映射来实现的。每个下载线程都有独立的FIFO队列且只下载这个队列里的URL。图6展示了实现爬虫礼貌性的设计。

- Queue router: It ensures that each queue (b1, b2, … bn) only contains URLs from the same host. 队列路由器:确保每个队列(b1,b2,…,bn)只包含来自同一个主机的URL。

- Mapping table: It maps each host to a queue. 映射表:把每个主机映射到队列中(见表1)。

- FIFO queues b1, b2 to bn: Each queue contains URLs from the same host. FIFO队列(从b1到bn):每个队列只包含来自同一个主机的URL。

- Queue selector: Each worker thread is mapped to a FIFO queue, and it only downloads URLs from that queue. The queue selection logic is done by the Queue selector. 队列选择器:每个Worker都被映射到一个FIFO队列,它只下载来自这个队列的URL。队列选择器实现队列选择的逻辑。

- Worker thread 1 to N. A worker thread downloads web pages one by one from the same host. A delay can be added between two download tasks. 下载线程(Worker1到WorkerN):Worker一个接一个地下载来源于同一个主机的网页。在两个下载任务之间可以加入延时。

Priority 优先级

A random post from a discussion forum about Apple products carries very different weight than posts on the Apple home page. Even though they both have the “Apple” keyword, it is sensible for a crawler to crawl the Apple home page first.

某论坛上的一篇关于苹果产品的随机帖子与苹果官网上的文章相比,权重的差异很大。尽管它们都含有“苹果”这个关键字,但是对爬虫而言,先爬取苹果官网的网页肯定是更明智的选择。

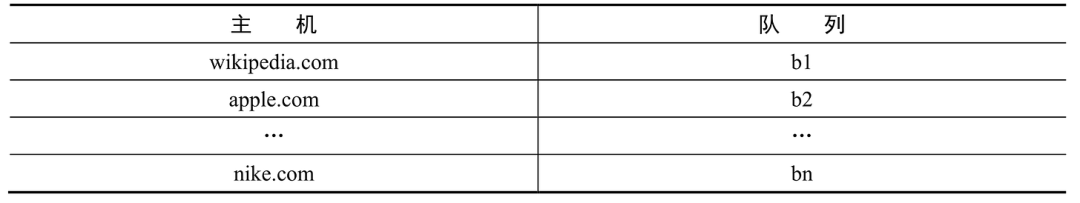

We prioritize URLs based on usefulness, which can be measured by PageRank, website traffic, update frequency, etc. “Prioritizer” is the component that handles URL prioritization. Refer to the reference materials for in-depth information about this concept.

我们对URL基于有用性来排优先级,可以通过PageRank、网站流量、更新频率等指标来度量。“优先级排序器”(Prioritizer)是处理URL优先级排序的组件。请参阅相关文章来了解关于这个组件的更多信息。

Figure 7 shows the design that manages URL priority.

图7展示了实现URL优先级排序的设计。

- Prioritizer: It takes URLs as input and computes the priorities. 优先级排序器:它接收URL作为输入并计算其优先级。

- Queue f1 to fn: Each queue has an assigned priority. Queues with high priority are selected with higher probability. 队列f1到fn:每个队列都有一个设定好的优先级。优先级高的队列有更高的概率被选中。

- Queue selector: Randomly choose a queue with a bias towards queues with higher priority. 队列选择器:从多个队列中随机选择一个,尽管优先级高的队列有更高的概率被选中,但这并不是绝对确定的,仍然存在一定的随机性。

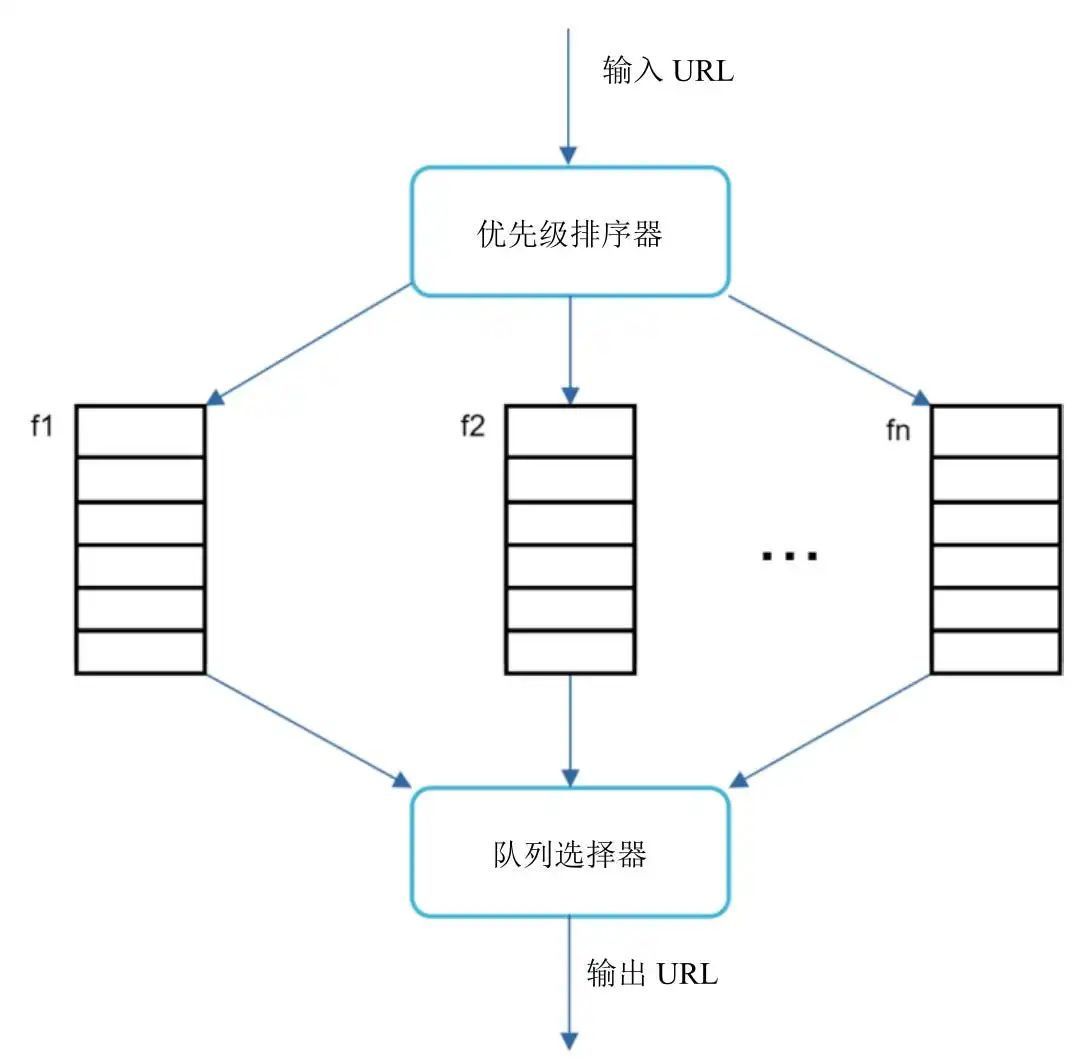

Figure 8 presents the URL frontier design, and it contains two modules:

图8展示了URL前线的设计,它包含两个模块。

- Front queues: manage prioritization 前队列:实现优先级管理。

- Back queues: manage politeness 后队列:实现礼貌性管理。

Freshness 新鲜度

Web pages are constantly being added, deleted, and edited. A web crawler must periodically recrawl downloaded pages to keep our data set fresh. Recrawl all the URLs is time-consuming and resource intensive. Few strategies to optimize freshness are listed as follows:

由于互联网上不断有网页被添加、删除和修改,所以爬虫必须定期重新爬取下载过的网页,以确保数据是最新的。重新爬取所有的URL是非常消耗时间和资源的。下面是两个优化新鲜度的策略。

- Recrawl based on web pages’ update history. 根据网页的更新历史来判断是否重新爬取。

- Prioritize URLs and recrawl important pages first and more frequently. 对URL按优先级排序,并且优先频繁地重新爬取重要的网页。

Storage for URL Frontier URL前线的存储

In real-world crawl for search engines, the number of URLs in the frontier could be hundreds of millions. Putting everything in memory is neither durable nor scalable. Keeping everything in the disk is undesirable neither because the disk is slow; and it can easily become a bottleneck for the crawl.

在搜索引擎的实际爬取过程中,URL前线中的URL数量可能上亿。把所有内容放在内存中,既不可持续也不可扩展;而把所有内容放在硬盘中也不是理想的方案,因为硬盘的访问速度很慢,很容易成为爬虫爬取数据的瓶颈。

We adopted a hybrid approach. The majority of URLs are stored on disk, so the storage space is not a problem. To reduce the cost of reading from the disk and writing to the disk, we maintain buffers in memory for enqueue/dequeue operations. Data in the buffer is periodically written to the disk.

我们采用了一种混合方案。将大部分的URL存储在硬盘上,这样存储空间就不是问题。为了降低从硬盘读/写的开销,我们在内存中维护了缓冲区以进行入队/出队操作。缓冲区中的数据会被定期写入硬盘。

3.3 HTML Downloader HTML下载器

The HTML Downloader downloads web pages from the internet using the HTTP protocol. Before discussing the HTML Downloader, we look at Robots Exclusion Protocol first ——robots.txt.

HTML下载器通过HTTP协议从互联网下载网页。在讨论HTML下载器之前,我们先看看机器人排除协议(Robots Exclusion Protocol)——robots.txt。

Robots.txt, called Robots Exclusion Protocol, is a standard used by websites to communicate with crawlers. It specifies what pages crawlers are allowed to download. Before attempting to crawl a web site, a crawler should check its corresponding robots.txt first and follow its rules.

robot.txt是网站和爬虫之间通信的标准。它标明了允许爬虫下载哪些网页。在尝试爬取一个网站之前,爬虫应该先检查对应的robots.txt并遵守其中的规则。

To avoid repeat downloads of robots.txt file, we cache the results of the file. The file is downloaded and saved to cache periodically. Here is a piece of robots.txt file taken from https://www.amazon.com/robots.txt. Some of the directories like creatorhub are disallowed for Google bot.

为了避免重复下载robots.txt文件,我们会缓存这个文件的结果。这个文件会被定期下载并保存在缓存中。下面是从https://www.amazon.com/robots.txt中截取的一段robots.txt文件内容。其中规定了如creatorhub之类的目录是不允许谷歌机器人爬取的。

User-agent: Googlebot

Disallow: /creatorhub/\*

Disallow: /rss/people/\*/reviews

Disallow: /gp/pdp/rss/\*/reviews

Disallow: /gp/cdp/member-reviews/

Disallow: /gp/aw/cr/

Besides robots.txt, performance optimization is another important concept we will cover for the HTML downloader.

除了robots.txt,性能优化是HTML下载器中的另一个重要概念。

Performance optimization 性能优化

Below is a list of performance optimizations for HTML downloader.

下面是HTML下载器的性能优化方法。

- Distributed crawl 分布式爬取

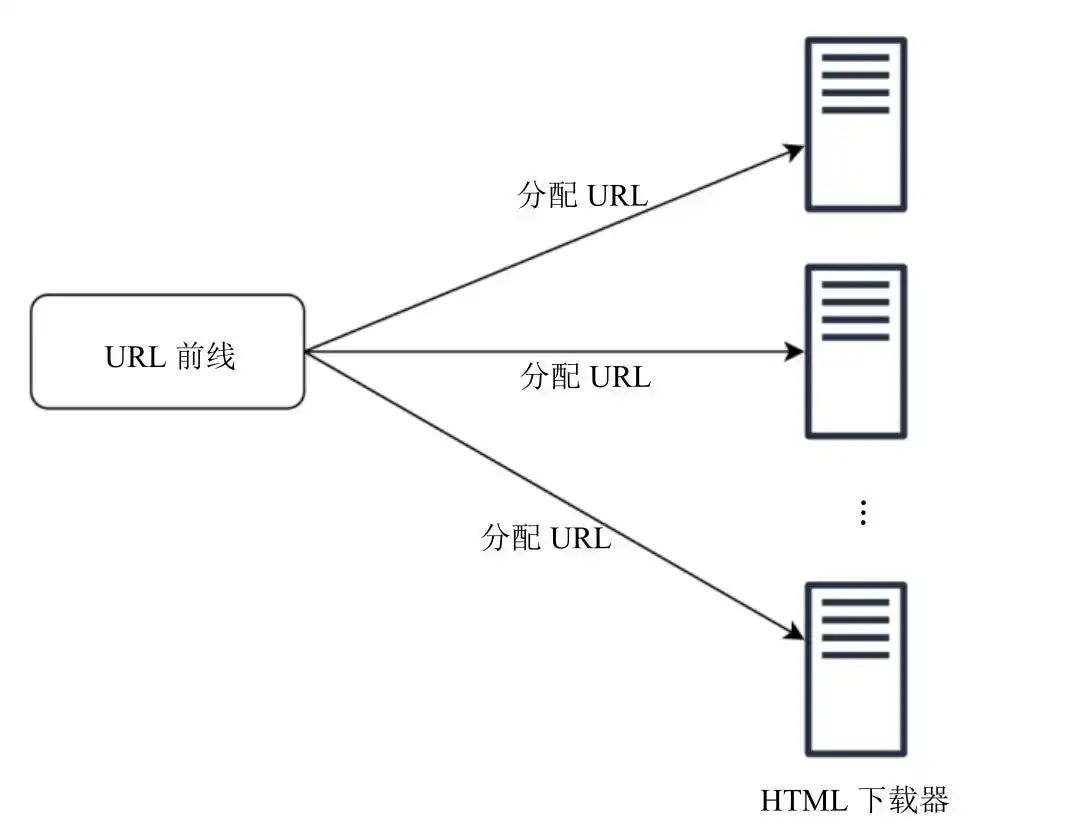

To achieve high performance, crawl jobs are distributed into multiple servers, and each server runs multiple threads. The URL space is partitioned into smaller pieces; so, each downloader is responsible for a subset of the URLs. Figure 9 shows an example of a distributed crawl.

为了实现高性能,爬取任务被分配给多个服务器,每个服务器中运行着多个线程。URL空间被分成较小的部分,这样每个下载器只负责处理一部分URL。图9展示了一个分布式爬取的例子。

- Cache DNS Resolver 缓存DNS解析器

DNS Resolver is a bottleneck for crawlers because DNS requests might take time due to the synchronous nature of many DNS interfaces. DNS response time ranges from 10ms to 200ms. Once a request to DNS is carried out by a crawler thread, other threads are blocked until the first request is completed. Maintaining our DNS cache to avoid calling DNS frequently is an effective technique for speed optimization. Our DNS cache keeps the domain name to IP address mapping and is updated periodically by cron jobs.

因为很多DNS接口是同步的,所以DNS请求可能要花较长时间,导致DNS解析器成为爬虫的一个性能瓶颈。DNS响应时间在10ms到200ms之间。一旦爬虫的一个线程发出DNS请求,其他线程就会被阻塞,直到该DNS请求完成。维护DNS缓存,避免频繁向DNS服务器发起请求,是一个提高爬虫爬行速度的有效技术。我们的DNS缓存保存了域名与IP地址之间的映射,并通过定时任务进行更新。

- Locality 本地性

Distribute crawl servers geographically. When crawl servers are closer to website hosts, crawlers experience faster download time. Design locality applies to most of the system components: crawl servers, cache, queue, storage, etc.

将爬虫服务器按地理位置分布。爬虫服务器离网站主机越近,爬虫的下载速度会越快。本地性设计可以应用到大部分系统组件上:爬虫服务器、缓存、队列、存储等。

- Short timeout 短超时时间

Some web servers respond slowly or may not respond at all. To avoid long wait time, a maximal wait time is specified. If a host does not respond within a predefined time, the crawler will stop the job and crawl some other pages.

一些Web服务器响应慢或者根本不响应。为了避免长时间等待,需要确定一个最长等待时间。如果一个主机在预定时间内没有响应,爬虫就会停止该任务转而爬取其他页面。

3.4 Robustness 健壮性

Besides performance optimization, robustness is also an important consideration. We present a few approaches to improve the system robustness:

除了性能优化,健壮性也是一个重要的考虑因素。以下是一些提升系统健壮性的方法。

- Consistent hashing: This helps to distribute loads among downloaders. A new downloader server can be added or removed using consistent hashing. Refer to the "Design consistent hashing" chapter for more details. 一致性哈希:有助于负载在HTML下载器之间均匀分布。使用一致性哈希,可以添加或者移除新的下载器服务器。可参考第5章了解关于一致性哈希的更多细节。

- Save crawl states and data: To guard against failures, crawl states and data are written to a storage system. A disrupted crawl can be restarted easily by loading saved states and data. 保存爬取状态和数据:为了应对故障,将爬取状态和数据写入存储系统。通过加载保存的爬取状态和数据,可以很容易地重启被中断的爬取过程。

- Exception handling: Errors are inevitable and common in a large-scale system. The crawler must handle exceptions gracefully without crashing the system. 异常处理:在大型系统中,错误是无法避免的,出错是很常见的事情。爬虫必须能“得体地”处理异常,避免系统崩溃。

- Data validation: This is an important measure to prevent system errors. 数据校验:这是防止系统错误的重要措施。

3.5 Extensibility 可扩展性

As almost every system evolves, one of the design goals is to make the system flexible enough to support new content types. The crawler can be extended by plugging in new modules. Figure 10 shows how to add new modules.

因为几乎所有系统都在演进,所以系统的设计目标之一就是要足够灵活以支持新的内容类型。爬虫可以通过插入新的模块来进行扩展。图10展示了如何添加新模块。

- PNG Downloader module is plugged-in to download PNG files. PNG下载器模块作为插件被添加进来,用于下载PNG文件。

- Web Monitor module is added to monitor the web and prevent copyright and trademark infringements. 网络监视器模块作为插件被添加进来,用于监控网络,以避免版权和商标侵权。

3.6 Detect and avoid problematic content 检测和避免有问题的内容

This section discusses the detection and prevention of redundant, meaningless, or harmful content.

本节讨论检测及避免重复、无意义或者有害内容的方法。

- Redundant content 重复内容

As discussed previously, nearly 30% of the web pages are duplicates. Hashes or checksums help to detect duplication.

如前所述,接近30%的网页是重复的。哈希和校验和(Checksum)可以帮助我们检测出重复内容。

- Spider traps 蜘蛛陷阱

A spider trap is a web page that causes a crawler in an infinite loop. For instance, an infinite deep directory structure is listed as follows: http://www.spidertrapexample.com/foo/bar/foo/bar/foo/bar/ … Such spider traps can be avoided by setting a maximal length for URLs. However, no one-size-fits-all solution exists to detect spider traps. Websites containing spider traps are easy to identify due to an unusually large number of web pages discovered on such websites. It is hard to develop automatic algorithms to avoid spider traps; however, a user can manually verify and identify a spider trap, and either exclude those websites from the crawler or apply some customized URL filters.

蜘蛛陷阱是可以导致爬虫陷入无限循环的网页,例如,一个无限深的目录结构www.spidertrapexample.com/foo/bar/foo/bar/foo/bar/…。可以通过设置最大URL长度来避免这样的蜘蛛陷阱。尽管如此,并不存在检测蜘蛛陷阱的通用解决方案。含有蜘蛛陷阱的网站是容易识别的,因为在这种网站上网页的数量异常多。但是很难开发出一个自动算法来躲避蜘蛛陷阱。不过,用户可以手动验证和识别蜘蛛陷阱,然后要么在爬取时排除这些网站,要么应用一些定制的URL过滤器。

- Data noise 数据噪声

Some of the contents have little or no value, such as advertisements, code snippets, spam URLs, etc. Those contents are not useful for crawlers and should be excluded if possible.

有些内容只有很少的或者根本没有任何价值,比如广告、代码片段、垃圾邮件URL等。这些内容对于爬虫来说没有用,如果有可能,应该将其排除。

4 Step 4 - Wrap up 第四步 总结

In this chapter, we first discussed the characteristics of a good crawler: scalability, politeness, extensibility, and robustness. Then, we proposed a design and discussed key components. Building a scalable web crawler is not a trivial task because the web is enormously large and full of traps. Even though we have covered many topics, we still miss many relevant talking points:

在本章中,我们先讨论了好爬虫的特点——它应该具有可扩展性、礼貌性和健壮性。接着,我们给出了设计方案并讨论了关键组件。因为互联网异常庞大且充满陷阱,所以创建一个可扩展的爬虫并非易事。即使讨论了很多内容,但我们依然漏掉了很多相关的讨论点,比如:

- Server-side rendering: Numerous websites use scripts like JavaScript, AJAX, etc to generate links on the fly. If we download and parse web pages directly, we will not be able to retrieve dynamically generated links. To solve this problem, we perform server-side rendering (also called dynamic rendering) first before parsing a page. 服务端渲染。无数网站使用JavaScript、AJAX等脚本来动态生成链接。如果直接下载和解析网页,我们并不能获取这些动态生成的链接。为了解决这个问题,我们会在解析网页之前先进行服务器端渲染(也叫动态渲染)。

- Filter out unwanted pages: With finite storage capacity and crawl resources, an anti-spam component is beneficial in filtering out low quality and spam pages. 滤掉不想要的网页。因为存储容量和爬虫资源是有限的,使用反垃圾组件,有助于滤掉低质量的垃圾页面。

- Database replication and sharding: Techniques like replication and sharding are used to improve the data layer availability, scalability, and reliability. 数据库复制和分片。复制和分片等技术可以增强数据层的可用性、可扩展性和可靠性。

- Horizontal scaling: For large scale crawl, hundreds or even thousands of servers are needed to perform download tasks. The key is to keep servers stateless. 横向扩展。对于大范围的爬取,需要成百上千的服务器来执行下载任务。保持服务器无状态是关键。

- Availability, consistency, and reliability: These concepts are at the core of any large system’s success. 可用性、一致性和可靠性。这些概念是任何大型系统成功的核心。

- Analytics: Collecting and analyzing data are important parts of any system because data is key ingredient for fine-tuning. 数据分析。收集和分析数据对任何系统来说都很重要,因为数据是优化系统的关键要素。

Congratulations on getting this far! Now give yourself a pat on the back. Good job!

恭喜你已经看到这里了。给自己一些鼓励。干得不错!

本文参与 腾讯云自媒体同步曝光计划,分享自微信公众号。

原始发表:2024-04-07,如有侵权请联系 cloudcommunity@tencent.com 删除

评论

登录后参与评论

推荐阅读

目录