Getting Started with High-Performance Computing (HPC): A Detailed Guide, Part 2

Continued from the previous article: https://cloud.tencent.com/developer/article/2508936

MPI layer frameworks

Here is a list of all the component frameworks in the MPI layer of Open MPI:

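- bml: BTL management layer
- coll: MPI collective algorithms
- fbtl: point to point file byte transfer layer: abstraction for individual (non-collective) read and write operations for MPI I/O
- fcoll: collective file system functions for MPI I/O
- fs: file system functions for MPI I/O
- hook: Generic hooks into Open MPI
- io: MPI I/O
- mtl: Matching transport layer, used for MPI point-to-point messages on some types of networks
- op: Back end computations for intrinsic MPI_Op operators
- osc: MPI one-sided communications
- pml: MPI point-to-point management layer

coll, the Collective Communication Interface: the interface used to implement MPI's collective communication operations. The MPI interface layer provides error checking and error handler invocation; the coll components provide all other functionality.

Component selection is done per communicator, at communicator construction time. mca_coll_base_comm_select() builds the list of components available to the component collm_comm_query function, instantiates a module for each usable component, and sets the module's collective function pointers. It then loops through the list of available components (via the instantiated modules) and enables each module with its coll_module_enable() function; if that succeeds, it sets the communicator's collective functions to those supplied by that module and keeps track of which module each function is associated with. At communicator destruction time, the module destructor is called for every module the communicator used. As a result, a single communicator can use up to N different components, one per collective function it needs.

The interface is the same for inter- and intra-communicators, and components must be able to handle either kind of communicator during initialization (although "handling" may simply mean reporting that the component is not available).

Unlike the MPI standard, every buffer that is not supposed to be used (i.e., where the MPI standard does not require the tuple (buffer, datatype, count) to be accessible) is replaced with NULL. For example, the recvbuf of all non-root ranks in MPI_Reduce is set to NULL at the MPI API level. This lets collective components tell when a buffer is really valid, so that collective modules that delegate collectives to other modules can share temporary buffers.

pml, the P2P Management Layer (PML): an MCA component type that provides the point-to-point interface functionality required by the MPI layer. The PML is a relatively thin layer whose main job is fragmenting messages and scheduling them over multiple transports (instances of the Byte Transfer Layer (BTL) MCA component type), as depicted below:

------------------------------------
|               MPI                |
------------------------------------
|               PML                |
------------------------------------
| BTL (TCP) | BTL (SM) | BTL (...) |
------------------------------------

A single PML component is selected by the MCA framework during library initialization. Initially, all available PMLs are loaded (possibly as shared libraries) and their component open and init functions are called. The MCA framework selects the component that returns the highest priority and closes/unloads any other PML components that may have been opened.

After all MCA components are initialized, the MPI/RTE layer makes downcalls into the PML to provide the initial list of processes (ompi_proc_t instances) and notifications of changes (add/delete). The PML module must select the set of BTL components to be used to reach a given destination; these should be cached in a PML-specific data structure hung off the ompi_proc_t. The PML then applies a scheduling algorithm (round-robin, weighted distribution, etc.) to schedule the delivery of messages over the available BTLs.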
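As a rough illustration of the last step, the following C sketch splits one message across several BTLs in proportion to a per-BTL weight (a "weighted distribution"). The type btl_t, its fields and schedule_message() are hypothetical names introduced here, and the weights are assumed inputs (for example relative bandwidth); Open MPI's real ob1/bml code also handles eager vs. rendezvous protocols, fragment size limits and RDMA pipelining, none of which is modeled.

```c
#include <stddef.h>

/* Hypothetical description of one BTL that can reach a given peer. */
typedef struct {
    const char *name;   /* e.g. "sm", "tcp" */
    unsigned weight;    /* assumed relative weight, e.g. proportional to bandwidth */
    size_t assigned;    /* output: bytes scheduled on this BTL */
} btl_t;

/*
 * Split `len` bytes across `n` BTLs in proportion to their weights.
 * Integer division rounds each share down; the leftover bytes go to the
 * BTL with the highest weight so every byte is scheduled exactly once.
 * Returns the number of bytes scheduled (len), or 0 if no weight is set.
 */
size_t schedule_message(btl_t *btls, size_t n, size_t len)
{
    unsigned long long total_weight = 0;
    size_t best = 0, scheduled = 0;

    for (size_t i = 0; i < n; i++) {
        total_weight += btls[i].weight;
        if (btls[i].weight > btls[best].weight)
            best = i;
    }
    if (total_weight == 0)
        return 0;

    for (size_t i = 0; i < n; i++) {
        btls[i].assigned =
            (size_t)((unsigned long long)len * btls[i].weight / total_weight);
        scheduled += btls[i].assigned;
    }
    btls[best].assigned += len - scheduled;  /* remainder from rounding down */
    return len;
}
```

For example, with weights 3 (sm) and 1 (tcp) a 1000-byte message is split 750/250. With equal weights the split is even, which is what round-robin over equal-sized fragments converges to.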

The remaining frameworks in the MPI layer:
- sharedfp: shared file pointer operations for MPI I/O
- topo: MPI topology routines
- vprotocol: Protocols for the “v” PML
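Returning to the coll framework: the per-communicator selection described earlier (query every component, keep a module for each usable one, and let each collective slot point into some module, so one communicator can mix components) can be sketched in plain C. All names below (coll_module_t, coll_table_t, select_collectives(), the demo functions) are hypothetical. The sketch assumes that for each collective the highest-priority module providing it wins, and it omits the coll_module_enable() step and the error handling of the real mca_coll_base_comm_select().

```c
#include <stddef.h>

/* Hypothetical, simplified collective signature (real ones take buffers, counts, datatypes, ...). */
typedef int (*coll_fn_t)(void);

/* Slots of the per-communicator collective table (only three shown). */
enum { COLL_BARRIER, COLL_BCAST, COLL_REDUCE, COLL_COUNT };

/* A module that one coll component instantiated for one communicator. */
typedef struct {
    const char *component;      /* e.g. "basic", "tuned" */
    int priority;               /* as reported by the component's query function */
    coll_fn_t fns[COLL_COUNT];  /* NULL: this module does not provide that collective */
} coll_module_t;

/* What the communicator keeps: one function per slot and the module it came from. */
typedef struct {
    coll_fn_t fn[COLL_COUNT];
    const coll_module_t *owner[COLL_COUNT];
} coll_table_t;

/*
 * For every collective slot, keep the function of the highest-priority module
 * that provides it, so different slots may come from different components.
 * Returns the number of slots that no module could fill.
 */
int select_collectives(const coll_module_t *mods, size_t n, coll_table_t *comm)
{
    int missing = 0;
    for (int c = 0; c < COLL_COUNT; c++) {
        comm->fn[c] = NULL;
        comm->owner[c] = NULL;
        for (size_t i = 0; i < n; i++) {
            if (mods[i].fns[c] == NULL)
                continue;
            if (comm->owner[c] == NULL || mods[i].priority > comm->owner[c]->priority) {
                comm->fn[c] = mods[i].fns[c];
                comm->owner[c] = &mods[i];
            }
        }
        if (comm->fn[c] == NULL)
            missing++;
    }
    return missing;
}

/* Stand-in collective implementations for the usage example. */
int basic_barrier(void) { return 1; }
int basic_bcast(void)   { return 2; }
int tuned_bcast(void)   { return 3; }
```

With a "basic" module (priority 10) providing barrier and bcast and a "tuned" module (priority 30) providing only bcast, the communicator ends up with basic's barrier and tuned's bcast, while reduce stays unfilled.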

Installation

Building and installing from source

configure reference: https://docs.open-mpi.org/en/main/installing-open-mpi/configure-cli-options/installation.html

wget https://download.open-mpi.org/release/open-mpi/v5.0/openmpi-5.0.6.tar.gz

tar -zxvf openmpi-5.0.6.tar.gz

cd openmpi-5.0.6/

mkdir build

cd build/

../configure

make -j64

make install

Verifying the installation

https://docs.open-mpi.org/en/main/validate.html

root@hpc117:~/project/hpc/mpi/openmpi-5.0.6/build/ompi/tools/ompi_info# ./ompi_info

Package: Open MPI root@xw-desktop Distribution

Open MPI: 5.0.6

Open MPI repo revision: v5.0.6

Open MPI release date: Nov 15, 2024

MPI API: 3.1.0

Ident string: 5.0.6

Prefix: /usr/local

Configured architecture: x86_64-pc-linux-gnu

Configured by: root

Configured on: Fri Jan 24 09:36:58 UTC 2025

Configure host: xw-desktop

Configure command line:

Built by: root

Built on: Fri 24 Jan 2025 09:42:27 AM UTC

Built host: xw-desktop

C bindings: yes

Fort mpif.h: no

Fort use mpi: no

Fort use mpi size: deprecated-ompi-info-value

Fort use mpi_f08: no

Fort mpi_f08 compliance: The mpi_f08 module was not built

Fort mpi_f08 subarrays: no

Java bindings: no

Wrapper compiler rpath: runpath

C compiler: gcc

C compiler absolute: /bin/gcc

C compiler family name: GNU

C compiler version: 9.4.0

C++ compiler: g++

C++ compiler absolute: /bin/g++

Fort compiler: none

Fort compiler abs: none

Fort ignore TKR: no

Fort 08 assumed shape: no

Fort optional args: no

Fort INTERFACE: no

Fort ISO_FORTRAN_ENV: no

Fort STORAGE_SIZE: no

Fort BIND(C) (all): no

Fort ISO_C_BINDING: no

Fort SUBROUTINE BIND(C): no

Fort TYPE,BIND(C): no

Fort T,BIND(C,name="a"): no

Fort PRIVATE: no

Fort ABSTRACT: no

Fort ASYNCHRONOUS: no

Fort PROCEDURE: no

Fort USE...ONLY: no

Fort C_FUNLOC: no

Fort f08 using wrappers: no

Fort MPI_SIZEOF: no

C profiling: yes

Fort mpif.h profiling: no

Fort use mpi profiling: no

Fort use mpi_f08 prof: no

Thread support: posix (MPI_THREAD_MULTIPLE: yes, OPAL support: yes,

OMPI progress: no, Event lib: yes)

Sparse Groups: no

Internal debug support: no

MPI interface warnings: yes

MPI parameter check: runtime

Memory profiling support: no

Memory debugging support: no

dl support: yes

Heterogeneous support: no

MPI_WTIME support: native

Symbol vis. support: yes

Host topology support: yes

IPv6 support: no

MPI extensions: affinity, cuda, ftmpi, rocm

Fault Tolerance support: yes

FT MPI support: yes

MPI_MAX_PROCESSOR_NAME: 256

MPI_MAX_ERROR_STRING: 256

MPI_MAX_OBJECT_NAME: 64

MPI_MAX_INFO_KEY: 36

MPI_MAX_INFO_VAL: 256

MPI_MAX_PORT_NAME: 1024

MPI_MAX_DATAREP_STRING: 128

MCA accelerator: null (MCA v2.1.0, API v1.0.0, Component v5.0.6)

MCA allocator: basic (MCA v2.1.0, API v2.0.0, Component v5.0.6)

MCA allocator: bucket (MCA v2.1.0, API v2.0.0, Component v5.0.6)

MCA backtrace: execinfo (MCA v2.1.0, API v2.0.0, Component v5.0.6)

MCA btl: self (MCA v2.1.0, API v3.3.0, Component v5.0.6)

MCA btl: sm (MCA v2.1.0, API v3.3.0, Component v5.0.6)

MCA btl: tcp (MCA v2.1.0, API v3.3.0, Component v5.0.6)

MCA dl: dlopen (MCA v2.1.0, API v1.0.0, Component v5.0.6)

MCA if: linux_ipv6 (MCA v2.1.0, API v2.0.0, Component

v5.0.6)

MCA if: posix_ipv4 (MCA v2.1.0, API v2.0.0, Component

v5.0.6)

MCA installdirs: env (MCA v2.1.0, API v2.0.0, Component v5.0.6)

MCA installdirs: config (MCA v2.1.0, API v2.0.0, Component v5.0.6)

MCA memory: patcher (MCA v2.1.0, API v2.0.0, Component v5.0.6)

MCA mpool: hugepage (MCA v2.1.0, API v3.1.0, Component v5.0.6)

MCA patcher: overwrite (MCA v2.1.0, API v1.0.0, Component

v5.0.6)

MCA rcache: grdma (MCA v2.1.0, API v3.3.0, Component v5.0.6)

MCA reachable: weighted (MCA v2.1.0, API v2.0.0, Component v5.0.6)

MCA shmem: mmap (MCA v2.1.0, API v2.0.0, Component v5.0.6)

MCA shmem: posix (MCA v2.1.0, API v2.0.0, Component v5.0.6)

MCA shmem: sysv (MCA v2.1.0, API v2.0.0, Component v5.0.6)

MCA smsc: cma (MCA v2.1.0, API v1.0.0, Component v5.0.6)

MCA threads: pthreads (MCA v2.1.0, API v1.0.0, Component v5.0.6)

MCA timer: linux (MCA v2.1.0, API v2.0.0, Component v5.0.6)

MCA bml: r2 (MCA v2.1.0, API v2.1.0, Component v5.0.6)

MCA coll: adapt (MCA v2.1.0, API v2.4.0, Component v5.0.6)

MCA coll: basic (MCA v2.1.0, API v2.4.0, Component v5.0.6)

MCA coll: han (MCA v2.1.0, API v2.4.0, Component v5.0.6)

MCA coll: inter (MCA v2.1.0, API v2.4.0, Component v5.0.6)

MCA coll: libnbc (MCA v2.1.0, API v2.4.0, Component v5.0.6)

MCA coll: self (MCA v2.1.0, API v2.4.0, Component v5.0.6)

MCA coll: sync (MCA v2.1.0, API v2.4.0, Component v5.0.6)

MCA coll: tuned (MCA v2.1.0, API v2.4.0, Component v5.0.6)

MCA coll: ftagree (MCA v2.1.0, API v2.4.0, Component v5.0.6)

MCA coll: monitoring (MCA v2.1.0, API v2.4.0, Component

v5.0.6)

MCA coll: sm (MCA v2.1.0, API v2.4.0, Component v5.0.6)

MCA fbtl: posix (MCA v2.1.0, API v2.0.0, Component v5.0.6)

MCA fcoll: dynamic (MCA v2.1.0, API v2.0.0, Component v5.0.6)

MCA fcoll: dynamic_gen2 (MCA v2.1.0, API v2.0.0, Component

v5.0.6)

MCA fcoll: individual (MCA v2.1.0, API v2.0.0, Component

v5.0.6)

MCA fcoll: vulcan (MCA v2.1.0, API v2.0.0, Component v5.0.6)

MCA fs: ufs (MCA v2.1.0, API v2.0.0, Component v5.0.6)

MCA hook: comm_method (MCA v2.1.0, API v1.0.0, Component

v5.0.6)

MCA io: ompio (MCA v2.1.0, API v2.0.0, Component v5.0.6)

MCA io: romio341 (MCA v2.1.0, API v2.0.0, Component v5.0.6)

MCA op: avx (MCA v2.1.0, API v1.0.0, Component v5.0.6)

MCA osc: sm (MCA v2.1.0, API v3.0.0, Component v5.0.6)

MCA osc: monitoring (MCA v2.1.0, API v3.0.0, Component

v5.0.6)

MCA osc: rdma (MCA v2.1.0, API v3.0.0, Component v5.0.6)

MCA part: persist (MCA v2.1.0, API v4.0.0, Component v5.0.6)

MCA pml: cm (MCA v2.1.0, API v2.1.0, Component v5.0.6)

MCA pml: monitoring (MCA v2.1.0, API v2.1.0, Component

v5.0.6)

MCA pml: ob1 (MCA v2.1.0, API v2.1.0, Component v5.0.6)

MCA pml: v (MCA v2.1.0, API v2.1.0, Component v5.0.6)

MCA sharedfp: individual (MCA v2.1.0, API v2.0.0, Component

v5.0.6)

MCA sharedfp: lockedfile (MCA v2.1.0, API v2.0.0, Component

v5.0.6)

MCA sharedfp: sm (MCA v2.1.0, API v2.0.0, Component v5.0.6)

MCA topo: basic (MCA v2.1.0, API v2.2.0, Component v5.0.6)

MCA topo: treematch (MCA v2.1.0, API v2.2.0, Component

v5.0.6)

MCA vprotocol: pessimist (MCA v2.1.0, API v2.0.0, Component

v5.0.6)

Full log:

ring test:

cd ~/project/hpc/mpi/openmpi-5.0.6/examples

root@hpc117:~/project/hpc/mpi/openmpi-5.0.6/examples# mpirun --allow-run-as-root ring_c

Process 0 sending 10 to 1, tag 201 (18 processes in ring)

Process 0 sent to 1

Process 0 decremented value: 9

Process 0 decremented value: 8

Process 0 decremented value: 7

Process 0 decremented value: 6

Process 0 decremented value: 5

Process 0 decremented value: 4

Process 0 decremented value: 3

Process 0 decremented value: 2

Process 0 decremented value: 1

Process 0 decremented value: 0

Process 0 exiting

Process 16 exiting

Process 17 exiting

Process 1 exiting

Process 2 exiting

Process 3 exiting

Process 4 exiting

Process 6 exiting

Process 8 exiting

Process 9 exiting

Process 10 exiting

Process 11 exiting

Process 12 exiting

Process 13 exiting

Process 14 exiting

Process 15 exiting

Process 5 exiting

Process 7 exiting

root@hpc117:~/project/hpc/mpi/openmpi-5.0.6/examples# mpirun --allow-run-as-root hello_c

Hello, world, I am 7 of 18, (Open MPI v4.0.3, package: Debian OpenMPI, ident: 4.0.3, repo rev: v4.0.3, Mar 03, 2020, 87)

Hello, world, I am 13 of 18, (Open MPI v4.0.3, package: Debian OpenMPI, ident: 4.0.3, repo rev: v4.0.3, Mar 03, 2020, 87)

Hello, world, I am 17 of 18, (Open MPI v4.0.3, package: Debian OpenMPI, ident: 4.0.3, repo rev: v4.0.3, Mar 03, 2020, 87)

Hello, world, I am 4 of 18, (Open MPI v4.0.3, package: Debian OpenMPI, ident: 4.0.3, repo rev: v4.0.3, Mar 03, 2020, 87)

Hello, world, I am 5 of 18, (Open MPI v4.0.3, package: Debian OpenMPI, ident: 4.0.3, repo rev: v4.0.3, Mar 03, 2020, 87)

Hello, world, I am 9 of 18, (Open MPI v4.0.3, package: Debian OpenMPI, ident: 4.0.3, repo rev: v4.0.3, Mar 03, 2020, 87)

Hello, world, I am 10 of 18, (Open MPI v4.0.3, package: Debian OpenMPI, ident: 4.0.3, repo rev: v4.0.3, Mar 03, 2020, 87)

Hello, world, I am 12 of 18, (Open MPI v4.0.3, package: Debian OpenMPI, ident: 4.0.3, repo rev: v4.0.3, Mar 03, 2020, 87)

Hello, world, I am 16 of 18, (Open MPI v4.0.3, package: Debian OpenMPI, ident: 4.0.3, repo rev: v4.0.3, Mar 03, 2020, 87)

Hello, world, I am 2 of 18, (Open MPI v4.0.3, package: Debian OpenMPI, ident: 4.0.3, repo rev: v4.0.3, Mar 03, 2020, 87)

Hello, world, I am 1 of 18, (Open MPI v4.0.3, package: Debian OpenMPI, ident: 4.0.3, repo rev: v4.0.3, Mar 03, 2020, 87)

Hello, world, I am 3 of 18, (Open MPI v4.0.3, package: Debian OpenMPI, ident: 4.0.3, repo rev: v4.0.3, Mar 03, 2020, 87)

Hello, world, I am 14 of 18, (Open MPI v4.0.3, package: Debian OpenMPI, ident: 4.0.3, repo rev: v4.0.3, Mar 03, 2020, 87)

Hello, world, I am 8 of 18, (Open MPI v4.0.3, package: Debian OpenMPI, ident: 4.0.3, repo rev: v4.0.3, Mar 03, 2020, 87)

Hello, world, I am 15 of 18, (Open MPI v4.0.3, package: Debian OpenMPI, ident: 4.0.3, repo rev: v4.0.3, Mar 03, 2020, 87)

Hello, world, I am 6 of 18, (Open MPI v4.0.3, package: Debian OpenMPI, ident: 4.0.3, repo rev: v4.0.3, Mar 03, 2020, 87)

Hello, world, I am 0 of 18, (Open MPI v4.0.3, package: Debian OpenMPI, ident: 4.0.3, repo rev: v4.0.3, Mar 03, 2020, 87)

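Hello, world, I am 11 of 18, (Open MPI v4.0.3, package: Debian OpenMPI, ident: 4.0.3, repo rev: v4.0.3, Mar 03, 2020, 87)

Note: hello_c reports Open MPI v4.0.3 (package: Debian OpenMPI), not the 5.0.6 built above, so these examples ran against the system-installed Open MPI library, and the mpirun on PATH most likely belongs to that installation too. To test the new build, install it (make install) or put its bin directory first on PATH, and confirm with "which mpirun" and "mpirun --version".

Dependencies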

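KNEM overview and papers

KNEM (Kernel-Assisted Intra-node MPI Communication Framework) is a Linux kernel module that enables direct memory copies between processes (optionally offloaded to hardware), potentially increasing the bandwidth of large messages sent between processes on the same server. For details, see the KNEM website: https://knem.gitlabpages.inria.fr/

KNEM is a Linux kernel module enabling high-performance intra-node MPI communication for large messages. KNEM works on all Linux kernels since 2.6.15 and supports asynchronous and vectorial data transfers as well as offloading memory copies onto Intel I/OAT hardware. MPICH2 (since release 1.1.1) uses KNEM in its DMA LMT to improve large-message performance within a single node. Open MPI also includes KNEM support in its SM BTL component since release 1.5. Additionally, NetPIPE includes a KNEM backend since version 3.7.2; the KNEM website explains how to use them. The general documentation covers installing, running and using KNEM, while the interface documentation describes the programming interface and how to port an application or MPI implementation to KNEM. For the latest KNEM news, to report problems or to ask questions, subscribe to the knem mailing list; see also the news archive.

Why? MPI implementations usually offer a user-space double-copy strategy for intra-node communication. It is very good for small-message latency, but it wastes many CPU cycles, pollutes caches and saturates the memory bus. KNEM transfers data from one process to another with a single copy inside the Linux kernel. The system-call overhead (currently about 100 ns) is not good for small-message latency, but for large messages (usually starting at a few tens of kilobytes) a single memory copy is very good.

Some vendor-specific MPI stacks (such as Myricom MX, Qlogic PSM, etc.) offer similar capabilities, but they may only run on specific hardware interconnects, whereas KNEM is generic (and open source). Moreover, none of these competitors offers an asynchronous completion model, I/OAT copy offload and/or vectorial memory buffer support as KNEM does.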

Inspecting the shared libraries

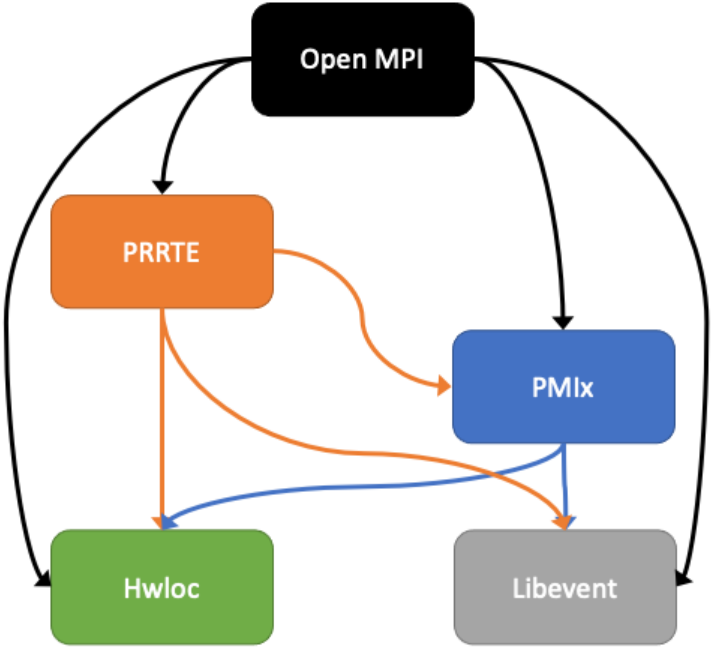

root@hpc117:~/project/hpc/mpi/openmpi-5.0.6/build/ompi/.libs# readelf -d libmpi.so|egrep -i 'rpath|runpath'

0x000000000000001d (RUNPATH) Library runpath: [/root/project/hpc/mpi/openmpi-5.0.6/build/opal/.libs:/root/project/hpc/mpi/openmpi-5.0.6/build/3rd-party/openpmix/src/.libs]

root@hpc117:~/project/hpc/mpi/openmpi-5.0.6/build/ompi/.libs#

root@hpc117:~/project/hpc/mpi/openmpi-5.0.6/build/ompi/.libs# readelf -d libmpi.so

Dynamic section at offset 0x302fe0 contains 35 entries:

Tag Type Name/Value

0x0000000000000001 (NEEDED) Shared library: [libopen-pal.so.80]

0x0000000000000001 (NEEDED) Shared library: [libpthread.so.0]

0x0000000000000001 (NEEDED) Shared library: [librt.so.1]

0x0000000000000001 (NEEDED) Shared library: [libpmix.so.2]

0x0000000000000001 (NEEDED) Shared library: [libm.so.6]

0x0000000000000001 (NEEDED) Shared library: [libevent_core-2.1.so.7]

0x0000000000000001 (NEEDED) Shared library: [libevent_pthreads-2.1.so.7]

0x0000000000000001 (NEEDED) Shared library: [libhwloc.so.15]

0x0000000000000001 (NEEDED) Shared library: [libc.so.6]

0x0000000000000001 (NEEDED) Shared library: [ld-linux-x86-64.so.2]

0x000000000000000e (SONAME) Library soname: [libmpi.so.40]

0x000000000000001d (RUNPATH) Library runpath: [/root/project/hpc/mpi/openmpi-5.0.6/build/opal/.libs:/root/project/hpc/mpi/openmpi-5.0.6/build/3rd-party/openpmix/src/.libs]

0x000000000000000c (INIT) 0x52000

0x000000000000000d (FINI) 0x294424

0x0000000000000019 (INIT_ARRAY) 0x302bf0

0x000000000000001b (INIT_ARRAYSZ) 8 (bytes)

0x000000000000001a (FINI_ARRAY) 0x302bf8

0x000000000000001c (FINI_ARRAYSZ) 8 (bytes)

0x000000006ffffef5 (GNU_HASH) 0x328

0x0000000000000005 (STRTAB) 0x1d990

0x0000000000000006 (SYMTAB) 0x6890

0x000000000000000a (STRSZ) 88846 (bytes)

0x000000000000000b (SYMENT) 24 (bytes)

0x0000000000000003 (PLTGOT) 0x306000

0x0000000000000002 (PLTRELSZ) 33024 (bytes)

0x0000000000000014 (PLTREL) RELA

0x0000000000000017 (JMPREL) 0x49680

0x0000000000000007 (RELA) 0x35490

0x0000000000000008 (RELASZ) 82416 (bytes)

0x0000000000000009 (RELAENT) 24 (bytes)

0x000000006ffffffe (VERNEED) 0x35360

0x000000006fffffff (VERNEEDNUM) 5

0x000000006ffffff0 (VERSYM) 0x3349e

0x000000006ffffff9 (RELACOUNT) 1456

0x0000000000000000 (NULL) 0x0

MPI usage and parameter reference

https://docs.open-mpi.org/en/main/man-openmpi/man1/mpirun.1.html#man1-mpirun

mpirun and mpiexec are synonyms. In fact, both are symbolic links to the same executable, so using either name produces exactly the same behavior.

mpirun [ options ] <program> [ <args> ]

mpirun [ global_options ]

[ local_options1 ] <program1> [ <args1> ] :

[ local_options2 ] <program2> [ <args2> ] :

... :

[ local_optionsN ] <programN> [ <argsN> ]

mpirun [ -n X ] [ --hostfile <filename> ] <program>

Open MPI and OFI

https://docs.open-mpi.org/en/main/tuning-apps/networking/ofi.html

Viewing the OFI MTL component parameters

root@hpc117:~/project/hpc/mpi/ompi/examples# ompi_info --param mtl ofi --level 9

MCA mtl: ofi (MCA v2.1.0, API v2.0.0, Component v5.0.6)

MCA mtl ofi: ---------------------------------------------------

MCA mtl ofi: parameter "mtl_ofi_priority" (current value: "25",

data source: default, level: 9 dev/all, type: int)

Priority of the OFI MTL component

MCA mtl ofi: parameter "mtl_ofi_progress_event_cnt" (current

value: "100", data source: default, level: 6

tuner/all, type: int)

Max number of events to read each call to OFI

progress (default: 100 events will be read per OFI

progress call)

MCA mtl ofi: parameter "mtl_ofi_tag_mode" (current value:

"auto", data source: default, level: 6 tuner/all,

type: int)

Mode specifying how many bits to use for various

MPI values in OFI/Libfabric communications. Some

Libfabric provider network types can support most

of Open MPI needs; others can only supply a limited

number of bits, which then must be split across the

MPI communicator ID, MPI source rank, and MPI tag.

Three different splitting schemes are available:

ofi_tag_full (30 bits for the communicator, 32 bits

for the source rank, and 32 bits for the tag),

ofi_tag_1 (12 bits for the communicator, 18 bits

source rank, 32 bits tag), ofi_tag_2 (24 bits for

the communicator, 18 bits source rank, 20 bits

tag). By default, this MCA variable is set to

"auto", which will first try to use ofi_tag_full,

and if that fails, fall back to ofi_tag_1.

Valid values: 1|auto, 2|ofi_tag_1, 3|ofi_tag_2,

4|ofi_tag_full

MCA mtl ofi: parameter "mtl_ofi_control_progress" (current

value: "unspec", data source: default, level: 3

user/all, type: int)

Specify control progress model (default:

unspecified, use provider's default). Set to auto

or manual for auto or manual progress respectively.

Valid values: 1|auto, 2|manual, 3|unspec

MCA mtl ofi: parameter "mtl_ofi_data_progress" (current value:

"unspec", data source: default, level: 3 user/all,

type: int)

Specify data progress model (default: unspecified,

use provider's default). Set to auto or manual for

auto or manual progress respectively.

Valid values: 1|auto, 2|manual, 3|unspec

MCA mtl ofi: parameter "mtl_ofi_av" (current value: "map", data

source: default, level: 3 user/all, type: int)

Specify AV type to use (default: map). Set to table

for FI_AV_TABLE AV type.

Valid values: 1|map, 2|table

MCA mtl ofi: parameter "mtl_ofi_enable_sep" (current value: "0",

data source: default, level: 3 user/all, type: int)

Enable SEP feature

MCA mtl ofi: parameter "mtl_ofi_thread_grouping" (current value:

"0", data source: default, level: 3 user/all, type:

int)

Enable/Disable Thread Grouping feature

MCA mtl ofi: parameter "mtl_ofi_num_ctxts" (current value: "1",

data source: default, level: 4 tuner/basic, type:

int)

Specify number of OFI contexts to create

MCA mtl ofi: parameter "mtl_ofi_disable_hmem" (current value:

"false", data source: default, level: 3 user/all,

type: bool)

Disable HMEM usage

Valid values: 0|f|false|disabled|no|n,

1|t|true|enabled|yes|y

MCA mtl ofi: parameter "mtl_ofi_provider_include" (current

value: "", data source: default, level: 1

user/basic, type: string, synonym of:

opal_common_ofi_provider_include)

Comma-delimited list of OFI providers that are

considered for use (e.g., "psm,psm2"; an empty

value means that all providers will be considered).

Mutually exclusive with mtl_ofi_provider_exclude.

MCA mtl ofi: parameter "mtl_ofi_provider_exclude" (current

value: "shm,sockets,tcp,udp,rstream,usnic,net",

data source: default, level: 1 user/basic, type:

string, synonym of:

opal_common_ofi_provider_exclude)

Comma-delimited list of OFI providers that are not

considered for use (default: "sockets,mxm"; empty

value means that all providers will be considered).

Mutually exclusive with mtl_ofi_provider_include.

MCA mtl ofi: parameter "mtl_ofi_verbose" (current value: "0",

data source: default, level: 3 user/all, type: int,

synonym of: opal_common_ofi_verbose)

Verbose level of the OFI components

root@hpc117:~/project/hpc/mpi/ompi/examples#

- mtl_ofi_tag_mode: specifies how many bits of the OFI/Libfabric tag are used for the MPI communicator ID, the MPI source rank and the MPI tag. Some providers offer enough bits for everything Open MPI needs; others offer fewer, which must then be split among the three values. The schemes are ofi_tag_full (30/32/32 bits), ofi_tag_1 (12/18/32) and ofi_tag_2 (24/18/20), always in the order communicator / source rank / tag. The default, auto, tries ofi_tag_full first and falls back to ofi_tag_1 if that fails.
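To see what the split means in practice, here is a minimal C sketch that packs (communicator ID, source rank, tag) into one 64-bit match value using the ofi_tag_1 widths quoted above (12/18/32 bits). The bit order, the two unused high bits and the helper names pack_tag_1()/unpack_*() are assumptions for illustration, not Open MPI's actual mtl_ofi layout. Note that ofi_tag_full needs 30 + 32 + 32 = 94 bits, so it cannot be expressed by this single-word packing.

```c
#include <stdint.h>

/* Field widths of the ofi_tag_1 scheme as listed by ompi_info. */
#define CID_BITS 12
#define SRC_BITS 18
#define TAG_BITS 32

#define FIELD_MASK(bits) ((UINT64_C(1) << (bits)) - 1)

/*
 * Illustrative layout (an assumption): [2 unused][cid:12][src:18][tag:32].
 * Returns 0 on success, -1 if a value does not fit in its field.
 */
int pack_tag_1(uint64_t cid, uint64_t src, uint64_t tag, uint64_t *out)
{
    if (cid > FIELD_MASK(CID_BITS) || src > FIELD_MASK(SRC_BITS) ||
        tag > FIELD_MASK(TAG_BITS))
        return -1;
    *out = (cid << (SRC_BITS + TAG_BITS)) | (src << TAG_BITS) | tag;
    return 0;
}

uint64_t unpack_cid(uint64_t m) { return (m >> (SRC_BITS + TAG_BITS)) & FIELD_MASK(CID_BITS); }
uint64_t unpack_src(uint64_t m) { return (m >> TAG_BITS) & FIELD_MASK(SRC_BITS); }
uint64_t unpack_tag(uint64_t m) { return m & FIELD_MASK(TAG_BITS); }
```

Under ofi_tag_1 a job can therefore address at most 2^18 = 262,144 source ranks and 2^12 = 4,096 communicator IDs; ofi_tag_2 trades tag range (20 bits, tags up to 1,048,575) for 2^24 communicator IDs.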

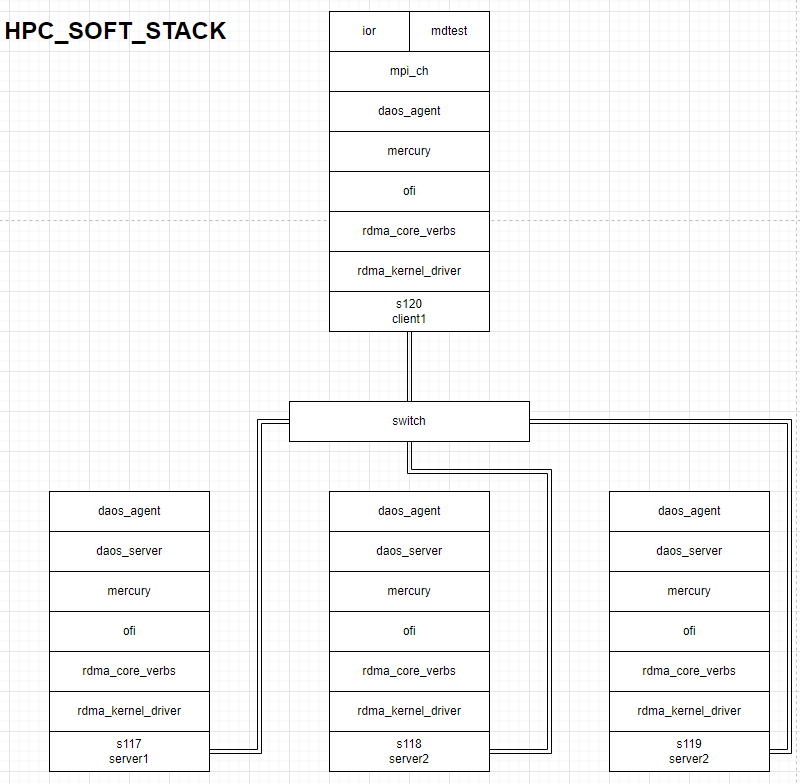

Environment planning

HPC software stack

Libfabric communication library test environment

Servers required: 2

Client: 1 server + 1 RDMA NIC

Server: 1 server + 1 RDMA NIC

libfabric tests

fi_pingpong

server:

cd /root/project/stor/daos/install/prereq/debug/ofi/bin

./fi_pingpong -e rdm -p "verbs;ofi_rxm" -m tagged -d mlx5_0 -v -I 2

root@hpc118:~/project/stor/daos/install/prereq/debug/ofi/bin# ./fi_pingpong -e rdm -p "verbs;ofi_rxm" -m tagged -d mlx5_0

bytes #sent #ack total time MB/sec usec/xfer Mxfers/sec

64 10 =10 1.2k 0.03s 0.04 1719.70 0.00

256 10 =10 5k 0.02s 0.27 932.10 0.00

1k 10 =10 20k 0.00s 140.27 7.30 0.14

4k 10 =10 80k 0.00s 438.07 9.35 0.11

64k 10 =10 1.2m 0.00s 1944.69 33.70 0.03

1m 10 =10 20m 0.01s 2745.68 381.90 0.00
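The derived columns of the fi_pingpong table can be recomputed from the bytes and usec/xfer columns: MB/sec = bytes / usec/xfer (one byte per microsecond is 10^6 bytes/s) and Mxfers/sec = 1 / usec/xfer. The helper names below are ours, chosen for illustration; the checks reproduce rows of the server table above.

```c
/* MB/s for one fi_pingpong row: bytes per microsecond == 10^6 bytes per second. */
double pingpong_mb_per_sec(double bytes, double usec_per_xfer)
{
    return bytes / usec_per_xfer;
}

/* Millions of transfers per second: transfers per microsecond. */
double pingpong_mxfers_per_sec(double usec_per_xfer)
{
    return 1.0 / usec_per_xfer;
}
```

For the 1m row, 1048576 / 381.90 gives about 2745.7 MB/sec, matching the table.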

client:

cd /root/project/stor/daos/install/prereq/debug/ofi/bin

./fi_pingpong -e rdm -p "verbs;ofi_rxm" -m tagged 192.168.1.118 -v -I 2

root@hpc117:~/project/stor/daos/install/prereq/debug/ofi/bin# ./fi_pingpong -e rdm -p "verbs;ofi_rxm" -m tagged 192.168.1.118

bytes #sent #ack total time MB/sec usec/xfer Mxfers/sec

64 10 =10 1.2k 0.03s 0.04 1718.80 0.00

256 10 =10 5k 0.02s 0.27 931.15 0.00

1k 10 =10 20k 0.00s 156.34 6.55 0.15

4k 10 =10 80k 0.00s 473.53 8.65 0.12

64k 10 =10 1.2m 0.00s 1982.93 33.05 0.03

1m 10 =10 20m 0.01s 2749.64 381.35 0.00

Running the full fabtests suite

root@hpc118:~/project/stor/daos/build/external/debug/ofi/build/fabtests/bin

./runfabtests.sh -vvv -t all verbs -s 192.168.1.118 -c 192.168.1.117 >> runfabtests.log

UCX communication library test environment

Servers required: 2

Client: 1 server + 1 RDMA NIC

Server: 1 server + 1 RDMA NIC

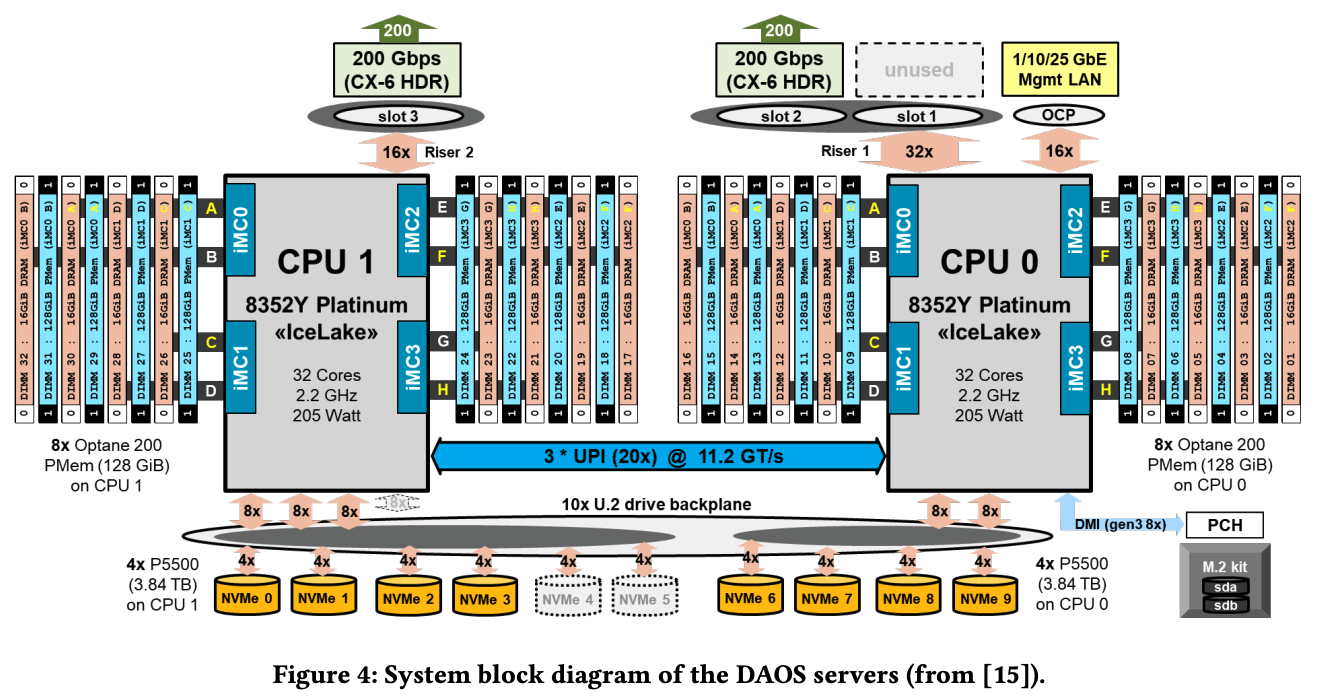

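DAOS distributed parallel file system and HPC/MPI supercomputing workloads

Servers required: 4

MPI client: 1 server + 1 RDMA NIC

Distributed storage cluster: 3 servers + 3 RDMA NICs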

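Notes

Test RDMA incrementally:

- Verbs implementation test: first get the RDMA-CORE benchmarks running

- Communication library compatibility test: then get the Libfabric/UCX benchmarks running

- Application compatibility test: finally run the distributed storage and HPC/MPI benchmarks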

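Environment setup

Reference physical topology:

DAOS distributed storage and communication libraries

Reference: https://docs.daos.io/v2.6/dev/development/

git clone --recurse-submodules https://github.com/daos-stack/daos.git

DAOS: format storage, create a pool and a container, query ranks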

Initial format:

root@hpc117:~# dmg storage format

Format Summary:

Hosts SCM Devices NVMe Devices

----- ----------- ------------

localhost 1 1

Query all ranks:

root@hpc117:~# dmg sys query -v

Rank UUID Control Address Fault Domain State Reason

---- ---- --------------- ------------ ----- ------

0 388ec120-8bd4-4f54-829a-8629b22614aa 192.168.1.117:10001 /hpc117 Joined

1 74607630-a191-4b0f-a313-b290579db6f5 192.168.1.118:10001 /hpc118 Joined

2 e1e52cd2-2f43-4b69-b88b-4e5c93e36951 192.168.1.119:10001 /hpc119 Joined

Create and list a pool:

root@hpc117:~# dmg pool create sxb -z 4g;dmg pool list --verbose

Creating DAOS pool with automatic storage allocation: 4.0 GB total, 6.38% ratio

Pool created with 6.00%,94.00% storage tier ratio

-------------------------------------------------

UUID : 89b0a7a0-167e-4e74-a0c8-e3ec47ff78f3

Service Leader : 2

Service Ranks : [0-2]

Storage Ranks : [0-2]

Total Size : 4.0 GB

Storage tier 0 (SCM) : 240 MB (80 MB / rank)

Storage tier 1 (NVMe): 3.8 GB (1.3 GB / rank)

Label UUID State SvcReps SCM Size SCM Used SCM Imbalance NVME Size NVME Used NVME Imbalance Disabled UpgradeNeeded? Rebuild State

----- ---- ----- ------- -------- -------- ------------- --------- --------- -------------- -------- -------------- -------------

sxb 89b0a7a0-167e-4e74-a0c8-e3ec47ff78f3 Ready [0-2] 240 MB 203 kB 0% 3.8 GB 126 MB 0% 0/3 None idle
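The per-rank figures in this output follow from the total size, the tier ratio and the number of storage ranks: SCM = 4.0 GB x 6% = 240 MB, i.e. 80 MB on each of the 3 ranks; NVMe = 4.0 GB x 94% = 3.76 GB (printed as 3.8 GB), about 1.25 GB per rank (printed as 1.3 GB). The small C helper below reproduces this arithmetic; its name and the decimal-unit convention are our assumptions, and dmg's own rounding or alignment may differ slightly.

```c
#include <stdint.h>

/* Size of one storage tier, in bytes (decimal units, as dmg prints them). */
typedef struct {
    uint64_t tier_total;   /* bytes of this tier across the whole pool */
    uint64_t per_rank;     /* bytes of this tier on each rank */
} tier_split_t;

/* ratio_permille: tier share in 1/1000 (60 = 6.0%), to stay in integer math. */
tier_split_t split_tier(uint64_t pool_bytes, unsigned ratio_permille, unsigned nranks)
{
    tier_split_t t;
    t.tier_total = pool_bytes * ratio_permille / 1000;
    t.per_rank = nranks ? t.tier_total / nranks : 0;
    return t;
}
```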

Create and query a container:

root@hpc117:~# daos container create sxb --type POSIX sxb;daos container query sxb sxb --verbose; daos cont get-prop sxb sxb

Successfully created container 6806ea78-4d63-41d2-aea2-6f1d27da0c3e type POSIX

Container UUID : 6806ea78-4d63-41d2-aea2-6f1d27da0c3e

Container Label: sxb

Container Type : POSIX

Container UUID : 6806ea78-4d63-41d2-aea2-6f1d27da0c3e

Container Label : sxb

Container Type : POSIX

Pool UUID : 89b0a7a0-167e-4e74-a0c8-e3ec47ff78f3

Container redundancy factor : 0

Number of open handles : 1

Latest open time : 2025-02-14 09:07:42.546919424 +0800 CST (0x1cdfb1c241980004)

Latest close/modify time : 2025-02-14 09:07:42.620778496 +0800 CST (0x1cdfb1c288080003)

Number of snapshots : 0

Object Class : UNKNOWN

Dir Object Class : UNKNOWN

File Object Class : UNKNOWN

Chunk Size : 1.0 MiB

Properties for container sxb

Name Value

---- -----

Highest Allocated OID (alloc_oid) 0

Checksum (cksum) off

Checksum Chunk Size (cksum_size) 32 KiB

Compression (compression) off

Deduplication (dedup) off

Dedupe Threshold (dedup_threshold) 4.0 KiB

EC Cell Size (ec_cell_sz) 64 KiB

Performance domain affinity level of EC (ec_pda) 1

Encryption (encryption) off

Global Version (global_version) 4

Group (group) root@

Label (label) sxb

Layout Type (layout_type) POSIX (1)

Layout Version (layout_version) 1

Max Snapshot (max_snapshot) 0

Object Version (obj_version) 1

Owner (owner) root@

Performance domain level (perf_domain) root (255)

Redundancy Factor (rd_fac) rd_fac0

Redundancy Level (rd_lvl) node (2)

Performance domain affinity level of RP (rp_pda) 4294967295

Server Checksumming (srv_cksum) off

Health (status) HEALTHY

Access Control List (acl) A::OWNER@:rwdtTaAo, A:G:GROUP@:rwtT

root@hpc117:~#

# Mount the container as a file system:

mkdir -p /tmp/sxb; dfuse --mountpoint=/tmp/sxb --pool=sxb --cont=sxb; df -h

# fio test:

root@hpc117:~# fio --randrepeat=1 --ioengine=libaio --direct=1 --name=ccg_fio --iodepth=128 --numjobs=16 --size=1g --bs=4k --group_reporting=1 --readwrite=write --time_based=1 --runtime=60 --sync=0 --fdatasync=0 --filename=/tmp/sxb/daos_test

ccg_fio: (g=0): rw=write, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=libaio, iodepth=128

...

fio-3.16

Starting 16 processes

ccg_fio: Laying out IO file (1 file / 1024MiB)

ccg_fio: Laying out IO file (1 file / 1024MiB)

ccg_fio: Laying out IO file (1 file / 1024MiB)

ccg_fio: Laying out IO file (1 file / 1024MiB)

ccg_fio: Laying out IO file (1 file / 1024MiB)

ccg_fio: Laying out IO file (1 file / 1024MiB)

ccg_fio: Laying out IO file (1 file / 1024MiB)

ccg_fio: Laying out IO file (1 file / 1024MiB)

ccg_fio: Laying out IO file (1 file / 1024MiB)

ccg_fio: Laying out IO file (1 file / 1024MiB)

ccg_fio: Laying out IO file (1 file / 1024MiB)

ccg_fio: Laying out IO file (1 file / 1024MiB)

ccg_fio: Laying out IO file (1 file / 1024MiB)

ccg_fio: Laying out IO file (1 file / 1024MiB)

ccg_fio: Laying out IO file (1 file / 1024MiB)

ccg_fio: Laying out IO file (1 file / 1024MiB)

Jobs: 16 (f=16): [W(16)][100.0%][w=6438KiB/s][w=1609 IOPS][eta 00m:00s]

ccg_fio: (groupid=0, jobs=16): err= 0: pid=63892: Fri Feb 14 11:16:57 2025

write: IOPS=1787, BW=7149KiB/s (7320kB/s)(419MiB/60011msec); 0 zone resets

slat (nsec): min=1810, max=134914k, avg=8937479.33, stdev=8753325.29

clat (usec): min=12, max=2718.2k, avg=1117432.92, stdev=984758.15

lat (usec): min=1466, max=2739.2k, avg=1126371.42, stdev=992440.11

clat percentiles (msec):

| 1.00th=[ 107], 5.00th=[ 110], 10.00th=[ 112], 20.00th=[ 117],

| 30.00th=[ 138], 40.00th=[ 188], 50.00th=[ 651], 60.00th=[ 2005],

| 70.00th=[ 2056], 80.00th=[ 2140], 90.00th=[ 2232], 95.00th=[ 2265],

| 99.00th=[ 2534], 99.50th=[ 2635], 99.90th=[ 2702], 99.95th=[ 2702],

| 99.99th=[ 2702]

bw ( KiB/s): min= 2992, max=10072, per=99.84%, avg=7136.55, stdev=192.48, samples=1861

iops : min= 748, max= 2518, avg=1783.92, stdev=48.12, samples=1861

lat (usec) : 20=0.01%

lat (msec) : 2=0.01%, 4=0.01%, 10=0.02%, 20=0.01%, 50=0.04%

lat (msec) : 100=0.06%, 250=49.45%, 500=0.33%, 750=0.18%, 1000=0.26%

lat (msec) : 2000=9.58%, >=2000=40.07%

cpu : usr=0.16%, sys=0.54%, ctx=160372, majf=0, minf=186

IO depths : 1=0.1%, 2=0.1%, 4=0.1%, 8=0.1%, 16=0.2%, 32=0.5%, >=64=99.1%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.1%

issued rwts: total=0,107248,0,0 short=0,0,0,0 dropped=0,0,0,0

latency : target=0, window=0, percentile=100.00%, depth=128

Run status group 0 (all jobs):

WRITE: bw=7149KiB/s (7320kB/s), 7149KiB/s-7149KiB/s (7320kB/s-7320kB/s), io=419MiB (439MB), run=60011-60011msec
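Two quick consistency checks on this fio result: bandwidth = IOPS x block size (1787 x 4 KiB = 7148 KiB/s, reported 7149 KiB/s), and by Little's law the mean latency should be close to I/Os in flight / IOPS = (16 jobs x iodepth 128) / 1787, about 1.15 s, which matches the reported average lat of about 1.13 s. In other words, the second-scale latencies are what keeping 2048 writes in flight at roughly 1.8k IOPS implies. The function names below are ours, for illustration only.

```c
/* Bandwidth in KiB/s implied by an IOPS figure and a block size in KiB. */
double fio_bw_kib(double iops, double bs_kib)
{
    return iops * bs_kib;
}

/* Little's law: mean latency (s) = I/Os in flight / completion rate (IOPS). */
double littles_law_latency_s(unsigned numjobs, unsigned iodepth, double iops)
{
    return (double)numjobs * iodepth / iops;
}
```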

root@hpc117:~#

Building MPICH

To build MPICH, including ROMIO with the DAOS ADIO driver:

export MPI_LIB=""

# to clone the latest development snapshot:

git clone https://github.com/pmodels/mpich

cd mpich

# to clone a specific tagged version:

git clone -b v3.4.3 https://github.com/pmodels/mpich mpich-3.4.3

cd mpich-3.4.3

git submodule update --init

./autogen.sh

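mkdir build; cd build

# configure below uses $PREFIX, so set it first (same value as in the MPICH environment settings)

export PREFIX=/root/project/hpc/mpi/daos/mpich-3.4.3/install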

LD_LIBRARY_PATH=/root/project/stor/daos/install/prereq/debug/ofi/lib:/root/project/stor/daos/install/lib64 \

LDFLAGS="-Wl,-rpath -Wl,/root/project/stor/daos/install/prereq/debug/ofi/lib:/root/project/stor/daos/install/lib64" \

LIBRARY_PATH=/root/project/stor/daos/install/lib64:/root/project/stor/daos/install/prereq/debug/ofi/lib:$LIBRARY_PATH \

../configure --prefix=$PREFIX --enable-fortran=all --enable-romio \

--enable-cxx --enable-g=all --enable-debuginfo --with-device=ch3:nemesis:ofi \

--with-file-system=ufs+daos --with-daos=/root/project/stor/daos/install \

--with-ofi=/root/project/stor/daos/install/prereq/debug/ofi

make -j64 V=1 2>&1 | tee m.txt

make install 2>&1 | tee mi.txt

set mpich env:

PREFIX=/root/project/hpc/mpi/daos/mpich-3.4.3/install

export PATH="$PREFIX/bin:$PATH"

export LD_LIBRARY_PATH="$PREFIX/lib:$LD_LIBRARY_PATH"

export INCLUDE="$PREFIX/include:$INCLUDE"

Building IOR and running tests

Build script

ref: https://docs.daos.io/v2.6/testing/ior/

git clone https://github.com/hpc/ior.git

cd ior/

./bootstrap

mkdir build;cd build

../configure --with-daos=/root/project/stor/daos/install --prefix=/root/project/hpc/ior/install

make -j64 V=1 2>&1 | tee m.txt

make install 2>&1 | tee mi.txt

Reference commands:

mpirun -hostfile /path/to/hostfile_clients -np 10 /root/project/hpc/ior/install/bin/ior -a POSIX -b 5G -t 1M -v -W -w -r -R -i 1 -o /tmp/daos_dfuse/testfile

mpirun -hostfile /path/to/hostfile_clients -np 10 <your_dir>/bin/mdtest -a POSIX -z 0 -F -C -i 1 -n 3334 -e 4096 -d /tmp/daos_dfuse/ -w 4096

dmg pool create sxb -z 4g; dmg pool list --verbose

daos container create sxb --type POSIX sxb; daos container query sxb sxb --verbose; daos cont get-prop sxb sxb

mkdir -p /tmp/sxb; dfuse --mountpoint=/tmp/sxb --pool=sxb --cont=sxb; df -h

mpirun -np 10 /root/project/hpc/ior/install/bin/ior -a POSIX -b 10M -t 1M -v -W -w -r -R -i 1 -o /tmp/sxb/testfile

mpirun -np 10 /root/project/hpc/ior/install/bin/mdtest -a POSIX -z 0 -F -C -i 1 -n 3334 -e 4096 -d /tmp/sxb/ -w 4096

Execution logs:

IOR bandwidth test (sequential write and read):

root@hpc117:~/project/hpc/ior# mpirun -np 10 /root/project/hpc/ior/install/bin/ior -a POSIX -b 100M -t 1M -v -W -w -r -R -i 1 -o /tmp/sxb/testfile

IOR-4.1.0+dev: MPI Coordinated Test of Parallel I/O

Began : Tue Feb 18 20:12:23 2025

Command line : /root/project/hpc/ior/install/bin/ior -a POSIX -b 100M -t 1M -v -W -w -r -R -i 1 -o /tmp/sxb/testfile

Machine : Linux hpc117

TestID : 0

StartTime : Tue Feb 18 20:12:23 2025

Path : /tmp/sxb/testfile

FS : 3.7 GiB Used FS: 5.8% Inodes: -0.0 Mi Used Inodes: 0.0%

Options:

api : POSIX

apiVersion :

test filename : /tmp/sxb/testfile

access : single-shared-file

type : independent

segments : 1

ordering in a file : sequential

ordering inter file : no tasks offsets

nodes : 1

tasks : 10

clients per node : 10

memoryBuffer : CPU

dataAccess : CPU

GPUDirect : 0

repetitions : 1

xfersize : 1 MiB

blocksize : 100 MiB

aggregate filesize : 1000 MiB

verbose : 1

Results:

access bw(MiB/s) IOPS Latency(s) block(KiB) xfer(KiB) open(s) wr/rd(s) close(s) total(s) iter

------ --------- ---- ---------- ---------- --------- -------- -------- -------- -------- ----

write 474.49 474.62 0.021069 102400 1024.00 0.000736 2.11 0.000116 2.11 0

Verifying contents of the file(s) just written.

Tue Feb 18 20:12:25 2025

read 12187 12385 0.000806 102400 1024.00 0.001348 0.080743 0.000156 0.082052 0

remove - - - - - - - - 0.000907 0

Max Write: 474.49 MiB/sec (497.54 MB/sec)

Max Read: 12187.39 MiB/sec (12779.41 MB/sec)

Summary of all tests:

Operation Max(MiB) Min(MiB) Mean(MiB) StdDev Max(OPs) Min(OPs) Mean(OPs) StdDev Mean(s) Stonewall(s) Stonewall(MiB) Test# #Tasks tPN reps fPP reord reordoff reordrand seed segcnt blksiz xsize aggs(MiB) API RefNum

write 474.49 474.49 474.49 0.00 474.49 474.49 474.49 0.00 2.10754 NA NA 0 10 10 1 0 0 1 0 0 1 104857600 1048576 1000.0 POSIX 0

read 12187.39 12187.39 12187.39 0.00 12187.39 12187.39 12187.39 0.00 0.08205 NA NA 0 10 10 1 0 0 1 0 0 1 104857600 1048576 1000.0 POSIX 0

Finished : Tue Feb 18 20:12:26 2025

root@hpc117:~/project/hpc/ior#
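The headline numbers in this run can be cross-checked from the options. With `access : single-shared-file` and `segments : 1`, each of the 10 tasks writes one 100 MiB block in 1 MiB transfers, so the aggregate file is 10 × 100 MiB = 1000 MiB. Bandwidth is that size divided by the elapsed time. IOR also prints both MiB/s (2^20 bytes) and MB/s (10^6 bytes), which explains the 474.49 vs 497.54 pair. The short Python sketch below is an illustrative back-of-the-envelope check written for this article, not IOR code; its only inputs are values printed above. The read rate (about 25 times the write rate) is most likely served from the node's page cache, because all 10 tasks run on one node and read back data they have just written, so it should not be taken as storage bandwidth.

```python
"""Back-of-the-envelope check of the IOR run above (illustrative, not IOR code)."""

MIB = 2**20  # IOR's "MiB" is binary
MB = 10**6   # IOR's "MB" is decimal


def aggregate_size_mib(tasks: int, block_mib: float, segments: int = 1) -> float:
    """Shared-file IOR: every task writes `segments` blocks of `block_mib` MiB."""
    return tasks * segments * block_mib


def bandwidth_mib_s(size_mib: float, seconds: float) -> float:
    """Bandwidth as data moved divided by elapsed time."""
    return size_mib / seconds


def mib_s_to_mb_s(rate_mib_s: float) -> float:
    """Convert a MiB/s figure to MB/s."""
    return rate_mib_s * MIB / MB


if __name__ == "__main__":
    size = aggregate_size_mib(tasks=10, block_mib=100)  # -np 10, -b 100M, 1 segment
    # Elapsed times copied from the output above (write Mean(s), read total(s)).
    for op, secs in (("write", 2.10754), ("read", 0.082052)):
        bw = bandwidth_mib_s(size, secs)
        print(f"{op}: {bw:.2f} MiB/s ({mib_s_to_mb_s(bw):.2f} MB/s)")
```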

Metadata test (mdtest):

root@hpc117:~/project/hpc/ior# mpirun -np 10 /root/project/hpc/ior/install/bin/mdtest -a POSIX -z 0 -F -C -i 1 -n 3334 -e 4096 -d /tmp/sxb/ -w 4096

-- started at 02/18/2025 20:23:17 --

mdtest-4.1.0+dev was launched with 10 total task(s) on 1 node(s)

Command line used: /root/project/hpc/ior/install/bin/mdtest '-a' 'POSIX' '-z' '0' '-F' '-C' '-i' '1' '-n' '3334' '-e' '4096' '-d' '/tmp/sxb/' '-w' '4096'

Nodemap: 1111111111

Path : /tmp/sxb/

FS : 3.7 GiB Used FS: 3.2% Inodes: -0.0 Mi Used Inodes: 0.0%

10 tasks, 33340 files

SUMMARY rate (in ops/sec): (of 1 iterations)

Operation Max Min Mean Std Dev

--------- --- --- ---- -------

File creation 1443.988 1443.988 1443.988 0.000

File stat 0.000 0.000 0.000 0.000

File read 0.000 0.000 0.000 0.000

File removal 0.000 0.000 0.000 0.000

Tree creation 2109.811 2109.811 2109.811 0.000

Tree removal 0.000 0.000 0.000 0.000

-- finished at 02/18/2025 20:23:41 --
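The mdtest summary can be sanity-checked the same way. With `-n 3334` items per task, 10 tasks create 33,340 files (the "10 tasks, 33340 files" line), and because `-C` restricts the run to the creation phase, the stat, read and removal rows stay at 0. Dividing the file count by the creation rate gives roughly 23 seconds, which agrees with the 20:23:17 to 20:23:41 window. The sketch assumes, following the mdtest usage text, that `-w 4096` writes 4096 bytes into each newly created file; it is illustrative arithmetic only.

```python
"""Sanity check of the mdtest run above (illustrative arithmetic only)."""
from __future__ import annotations


def mdtest_totals(tasks: int, items_per_task: int, bytes_per_file: int) -> tuple[int, int]:
    """Return (total files, total bytes written) for a files-only (-F) create run."""
    total_files = tasks * items_per_task
    return total_files, total_files * bytes_per_file


def phase_seconds(total_ops: int, ops_per_sec: float) -> float:
    """Approximate duration of one mdtest phase from its reported rate."""
    return total_ops / ops_per_sec


if __name__ == "__main__":
    files, data = mdtest_totals(tasks=10, items_per_task=3334, bytes_per_file=4096)
    secs = phase_seconds(files, 1443.988)  # "File creation" rate from the summary
    print(f"{files} files, {data / 2**20:.1f} MiB written, creation phase ~ {secs:.1f} s")
```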

Papers and specifications

MPI specification

Chapter 14 I/O: Introduction

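POSIX provides a model of a widely portable file system, but the portability and optimization needed for parallel I/O cannot be achieved with the POSIX interface. The significant optimizations required for efficiency (e.g., grouping [55], collective buffering [9, 17, 56, 60, 67], and disk-directed I/O [50]) can only be implemented if the parallel I/O system provides a high-level interface supporting partitioning of file data among processes and a collective interface supporting complete transfers of global data structures between process memories and files. In addition, further efficiencies can be gained via support for asynchronous I/O, strided accesses, and control over physical file layout on storage devices (disks). The I/O environment described in this chapter provides these facilities. Instead of defining I/O access modes to express the common patterns for accessing a shared file (broadcast, reduction, scatter, gather), we chose another approach in which data partitioning is expressed using derived datatypes. Compared to a limited set of predefined access patterns, this approach has the advantage of added flexibility and expressiveness.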

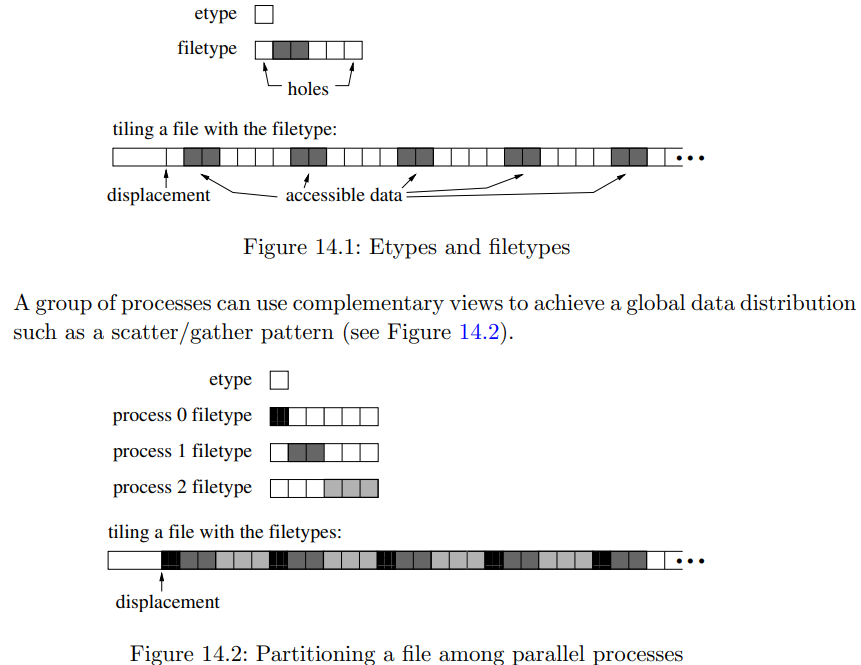

14.1.1 Definitions

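file: An MPI file is an ordered collection of typed data items. MPI supports random or sequential access to any integral set of these items. A file is opened collectively by a group of processes. All collective I/O calls on a file are collective over this group.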

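displacement: A file displacement is an absolute byte position relative to the beginning of a file. The displacement defines the location where a view begins. Note that a "file displacement" is distinct from a "typemap displacement".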

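etype: An etype (elementary datatype) is the unit of data access and positioning. It can be any MPI predefined or derived datatype. Derived etypes can be constructed using any of the MPI datatype constructor routines, provided all resulting typemap displacements are non-negative and monotonically non-decreasing. Data access is performed in etype units, reading or writing whole data items of type etype. Offsets are expressed as a count of etypes; file pointers point to the beginning of etypes. Depending on context, the term "etype" is used to describe one of three aspects of an elementary datatype: a particular MPI type, a data item of that type, or the extent of that type.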

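filetype: A filetype is the basis for partitioning a file among processes and defines a template for accessing the file. A filetype is either a single etype or a derived MPI datatype constructed from multiple instances of the same etype. In addition, the extent of any hole in the filetype must be a multiple of the etype's extent. The displacements in the typemap of the filetype are not required to be distinct, but they must be non-negative and monotonically non-decreasing.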
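The etype and filetype rules can be stated as a small check. The sketch below models a filetype as the list of byte displacements of its etype instances plus an optional explicit upper bound (the lower bound is taken as 0). This representation and the function name `is_valid_filetype` are hypothetical, chosen only to illustrate the rules quoted above; it is not an MPI API, and it treats only positive gaps as holes, since overlapping (non-distinct) displacements are allowed.

```python
def is_valid_filetype(entry_disps, etype_extent, ub=None):
    """Simplified check of the MPI filetype rules (hypothetical helper).

    entry_disps:  byte displacement of each etype instance in the filetype typemap.
    etype_extent: extent of the etype in bytes.
    ub:           optional explicit upper bound of the filetype in bytes.
    """
    if not entry_disps:
        return False
    covered_to = 0  # first byte after the data seen so far (lower bound assumed 0)
    prev = 0
    for d in entry_disps:
        if d < 0 or d < prev:
            return False  # displacements must be non-negative and non-decreasing
        hole = d - covered_to
        if hole > 0 and hole % etype_extent:
            return False  # a hole must span a whole number of etypes
        covered_to = max(covered_to, d + etype_extent)
        prev = d
    if ub is not None:
        trailing = ub - covered_to
        if trailing < 0 or trailing % etype_extent:
            return False  # the trailing hole set by the upper bound obeys the same rule
    return True


if __name__ == "__main__":
    # 4-byte etypes: a 1-etype hole, 2 data etypes, then a 3-etype trailing hole.
    print(is_valid_filetype([4, 8], etype_extent=4, ub=24))  # True
    print(is_valid_filetype([0, 6], etype_extent=4))          # False: 2-byte hole
    print(is_valid_filetype([8, 4], etype_extent=4))          # False: decreasing
```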

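view: A view defines the current set of data visible and accessible from an open file as an ordered set of etypes. Each process has its own view of the file, defined by three quantities: a displacement, an etype, and a filetype. The pattern described by a filetype is repeated, beginning at the displacement, to define the view. The pattern of repetition is defined to be the same pattern that MPI_TYPE_CONTIGUOUS would produce if it were passed the filetype and an arbitrarily large count. Figure 14.1 shows how the tiling works; note that the filetype in this example must have explicit lower and upper bounds set in order for the initial and final holes to be repeated in the view. Views can be changed by the user during program execution. The default view is a linear byte stream (displacement is zero, etype and filetype equal to MPI_BYTE).

(Figure 14.2: partitioning a file among parallel processes.)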

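offset: An offset is a position in the file relative to the current view, expressed as a count of etypes. Holes in the view's filetype are skipped when calculating this position. Offset 0 is the location of the first etype visible in the view (after skipping the displacement and any initial holes in the view). For example, an offset of 2 for process 1 in Figure 14.2 is the position of the eighth etype in the file after the displacement. An "explicit offset" is an offset that is used as an argument in explicit data access routines.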

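file size and end of file: The size of an MPI file is measured in bytes from the beginning of the file. A newly created file has a size of zero bytes. Using the size as an absolute displacement gives the position of the byte immediately following the last byte in the file. For any given view, the end of file is the offset of the first etype accessible in the current view starting after the last byte in the file.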
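The view, offset and end-of-file definitions amount to a simple mapping from a view offset (counted in etypes) to an absolute file position. The sketch below models a filetype as a list of booleans, one per etype slot of its extent (True = data, False = hole), tiles it from the displacement as MPI_TYPE_CONTIGUOUS with a large count would, and skips the holes. The layout used for process 1 (one hole, two data etypes, three holes in a six-etype tile shared by three processes) is an assumption modeled on Figure 14.2, as are the 8-byte etype, 64-byte displacement and 200-byte file size; with that layout, offset 2 lands on the eighth etype after the displacement, matching the example above.

```python
from __future__ import annotations


def view_offset_to_etype_index(offset: int, filetype: list[bool]) -> int:
    """Map a view offset to an etype index counted from the displacement.

    filetype has one entry per etype slot of its extent (True = data, False = hole);
    the pattern is tiled end to end, so holes in every tile are skipped.
    """
    data_slots = [i for i, is_data in enumerate(filetype) if is_data]
    if not data_slots:
        raise ValueError("filetype must contain at least one data etype")
    tile, k = divmod(offset, len(data_slots))
    return tile * len(filetype) + data_slots[k]


def view_offset_to_byte(offset: int, filetype: list[bool], disp: int, etype_size: int) -> int:
    """Absolute byte position = displacement + etype index * etype extent."""
    return disp + view_offset_to_etype_index(offset, filetype) * etype_size


def end_of_file_offset(file_size: int, filetype: list[bool], disp: int, etype_size: int) -> int:
    """Offset of the first visible etype that starts at or after the end of the file."""
    offset = 0
    while view_offset_to_byte(offset, filetype, disp, etype_size) < file_size:
        offset += 1
    return offset


if __name__ == "__main__":
    # Hypothetical layout modeled on Figure 14.2: in each 6-etype tile, process 0
    # owns slot 0, process 1 owns slots 1-2 and process 2 owns slots 3-5.
    proc1 = [False, True, True, False, False, False]
    idx = view_offset_to_etype_index(2, proc1)
    print(f"process 1, offset 2 -> etype #{idx + 1} after the displacement")
    print("byte position:", view_offset_to_byte(2, proc1, disp=64, etype_size=8))
    print("end of file:", end_of_file_offset(200, proc1, disp=64, etype_size=8))
```

With the default view (a single-byte filetype of `[True]`, displacement 0), offsets and byte positions coincide and the end-of-file offset equals the file size.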

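file pointer: A file pointer is an implicit offset maintained by MPI. "Individual file pointers" are file pointers that are local to each process that opened the file. A "shared file pointer" is a file pointer that is shared by the group of processes that opened the file.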
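The difference between the two kinds of file pointer can be shown with a toy, single-address-space model: individual pointers are one private counter per process, while a shared pointer behaves like one counter updated by an atomic fetch-and-add, so concurrent accesses receive disjoint offsets. The class names are hypothetical and counts are in etypes; a real MPI implementation has to coordinate the shared pointer across separate processes, which this sketch does not attempt.

```python
import threading


class IndividualFilePointers:
    """One private offset per process: each rank advances only its own pointer."""

    def __init__(self, nprocs: int) -> None:
        self.offsets = [0] * nprocs

    def access(self, rank: int, count: int) -> int:
        start = self.offsets[rank]
        self.offsets[rank] += count
        return start


class SharedFilePointer:
    """One offset for the whole group; each access is an atomic fetch-and-add."""

    def __init__(self) -> None:
        self._offset = 0
        self._lock = threading.Lock()

    def access(self, count: int) -> int:
        with self._lock:
            start = self._offset
            self._offset += count
            return start


if __name__ == "__main__":
    ind = IndividualFilePointers(nprocs=2)
    print("individual:", ind.access(0, 3), ind.access(1, 3))  # both ranks start at 0

    shared = SharedFilePointer()
    starts = []

    def worker() -> None:
        for _ in range(100):
            starts.append(shared.access(1))

    threads = [threading.Thread(target=worker) for _ in range(4)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    print("shared: distinct offsets", len(set(starts)), "of", len(starts))
```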

file handle: A file handle is an opaque object created by MPI_FILE_OPEN and freed by MPI_FILE_CLOSE. All operations on an open file reference the file through the file handle.

Optimizing Noncontiguous Accesses in MPI-IO

Rajeev Thakur, William Gropp, Ewing Lusk. Mathematics and Computer Science Division, Argonne National Laboratory, 9700 S. Cass Avenue, Argonne, IL 60439, US

Abstract:

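The I/O access patterns of many parallel applications consist of accesses to a large number of small, noncontiguous pieces of data. If an application's I/O needs are met by making many small, distinct I/O requests, however, the I/O performance degrades drastically. To avoid this problem, MPI-IO allows users to access noncontiguous data with a single I/O function call, unlike in Unix I/O. In this paper, we explain how critical this feature of MPI-IO is for high performance and how it enables implementations to perform optimizations. We first provide a classification of the different ways of expressing an application's I/O needs in MPI-IO; we classify them into four levels, called level 0 through level 3. We demonstrate that, for applications with noncontiguous access patterns, the I/O performance improves significantly if users write the application such that it makes level-3 (noncontiguous, collective) requests rather than level-0 (Unix-style) requests. We then describe how our MPI-IO implementation, ROMIO, delivers high performance for noncontiguous requests. We explain in detail the two key optimizations ROMIO performs: data sieving for noncontiguous requests from one process and collective I/O for noncontiguous requests from multiple processes. We describe how we have implemented these optimizations portably on multiple machines and file systems, controlled their memory requirements, and also achieved high performance. We demonstrate the performance and portability with performance results for three applications, an astrophysics-application template (DIST3D), the NAS BTIO benchmark, and an unstructured code (UNSTRUC), on five different parallel machines: HP Exemplar, IBM SP, Intel Paragon, NEC SX-4, and SGI Origin2000.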
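Data sieving, the first of the two ROMIO optimizations named above, replaces many small reads with one large contiguous read that covers all requested pieces, and then copies the wanted pieces out of that buffer in memory. The sketch below is a minimal illustration, not ROMIO code: it assumes a seekable binary file object and a list of (offset, length) pieces, and it models ROMIO's bounded sieving buffer (configurable in ROMIO through the `ind_rd_buffer_size` hint) as a plain `max_buffer` parameter.

```python
import io


def sieved_read(f, requests, max_buffer=4 * 2**20):
    """Data-sieving read of (offset, length) pieces from a seekable binary file.

    Nearby pieces are grouped into windows spanning at most max_buffer bytes (a
    single piece larger than that gets a window of its own). Each window is fetched
    with ONE contiguous read, gaps included, and the wanted bytes are copied out of
    the buffer. Returns (pieces in offset order, number of read calls issued).
    """
    reqs = sorted(requests)
    pieces, reads, i = [], 0, 0
    while i < len(reqs):
        start, j = reqs[i][0], i
        # Grow the window while the next piece still ends within max_buffer bytes.
        while j + 1 < len(reqs) and reqs[j + 1][0] + reqs[j + 1][1] - start <= max_buffer:
            j += 1
        end = max(off + n for off, n in reqs[i:j + 1])
        f.seek(start)
        buf = f.read(end - start)  # one large read instead of several small ones
        reads += 1
        pieces.extend(buf[off - start:off - start + n] for off, n in reqs[i:j + 1])
        i = j + 1
    return pieces, reads


if __name__ == "__main__":
    data = bytes(range(256)) * 4
    wanted = [(0, 4), (100, 4), (200, 4)]  # three small noncontiguous pieces
    print(sieved_read(io.BytesIO(data), wanted)[1])                  # 1 read call
    print(sieved_read(io.BytesIO(data), wanted, max_buffer=150)[1])  # 2 read calls
```

A write version would have to read the whole window, modify the wanted pieces and write the window back while holding a file lock, so that bytes in the gaps owned by other processes are not overwritten.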

Conclusions:

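The results in the previous section demonstrate that MPI-IO can provide good I/O performance to applications. To achieve high performance with MPI-IO, however, users must use some of MPI-IO's advanced features, particularly noncontiguous accesses and collective I/O. By making level-3 MPI-IO requests (noncontiguous, collective), we achieved I/O bandwidths of hundreds of Mbytes/sec, whereas with level-0 requests (Unix style) we achieved less than 15 Mbytes/sec even on high-performance file systems. For level-3 requests, the bandwidth achieved is limited only by the I/O capability of the machine and the underlying file system. We believe that performance improvements from level-3 requests can be expected for applications other than those considered in this paper as well. We described in detail the optimizations ROMIO performs for noncontiguous requests: data sieving and collective I/O. We noted that these optimizations must be implemented carefully to minimize the overhead of buffer copying and interprocess communication; otherwise, this overhead can severely hurt performance. To perform these optimizations, an MPI-IO implementation needs some amount of temporary buffer space, which reduces the total memory available to the application. The optimizations can, however, be performed with a constant amount of buffer space that does not grow with the size of the user's request. Our results show that by allowing the MPI-IO implementation to use as little as 4 Mbytes of buffer space per process (a small amount on today's high-performance machines), users can obtain substantial I/O performance gains. We note that the MPI-IO standard does not require an implementation to perform any of these optimizations. However, even if an implementation performs no optimization and instead translates level-3 requests into several level-0 requests to the file system, the performance will be no worse than if the user made level-0 requests. Therefore, there is no reason not to use level-3 requests (or level-2 requests where level-3 requests are not possible).

Figure 12: Performance of UNSTRUC. Level-0/1 results are not available for this application because they would take an inordinate amount of time, given the small granularity of each request. On the IBM SP, because the PIOFS file system has no file locking, ROMIO translates level-2 writes into level-0 writes, which are very slow in this case; results for level-2 writes on the SP are therefore not shown.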
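Collective I/O in ROMIO is based on two-phase I/O: the processes agree on the overall byte range a collective request touches, split it into contiguous file domains (one per aggregator), and each aggregator accesses its domain in chunks no larger than its collective buffer (sized in ROMIO by the `cb_buffer_size` hint), exchanging data with the other processes in a communication phase. This is what keeps buffer space constant regardless of request size. The sketch below covers only the planning step; the function names are hypothetical, and the even split of the range is a simplifying assumption, since ROMIO's real partitioning can also align domains to file system boundaries.

```python
from collections import defaultdict


def file_domains(accesses, n_aggr):
    """Split the byte range touched by all ranks into n_aggr contiguous domains."""
    lo = min(off for reqs in accesses.values() for off, _ in reqs)
    hi = max(off + n for reqs in accesses.values() for off, n in reqs)
    size = -(-(hi - lo) // n_aggr)  # ceiling division
    return [(lo + a * size, min(lo + (a + 1) * size, hi))
            for a in range(n_aggr) if lo + a * size < hi]


def plan_two_phase(accesses, n_aggr, cb_buffer):
    """For each aggregator, list its buffer-sized chunks and, per chunk, which
    (offset, length) pieces each rank exchanges with that aggregator."""
    plan = {}
    for agg, (d_lo, d_hi) in enumerate(file_domains(accesses, n_aggr)):
        chunks = []
        for c_lo in range(d_lo, d_hi, cb_buffer):
            c_hi = min(c_lo + cb_buffer, d_hi)
            owners = defaultdict(list)
            for rank, reqs in accesses.items():
                for off, n in reqs:
                    s, e = max(off, c_lo), min(off + n, c_hi)
                    if s < e:  # this piece overlaps the current chunk
                        owners[rank].append((s, e - s))
            chunks.append((c_lo, c_hi, dict(owners)))  # one contiguous file access each
        plan[agg] = chunks
    return plan


if __name__ == "__main__":
    # Two ranks with interleaved 10-byte pieces (a level-3 style noncontiguous access).
    acc = {0: [(0, 10), (20, 10), (40, 10), (60, 10)],
           1: [(10, 10), (30, 10), (50, 10), (70, 10)]}
    for agg, chunks in plan_two_phase(acc, n_aggr=2, cb_buffer=20).items():
        for lo, hi, owners in chunks:
            print(f"aggregator {agg}: file bytes [{lo}, {hi}) <- {owners}")
```

Each rank issues eight small noncontiguous pieces in total, but the file system only sees four contiguous 20-byte accesses, and no aggregator ever holds more than `cb_buffer` bytes.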

Extending the POSIX I/O Interface: A Parallel File System Perspective

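The POSIX interface does not lend itself well to enabling good performance for high-end applications. Extensions are needed in the POSIX I/O interface so that high-concurrency HPC applications running on top of parallel file systems perform well. This paper presents the rationale, design, and evaluation of a reference implementation of a subset of the POSIX I/O interfaces on a widely used parallel file system (PVFS) on clusters. Experimental results on a set of microbenchmarks confirm that the extensions to the POSIX interface greatly improve scalability and performance.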

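Parallel file systems are typically tuned for bulk data operations (bandwidth) and do not expose interfaces that facilitate bulk metadata operations (latency). The lack of the latter can have serious performance implications (especially in the absence of client-side metadata caching, or when the metadata cache hit rate is low), both for ubiquitous interactive tools such as "ls" and for backup software that traverses the entire namespace (or a subset of it) [10]. Tools such as these typically read all entries of a directory tree and repeatedly call stat to retrieve the attributes of each entry. Although client-side name and attribute caches may filter out a large number of accesses (lookup and getattr messages) before they reach the servers, the servers may still be overwhelmed, resulting in significant degradation of I/O performance. Bulk metadata interfaces are therefore needed to reduce the overhead on the servers. As mentioned earlier, the proposed getdents plus system call returns not only the specified number of directory entries but also, where possible, their attributes (similar to NFSv3 readdirplus).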
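The cost argument for a readdirplus-style call can be made concrete by counting client-to-server requests. The model below is a hypothetical simplification, not PVFS code: each directory read returns up to `batch` entries, nothing is cached on the client, and without bulk attributes every entry needs its own getattr (the readdir-plus-stat pattern of `ls -l` or a backup tool).

```python
def metadata_requests(n_entries: int, batch: int, bulk_attrs: bool) -> int:
    """Requests needed to list a directory and obtain every entry's attributes.

    Illustrative model: directory reads return up to `batch` entries each; with
    bulk_attrs the attributes come back with each batch, otherwise every entry
    costs one extra getattr round trip.
    """
    dir_reads = max(1, -(-n_entries // batch))  # at least one read, even if empty
    return dir_reads if bulk_attrs else dir_reads + n_entries


if __name__ == "__main__":
    for n in (100, 10_000, 1_000_000):
        plain = metadata_requests(n, batch=100, bulk_attrs=False)
        plus = metadata_requests(n, batch=100, bulk_attrs=True)
        print(f"{n:>9} entries: readdir+stat {plain:>9} requests, readdirplus-style {plus:>6}")
```

Under this model the per-entry getattr term dominates, so the request count scales with the number of entries rather than with the number of batches.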

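Noncontiguous read/write interfaces (readx, writex): This class of system calls generalizes file-vector-to-memory-vector data transfers. The existing vector system calls (readv, writev) specify a memory vector (a list of offset, length pairs) and initiate I/O to a contiguous portion of a file. The proposed system calls (readx, writex) read/write a strided vector of memory from/to strided offsets in the file. The specified regions may be processed in any order. Although these system calls resemble the POSIX listio interface in that they read/write noncontiguous regions of a file, they eliminate many of listio's shortcomings. The listio interface imposes a one-to-one correspondence between the sizes specified in the memory vector and the file regions, and requires the memory and file vectors to have the same number of elements. In addition, the readx/writex interfaces specify that the implementation is free to perform any reordering, aggregation, or other optimization for efficient I/O completion.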
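The difference from readv and listio is easiest to see by emulating readx on top of ordinary POSIX calls. readx is a research interface proposed for PVFS, not a standard Linux system call, so the sketch below is a hypothetical user-space emulation (`readx_emulated` is an illustrative name). It takes a memory vector (a list of buffer sizes) and a file vector of (offset, length) regions whose total sizes match but whose element counts may differ, which is exactly the one-to-one restriction that listio imposes and readx lifts. It uses `os.pread`, available on Unix, so the file offset is never moved.

```python
import os
import tempfile


def readx_emulated(fd, mem_sizes, file_regions):
    """User-space emulation of a readx-style call (hypothetical helper).

    mem_sizes:    sizes of the destination memory buffers (the memory vector).
    file_regions: (offset, length) pairs in the file (the file vector).
    Only the total byte counts must match; the two vectors may have different
    numbers of elements.
    """
    if sum(mem_sizes) != sum(n for _, n in file_regions):
        raise ValueError("memory and file vectors must describe the same number of bytes")
    stream = bytearray()
    # Gather: read the file regions in file-vector order. A real implementation may
    # reorder or merge these reads as long as it preserves this byte mapping.
    for off, n in file_regions:
        chunk = os.pread(fd, n, off)
        if len(chunk) != n:
            raise EOFError(f"short read at offset {off}")
        stream += chunk
    # Scatter: fill the memory vector in order.
    buffers, pos = [], 0
    for size in mem_sizes:
        buffers.append(bytearray(stream[pos:pos + size]))
        pos += size
    return buffers


if __name__ == "__main__":
    with tempfile.TemporaryFile() as tf:
        tf.write(bytes(range(100)))
        tf.flush()
        # 3 strided file regions (8 bytes in total) land in 2 memory buffers.
        print(readx_emulated(tf.fileno(), [3, 5], [(10, 2), (20, 2), (30, 4)]))
```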

Conclusions

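In this paper, we presented the rationale, design, and reference implementation of a subset of POSIX I/O system call interface extensions for a commonly used cluster parallel file system. These interface extensions address key performance bottlenecks (metadata and data) that hinder the scaling of parallel and distributed file systems. Performance results of our prototype system using microbenchmarks on a medium-sized cluster show that these extensions meet the stated goals of improving performance and scalability. The interface extensions presented in this paper are by no means complete, nor are they expected to be the sole enabler of performance and scalability. We expect that optimizations will be needed at all levels of the file system and storage stack for high-end scientific applications to scale. We also expect that parallel file systems running on current and next-generation high-end machines (such as IBM Blue Gene/L and Blue Gene/P) may need newer system call interfaces. Such machines present a set of requirements and challenges not found in cluster environments and may require revisiting the POSIX I/O interfaces and wire-protocol requests. For example, forwarding I/O from designated nodes on behalf of a group of compute nodes on BG/L machines requires interfaces for aggregating I/O and metadata requests on such nodes, as well as compound operations. Addressing these issues is beyond the scope of this paper and will be part of future research. Although these interfaces can be used directly by user programs and utilities, we expect their biggest users to be middleware and high-level I/O libraries. In particular, we expect that the MPICH2 ROMIO library (which implements the MPI I/O specification) can use the proposed group open system call and the noncontiguous I/O extensions to implement scalable collective file opens. Source code, kernel patch documentation, PVFS2 file system hooks, test programs, and installation instructions are available at [2].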

Frequently asked questions (FAQ)

- Node oversubscription

References

HPC:

What is high-performance computing (HPC): https://mp.weixin.qq.com/s/97OF972JwIQQWFOeFKcPNw

IO500: https://github.com/IO500/io500

NVIDIA: MPI message-passing protocols and hardware offload (tag matching): https://mp.weixin.qq.com/s/Ex16pWjTMDgX39XAJkjgbg

Nvidia HPC-X: https://developer.nvidia.com/networking/hpc-x

AWS_EFA: https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/efa.html

Intel benchmarks: https://www.cnblogs.com/liu-shaobo/p/13417891.html

HPC scenario testing and common tools: https://asterfusion.com/blog20241021-hpc/?srsltid=AfmBOoqlPK8w69DyDFscriKlM34l2LMGVDYvKtXTJzs3Wt8MLGgXj8cE

Intel MPI benchmark: https://github.com/intel/mpi-benchmarks

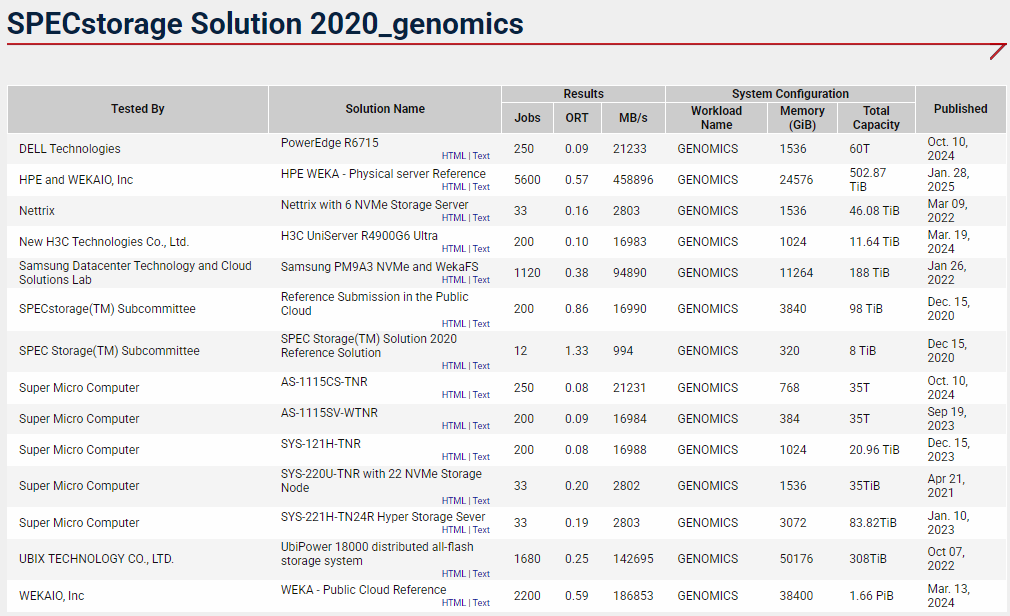

SPEC performance test reports: https://www.spec.org/storage2020/results/genomics/

HPC Works space: https://hpcadvisorycouncil.atlassian.net/wiki/spaces/HPCWORKS/overview?homepageId=7995578

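The HPC Works space gives HPC users a view of everything involved in building an HPC cluster and tuning it for best performance. It provides guides for installing applications on HPC clusters, covering the required software components, how to build them, and how to run simple benchmarks. The HPC Advisory Council operates a high-performance center that offers an environment for developing, testing, benchmarking, and optimizing applications (see "Get cluster access").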

Common MPI links:

MPI standard: http://www.mpi-forum.org/docs/docs.html

MPI Forum: http://www.mpi-forum.org/

MPI implementations:

- MPICH: http://www.mpich.org
- MVAPICH: http://mvapich.cse.ohio-state.edu/
- Intel MPI: http://software.intel.com/en-us/intel-mpi-library/
- Microsoft MPI: www.microsoft.com/en-us/download/details.aspx?id=39961
- Open MPI: http://www.open-mpi.org/
- IBM MPI, Cray MPI, HP MPI, TH MPI, …

MPI standard (mpi_41_spec_消息传递接口标准.pdf)

MPI Forum documents and standards: https://www.mpi-forum.org/docs/

Optimized All-to-all Connection Establishment for High-Performance MPI Libraries over InfiniBand.pdf

MPI_WIKI.pdf

OpenMPI documentation: https://docs.open-mpi.org/en/main/; network support: https://docs.open-mpi.org/en/main/release-notes/networks.html

MPI tutorials (Google search): https://www.google.com/search?q=MPI+tutorial

MPI tutorial: https://mpitutorial.com/tutorials/

NVIDIA NCCL collective operations: https://docs.nvidia.com/deeplearning/nccl/user-guide/docs/usage/collectives.html

AlltoAll VS Allgather: https://stackoverflow.com/questions/15049190/difference-between-mpi-allgather-and-mpi-alltoall-functions

OpenMPI:

OpenMPI FAQ: https://www-lb.open-mpi.org/faq/, https://www.open-mpi.org/faq/, https://github.com/open-mpi/ompi/issues

OpenMPI mpirun options explained: https://docs.open-mpi.org/en/main/man-openmpi/man1/mpirun.1.html

OpenMPI networking: https://docs.open-mpi.org/en/main/tuning-apps/networking/index.html

MPICH:

MPICH official repository and build guide: https://github.com/pmodels/mpich

MPICH official documentation: http://www.mpich.org/

MPICH developer guide: https://github.com/pmodels/mpich/blob/main/doc/wiki/developer_guide.md

Books and papers: https://www.mpich.org/publications/

UCX:

Unified Communication X (UCX): high-performance portable network acceleration, a UCX introductory tutorial (HOTI 2022): https://cloud.tencent.com/developer/article/2337395

NVIDIA UCX and OpenMPI: https://docs.nvidia.com/networking/display/hpcxv212/unified+communication+-+x+framework+library, https://forums.developer.nvidia.com/t/building-openmpi-with-ucx-general-advice/205921

IOR:

IOR wiki: https://wiki.lustre.org/IOR

IOR user manual: https://ior.readthedocs.io/en/latest/intro.html, https://github.com/hpc/ior/blob/main/doc/USER_GUIDE

IOR repository: https://github.com/hpc/ior

IOR support in DAOS: https://daosio.atlassian.net/wiki/spaces/DC/pages/4843634915/IOR+-+with+MPIIO, https://github.com/hpc/ior/blob/main/README_DAOS

Running IOR and mdtest on DAOS: https://docs.daos.io/latest/testing/ior/

DAOS MPI-IO testing: https://docs.daos.io/v2.6/user/mpi-io/

Libfabric:

Libfabric user manual: https://ofiwg.github.io/libfabric/v1.22.0/man/; endpoint types: https://ofiwg.github.io/libfabric/v1.22.0/man/fi_endpoint.3.html

Libfabric MPI: https://www.youtube.com/watch?v=Sde8Vc8dZcM

Libfabric fabtests: https://github.com/ofiwg/libfabric/tree/main/fabtests, https://github.com/ofiwg/libfabric/blob/main/fabtests/man/fabtests.7.md

Intel® MPI Library over Libfabric*: https://www.intel.com/content/www/us/en/developer/articles/technical/mpi-library-2019-over-libfabric.html

Intel Open Fabrics Interfaces (OFI, libfabric) introductory tutorial (RDMA, verbs, GPU, AI, DMA, networking, HOTI, interconnects, HPC; Dr. Panda): https://cloud.tencent.com/developer/article/2334882

Introduction to OpenFabrics Interfaces: a new network API for maximizing the efficiency of high-performance applications (translation): https://cloud.tencent.com/developer/article/2329772

References for running MPI over Libfabric: https://github.com/open-mpi/ompi/issues/9876, https://docs.open-mpi.org/en/main/tuning-apps/networking/ofi.html

DAOS:

dfuse documentation: https://docs.daos.io/v2.6/user/filesystem/#dfuse-daos-fuse

Recommended books:

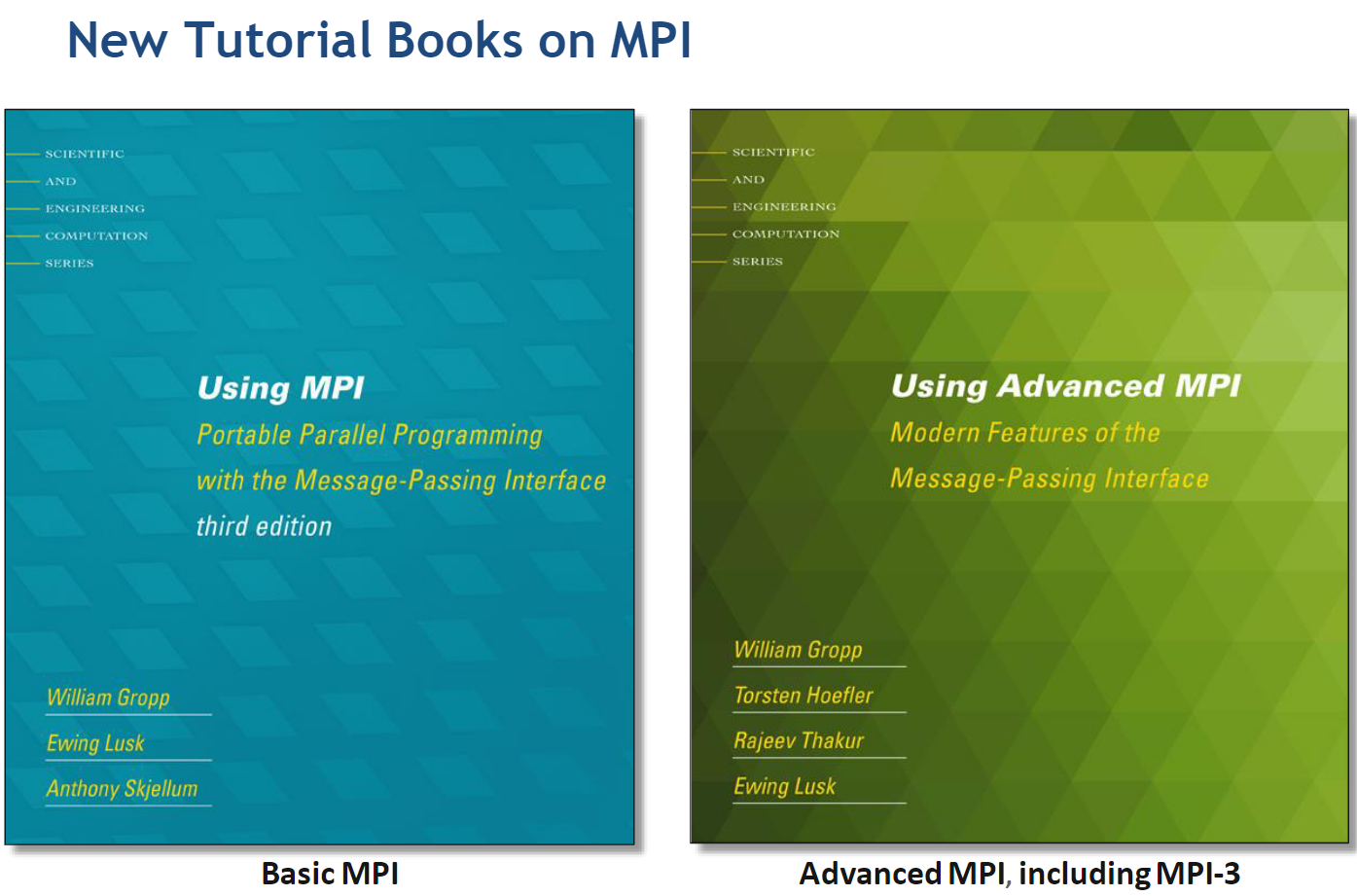

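Using MPI-2: Advanced Features of the Message-Passing Interface (MIT Press): https://wgropp.cs.illinois.edu/usingmpiweb/. The linked site covers two books, published in 2014, on writing parallel programs with MPI (the Message Passing Interface). Using MPI, now in its third edition, explains how to use MPI, with code examples for the parallel computations needed to simulate partial differential equations and n-body problems. Using Advanced MPI covers additional MPI features, including parallel I/O, one-sided (remote memory access) communication, and the use of threads and shared memory with MPI.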

NCCL:

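NCCL: accelerating multi-GPU collective communication (NCCL communication primitives, how they work, and how they accelerate GPU communication)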

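Original content statement: this article is published on the Tencent Cloud Developer Community with the author's authorization and may not be reproduced without permission.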

In case of infringement, please contact cloudcommunity@tencent.com for removal.
