hive beeline基本命令详解

Beeline 是一个 Hive 客户端,使用 JDBC 连接到 HiveServer2,是集群上的服务。可以在集群上执行 Beeline 命令获取查询结果,而无需进入 hive 数据库。使用 beeline 命令时,需要首先启动 metastore 元数据服务和 hiveserver2 服务,其应用场景广泛,比如:优化查询结果展示效果、告警监控、查询结果导出等。

下面介绍一下 Beeline 命令使用时可以添加的参数,用以实现各种个性化需求。

使用 beeline --help 命令可以显示 beeline 的帮助

[omc@hadoop102 hive]$ beeline --help

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/module/hive/lib/log4j-slf4j-impl-2.10.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/module/hadoop-3.1.3/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Usage: java org.apache.hive.cli.beeline.BeeLine

-u <database url> the JDBC URL to connect to

-c <named url> the named JDBC URL to connect to,

which should be present in beeline-site.xml

as the value of beeline.hs2.jdbc.url.<namedUrl>

-r reconnect to last saved connect url (in conjunction with !save)

-n <username> the username to connect as

-p <password> the password to connect as

-d <driver class> the driver class to use

-i <init file> script file for initialization

-e <query> query that should be executed

-f <exec file> script file that should be executed

-w (or) --password-file <password file> the password file to read password from

--hiveconf property=value Use value for given property

--hivevar name=value hive variable name and value

This is Hive specific settings in which variables

can be set at session level and referenced in Hive

commands or queries.

--property-file=<property-file> the file to read connection properties (url, driver, user, password) from

--color=[true/false] control whether color is used for display

--showHeader=[true/false] show column names in query results

--escapeCRLF=[true/false] show carriage return and line feeds in query results as escaped \r and \n

--headerInterval=ROWS; the interval between which heades are displayed

--fastConnect=[true/false] skip building table/column list for tab-completion

--autoCommit=[true/false] enable/disable automatic transaction commit

--verbose=[true/false] show verbose error messages and debug info

--showWarnings=[true/false] display connection warnings

--showDbInPrompt=[true/false] display the current database name in the prompt

--showNestedErrs=[true/false] display nested errors

--numberFormat=[pattern] format numbers using DecimalFormat pattern

--force=[true/false] continue running script even after errors

--maxWidth=MAXWIDTH the maximum width of the terminal

--maxColumnWidth=MAXCOLWIDTH the maximum width to use when displaying columns

--silent=[true/false] be more silent

--autosave=[true/false] automatically save preferences

--outputformat=[table/vertical/csv2/tsv2/dsv/csv/tsv] format mode for result display

Note that csv, and tsv are deprecated - use csv2, tsv2 instead

--incremental=[true/false] Defaults to false. When set to false, the entire result set

is fetched and buffered before being displayed, yielding optimal

display column sizing. When set to true, result rows are displayed

immediately as they are fetched, yielding lower latency and

memory usage at the price of extra display column padding.

Setting --incremental=true is recommended if you encounter an OutOfMemory

on the client side (due to the fetched result set size being large).

Only applicable if --outputformat=table.

--incrementalBufferRows=NUMROWS the number of rows to buffer when printing rows on stdout,

defaults to 1000; only applicable if --incremental=true

and --outputformat=table

--truncateTable=[true/false] truncate table column when it exceeds length

--delimiterForDSV=DELIMITER specify the delimiter for delimiter-separated values output format (default: |)

--isolation=LEVEL set the transaction isolation level

--nullemptystring=[true/false] set to true to get historic behavior of printing null as empty string

--maxHistoryRows=MAXHISTORYROWS The maximum number of rows to store beeline history.

--delimiter=DELIMITER set the query delimiter; multi-char delimiters are allowed, but quotation

marks, slashes, and -- are not allowed; defaults to ;

--convertBinaryArrayToString=[true/false] display binary column data as string or as byte array

--help display this message

Example:

1. Connect using simple authentication to HiveServer2 on localhost:10000

$ beeline -u jdbc:hive2://localhost:10000 username password

2. Connect using simple authentication to HiveServer2 on hs.local:10000 using -n for username and -p for password

$ beeline -n username -p password -u jdbc:hive2://hs2.local:10012

3. Connect using Kerberos authentication with hive/localhost@mydomain.com as HiveServer2 principal

$ beeline -u "jdbc:hive2://hs2.local:10013/default;principal=hive/localhost@mydomain.com"

4. Connect using SSL connection to HiveServer2 on localhost at 10000

$ beeline "jdbc:hive2://localhost:10000/default;ssl=true;sslTrustStore=/usr/local/truststore;trustStorePassword=mytruststorepassword"

5. Connect using LDAP authentication

$ beeline -u jdbc:hive2://hs2.local:10013/default <ldap-username> <ldap-password>

要连接 beeline 首先要指定数据库地址和用户名

-u---加载一个JDBC连接字符串:

beeline -u db_URL

-n---加载一个用户名:

beeline -n valid_user

类似这样:

[omc@hadoop102 hive]$ beeline -u jdbc:hive2://hadoop102:10000 -n omc

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/module/hive/lib/log4j-slf4j-impl-2.10.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/module/hadoop-3.1.3/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Connecting to jdbc:hive2://hadoop102:10000

Connected to: Apache Hive (version 3.1.2)

Driver: Hive JDBC (version 3.1.2)

Transaction isolation: TRANSACTION_REPEATABLE_READ

Beeline version 3.1.2 by Apache Hive

0: jdbc:hive2://hadoop102:10000>

-e---使用一个查询语句:

beeline -e "query_string"

可以直接执行一个 sql 语句,类似这样

[omc@hadoop102 bin]$ beeline -u jdbc:hive2://hadoop102:10000 -n omc -e 'select * from ods.test;'

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/module/hive/lib/log4j-slf4j-impl-2.10.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/module/hadoop-3.1.3/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Connecting to jdbc:hive2://hadoop102:10000

Connected to: Apache Hive (version 3.1.2)

Driver: Hive JDBC (version 3.1.2)

Transaction isolation: TRANSACTION_REPEATABLE_READ

INFO : Compiling command(queryId=omc_20220828141355_270f04a7-1b18-4e81-80b4-eaa620d56c07): select * from ods.test

INFO : Concurrency mode is disabled, not creating a lock manager

INFO : Semantic Analysis Completed (retrial = false)

INFO : Returning Hive schema: Schema(fieldSchemas:[FieldSchema(name:test.id, type:int, comment:null), FieldSchema(name:test.name, type:string, comment:null), FieldSchema(name:test.age, type:int, comment:null), FieldSchema(name:test.phonenumber, type:string, comment:null), FieldSchema(name:test.address, type:string, comment:null)], properties:null)

INFO : Completed compiling command(queryId=omc_20220828141355_270f04a7-1b18-4e81-80b4-eaa620d56c07); Time taken: 1.845 seconds

INFO : Concurrency mode is disabled, not creating a lock manager

INFO : Executing command(queryId=omc_20220828141355_270f04a7-1b18-4e81-80b4-eaa620d56c07): select * from ods.test

INFO : Completed executing command(queryId=omc_20220828141355_270f04a7-1b18-4e81-80b4-eaa620d56c07); Time taken: 0.002 seconds

INFO : OK

INFO : Concurrency mode is disabled, not creating a lock manager

+----------+-------------+-----------+-------------------+---------------+

| test.id | test.name | test.age | test.phonenumber | test.address |

+----------+-------------+-----------+-------------------+---------------+

| 1 | mba1398 | 20 | 10010 | shijiazhuang |

| 2 | datartisan | 30 | 1000 | bj |

+----------+-------------+-----------+-------------------+---------------+

2 rows selected (1.972 seconds)

Beeline version 3.1.2 by Apache Hive

Closing: 0: jdbc:hive2://hadoop102:10000

[omc@hadoop102 bin]$

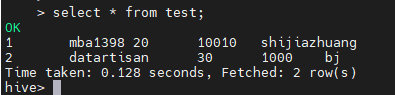

可以看出,beeline 对查询结果展示进行了优化,可以清晰的看出行和列,对比 hive 数据库中的展示效果,可以说是完胜。

除了可以直接加载 sql 语句,它还可以加载 sql 文件,用法如下:

-f---加载一个文件:beeline -f filepath 多个文件用

-f file1 -f file2

请看如下示例:

① 先创建一个 sql 脚本文件 scrip.sql,其内容为 select * from ods.test;

② 执行 beeline 命令

[omc@hadoop102 bin]$ beeline -u jdbc:hive2://hadoop102:10000 -n omc -f scrip.sql

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/module/hive/lib/log4j-slf4j-impl-2.10.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/module/hadoop-3.1.3/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Connecting to jdbc:hive2://hadoop102:10000

Connected to: Apache Hive (version 3.1.2)

Driver: Hive JDBC (version 3.1.2)

Transaction isolation: TRANSACTION_REPEATABLE_READ

0: jdbc:hive2://hadoop102:10000> select * from ods.test;

INFO : Compiling command(queryId=omc_20220828142440_4548355c-4ebb-4850-91b0-0ef26b374640): select * from ods.test

INFO : Concurrency mode is disabled, not creating a lock manager

INFO : Semantic Analysis Completed (retrial = false)

INFO : Returning Hive schema: Schema(fieldSchemas:[FieldSchema(name:test.id, type:int, comment:null), FieldSchema(name:test.name, type:string, comment:null), FieldSchema(name:test.age, type:int, comment:null), FieldSchema(name:test.phonenumber, type:string, comment:null), FieldSchema(name:test.address, type:string, comment:null)], properties:null)

INFO : Completed compiling command(queryId=omc_20220828142440_4548355c-4ebb-4850-91b0-0ef26b374640); Time taken: 0.151 seconds

INFO : Concurrency mode is disabled, not creating a lock manager

INFO : Executing command(queryId=omc_20220828142440_4548355c-4ebb-4850-91b0-0ef26b374640): select * from ods.test

INFO : Completed executing command(queryId=omc_20220828142440_4548355c-4ebb-4850-91b0-0ef26b374640); Time taken: 0.001 seconds

INFO : OK

INFO : Concurrency mode is disabled, not creating a lock manager

+----------+-------------+-----------+-------------------+---------------+

| test.id | test.name | test.age | test.phonenumber | test.address |

+----------+-------------+-----------+-------------------+---------------+

| 1 | mba1398 | 20 | 10010 | shijiazhuang |

| 2 | datartisan | 30 | 1000 | bj |

+----------+-------------+-----------+-------------------+---------------+

2 rows selected (0.279 seconds)

0: jdbc:hive2://hadoop102:10000>

0: jdbc:hive2://hadoop102:10000> Closing: 0: jdbc:hive2://hadoop102:10000

[omc@hadoop102 bin]$

通过上述示例,不难发现一个问题,就是打印的无用信息过多,是否可以减少呢?答案是肯定的。

--silent=[true/false] ---减少显示的信息量:

beeline --silent=true

显示效果如下:

[omc@hadoop102 bin]$ beeline -u jdbc:hive2://hadoop102:10000 -n omc -f scrip.sql --silent=true

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/module/hive/lib/log4j-slf4j-impl-2.10.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/module/hadoop-3.1.3/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

+----------+-------------+-----------+-------------------+---------------+

| test.id | test.name | test.age | test.phonenumber | test.address |

+----------+-------------+-----------+-------------------+---------------+

| 1 | mba1398 | 20 | 10010 | shijiazhuang |

| 2 | datartisan | 30 | 1000 | bj |

+----------+-------------+-----------+-------------------+---------------+

[omc@hadoop102 bin]$

beeline 除了可以读取文件,还可以将查询结果写入到一个文件中,请看如下示例:

[omc@hadoop102 bin]$ beeline -u jdbc:hive2://hadoop102:10000 -n omc -f scrip.sql --silent=true > result.txt

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/module/hive/lib/log4j-slf4j-impl-2.10.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/module/hadoop-3.1.3/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

[omc@hadoop102 bin]$ cat result.txt

+----------+-------------+-----------+-------------------+---------------+

| test.id | test.name | test.age | test.phonenumber | test.address |

+----------+-------------+-----------+-------------------+---------------+

| 1 | mba1398 | 20 | 10010 | shijiazhuang |

| 2 | datartisan | 30 | 1000 | bj |

+----------+-------------+-----------+-------------------+---------------+

[omc@hadoop102 bin]$

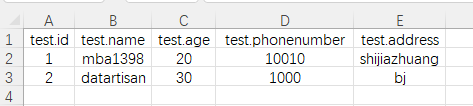

有人可能会说了,如果数据量大了,这种显示效果也不容易看,能否生成可以用excel打开的文件呢?可以!

首先要调整输出文件的格式

--outputformat=[table/vertical/csv/tsv/dsv/csv2/tsv2] ---输出格式:

beeline --outputformat=tsv

其次要调整查询结果的分隔符(默认是 |)。

--delimiterForDSV= DELIMITER ---分隔值输出格式的分隔符。默认是“|”字符。

[omc@hadoop102 bin]$ beeline -u jdbc:hive2://hadoop102:10000 -n omc -f scrip.sql --outputformat=csv2 --delimiterForDSV=',' --silent=true > result.csv

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/module/hive/lib/log4j-slf4j-impl-2.10.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/module/hadoop-3.1.3/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

[omc@hadoop102 bin]$ cat result.csv

test.id,test.name,test.age,test.phonenumber,test.address

1,mba1398,20,10010,shijiazhuang

2,datartisan,30,1000,bj

[omc@hadoop102 bin]$

下载 result.csv 后,用 excel 打开,可以正常显示:

有了这些功能,可以满足优化查询结果显示,导出查询结果等需求,而将查询语句封装在 beeline shell 中又可以实现日常监控的功能,可以说 beeline 真的是太强大了。

下面是一些不太常用的功能,大家可以按需了解。

--autoCommit=[true/false] ---进入一个自动提交模式:

beeline --autoCommit=true

--autosave=[true/false] ---进入一个自动保存模式:

beeline --autosave=true

--color=[true/false] ---显示用到的颜色:

beeline --color=true

--fastConnect=[true/false] ---在连接时,跳过组建表等对象:

beeline --fastConnect=false

--force=[true/false] ---是否强制运行脚本:

beeline--force=true

--headerInterval=ROWS ---输出的表间隔格式,默认是100:

beeline --headerInterval=50

--hiveconf property=value ---设置属性值,以防被hive.conf.restricted.list重置:

beeline --hiveconf prop1=value1

--hivevar name=value ---设置变量名:

beeline --hivevar var1=value1

--incremental=[true/false] ---输出增量

--isolation=LEVEL ---设置事务隔离级别:

beeline --isolation=TRANSACTION_SERIALIZABLE

--maxColumnWidth=MAXCOLWIDTH ---设置字符串列的最大宽度:

beeline --maxColumnWidth=25

--maxWidth=MAXWIDTH ---设置截断数据的最大宽度:

beeline --maxWidth=150

--nullemptystring=[true/false] ---打印空字符串:

beeline --nullemptystring=false

--numberFormat=[pattern] ---数字使用DecimalFormat:

beeline --numberFormat="#,###,##0.00"

--showHeader=[true/false] ---显示查询结果的列名:

beeline --showHeader=false

--showNestedErrs=[true/false] ---显示嵌套错误:

beeline --showNestedErrs=true

--showWarnings=[true/false] ---显示警告:

beeline --showWarnings=true

--truncateTable=[true/false] ---是否在客户端截断表的列

--verbose=[true/false] ---显示详细错误信息和调试信息:

beeline --verbose=true

-d---使用一个驱动类:

beeline -d driver_class

到这里,beeline 的基本命令用法就介绍完了,你有用于其他场景吗?欢迎大家留言讨论。

本文参与 腾讯云自媒体同步曝光计划,分享自微信公众号。

原始发表:2022-08-28,如有侵权请联系 cloudcommunity@tencent.com 删除

评论

登录后参与评论

推荐阅读

目录