Outsourcing Mastery: Learning IT Ops at Zero Cost with kubespray (Part 1): A Highly Available Master Setup



Godev

Modified on 2024-05-01 06:51:50


# IT Learning

No time required

No cost required

Intended audience: developers (who have not done deployments before), ops engineers, outsourced staff

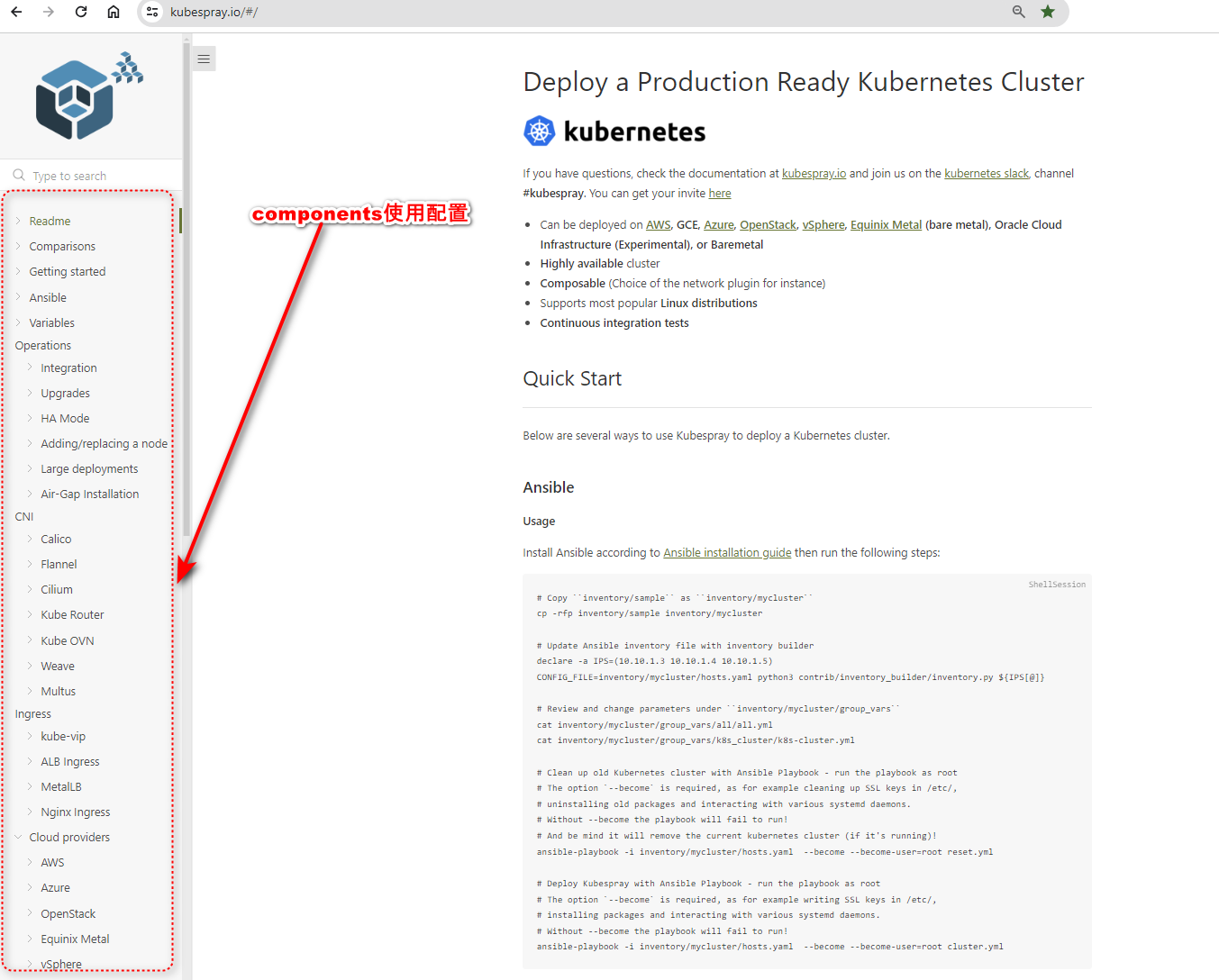

Official documentation

GitHub: https://github.com/kubernetes-sigs/kubespray

## Deployment environment

Deployment host environment:

```
[root@node1 ~]# cat /etc/os-release
NAME="CentOS Stream"
VERSION="9"
ID="centos"
ID_LIKE="rhel fedora"
VERSION_ID="9"
PLATFORM_ID="platform:el9"
PRETTY_NAME="CentOS Stream 9"
ANSI_COLOR="0;31"
LOGO="fedora-logo-icon"
CPE_NAME="cpe:/o:centos:centos:9"
HOME_URL="https://centos.org/"
BUG_REPORT_URL="https://bugzilla.redhat.com/"
REDHAT_SUPPORT_PRODUCT="Red Hat Enterprise Linux 9"
REDHAT_SUPPORT_PRODUCT_VERSION="CentOS Stream"
[root@node1 ~]#
```

## Host list and roles

| IP | OS | Role |
|---|---|---|
| 192.168.2.129 | CentOS Stream release 9 | control-plane |
| 192.168.2.158 | CentOS Stream release 9 | control-plane |
| 192.168.2.234 | CentOS Stream release 9 | worker node |
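Why run three etcd members when there are only two control-plane nodes? etcd uses Raft, so it accepts writes only while a majority (quorum) of members is up. The short Python sketch below works through that arithmetic. It is an illustration only and not part of kubespray. It shows that two members tolerate no failure while three tolerate one, which is why the inventory below places etcd on all three hosts.

```python
def etcd_quorum(members: int) -> int:
    """Members that must be up for etcd to accept writes (Raft majority)."""
    if members < 1:
        raise ValueError("an etcd cluster needs at least one member")
    return members // 2 + 1


def etcd_fault_tolerance(members: int) -> int:
    """How many members can fail while a quorum still remains."""
    return members - etcd_quorum(members)


if __name__ == "__main__":
    for n in range(1, 6):
        print(f"{n} member(s): quorum={etcd_quorum(n)}, "
              f"tolerates {etcd_fault_tolerance(n)} failure(s)")
```

With 2 members the quorum is 2, so losing either node stops etcd. With 3 members the quorum is still 2, so one node can fail. An even count adds no tolerance over the odd count below it: 4 members tolerate 1 failure, the same as 3.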

kubespray version: kubespray-2.24.1

## kubespray preparation and configuration

```bash
# Install dependencies from requirements.txt
sudo pip3 install -r requirements.txt

# Copy inventory/sample as inventory/prod
cp -rfp inventory/sample inventory/prod

# Update the Ansible inventory file with the inventory builder
declare -a IPS=(192.168.2.129 192.168.2.158 192.168.2.234)
CONFIG_FILE=inventory/prod/hosts.yaml python3 contrib/inventory_builder/inventory.py ${IPS[@]}

# Review and change parameters under inventory/prod/group_vars
cat inventory/prod/group_vars/all/all.yml
cat inventory/prod/group_vars/k8s_cluster/k8s-cluster.yml

# Deploy Kubespray with the Ansible playbook - run the playbook as root.
# The option --become is required, for example for writing SSL keys in /etc/,
# installing packages and interacting with various systemd daemons.
# Without --become the playbook will fail to run!
```

## Ansible role configuration

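- kube_control_plane: no hosts commented out
- kube_node: node1 and node2 commented out
- etcd: no hosts commented out
- Everything else left at the defaults
- No need to set hostnames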

```
[root@node1 kubespray-2.24.1]# cat inventory/prod/hosts.yaml
all:
  hosts:
    node1:
      ansible_host: 192.168.2.129
      ip: 192.168.2.129
      access_ip: 192.168.2.129
    node2:
      ansible_host: 192.168.2.158
      ip: 192.168.2.158
      access_ip: 192.168.2.158
    node3:
      ansible_host: 192.168.2.234
      ip: 192.168.2.234
      access_ip: 192.168.2.234
  children:
    kube_control_plane:
      hosts:
        node1:
        node2:
    kube_node:
      hosts:
        #node1:
        #node2:
        node3:
    etcd:
      hosts:
        node1:
        node2:
        node3:
    k8s_cluster:
      children:
        kube_control_plane:
        kube_node:
    calico_rr:
      hosts: {}
[root@node1 kubespray-2.24.1]#
```
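The role layout in hosts.yaml can also be expressed as plain data. The sketch below uses a hypothetical helper, `build_inventory`, which is not kubespray's contrib/inventory_builder code. It rebuilds the same structure from the IP list and the role assignment, checks that every group member is a known host and that etcd has an odd member count, and prints the result as JSON for inspection.

```python
import json


def build_inventory(ips, control_plane, workers, etcd):
    """Build an inventory dict shaped like inventory/prod/hosts.yaml.

    Hosts are named node1, node2, ... in IP order; the role lists refer to
    those names. Illustrative helper only, not kubespray code.
    """
    hosts = {
        f"node{i}": {"ansible_host": ip, "ip": ip, "access_ip": ip}
        for i, ip in enumerate(ips, start=1)
    }

    def group(names):
        unknown = set(names) - set(hosts)
        if unknown:
            raise ValueError(f"unknown hosts in group: {sorted(unknown)}")
        # In YAML, a bare "node1:" parses as a key whose value is None.
        return {"hosts": {name: None for name in names}}

    if len(etcd) % 2 == 0:
        raise ValueError("etcd should have an odd number of members")

    return {
        "all": {
            "hosts": hosts,
            "children": {
                "kube_control_plane": group(control_plane),
                "kube_node": group(workers),
                "etcd": group(etcd),
                "k8s_cluster": {
                    "children": {"kube_control_plane": None, "kube_node": None}
                },
                "calico_rr": {"hosts": {}},
            },
        }
    }


if __name__ == "__main__":
    inventory = build_inventory(
        ["192.168.2.129", "192.168.2.158", "192.168.2.234"],
        control_plane=["node1", "node2"],
        workers=["node3"],
        etcd=["node1", "node2", "node3"],
    )
    print(json.dumps(inventory, indent=2))
```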

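## inventory.ini configuration

- all: the environment has only 3 machines, so the first 3 entries stay uncommented
- kube_control_plane: node1, node2
- kube_node: node3
- Everything else left at the defaults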

```
[root@node1 kubespray-2.24.1]# cat inventory/prod/inventory.ini
# ## Configure 'ip' variable to bind kubernetes services on a
# ## different ip than the default iface
# ## We should set etcd_member_name for etcd cluster. The node that is not a etcd member do not need to set the value, or can set the empty string value.
[all]
node1 ansible_host=192.168.2.129 # ip=10.3.0.1 etcd_member_name=etcd1
node2 ansible_host=192.168.2.158 # ip=10.3.0.2 etcd_member_name=etcd2
node3 ansible_host=192.168.2.234 # ip=10.3.0.3 etcd_member_name=etcd3
# node4 ansible_host=192.168.2.15 # ip=10.3.0.4 etcd_member_name=etcd4
# node5 ansible_host=192.168.2.16 # ip=10.3.0.5 etcd_member_name=etcd5
# node6 ansible_host=192.168.2.17 # ip=10.3.0.6 etcd_member_name=etcd6

# ## configure a bastion host if your nodes are not directly reachable
# [bastion]
# bastion ansible_host=x.x.x.x ansible_user=some_user

[kube_control_plane]
node1
node2
# node3

[etcd]
node1
node2
node3

[kube_node]
# node2
node3
# node4
# node5
# node6

[calico_rr]

[k8s_cluster:children]
kube_control_plane
kube_node
calico_rr
[root@node1 kubespray-2.24.1]#
```
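Because the same layout lives in both inventory.ini and hosts.yaml, the two files can drift apart. The sketch below is a simplified, hypothetical parser for this INI layout that lists each group's members for a quick side-by-side comparison. It is not a full Ansible INI parser: it drops everything after `#` and keeps only the first token of each line, so host variables are ignored.

```python
def parse_ini_groups(text: str) -> dict:
    """Map each [section] of an Ansible-style INI inventory to its entries.

    Simplified on purpose: text after '#' is dropped, and only the first
    token of each line (the host or child-group name) is kept.
    """
    groups: dict = {}
    current = None
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()
        if not line:
            continue
        if line.startswith("[") and line.endswith("]"):
            current = line[1:-1]
            groups.setdefault(current, [])
        elif current is not None:
            groups[current].append(line.split()[0])
    return groups


# The group sections of inventory/prod/inventory.ini from this article.
SAMPLE = """
[all]
node1 ansible_host=192.168.2.129 # ip=10.3.0.1 etcd_member_name=etcd1
node2 ansible_host=192.168.2.158 # ip=10.3.0.2 etcd_member_name=etcd2
node3 ansible_host=192.168.2.234 # ip=10.3.0.3 etcd_member_name=etcd3

[kube_control_plane]
node1
node2
# node3

[etcd]
node1
node2
node3

[kube_node]
# node2
node3

[calico_rr]

[k8s_cluster:children]
kube_control_plane
kube_node
calico_rr
"""

if __name__ == "__main__":
    for name, members in parse_ini_groups(SAMPLE).items():
        print(f"{name}: {members}")
```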

## Check connectivity
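The connectivity check uses Ansible's ping module against every inventory host. If you script this step, the hypothetical helper below parses the default output and reports any expected host that did not answer SUCCESS. It assumes the `host | STATUS => {` line format seen in this article's output; other callback plugins print results differently.

```python
import re

# Matches result lines such as "node2 | SUCCESS => {" or "node4 | UNREACHABLE! => {".
STATUS_LINE = re.compile(r"^(?P<host>\S+) \| (?P<status>[A-Z]+)!? =>")


def ping_results(output: str) -> dict:
    """Map each host to its status word (SUCCESS, UNREACHABLE, FAILED, ...)."""
    results = {}
    for line in output.splitlines():
        match = STATUS_LINE.match(line.strip())
        if match:
            results[match["host"]] = match["status"]
    return results


def failed_hosts(output: str, expected_hosts) -> list:
    """Expected hosts that are missing from the output or did not report SUCCESS."""
    results = ping_results(output)
    return sorted(h for h in expected_hosts if results.get(h) != "SUCCESS")


SAMPLE_OUTPUT = """\
[WARNING]: Skipping callback plugin 'ara_default', unable to load
node2 | SUCCESS => {
    "changed": false,
    "ping": "pong"
}
node3 | SUCCESS => {
    "changed": false,
    "ping": "pong"
}
node1 | SUCCESS => {
    "changed": false,
    "ping": "pong"
}
"""

if __name__ == "__main__":
    print(ping_results(SAMPLE_OUTPUT))
    print("failed:", failed_hosts(SAMPLE_OUTPUT, ["node1", "node2", "node3"]) or "none")
```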

```
(kubespray-venv) [root@localhost kubespray-2.24.1]# ansible -m ping all -i inventory/prod/hosts.yaml
[WARNING]: Skipping callback plugin 'ara_default', unable to load
node2 | SUCCESS => {
    "changed": false,
    "ping": "pong"
}
node3 | SUCCESS => {
    "changed": false,
    "ping": "pong"
}
node1 | SUCCESS => {
    "ansible_facts": {
        "discovered_interpreter_python": "/usr/bin/python3"
    },
    "changed": false,
    "ping": "pong"
}
(kubespray-venv) [root@localhost kubespray-2.24.1]#
```

## Configure the Ansible log path

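In the kubespray-2.24.1 directory, edit ansible.cfg and add the following line to the [defaults] section. Replace /var/log/ansible.log with whatever path and log file name you want:

```
log_path = /var/log/ansible.log
```

## ansible.cfg configuration

(The prompt in the listing below shows a kubespray-2.18.1 directory. The log_path line sits in the [defaults] section.)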
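To confirm where Ansible will write its log, the sketch below reads log_path from the [defaults] section with Python's configparser. It also checks that the target directory exists and is writable. The helper names are hypothetical, and the script assumes it is run from the kubespray directory.

```python
import configparser
import os


def ansible_log_path(cfg_text: str):
    """Return log_path from the [defaults] section, or None if it is not set."""
    # interpolation=None: ansible.cfg values may contain "%%" (e.g. control_path),
    # which configparser would otherwise try to interpolate.
    # delimiters=("=",): ansible.cfg uses "key = value", so ':' in values is never a separator.
    parser = configparser.ConfigParser(interpolation=None, delimiters=("=",))
    parser.read_string(cfg_text)
    return parser.get("defaults", "log_path", fallback=None)


def log_dir_writable(path: str) -> bool:
    """True if the directory that will hold the log file exists and is writable."""
    directory = os.path.dirname(os.path.abspath(path))
    return os.path.isdir(directory) and os.access(directory, os.W_OK)


if __name__ == "__main__":
    cfg_file = "ansible.cfg"  # assumes the script runs from the kubespray-2.24.1 directory
    if not os.path.exists(cfg_file):
        print(f"{cfg_file} not found in {os.getcwd()}")
    else:
        with open(cfg_file, encoding="utf-8") as f:
            log_path = ansible_log_path(f.read())
        print("log_path:", log_path)
        if log_path:
            print("log directory writable:", log_dir_writable(log_path))
```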

```
[root@node1 kubespray-2.18.1]# cat ansible.cfg
[ssh_connection]
pipelining=True
ssh_args = -o ControlMaster=auto -o ControlPersist=30m -o ConnectionAttempts=100 -o UserKnownHostsFile=/dev/null
#control_path = ~/.ssh/ansible-%%r@%%h:%%p
[defaults]
# https://github.com/ansible/ansible/issues/56930 (to ignore group names with - and .)
force_valid_group_names = ignore
log_path = /var/log/ansible.log
host_key_checking=False
gathering = smart
fact_caching = jsonfile
fact_caching_connection = /tmp
fact_caching_timeout = 7200
stdout_callback = default
display_skipped_hosts = no
library = ./library
callback_whitelist = profile_tasks
roles_path = roles:$VIRTUAL_ENV/usr/local/share/kubespray/roles:$VIRTUAL_ENV/usr/local/share/ansible/roles:/usr/share/kubespray/roles
deprecation_warnings=False
inventory_ignore_extensions = ~, .orig, .bak, .ini, .cfg, .retry, .pyc, .pyo, .creds, .gpg
[inventory]
ignore_patterns = artifacts, credentials
[root@node1 kubespray-2.18.1]#
```

## k8s-cluster playbook configuration

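In the kubespray-2.24.1 directory, edit inventory/prod/group_vars/k8s_cluster/k8s-cluster.yml with vim and set the following. MetalLB requires strict ARP when kube-proxy runs in IPVS mode, which is kubespray's default:

```yaml
kube_proxy_strict_arp: true
```

Then edit inventory/prod/group_vars/k8s_cluster/addons.yml with vim: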

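The MetalLB settings below cover several scenarios. They are alternatives, not one file: written together, later copies of keys such as metallb_ip_range and metallb_speaker_enabled would override earlier ones. Start from the first snippet and swap in the keys of whichever scenario you need.

Layer 2 mode (the default protocol), with tolerations so the controller can run on control-plane nodes:

```yaml
metallb_enabled: true
metallb_speaker_enabled: true
metallb_ip_range:
  - 10.5.0.0/16
metallb_controller_tolerations:
  - key: "node-role.kubernetes.io/master"
    operator: "Equal"
    value: ""
    effect: "NoSchedule"
  - key: "node-role.kubernetes.io/control-plane"
    operator: "Equal"
    value: ""
    effect: "NoSchedule"
```

BGP mode with two peers:

```yaml
metallb_protocol: bgp
metallb_ip_range:
  - 10.5.0.0/16
metallb_peers:
  - peer_address: 192.0.2.1
    peer_asn: 64512
    my_asn: 4200000000
  - peer_address: 192.0.2.2
    peer_asn: 64513
    my_asn: 4200000000
```

Calico advertising the LoadBalancer IPs instead of the MetalLB speaker:

```yaml
metallb_speaker_enabled: false
metallb_ip_range:
  - 10.5.0.0/16
calico_advertise_service_loadbalancer_ips: "{{ metallb_ip_range }}"
```

The same, with two extra address pools that are not auto-assigned:

```yaml
metallb_speaker_enabled: false
metallb_ip_range:
  - 10.5.0.0/16
metallb_additional_address_pools:
  kube_service_pool_1:
    ip_range:
      - 10.6.0.0/16
    protocol: "bgp"
    auto_assign: false
  kube_service_pool_2:
    ip_range:
      - 10.10.0.0/16
    protocol: "bgp"
    auto_assign: false
calico_advertise_service_loadbalancer_ips:
  - 10.5.0.0/16
  - 10.6.0.0/16
  - 10.10.0.0/16
```

## Container runtime (kubespray defaults to containerd)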
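The comment in k8s-cluster.yml lists the accepted container_manager values: docker, crio and containerd. The sketch below is a hypothetical pre-flight check that scans the file's text for uncommented container_manager lines. It flags an unsupported value (such as docker-ce) or a duplicate setting before Ansible runs. It is a line-based heuristic, not a YAML parser.

```python
import re

# Values listed in the k8s-cluster.yml comment:
# "docker for docker, crio for cri-o and containerd for containerd."
ALLOWED_RUNTIMES = {"docker", "crio", "containerd"}

# An uncommented, top-level "container_manager: <value>" line, optionally quoted,
# optionally followed by a trailing comment.
SETTING = re.compile(r"""^container_manager:\s*["']?([\w.-]+)["']?\s*(#.*)?$""")


def check_container_manager(yaml_text: str) -> list:
    """Return human-readable problems; an empty list means the setting looks fine.

    If the key is absent, kubespray's default (containerd) applies,
    so that case is not reported.
    """
    values = []
    for line in yaml_text.splitlines():
        match = SETTING.match(line.rstrip())
        if match:
            values.append(match.group(1))

    problems = []
    if len(values) > 1:
        problems.append(f"container_manager is set {len(values)} times: {values}")
    for value in values:
        if value not in ALLOWED_RUNTIMES:
            problems.append(f"unsupported container_manager value: {value!r}")
    return problems


if __name__ == "__main__":
    snippet = "#container_manager: containerd\ncontainer_manager: docker-ce\n"
    print(check_container_manager(snippet))
```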

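In the kubespray-2.24.1 directory, edit inventory/prod/group_vars/k8s_cluster/k8s-cluster.yml with vim:

```yaml
## Container runtime
## docker for docker, crio for cri-o and containerd for containerd.
## Default: containerd
#container_manager: containerd
container_manager: docker
```

As the comment lists, the value for Docker is docker; docker-ce is not an accepted option. The cluster shown in this article runs containerd (see the CONTAINER-RUNTIME column, containerd://1.7.13, in the node list).

## IPv6 configuration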
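If you enable the IPv6 values in this section, they should be valid networks that do not overlap. The per-node prefix should also be longer than the pod subnet prefix. The sketch below checks this with Python's ipaddress module and works out the resulting sizes. Treating kube_network_node_prefix_ipv6 as the size of each node's pod block is an interpretation by analogy with the IPv4 kube_network_node_prefix.

```python
import ipaddress

SERVICE_CIDR = "fd85:ee78:d8a6:8607::1000/116"   # kube_service_addresses_ipv6
POD_CIDR = "fd85:ee78:d8a6:8607::1:0000/112"     # kube_pods_subnet_ipv6
NODE_PREFIX = 120                                 # kube_network_node_prefix_ipv6


def ipv6_plan(service_cidr: str, pod_cidr: str, node_prefix: int) -> dict:
    """Validate the three IPv6 settings and return the sizes they imply."""
    service = ipaddress.IPv6Network(service_cidr)  # strict: rejects host bits
    pods = ipaddress.IPv6Network(pod_cidr)
    if service.overlaps(pods):
        raise ValueError("service and pod ranges overlap")
    if not pods.prefixlen < node_prefix <= 128:
        raise ValueError("node prefix must be longer than the pod subnet prefix")
    return {
        "service_addresses": service.num_addresses,
        "pod_addresses": pods.num_addresses,
        "max_nodes": 2 ** (node_prefix - pods.prefixlen),
        "addresses_per_node": 2 ** (128 - node_prefix),
    }


if __name__ == "__main__":
    for key, value in ipv6_plan(SERVICE_CIDR, POD_CIDR, NODE_PREFIX).items():
        print(f"{key}: {value}")
```

For these sample values that gives 4096 service addresses and a /112 pod range of 65536 addresses. That range splits into 256 per-node /120 blocks of 256 addresses each.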

Depending on your needs, leave these commented out or enable them:

```yaml
#kube_service_addresses_ipv6: fd85:ee78:d8a6:8607::1000/116
#kube_pods_subnet_ipv6: fd85:ee78:d8a6:8607::1:0000/112
#kube_network_node_prefix_ipv6: 120
```

## MetalLB configuration
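MetalLB address pools must not collide with each other or with the cluster's internal ranges. The sketch below checks the pools that appear in this article's addons.yml examples (10.5.0.0/16, 10.6.0.0/16, 10.10.0.0/16). It compares them against kubespray's default service and pod CIDRs, 10.233.0.0/18 and 10.233.64.0/18; the ClusterIPs and pod IPs in the outputs later in this article fall inside these. Adjust both dictionaries to match your own group_vars.

```python
import ipaddress
from itertools import combinations

# Cluster-internal ranges: kubespray's defaults for these two variables.
CLUSTER_RANGES = {
    "kube_service_addresses": "10.233.0.0/18",
    "kube_pods_subnet": "10.233.64.0/18",
}
# MetalLB pools from the addons.yml examples in this article.
METALLB_POOLS = {
    "metallb_ip_range": "10.5.0.0/16",
    "kube_service_pool_1": "10.6.0.0/16",
    "kube_service_pool_2": "10.10.0.0/16",
}


def find_overlaps(ranges: dict) -> list:
    """Return (name_a, name_b) pairs whose networks overlap."""
    networks = {name: ipaddress.ip_network(cidr) for name, cidr in ranges.items()}
    return [
        (a, b)
        for a, b in combinations(networks, 2)
        if networks[a].overlaps(networks[b])
    ]


if __name__ == "__main__":
    clashes = find_overlaps({**CLUSTER_RANGES, **METALLB_POOLS})
    print("overlapping ranges:", clashes or "none")
```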

In the kubespray-2.24.1 directory, edit inventory/prod/group_vars/k8s_cluster/addons.yml with vim:

```yaml
metallb_enabled: true
metallb_speaker_enabled: false
metallb_controller_tolerations:
  - key: "node-role.kubernetes.io/master"
    operator: "Equal"
    value: ""
    effect: "NoSchedule"
  - key: "node-role.kubernetes.io/control-plane"
    operator: "Equal"
    value: ""
    effect: "NoSchedule"
metallb_protocol: "bgp"
metallb_peers:
  - peer_address: 192.0.2.1
    peer_asn: 64512
    my_asn: 4200000000
  - peer_address: 192.0.2.2
    peer_asn: 64513
    my_asn: 4200000000
```

## Network plugin (calico by default)

在kubespray-2.24.1路径下,vim inventory/prod/group_vars/k8s_cluster/k8s-cluster.yml

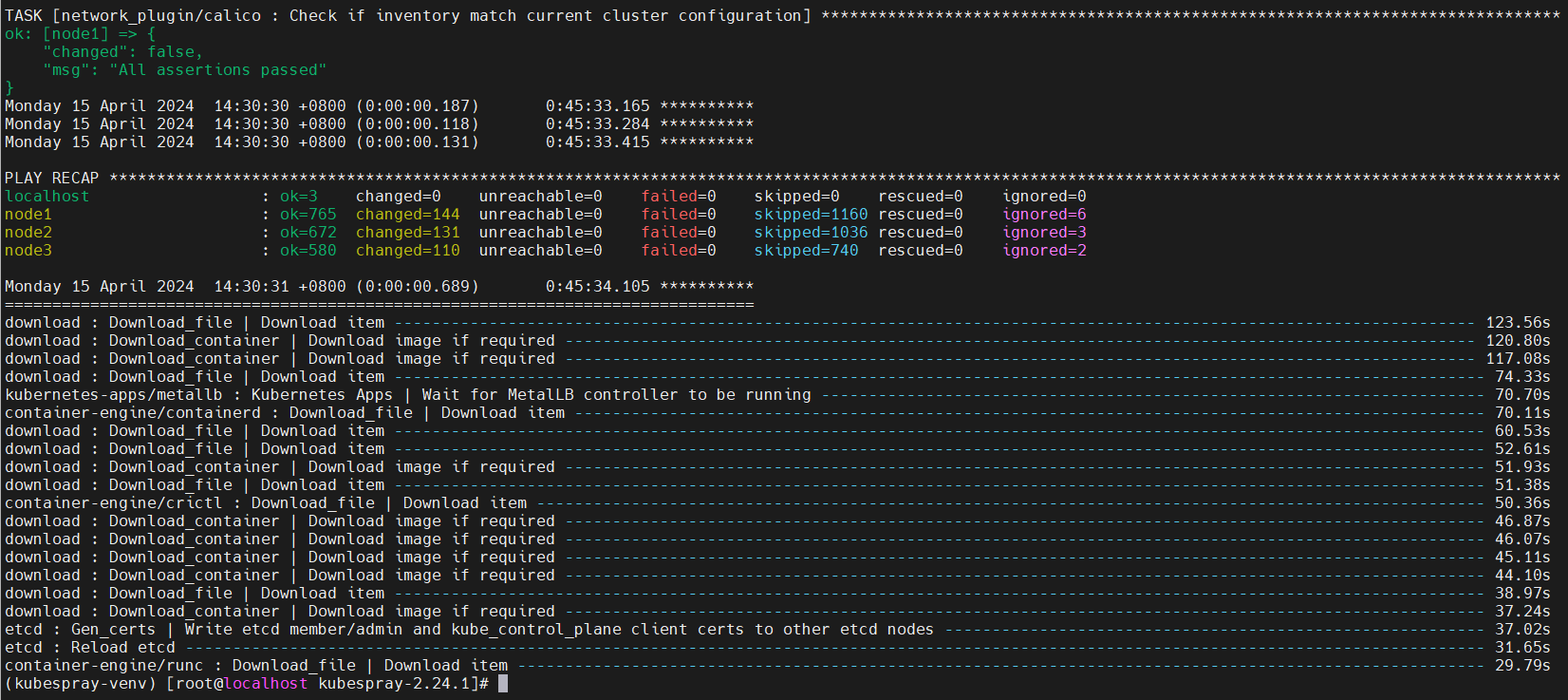

kube_network_plugin: calicoansible安装k8s命令

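Run the Ansible command, then wait for the installation to finish. As noted in the preparation commands, --become is required:

```bash
ansible-playbook -i inventory/prod/hosts.yaml --become --become-user=root cluster.yml
```

## Installation result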

### Kubernetes node status

Cluster node list:
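Instead of reading the node table by eye, you can check readiness from the JSON output of `kubectl get nodes -o json`. Save it to a file first, for example `kubectl get nodes -o json > nodes.json`. The file name and the script name `nodes.py` are arbitrary. The sketch reports each node's Ready condition and whether the node carries the node-role.kubernetes.io/control-plane label.

```python
import json
import sys


def node_summary(nodes_json: dict) -> list:
    """Return (name, ready, is_control_plane) for every node in the list."""
    summary = []
    for item in nodes_json.get("items", []):
        name = item["metadata"]["name"]
        labels = item["metadata"].get("labels", {})
        conditions = item.get("status", {}).get("conditions", [])
        ready = any(c.get("type") == "Ready" and c.get("status") == "True"
                    for c in conditions)
        summary.append((name, ready, "node-role.kubernetes.io/control-plane" in labels))
    return summary


if __name__ == "__main__":
    if len(sys.argv) != 2:
        print("usage: python3 nodes.py nodes.json  (from: kubectl get nodes -o json)")
    else:
        with open(sys.argv[1], encoding="utf-8") as f:
            rows = node_summary(json.load(f))
        for name, ready, control_plane in rows:
            role = " (control-plane)" if control_plane else ""
            print(f"{name}: {'Ready' if ready else 'NotReady'}{role}")
        print("control-plane nodes:", sum(1 for _, _, cp in rows if cp))
```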

```
[root@node1 kubespray-2.24.1]# kubectl get no -o wide
NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME
node1 Ready control-plane 23h v1.28.6 192.168.2.129 <none> CentOS Stream 9 5.14.0-435.el9.x86_64 containerd://1.7.13
node2 Ready control-plane 23h v1.28.6 192.168.2.158 <none> CentOS Stream 9 5.14.0-435.el9.x86_64 containerd://1.7.13
node3 Ready <none> 23h v1.28.6 192.168.2.234 <none> CentOS Stream 9 5.14.0-435.el9.x86_64 containerd://1.7.13
[root@node1 kubespray-2.24.1]#
```

### Component status

```
[root@node1 kubespray-2.24.1]# kubectl get cs
Warning: v1 ComponentStatus is deprecated in v1.19+
NAME STATUS MESSAGE ERROR
scheduler Healthy ok
controller-manager Healthy ok
etcd-0 Healthy ok
[root@node1 kubespray-2.24.1]#
```

```
[root@node1 kubespray-2.24.1]# kubectl get pod -A -o wide
NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
kube-system calico-kube-controllers-648dffd99-swvpz 1/1 Running 0 23h 10.233.64.129 node3 <none> <none>
kube-system calico-node-8wt58 1/1 Running 0 23h 192.168.2.129 node1 <none> <none>
kube-system calico-node-tmvh6 1/1 Running 0 23h 192.168.2.234 node3 <none> <none>
kube-system calico-node-xjcnn 1/1 Running 0 23h 192.168.2.158 node2 <none> <none>
kube-system coredns-77f7cc69db-56gbk 1/1 Running 0 23h 10.233.64.1 node2 <none> <none>
kube-system coredns-77f7cc69db-jlc4v 1/1 Running 0 23h 10.233.64.194 node1 <none> <none>
kube-system dns-autoscaler-8576bb9f5b-qffwd 1/1 Running 0 23h 10.233.64.193 node1 <none> <none>
kube-system kube-apiserver-node1 1/1 Running 1 23h 192.168.2.129 node1 <none> <none>
kube-system kube-apiserver-node2 1/1 Running 2 23h 192.168.2.158 node2 <none> <none>
kube-system kube-controller-manager-node1 1/1 Running 2 23h 192.168.2.129 node1 <none> <none>
kube-system kube-controller-manager-node2 1/1 Running 2 23h 192.168.2.158 node2 <none> <none>
kube-system kube-proxy-h8z5b 1/1 Running 0 23h 192.168.2.129 node1 <none> <none>
kube-system kube-proxy-kj2ss 1/1 Running 0 23h 192.168.2.234 node3 <none> <none>
kube-system kube-proxy-qp4hh 1/1 Running 0 23h 192.168.2.158 node2 <none> <none>
kube-system kube-scheduler-node1 1/1 Running 3 (22h ago) 23h 192.168.2.129 node1 <none> <none>
kube-system kube-scheduler-node2 1/1 Running 1 23h 192.168.2.158 node2 <none> <none>
kube-system nginx-proxy-node3 1/1 Running 0 23h 192.168.2.234 node3 <none> <none>
kube-system nodelocaldns-8b4nm 1/1 Running 0 23h 192.168.2.129 node1 <none> <none>
kube-system nodelocaldns-tjqwm 1/1 Running 0 23h 192.168.2.158 node2 <none> <none>
kube-system nodelocaldns-w79xk 1/1 Running 0 23h 192.168.2.234 node3 <none> <none>
metallb-system controller-666f99f6ff-qvqrd 1/1 Running 0 23h 10.233.64.130 node3 <none> <none>
metallb-system speaker-4djx5 1/1 Running 0 23h 192.168.2.234 node3 <none> <none>
metallb-system speaker-fnkfh 1/1 Running 0 23h 192.168.2.158 node2 <none> <none>
metallb-system speaker-wfd2t 1/1 Running 0 23h 192.168.2.129 node1 <none> <none>
[root@node1 kubespray-2.24.1]#
```
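Pod health can be checked the same way from `kubectl get pods -A -o json`, saved to a file first; the file and script names below are arbitrary. This sketch lists pods that are not Running with every container ready. It reports restart counts separately, because a Running pod can still have restarted, as the kube-apiserver and kube-scheduler pods above have. Completed Job pods would also be listed as not ready.

```python
import json
import sys


def unhealthy_pods(pods_json: dict) -> list:
    """Return 'namespace/name (phase)' for pods not Running with every container ready."""
    problems = []
    for item in pods_json.get("items", []):
        meta, status = item["metadata"], item.get("status", {})
        phase = status.get("phase", "Unknown")
        containers = status.get("containerStatuses", [])
        all_ready = bool(containers) and all(c.get("ready") for c in containers)
        if phase != "Running" or not all_ready:
            problems.append(f"{meta['namespace']}/{meta['name']} ({phase})")
    return problems


def restarted_pods(pods_json: dict) -> dict:
    """Map 'namespace/name' to total container restarts, for pods with any restarts."""
    restarts = {}
    for item in pods_json.get("items", []):
        meta = item["metadata"]
        statuses = item.get("status", {}).get("containerStatuses", [])
        total = sum(c.get("restartCount", 0) for c in statuses)
        if total:
            restarts[f"{meta['namespace']}/{meta['name']}"] = total
    return restarts


if __name__ == "__main__":
    if len(sys.argv) != 2:
        print("usage: python3 pod_check.py pods.json  (from: kubectl get pods -A -o json)")
    else:
        with open(sys.argv[1], encoding="utf-8") as f:
            data = json.load(f)
        print("not ready:", unhealthy_pods(data) or "none")
        for pod, count in restarted_pods(data).items():
            print(f"restarted: {pod} x{count}")
```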

```
[root@node1 kubespray-2.24.1]# ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: ens160: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000
    link/ether 00:0c:29:10:f3:4b brd ff:ff:ff:ff:ff:ff
    altname enp3s0
    inet 192.168.2.129/24 brd 192.168.2.255 scope global dynamic noprefixroute ens160
       valid_lft 35646sec preferred_lft 35646sec
    inet6 fe80::20c:29ff:fe10:f34b/64 scope link noprefixroute
       valid_lft forever preferred_lft forever
3: kube-ipvs0: <BROADCAST,NOARP> mtu 1500 qdisc noop state DOWN group default
    link/ether a2:1c:3f:1f:08:f2 brd ff:ff:ff:ff:ff:ff
    inet 10.233.0.1/32 scope global kube-ipvs0
       valid_lft forever preferred_lft forever
    inet 10.233.0.3/32 scope global kube-ipvs0
       valid_lft forever preferred_lft forever
    inet 10.233.12.51/32 scope global kube-ipvs0
       valid_lft forever preferred_lft forever
4: vxlan.calico: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UNKNOWN group default qlen 1000
    link/ether 66:f9:37:c3:7e:94 brd ff:ff:ff:ff:ff:ff
    inet 10.233.64.192/32 scope global vxlan.calico
       valid_lft forever preferred_lft forever
    inet6 fe80::64f9:37ff:fec3:7e94/64 scope link
       valid_lft forever preferred_lft forever
7: nodelocaldns: <BROADCAST,NOARP> mtu 1500 qdisc noop state DOWN group default
    link/ether 6a:43:84:7d:e0:84 brd ff:ff:ff:ff:ff:ff
    inet 169.254.25.10/32 scope global nodelocaldns
       valid_lft forever preferred_lft forever
8: cali54896e63969@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP group default qlen 1000
    link/ether ee:ee:ee:ee:ee:ee brd ff:ff:ff:ff:ff:ff link-netns cni-9942efc3-8da9-239c-2b50-941b4711d123
    inet6 fe80::ecee:eeff:feee:eeee/64 scope link
       valid_lft forever preferred_lft forever
9: cali25c08d95289@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP group default qlen 1000
    link/ether ee:ee:ee:ee:ee:ee brd ff:ff:ff:ff:ff:ff link-netns cni-4a9392c5-021a-02dd-9845-5681b2c09176
    inet6 fe80::ecee:eeff:feee:eeee/64 scope link
       valid_lft forever preferred_lft forever
[root@node1 kubespray-2.24.1]#
```
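In the `ip a` output, kube-ipvs0 holds ClusterIPs because kube-proxy runs in IPVS mode. For example, 10.233.0.1 is the kubernetes Service. The sketch below reads the JSON form of the same command, saved with `ip -json addr > addrs.json` (the file and script names are arbitrary). It lists kube-ipvs0's IPv4 addresses and flags any that fall outside the service CIDR, assumed here to be kubespray's default 10.233.0.0/18.

```python
import ipaddress
import json
import sys

# Assumed service CIDR: kubespray's default kube_service_addresses.
SERVICE_CIDR = ipaddress.ip_network("10.233.0.0/18")


def interface_ipv4(addr_json: list, ifname: str) -> list:
    """IPv4 addresses (without prefix length) assigned to `ifname` in `ip -json addr` output."""
    for link in addr_json:
        if link.get("ifname") == ifname:
            return [a["local"] for a in link.get("addr_info", [])
                    if a.get("family") == "inet"]
    return []


def outside_service_cidr(addresses: list) -> list:
    """Addresses that do not belong to SERVICE_CIDR."""
    return [a for a in addresses if ipaddress.ip_address(a) not in SERVICE_CIDR]


if __name__ == "__main__":
    if len(sys.argv) != 2:
        print("usage: python3 ipvs_check.py addrs.json  (from: ip -json addr)")
    else:
        with open(sys.argv[1], encoding="utf-8") as f:
            addrs = interface_ipv4(json.load(f), "kube-ipvs0")
        print("kube-ipvs0 addresses:", addrs)
        print("outside service CIDR:", outside_service_cidr(addrs) or "none")
```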

```
[root@node1 kubespray-2.24.1]# kubectl get pod,svc -o wide
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR
service/kubernetes ClusterIP 10.233.0.1 <none> 443/TCP 23h <none>
[root@node1 kubespray-2.24.1]#
```

## Test-run a pod

```
[root@node1 kubespray-2.24.1]# kubectl create deploy web --image=nginx
deployment.apps/web created
[root@node1 kubespray-2.24.1]#
[root@node1 kubespray-2.24.1]# kubectl expose deploy web --port=80 --target-port=80 --name=web
service/web exposed
[root@node1 kubespray-2.24.1]#
[root@node1 kubespray-2.24.1]# kubectl get pod,svc -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
pod/web-76fd95c67-x9dg8 1/1 Running 0 96s 10.233.64.131 node3 <none> <none>

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR
service/kubernetes ClusterIP 10.233.0.1 <none> 443/TCP 23h <none>
service/web ClusterIP 10.233.28.165 <none> 80/TCP 16s app=web
[root@node1 kubespray-2.24.1]#
[root@node1 kubespray-2.24.1]# curl 10.233.64.131
<!DOCTYPE html>
<html>
<head>
<title>Welcome to nginx!</title>
<style>
html { color-scheme: light dark; }
body { width: 35em; margin: 0 auto;
font-family: Tahoma, Verdana, Arial, sans-serif; }
</style>
</head>
<body>
<h1>Welcome to nginx!</h1>
<p>If you see this page, the nginx web server is successfully installed and
working. Further configuration is required.</p>
<p>For online documentation and support please refer to
<a href="http://nginx.org/">nginx.org</a>.<br/>
Commercial support is available at
<a href="http://nginx.com/">nginx.com</a>.</p>
<p><em>Thank you for using nginx.</em></p>
</body>
</html>
[root@node1 kubespray-2.24.1]#
```
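The curl checks here (the pod IP above, then the web Service's ClusterIP) can be scripted. The sketch below uses urllib and treats a response containing nginx's default page title as success. It only works from a host that can reach the cluster network, such as a cluster node. The script name `check_web.py` and its arguments are hypothetical.

```python
import sys
import urllib.request

# Title of nginx's default index page, as seen in the curl output.
WELCOME_TITLE = "<title>Welcome to nginx!</title>"


def looks_like_nginx_welcome(html: str) -> bool:
    return WELCOME_TITLE in html


def fetch(url: str, timeout: float = 5.0) -> str:
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")


def check_urls(urls) -> dict:
    """Map each URL to True if it served the nginx welcome page."""
    results = {}
    for url in urls:
        try:
            results[url] = looks_like_nginx_welcome(fetch(url))
        except OSError:  # URLError, refused connections and timeouts are all OSErrors
            results[url] = False
    return results


if __name__ == "__main__":
    # e.g. python3 check_web.py http://10.233.64.131 http://10.233.28.165
    if len(sys.argv) < 2:
        print("usage: python3 check_web.py URL [URL ...]")
    else:
        for url, ok in check_urls(sys.argv[1:]).items():
            print(f"{url}: {'OK' if ok else 'FAILED'}")
```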

[root@node1 kubespray-2.24.1]# curl 10.233.28.165

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

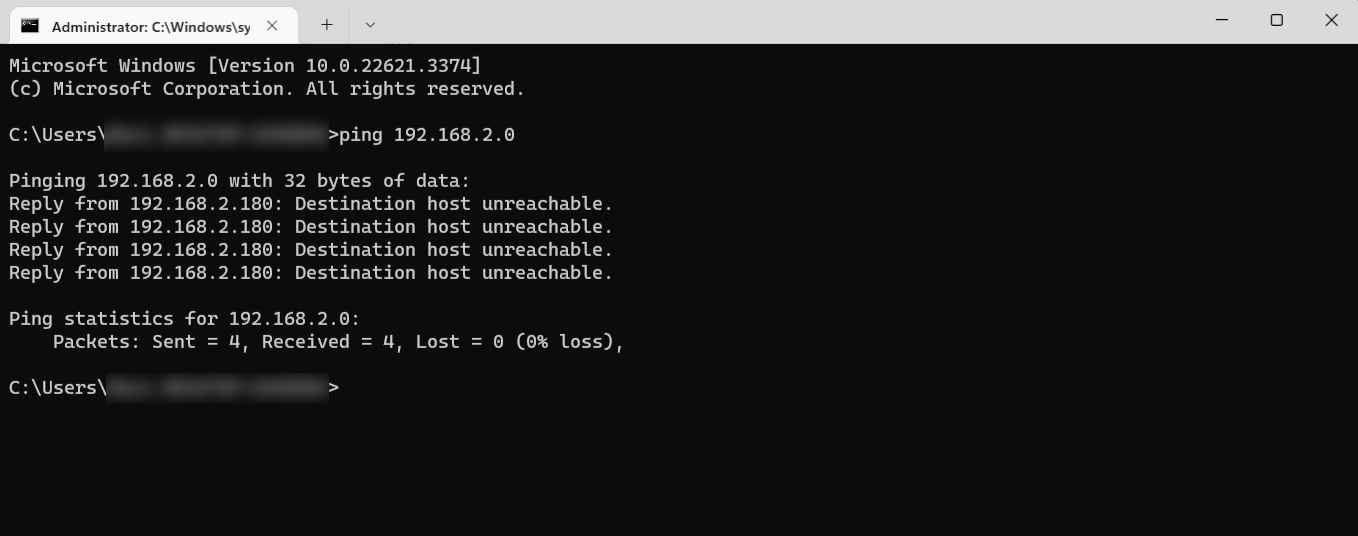

[root@node1 kubespray-2.24.1]#集群外部ping测试

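Originality statement: this article is published on the Tencent Cloud Developer Community with the author's authorization. Reproduction without permission is prohibited.

In case of infringement, please contact cloudcommunity@tencent.com for removal.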
