[MYSQL] 1分钟1GB binlog的超密集型日志怎么解析?

原创[MYSQL] 1分钟1GB binlog的超密集型日志怎么解析?

原创

大大刺猬

发布于 2025-11-20 17:27:48

发布于 2025-11-20 17:27:48

导读

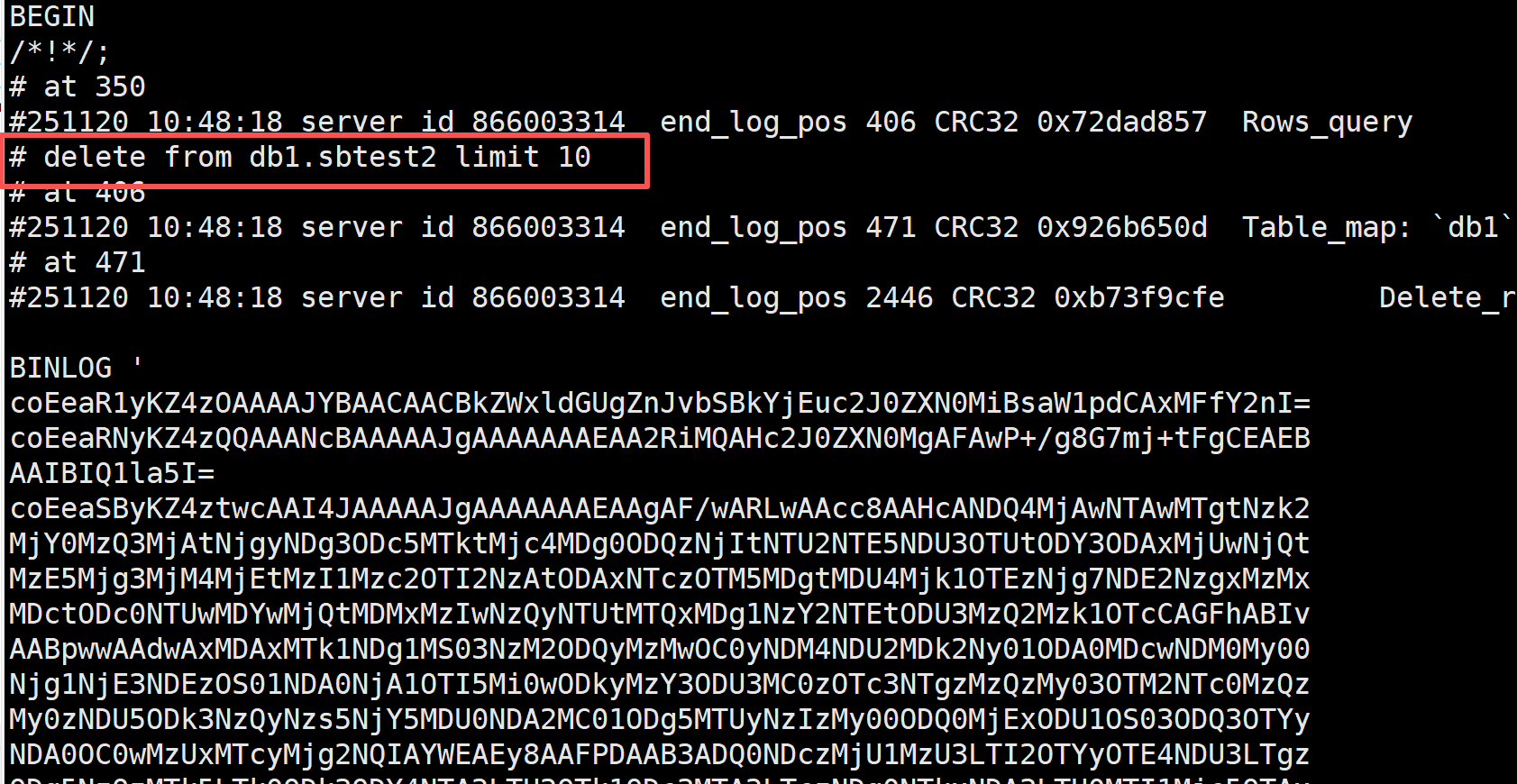

通常我们会使用binlog_format=ROW的格式,这样就没得函数之类的坑了, 主库更新的数据全部都会记录在binlog里面,主从回放基本上就没啥问题了. 但是呢, 这样的日志量会非常的多, 比如业务执行一条insert into t2 select * from t2这么一条简单的SQL,会把整个表的数据都记录下来; 表只要不是很小, 就会产生大量的binlog, 除了占用空间外还会影响我们分析binlog. 那么有没有参数可以记录下原始SQL呢? 有的,兄弟,包有的.

当启用参数binlog_rows_query_log_events的时候, 执行的SQL除了记录修改的数据外,还会额外记录原始的SQL(主从复制的时候能直接看到SQL), 这样我们就不需要看那一堆堆的row_event了.

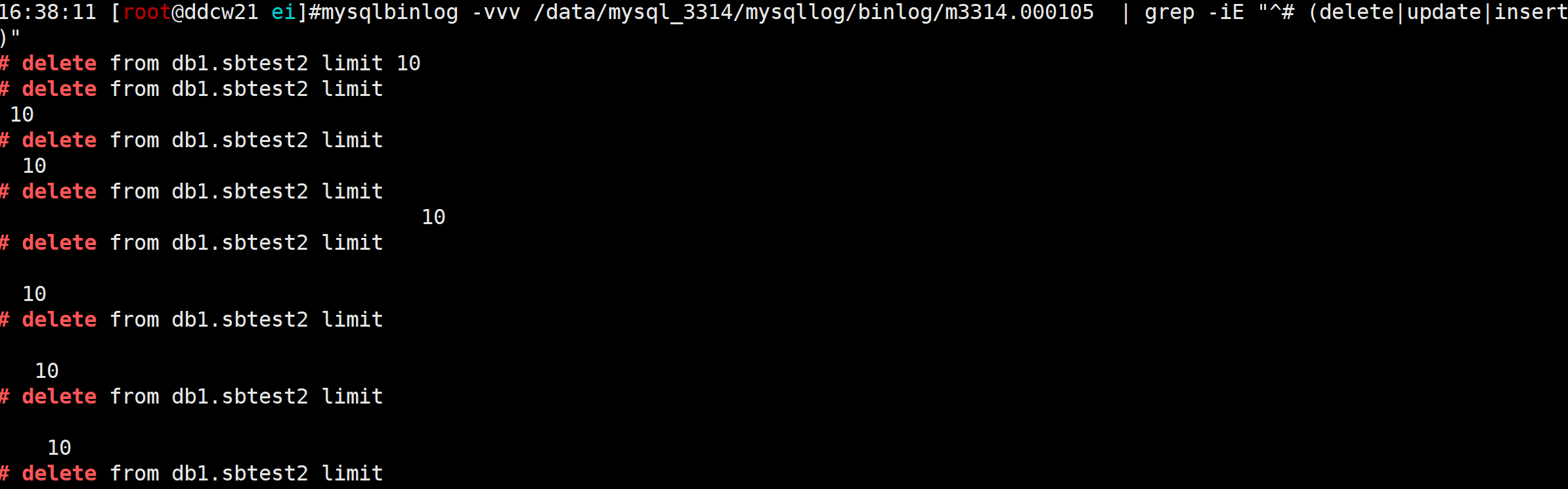

新问题又来了, 怎么在一堆堆的信息中找到我们的这个SQL语句呢? mysqlbinlog -vvv mysql-bin.xxxx | grep -iE "^# (delete|update|insert)"就可以啊, 是的. 但mysqlbinlog解析的时候会使用临时目录, 可能会把临时目录打爆, 也好解决, 换个大点的临时目录:export TMPDIR=/data. 哈哈,完美解决.

那如果Binlog很密集, 比如1分钟1GB日志,其中有很多insert into select的SQL, 你需要分析其中某部分的事务逻辑, 如果直接解析的话, 可能会产生几十GB的日志, 使用grep过滤这几十GB的日志是非常慢的. 而且有很大可能需要分析多个日志,这就得花费大量时间了, 而且还得观察临时空间, 免得告警.

我们分析密集型的binlog的时候除了mysqlbinlog外, 还有没有其它更好的方法呢?

解析binlog中的ROWS_QUERY_LOG_EVENT

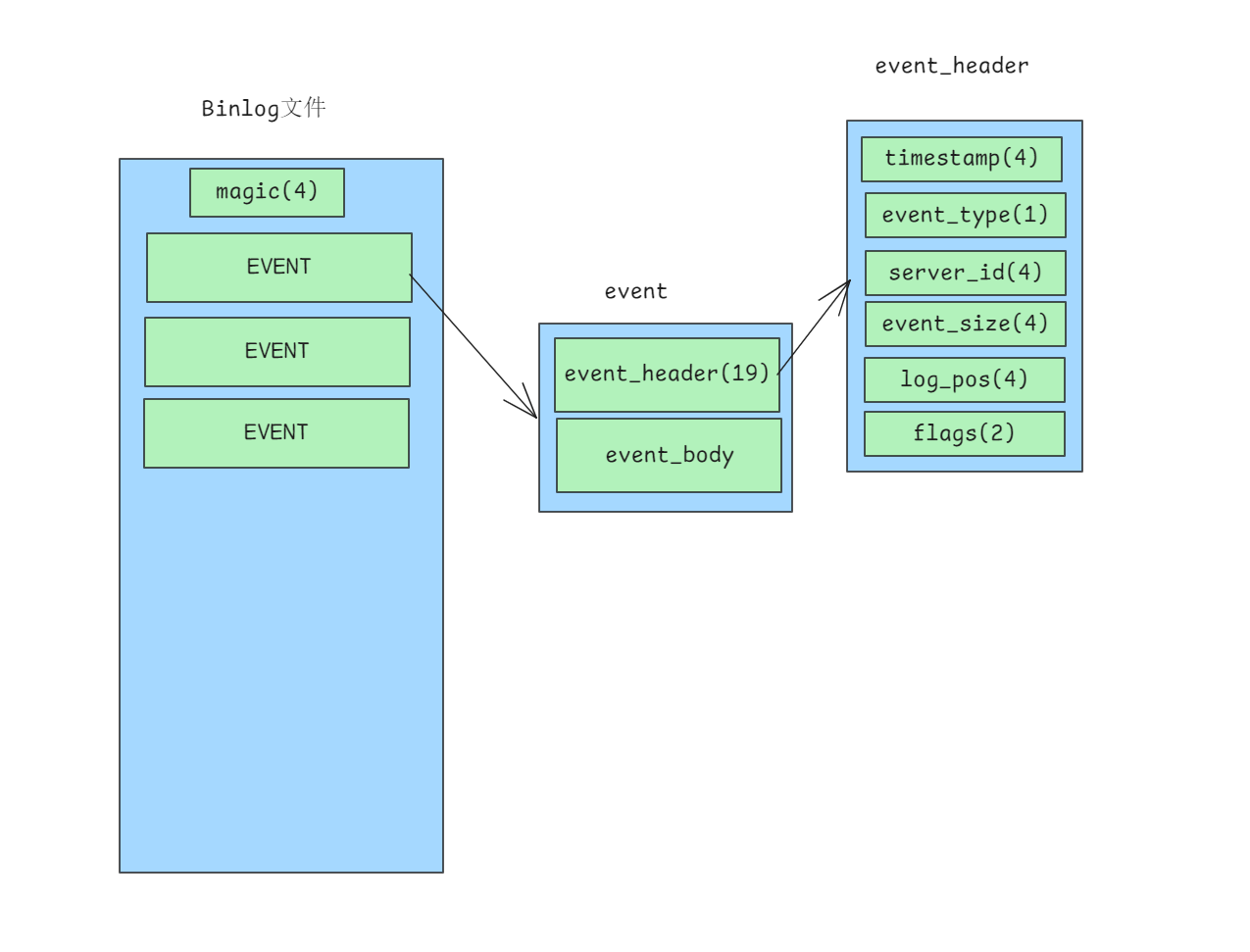

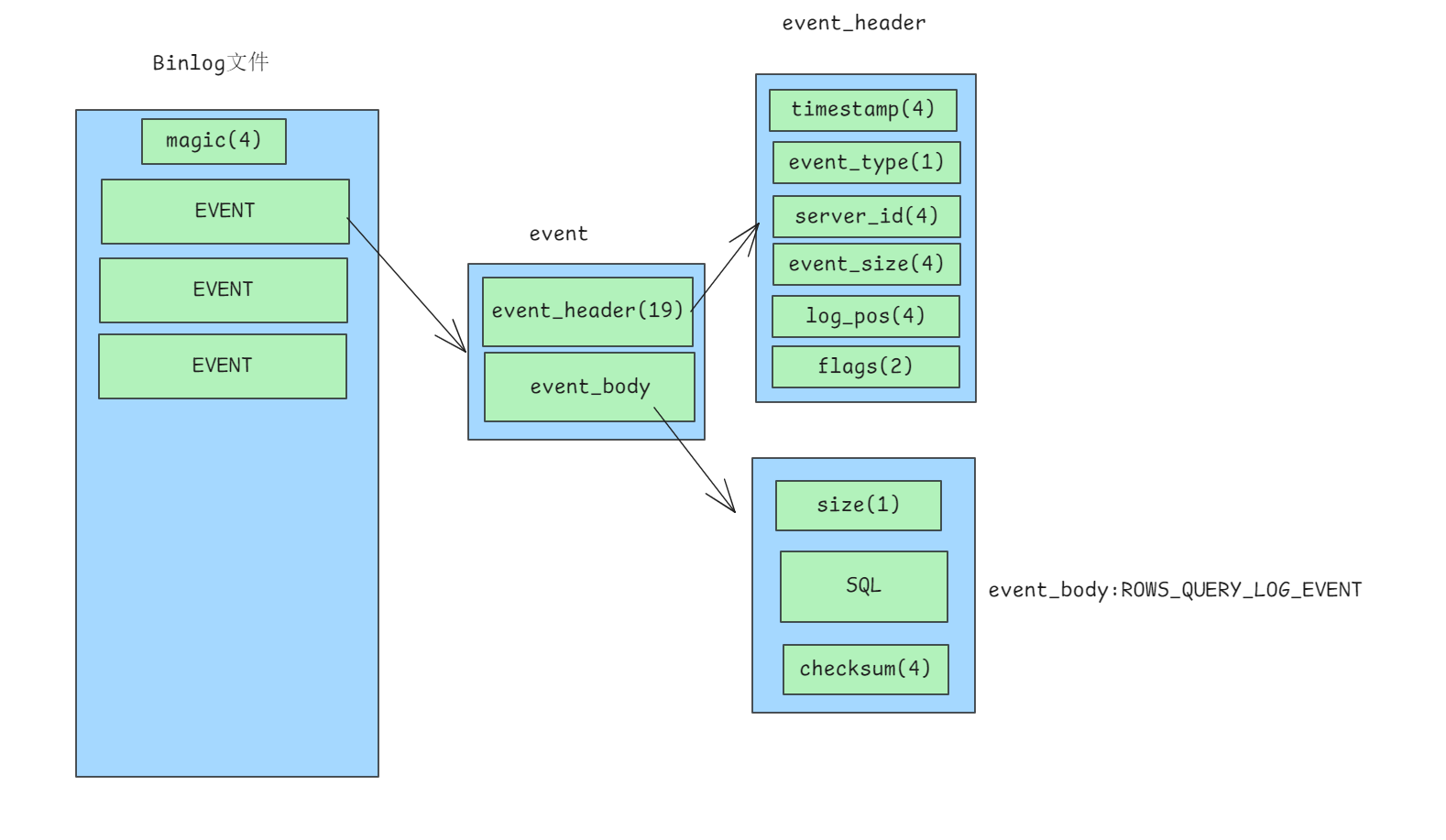

我们现在的需求是要只提取binlog中的业务SQL--ROWS_QUERY_LOG_EVENT, 貌似没有现成好用点的工具, 那我们就自己写一个吧. 我们先来回顾下binlog的格式: binlog由若干个event组成, 每个event由19字节的event_header和event_body组成.如下:

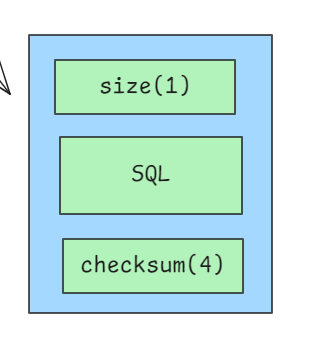

每种event的event_body结构都不一样, 本次的ROWS_QUERY_LOG_EVENT格式如下:

size是固定的1字节,用来记录业务SQL的长度, 超过1字节的部分,只记录1字节的内容. 也就是长度对255求余.

感觉没啥意义

然后就是编写脚本了, 由于我们要考虑的场景比较特殊, 就不做成通用的了, 也不考虑--start-position,--start-datetime,--table-include之类的功能了, 主打一个能直接手敲! 有兴趣的自己添加. 脚本如下:

#!/usr/bin/env python3

# write by ddcw @https://github.com/ddcw

# 解析binlog中 QUERY_EVENT和ROWS_QUERY_LOG_EVENT, 也就是开启参数binlog_rows_query_log_events的就能解析

# 简单解析, 先不支持时间过滤,指定POS等

import datetime

import struct

import sys

def format_timestamp(t):

return datetime.datetime.fromtimestamp(t).strftime('%Y-%m-%d %H:%M:%S')

def main():

filename = sys.argv[1]

with open(filename,'rb') as f:

checksum_alg = False

if f.read(4) != b'\xfebin':

f.tell(0,0) # relay log

while True:

bevent_header = f.read(19)

if len(bevent_header) != 19:

break

timestamp, event_type, server_id, event_size, log_pos, flags = struct.unpack('<LBLLLH',bevent_header)

msg = f'# time:{format_timestamp(timestamp)} server_id:{server_id} event_type:{event_type} event_size:{event_size} log_pos:{log_pos}'

#bevent_body = f.read(event_size-19)

#continue

if event_type == 15: # FORMAT_DESCRIPTION_EVENT

binlog_version, = struct.unpack('<H',f.read(2))

mysql_version_id = f.read(50).decode().strip()

create_timestamp, = struct.unpack('<L',f.read(4))

event_header_length, = struct.unpack('<B',f.read(1))

if mysql_version_id[:2] == '5.': # 5.x

event_post_header_len = f.read(38)

elif mysql_version_id[:4] == '8.4.': # 8.4

event_post_header_len = f.read(43)

elif mysql_version_id[:2] == '8.': # 8.0

event_post_header_len = f.read(41)

checksum_alg = True if struct.unpack('<B',f.read(1))[0] else 0

if checksum_alg:

f.seek(4,1)

print(f'{msg} create_time {format_timestamp(create_timestamp)} mysql_version:{mysql_version_id} create_time:{format_timestamp(create_timestamp)}')

elif event_type == 2: # QUERY_EVENT DDL

data = f.read(event_size-19)

thread_id,query_exec_time,db_len,error_code,status_vars_len = struct.unpack('<LLBHH',data[:13])

dbname = data[13+status_vars_len:][:db_len].decode()

ddl = data[13+status_vars_len+db_len+1:-4 if checksum_alg else -1].decode()

l = '\n'

if ddl != 'BEGIN':

print(f'{msg} thread_id:{thread_id} query_exec_time:{query_exec_time}\n{"USE "+dbname+";"+l if db_len>0 else ""}{ddl}\n\n')

elif event_type == 3: # STOP_EVENT 文件结束了

break

elif event_type == 33: # GTID_LOG_EVENT begin

f.seek(event_size-19,1)

print(f'{msg}\nBEGIN;')

elif event_type == 29: # ROWS_QUERY_LOG_EVENT query

data = f.read(event_size-19)

print(f'{msg}\n{data[1:-4 if checksum_alg else -1].decode()};')

elif event_type == 16: # XID_EVENT commit

f.seek(event_size-19,1)

print(f'{msg}\nCOMMIT;\n\n')

else: # 剩余的事务全部跳过

f.seek(event_size-19,1)

if __name__ == '__main__':

main()然后我们来测试下效果:

-- 删除存在的表,可选

drop table if exists db1.t20251120_rows_query;

-- 刷新下日志, 方便后续校验

flush binary logs;

-- 建表

create table db1.t20251120_rows_query(id int primary key auto_increment, name varchar(200));

-- 准备时间

insert into db1.t20251120_rows_query(name) values('ddcw');

-- 多加几条,比如来个10来遍

insert into db1.t20251120_rows_query(name) select name from db1.t20251120_rows_query;

insert into db1.t20251120_rows_query(name) select name from db1.t20251120_rows_query;

-- ....

-- 可以再删除几条,看看效果

delete from db1.t20251120_rows_query limit 10;

-- 看下日志叫啥

select @@log_bin_basename;

show master status;

然后我们就可以使用我们的校验来看下效果了:

17:07:23 [root@ddcw21 ei]#python3 get_sql_by_rows_query_log_event.py /data/mysql_3314/mysqllog/binlog/m3314.000106

# time:2025-11-20 17:06:38 server_id:866003314 event_type:15 event_size:122 log_pos:126 create_time 1970-01-01 08:00:00 mysql_version:8.0.28 create_time:1970-01-01 08:00:00

# time:2025-11-20 17:06:38 server_id:866003314 event_type:33 event_size:79 log_pos:276

BEGIN;

# time:2025-11-20 17:06:38 server_id:866003314 event_type:2 event_size:177 log_pos:453 thread_id:10 query_exec_time:0

USE db1;

create table db1.t20251120_rows_query(id int primary key auto_increment, name varchar(200))

# time:2025-11-20 17:06:38 server_id:866003314 event_type:33 event_size:79 log_pos:532

BEGIN;

# time:2025-11-20 17:06:38 server_id:866003314 event_type:29 event_size:81 log_pos:687

insert into db1.t20251120_rows_query(name) values('ddcw');

# time:2025-11-20 17:06:38 server_id:866003314 event_type:16 event_size:31 log_pos:835

COMMIT;

# time:2025-11-20 17:06:38 server_id:866003314 event_type:33 event_size:79 log_pos:914

BEGIN;

# time:2025-11-20 17:06:38 server_id:866003314 event_type:29 event_size:108 log_pos:1096

insert into db1.t20251120_rows_query(name) select name from db1.t20251120_rows_query;

# time:2025-11-20 17:06:38 server_id:866003314 event_type:16 event_size:31 log_pos:1244

COMMIT;

# time:2025-11-20 17:06:39 server_id:866003314 event_type:33 event_size:79 log_pos:1323

BEGIN;

# time:2025-11-20 17:06:39 server_id:866003314 event_type:29 event_size:108 log_pos:1505

insert into db1.t20251120_rows_query(name) select name from db1.t20251120_rows_query;

# time:2025-11-20 17:06:39 server_id:866003314 event_type:16 event_size:31 log_pos:1664

COMMIT;

# time:2025-11-20 17:06:40 server_id:866003314 event_type:33 event_size:79 log_pos:1743

BEGIN;

# time:2025-11-20 17:06:40 server_id:866003314 event_type:29 event_size:108 log_pos:1925

insert into db1.t20251120_rows_query(name) select name from db1.t20251120_rows_query;

# time:2025-11-20 17:06:40 server_id:866003314 event_type:16 event_size:31 log_pos:2106

COMMIT;

# time:2025-11-20 17:06:41 server_id:866003314 event_type:33 event_size:79 log_pos:2185

BEGIN;

# time:2025-11-20 17:06:41 server_id:866003314 event_type:29 event_size:108 log_pos:2367

insert into db1.t20251120_rows_query(name) select name from db1.t20251120_rows_query;

# time:2025-11-20 17:06:41 server_id:866003314 event_type:16 event_size:31 log_pos:2592

COMMIT;

# time:2025-11-20 17:06:41 server_id:866003314 event_type:33 event_size:79 log_pos:2671

BEGIN;

# time:2025-11-20 17:06:41 server_id:866003314 event_type:29 event_size:108 log_pos:2853

insert into db1.t20251120_rows_query(name) select name from db1.t20251120_rows_query;

# time:2025-11-20 17:06:41 server_id:866003314 event_type:16 event_size:31 log_pos:3166

COMMIT;

# time:2025-11-20 17:06:42 server_id:866003314 event_type:33 event_size:79 log_pos:3245

BEGIN;

# time:2025-11-20 17:06:42 server_id:866003314 event_type:29 event_size:108 log_pos:3427

insert into db1.t20251120_rows_query(name) select name from db1.t20251120_rows_query;

# time:2025-11-20 17:06:42 server_id:866003314 event_type:16 event_size:31 log_pos:3916

COMMIT;

# time:2025-11-20 17:06:42 server_id:866003314 event_type:33 event_size:79 log_pos:3995

BEGIN;

# time:2025-11-20 17:06:42 server_id:866003314 event_type:29 event_size:108 log_pos:4177

insert into db1.t20251120_rows_query(name) select name from db1.t20251120_rows_query;

# time:2025-11-20 17:06:42 server_id:866003314 event_type:16 event_size:31 log_pos:5018

COMMIT;

# time:2025-11-20 17:06:43 server_id:866003314 event_type:33 event_size:79 log_pos:5097

BEGIN;

# time:2025-11-20 17:06:43 server_id:866003314 event_type:29 event_size:108 log_pos:5279

insert into db1.t20251120_rows_query(name) select name from db1.t20251120_rows_query;

# time:2025-11-20 17:06:43 server_id:866003314 event_type:16 event_size:31 log_pos:6824

COMMIT;

# time:2025-11-20 17:06:43 server_id:866003314 event_type:33 event_size:79 log_pos:6903

BEGIN;

# time:2025-11-20 17:06:43 server_id:866003314 event_type:29 event_size:108 log_pos:7085

insert into db1.t20251120_rows_query(name) select name from db1.t20251120_rows_query;

# time:2025-11-20 17:06:43 server_id:866003314 event_type:16 event_size:31 log_pos:10038

COMMIT;

# time:2025-11-20 17:06:44 server_id:866003314 event_type:33 event_size:79 log_pos:10117

BEGIN;

# time:2025-11-20 17:06:44 server_id:866003314 event_type:29 event_size:108 log_pos:10299

insert into db1.t20251120_rows_query(name) select name from db1.t20251120_rows_query;

# time:2025-11-20 17:06:44 server_id:866003314 event_type:16 event_size:31 log_pos:16068

COMMIT;

# time:2025-11-20 17:06:44 server_id:866003314 event_type:33 event_size:79 log_pos:16147

BEGIN;

# time:2025-11-20 17:06:44 server_id:866003314 event_type:29 event_size:108 log_pos:16329

insert into db1.t20251120_rows_query(name) select name from db1.t20251120_rows_query;

# time:2025-11-20 17:06:44 server_id:866003314 event_type:16 event_size:31 log_pos:27765

COMMIT;

# time:2025-11-20 17:06:45 server_id:866003314 event_type:33 event_size:79 log_pos:27844

BEGIN;

# time:2025-11-20 17:06:45 server_id:866003314 event_type:29 event_size:108 log_pos:28026

insert into db1.t20251120_rows_query(name) select name from db1.t20251120_rows_query;

# time:2025-11-20 17:06:45 server_id:866003314 event_type:16 event_size:31 log_pos:50761

COMMIT;

# time:2025-11-20 17:06:45 server_id:866003314 event_type:33 event_size:79 log_pos:50840

BEGIN;

# time:2025-11-20 17:06:45 server_id:866003314 event_type:29 event_size:108 log_pos:51022

insert into db1.t20251120_rows_query(name) select name from db1.t20251120_rows_query;

# time:2025-11-20 17:06:45 server_id:866003314 event_type:16 event_size:31 log_pos:96390

COMMIT;

# time:2025-11-20 17:06:46 server_id:866003314 event_type:33 event_size:80 log_pos:96470

BEGIN;

# time:2025-11-20 17:06:46 server_id:866003314 event_type:29 event_size:108 log_pos:96652

insert into db1.t20251120_rows_query(name) select name from db1.t20251120_rows_query;

# time:2025-11-20 17:06:46 server_id:866003314 event_type:16 event_size:31 log_pos:187286

COMMIT;

# time:2025-11-20 17:06:46 server_id:866003314 event_type:33 event_size:80 log_pos:187366

BEGIN;

# time:2025-11-20 17:06:46 server_id:866003314 event_type:29 event_size:108 log_pos:187548

insert into db1.t20251120_rows_query(name) select name from db1.t20251120_rows_query;

# time:2025-11-20 17:06:46 server_id:866003314 event_type:16 event_size:31 log_pos:368679

COMMIT;

# time:2025-11-20 17:06:47 server_id:866003314 event_type:33 event_size:80 log_pos:368759

BEGIN;

# time:2025-11-20 17:06:47 server_id:866003314 event_type:29 event_size:108 log_pos:368941

insert into db1.t20251120_rows_query(name) select name from db1.t20251120_rows_query;

# time:2025-11-20 17:06:47 server_id:866003314 event_type:16 event_size:31 log_pos:731066

COMMIT;

# time:2025-11-20 17:06:51 server_id:866003314 event_type:33 event_size:79 log_pos:731145

BEGIN;

# time:2025-11-20 17:06:51 server_id:866003314 event_type:29 event_size:69 log_pos:731288

delete from db1.t20251120_rows_query limit 10;

# time:2025-11-20 17:06:51 server_id:866003314 event_type:16 event_size:31 log_pos:731535

COMMIT;效果很满意, 下次还会回购

看起来是达到了我们要的效果, 那本该有的一堆堆的数据没了,只剩下我们需要的业务SQL, 而且解析很快,资源也几乎不消耗(除非业务SQL很多),tmpdir也不需要设置了,简直完美! 剩下的就是分析了(分析其实也可以使用脚本的).

总结

本次需求不复杂,只是查看下binlog中记录的业务SQL而已, 所以能很快的编写相关脚本来实现, 前提是得熟悉binlog的结构, 也就是打好基础很重要!

参考: https://dev.mysql.com/doc/refman/8.0/en/replication-options-binary-log.html

原创声明:本文系作者授权腾讯云开发者社区发表,未经许可,不得转载。

如有侵权,请联系 cloudcommunity@tencent.com 删除。

原创声明:本文系作者授权腾讯云开发者社区发表,未经许可,不得转载。

如有侵权,请联系 cloudcommunity@tencent.com 删除。

评论

登录后参与评论

推荐阅读

目录