Swift - AVMutableVideoCompositionLayerInstruction misalignment when merging videos

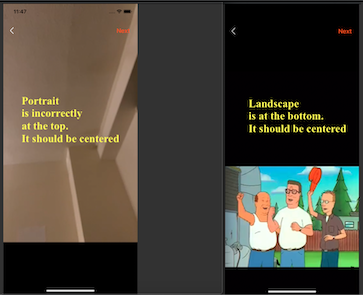

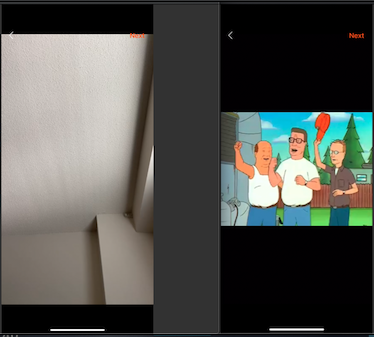

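I followed the Ray Wenderlich tutorial to merge videos. The finished result is one merged video in which portrait videos sit at the top of the screen and landscape videos sit at the bottom. In the images, the portrait video plays first and the landscape video plays after it. The landscape video is from the Photos Library.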

Code:

func mergVideos() {

let mixComposition = AVMutableComposition()

let videoCompositionTrack = mixComposition.addMutableTrack(withMediaType: .video, preferredTrackID: Int32(kCMPersistentTrackID_Invalid))

let audioCompositionTrack = mixComposition.addMutableTrack(withMediaType: .audio, preferredTrackID: Int32(kCMPersistentTrackID_Invalid))

var count = 0

var insertTime = CMTime.zero

var instructions = [AVMutableVideoCompositionInstruction]()

for videoAsset in arrOfAssets {

let audioTrack = videoAsset.tracks(withMediaType: .audio)[0]

do {

try videoCompositionTrack?.insertTimeRange(CMTimeRangeMake(start: .zero, duration: videoAsset.duration), of: videoAsset.tracks(withMediaType: .video)[0], at: insertTime)

try audioCompositionTrack?.insertTimeRange(CMTimeRangeMake(start: .zero, duration: videoAsset.duration), of: audioTrack, at: insertTime)

let layerInstruction = videoCompositionInstruction(videoCompositionTrack!, asset: videoAsset, count: count)

let videoCompositionInstruction = AVMutableVideoCompositionInstruction()

videoCompositionInstruction.timeRange = CMTimeRangeMake(start: insertTime, duration: videoAsset.duration)

videoCompositionInstruction.layerInstructions = [layerInstruction]

instructions.append(videoCompositionInstruction)

insertTime = CMTimeAdd(insertTime, videoAsset.duration)

count += 1

} catch { }

}

let videoComposition = AVMutableVideoComposition()

videoComposition.instructions = instructions

videoComposition.frameDuration = CMTimeMake(value: 1, timescale: 30)

videoComposition.renderSize = CGSize(width: UIScreen.main.bounds.width, height: UIScreen.main.bounds.height)

// ...

exporter.videoComposition = videoComposition

}

Ray Wenderlich code:

func videoCompositionInstruction(_ track: AVCompositionTrack, asset: AVAsset, count: Int) -> AVMutableVideoCompositionLayerInstruction {

let instruction = AVMutableVideoCompositionLayerInstruction(assetTrack: track)

let assetTrack = asset.tracks(withMediaType: .video)[0]

let transform = assetTrack.preferredTransform

let assetInfo = orientationFromTransform(transform)

var scaleToFitRatio = UIScreen.main.bounds.width / assetTrack.naturalSize.width

if assetInfo.isPortrait {

scaleToFitRatio = UIScreen.main.bounds.width / assetTrack.naturalSize.height

let scaleFactor = CGAffineTransform(scaleX: scaleToFitRatio, y: scaleToFitRatio)

instruction.setTransform(assetTrack.preferredTransform.concatenating(scaleFactor), at: .zero)

} else {

let scaleFactor = CGAffineTransform(scaleX: scaleToFitRatio, y: scaleToFitRatio)

var concat = assetTrack.preferredTransform.concatenating(scaleFactor)

.concatenating(CGAffineTransform(translationX: 0,y: UIScreen.main.bounds.width / 2))

if assetInfo.orientation == .down {

let fixUpsideDown = CGAffineTransform(rotationAngle: CGFloat(Double.pi))

let windowBounds = UIScreen.main.bounds

let yFix = assetTrack.naturalSize.height + windowBounds.height

let centerFix = CGAffineTransform(translationX: assetTrack.naturalSize.width, y: yFix)

concat = fixUpsideDown.concatenating(centerFix).concatenating(scaleFactor)

}

instruction.setTransform(concat, at: .zero)

}

if count == 0 {

instruction.setOpacity(0.0, at: asset.duration)

}

return instruction

}

func orientationFromTransform(_ transform: CGAffineTransform) -> (orientation: UIImage.Orientation, isPortrait: Bool) {

var assetOrientation = UIImage.Orientation.up

var isPortrait = false

let tfA = transform.a

let tfB = transform.b

let tfC = transform.c

let tfD = transform.d

if tfA == 0 && tfB == 1.0 && tfC == -1.0 && tfD == 0 {

assetOrientation = .right

isPortrait = true

} else if tfA == 0 && tfB == -1.0 && tfC == 1.0 && tfD == 0 {

assetOrientation = .left

isPortrait = true

} else if tfA == 1.0 && tfB == 0 && tfC == 0 && tfD == 1.0 {

assetOrientation = .up

} else if tfA == -1.0 && tfB == 0 && tfC == 0 && tfD == -1.0 {

assetOrientation = .down

}

return (assetOrientation, isPortrait)

}

I also followed the code from this Medium post. It sets the render size to a default of let renderSize = CGSize(width: 1280.0, height: 720.0), whereas Ray's code uses the entire screen.

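With 1280/720, the portrait video is correct, but for the landscape video the sound plays and the video is nowhere on the screen. I didn't add a picture of the landscape result because it's just a black screen.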

1 Answer

Stack Overflow user

Posted on 2022-03-13 14:50:44

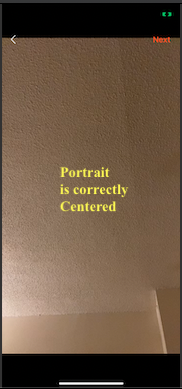

I got it working for both portrait and landscape.

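I tested this answer with videos recorded in portrait, landscape left/right, upside down, with the front camera, and with the back camera. I haven't had any issues. I'm not a CGAffineTransform expert, so if anyone has a better answer, please post it.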

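Ray's merging code works, but it doesn't handle videos with different orientations. I used this answer to inspect the properties of preferredTransform for the orientation check.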
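As a rough illustration of that kind of check, here is a minimal sketch that classifies a transform by its a/b/c/d components. Matrix2x2, VideoOrientation, nearlyEqual, and classify are hypothetical names used only for this example, and the plain struct stands in for CGAffineTransform so the snippet runs without CoreGraphics. Comparing with a tolerance instead of == is an assumption on my part, and unlike the answer's function (which defaults to .up) this sketch reports unmatched transforms as .unknown.

```swift
// Hypothetical stand-in for the a/b/c/d components of a CGAffineTransform.
struct Matrix2x2 {
    var a: Double, b: Double, c: Double, d: Double
}

// Hypothetical enum mirroring the UIImage.Orientation cases used in the answer.
enum VideoOrientation { case up, down, left, right, leftMirrored, rightMirrored, unknown }

// Tolerant comparison (an assumption; exact == checks may be enough in practice).
func nearlyEqual(_ x: Double, _ y: Double, tolerance: Double = 0.001) -> Bool {
    return abs(x - y) < tolerance
}

func classify(_ m: Matrix2x2) -> (orientation: VideoOrientation, isPortrait: Bool) {
    func matches(_ a: Double, _ b: Double, _ c: Double, _ d: Double) -> Bool {
        return nearlyEqual(m.a, a) && nearlyEqual(m.b, b)
            && nearlyEqual(m.c, c) && nearlyEqual(m.d, d)
    }
    // Same component patterns as the orientation check in this answer.
    if matches(0, 1, -1, 0) { return (.right, true) }
    if matches(0, 1, 1, 0) { return (.rightMirrored, true) }
    if matches(0, -1, 1, 0) { return (.left, true) }
    if matches(0, -1, -1, 0) { return (.leftMirrored, true) }
    if matches(1, 0, 0, 1) { return (.up, false) }
    if matches(-1, 0, 0, -1) { return (.down, false) }
    return (.unknown, false)
}

let rotated = classify(Matrix2x2(a: 0, b: 1, c: -1, d: 0))
print(rotated.orientation, rotated.isPortrait) // right true
```

With a real track you would feed in the a, b, c, and d values of its preferredTransform.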

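I also used another answer for the portrait orientation part and a downvoted answer for the landscape orientation part. The downvoted answer led me to its author's GitHub, where the landscape code was incorrect but close enough that I could tweak it until it worked.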

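One thing to point out is that @DonMag's comments told me about the benefit of using 720x1280. The code below merges all of the videos with a renderSize of 720x1280, which keeps them the same size.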
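To make the effect of a fixed 720x1280 canvas concrete, here is a small sketch of the fit-to-width arithmetic. FitResult and fitToWidth are hypothetical names for this example, plain Doubles are used so it runs without CoreGraphics, and the 1920x1080 input is just an assumed landscape size.

```swift
// Hypothetical result type for this sketch.
struct FitResult {
    let scale: Double        // uniform scale that makes the video as wide as the canvas
    let scaledHeight: Double // video height after scaling
    let offsetY: Double      // vertical offset that centers the video on the canvas
}

// Scale a video (already in its display orientation) to the canvas width
// and center it vertically.
func fitToWidth(videoWidth: Double, videoHeight: Double,
                renderWidth: Double, renderHeight: Double) -> FitResult {
    let scale = renderWidth / videoWidth
    let scaledHeight = videoHeight * scale
    let offsetY = (renderHeight - scaledHeight) / 2
    return FitResult(scale: scale, scaledHeight: scaledHeight, offsetY: offsetY)
}

// An assumed 1920x1080 landscape video on the 720x1280 canvas.
let landscape = fitToWidth(videoWidth: 1920, videoHeight: 1080,
                           renderWidth: 720, renderHeight: 1280)
print(landscape.scale, landscape.scaledHeight, landscape.offsetY) // 0.375 405.0 437.5
```

A 1080x1920 portrait video fills the same canvas with an offset of roughly zero, so both kinds of clip end up the same width in the merged output.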

Code:

// class property

let renderSize = CGSize(width: 720, height: 1280) // for higher quality use CGSize(width: 1080, height: 1920)

func mergVideos() {

let mixComposition = AVMutableComposition()

let videoCompositionTrack = mixComposition.addMutableTrack(withMediaType: .video, preferredTrackID: Int32(kCMPersistentTrackID_Invalid))

let audioCompositionTrack = mixComposition.addMutableTrack(withMediaType: .audio, preferredTrackID: Int32(kCMPersistentTrackID_Invalid))

var count = 0

var insertTime = CMTime.zero

var instructions = [AVMutableVideoCompositionInstruction]()

for videoAsset in arrOfAssets {

guard let firstTrack = videoAsset.tracks.first, let _ = videoAsset.tracks(withMediaType: .video).first else { continue }

do {

try videoCompositionTrack?.insertTimeRange(CMTimeRangeMake(start: .zero, duration: videoAsset.duration), of: videoAsset.tracks(withMediaType: .video)[0], at: insertTime)

if let audioTrack = videoAsset.tracks(withMediaType: .audio).first {

try audioCompositionTrack?.insertTimeRange(CMTimeRangeMake(start: .zero, duration: videoAsset.duration), of: audioTrack, at: insertTime)

}

let layerInstruction = videoCompositionInstruction(firstTrack, asset: videoAsset, count: count)

let videoCompositionInstruction = AVMutableVideoCompositionInstruction()

videoCompositionInstruction.timeRange = CMTimeRangeMake(start: insertTime, duration: videoAsset.duration)

videoCompositionInstruction.layerInstructions = [layerInstruction]

instructions.append(videoCompositionInstruction)

insertTime = CMTimeAdd(insertTime, videoAsset.duration)

count += 1

} catch { print("Failed to insert tracks: \(error)") } // report the error instead of silently ignoring it

}

let videoComposition = AVMutableVideoComposition()

videoComposition.instructions = instructions

videoComposition.frameDuration = CMTimeMake(value: 1, timescale: 30)

videoComposition.renderSize = self.renderSize // <--- **** IMPORTANT ****

// ...

exporter.videoComposition = videoComposition

}

The most important part of this answer, which replaces the RW code:

func videoCompositionInstruction(_ firstTrack: AVAssetTrack, asset: AVAsset, count: Int) -> AVMutableVideoCompositionLayerInstruction {

let instruction = AVMutableVideoCompositionLayerInstruction(assetTrack: firstTrack)

let assetTrack = asset.tracks(withMediaType: .video)[0]

let t = assetTrack.fixedPreferredTransform // new transform fix

let assetInfo = orientationFromTransform(t)

if assetInfo.isPortrait {

let scaleToFitRatio = self.renderSize.width / assetTrack.naturalSize.height

let scaleFactor = CGAffineTransform(scaleX: scaleToFitRatio, y: scaleToFitRatio)

var finalTransform = assetTrack.fixedPreferredTransform.concatenating(scaleFactor)

// This was needed in the case of the OP's answer that I used for the portrait part. I haven't tested this but this is what he said: "(if video not taking entire screen and leaving some parts black - don't know when actually needed so you'll have to try and see when it's needed)"

if assetInfo.orientation == .rightMirrored || assetInfo.orientation == .leftMirrored {

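finalTransform = finalTransform.translatedBy(x: -t.ty, y: 0) // `transform` is not defined in this branch; `t` (the fixed preferred transform) is assumed to be what was meant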

}

instruction.setTransform(finalTransform, at: CMTime.zero)

} else {

let renderRect = CGRect(x: 0, y: 0, width: self.renderSize.width, height: self.renderSize.height)

let videoRect = CGRect(origin: .zero, size: assetTrack.naturalSize).applying(assetTrack.fixedPreferredTransform)

let scale = renderRect.width / videoRect.width

let transform = CGAffineTransform(scaleX: renderRect.width / videoRect.width, y: (videoRect.height * scale) / assetTrack.naturalSize.height)

let translate = CGAffineTransform(translationX: .zero, y: ((self.renderSize.height - (videoRect.height * scale))) / 2)

instruction.setTransform(assetTrack.fixedPreferredTransform.concatenating(transform).concatenating(translate), at: .zero)

}

if count == 0 {

instruction.setOpacity(0.0, at: asset.duration)

}

return instruction

}

New orientation check:

func orientationFromTransform(_ transform: CGAffineTransform) -> (orientation: UIImage.Orientation, isPortrait: Bool) {

var assetOrientation = UIImage.Orientation.up

var isPortrait = false

if transform.a == 0 && transform.b == 1.0 && transform.c == -1.0 && transform.d == 0 {

assetOrientation = .right

isPortrait = true

} else if transform.a == 0 && transform.b == 1.0 && transform.c == 1.0 && transform.d == 0 {

assetOrientation = .rightMirrored

isPortrait = true

} else if transform.a == 0 && transform.b == -1.0 && transform.c == 1.0 && transform.d == 0 {

assetOrientation = .left

isPortrait = true

} else if transform.a == 0 && transform.b == -1.0 && transform.c == -1.0 && transform.d == 0 {

assetOrientation = .leftMirrored

isPortrait = true

} else if transform.a == 1.0 && transform.b == 0 && transform.c == 0 && transform.d == 1.0 {

assetOrientation = .up

} else if transform.a == -1.0 && transform.b == 0 && transform.c == 0 && transform.d == -1.0 {

assetOrientation = .down

}

return (assetOrientation, isPortrait)

}

preferredTransform fix:
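Before the extension itself, here is a rough sketch of what its tx/ty values appear to achieve: after the rotation or flip, the frame's bounding box starts at the origin again instead of sitting at negative coordinates. Affine and boundingBox are hypothetical names for this example. The struct copies the component names and point mapping of CGAffineTransform so it runs without CoreGraphics, and the 1920x1080 natural size is an assumption.

```swift
// Hypothetical stand-in for CGAffineTransform (same component names).
struct Affine {
    var a: Double, b: Double, c: Double, d: Double, tx: Double, ty: Double

    // Point mapping: x' = a*x + c*y + tx, y' = b*x + d*y + ty.
    func apply(x: Double, y: Double) -> (x: Double, y: Double) {
        return (a * x + c * y + tx, b * x + d * y + ty)
    }
}

// Bounding box of the rect (0, 0, width, height) after the transform is applied.
func boundingBox(of t: Affine, width: Double, height: Double)
    -> (minX: Double, minY: Double, width: Double, height: Double) {
    let corners = [t.apply(x: 0, y: 0), t.apply(x: width, y: 0),
                   t.apply(x: 0, y: height), t.apply(x: width, y: height)]
    let xs = corners.map { $0.x }
    let ys = corners.map { $0.y }
    return (xs.min()!, ys.min()!, xs.max()! - xs.min()!, ys.max()! - ys.min()!)
}

let w = 1920.0, h = 1080.0 // assumed naturalSize of the track

// Rotation only (tx = ty = 0): the frame lands left of the origin.
let unfixed = boundingBox(of: Affine(a: 0, b: 1, c: -1, d: 0, tx: 0, ty: 0),
                          width: w, height: h)

// Same rotation with tx = naturalSize.height, as in the (0, 1, -1, 0) case.
let fixed = boundingBox(of: Affine(a: 0, b: 1, c: -1, d: 0, tx: h, ty: 0),
                        width: w, height: h)

print(unfixed.minX, fixed.minX) // -1080.0 0.0
```

The other cases of the switch follow the same pattern: each tx/ty pair cancels the negative offset introduced by its rotation or flip.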

extension AVAssetTrack {

var fixedPreferredTransform: CGAffineTransform {

var t = preferredTransform

switch(t.a, t.b, t.c, t.d) {

case (1, 0, 0, 1):

t.tx = 0

t.ty = 0

case (1, 0, 0, -1):

t.tx = 0

t.ty = naturalSize.height

case (-1, 0, 0, 1):

t.tx = naturalSize.width

t.ty = 0

case (-1, 0, 0, -1):

t.tx = naturalSize.width

t.ty = naturalSize.height

case (0, -1, 1, 0):

t.tx = 0

t.ty = naturalSize.width

case (0, 1, -1, 0):

t.tx = naturalSize.height

t.ty = 0

case (0, 1, 1, 0):

t.tx = 0

t.ty = 0

case (0, -1, -1, 0):

t.tx = naturalSize.height

t.ty = naturalSize.width

default:

break

}

return t

}

}

https://stackoverflow.com/questions/71447131
