Spring Cloud Data Flow - s3 source not working with MinIO


Asked 2022-07-14 15:19:59

I'm running a docker-compose similar to the one you can find in the Spring documentation for running streams on a local machine.

```yaml
version: '3.8'

volumes:
  zookeeper_data:
    driver: local
  kafka_data:
    driver: local
  elastic_data:
    driver: local
  minio_data1:
    driver: local
  minio_data2:
    driver: local

networks:
  sdf:
    driver: "bridge"

services:
  minio:
    image: quay.io/minio/minio:latest
    container_name: minio
    command: server --console-address ":9001" http://minio/data{1...2}
    ports:
      - '9000:9000'
      - '9001:9001'
    expose:
      - "9000"
      - "9001"
    # environment:
    #   MINIO_ROOT_USER: minioadmin 093DrIkcXK8J3SC1
    #   MINIO_ROOT_PASSWORD: minioadmin CfjqeNxAtDLnUK8Fbhka8RwzfZTNlrf5
    hostname: minio
    volumes:
      - minio_data1:/data1
      - minio_data2:/data2
    networks:
      - sdf

  zookeeper:
    image: bitnami/zookeeper:3
    container_name: zookeeper
    ports:
      - '2181:2181'
    volumes:
      - 'zookeeper_data:/bitnami'
    environment:
      - ALLOW_ANONYMOUS_LOGIN=yes
    networks:
      - sdf

  kafka:
    image: bitnami/kafka:2
    container_name: kafka
    ports:
      - '9092:9092'
      - '29092:29092'
    volumes:
      - 'kafka_data:/bitnami'
    environment:
      - KAFKA_CREATE_TOPICS="requests:1:1,responses:1:1,notifications:1:1"
      #- KAFKA_AUTO_CREATE_TOPICS_ENABLE=false
      - KAFKA_CFG_ZOOKEEPER_CONNECT=zookeeper:2181
      - ALLOW_PLAINTEXT_LISTENER=yes
      - KAFKA_CFG_LISTENER_SECURITY_PROTOCOL_MAP=PLAINTEXT:PLAINTEXT,PLAINTEXT_HOST:PLAINTEXT
      - KAFKA_CFG_LISTENERS=PLAINTEXT://:29092,PLAINTEXT_HOST://:9092
      - KAFKA_CFG_ADVERTISED_LISTENERS=PLAINTEXT://kafka:29092,PLAINTEXT_HOST://localhost:9092
    depends_on:
      - zookeeper
    networks:
      - sdf

  dataflow-server:
    user: root
    image: springcloud/spring-cloud-dataflow-server:${DATAFLOW_VERSION:-2.9.1}${BP_JVM_VERSION:-}
    container_name: dataflow-server
    ports:
      - "9393:9393"
    environment:
      - SPRING_CLOUD_DATAFLOW_APPLICATIONPROPERTIES_STREAM_SPRING_CLOUD_STREAM_KAFKA_BINDER_BROKERS=PLAINTEXT://kafka:29092
      - SPRING_CLOUD_DATAFLOW_APPLICATIONPROPERTIES_STREAM_SPRING_CLOUD_STREAM_KAFKA_STREAMS_BINDER_BROKERS=PLAINTEXT://kafka:29092
      - SPRING_CLOUD_DATAFLOW_APPLICATIONPROPERTIES_STREAM_SPRING_CLOUD_STREAM_KAFKA_BINDER_ZKNODES=zookeeper:2181
      - SPRING_CLOUD_DATAFLOW_APPLICATIONPROPERTIES_STREAM_SPRING_CLOUD_STREAM_KAFKA_STREAMS_BINDER_ZKNODES=zookeeper:2181
      - SPRING_CLOUD_DATAFLOW_APPLICATIONPROPERTIES_STREAM_SPRING_KAFKA_STREAMS_PROPERTIES_METRICS_RECORDING_LEVEL=DEBUG
      # Set CLOSECONTEXTENABLED=true to ensure that the CRT launcher is closed.
      - SPRING_CLOUD_DATAFLOW_APPLICATIONPROPERTIES_TASK_SPRING_CLOUD_TASK_CLOSECONTEXTENABLED=true
      - SPRING_CLOUD_SKIPPER_CLIENT_SERVER_URI=${SKIPPER_URI:-http://skipper-server:7577}/api
      - SPRING_DATASOURCE_URL=jdbc:mysql://mysql:3306/dataflow
      - SPRING_DATASOURCE_USERNAME=root
      - SPRING_DATASOURCE_PASSWORD=rootpw
      - SPRING_DATASOURCE_DRIVER_CLASS_NAME=org.mariadb.jdbc.Driver
      # (Optionally) authenticate the default Docker Hub access for the App Metadata access.
      # - SPRING_CLOUD_DATAFLOW_CONTAINER_REGISTRY_CONFIGURATIONS_DEFAULT_USER=${METADATA_DEFAULT_DOCKERHUB_USER}
      # - SPRING_CLOUD_DATAFLOW_CONTAINER_REGISTRY_CONFIGURATIONS_DEFAULT_SECRET=${METADATA_DEFAULT_DOCKERHUB_PASSWORD}
      # - SPRING_CLOUD_DATAFLOW_CONTAINER_REGISTRYCONFIGURATIONS_DEFAULT_USER=${METADATA_DEFAULT_DOCKERHUB_USER}
      # - SPRING_CLOUD_DATAFLOW_CONTAINER_REGISTRYCONFIGURATIONS_DEFAULT_SECRET=${METADATA_DEFAULT_DOCKERHUB_PASSWORD}
    depends_on:
      - kafka
      - skipper-server
    entrypoint: >
      bin/sh -c "
      apt-get update && apt-get install --no-install-recommends -y wget &&
      wget --no-check-certificate -P /tmp/ https://raw.githubusercontent.com/vishnubob/wait-for-it/master/wait-for-it.sh &&
      chmod a+x /tmp/wait-for-it.sh &&
      /tmp/wait-for-it.sh mysql:3306 -- /cnb/process/web"
    restart: always
    volumes:
      - ${HOST_MOUNT_PATH:-.}:${DOCKER_MOUNT_PATH:-/home/cnb/scdf}
    networks:
      - sdf

  app-import-stream:
    image: springcloud/baseimage:1.0.0
    container_name: dataflow-app-import-stream
    depends_on:
      - dataflow-server
    command: >
      /bin/sh -c "
      ./wait-for-it.sh -t 360 dataflow-server:9393;
      wget -qO- '${DATAFLOW_URI:-http://dataflow-server:9393}/apps' --no-check-certificate --post-data='uri=${STREAM_APPS_URI:-https://dataflow.spring.io/kafka-maven-latest&force=true}';
      wget -qO- '${DATAFLOW_URI:-http://dataflow-server:9393}/apps/sink/ver-log/3.0.1' --no-check-certificate --post-data='uri=maven://org.springframework.cloud.stream.app:log-sink-kafka:3.0.1';
      wget -qO- '${DATAFLOW_URI:-http://dataflow-server:9393}/apps/sink/ver-log/2.1.5.RELEASE' --no-check-certificate --post-data='uri=maven://org.springframework.cloud.stream.app:log-sink-kafka:2.1.5.RELEASE';
      wget -qO- '${DATAFLOW_URI:-http://dataflow-server:9393}/apps/sink/dataflow-tasklauncher/${DATAFLOW_VERSION:-2.9.1}' --no-check-certificate --post-data='uri=maven://org.springframework.cloud:spring-cloud-dataflow-tasklauncher-sink-kafka:${DATAFLOW_VERSION:-2.9.1}';
      echo 'Maven Stream apps imported'"
    networks:
      - sdf

  skipper-server:
    user: root
    image: springcloud/spring-cloud-skipper-server:${SKIPPER_VERSION:-2.8.1}${BP_JVM_VERSION:-}
    container_name: skipper-server
    ports:
      - "7577:7577"
      - ${APPS_PORT_RANGE:-20000-20195:20000-20195}
    environment:
      - SPRING_CLOUD_SKIPPER_SERVER_PLATFORM_LOCAL_ACCOUNTS_DEFAULT_PORTRANGE_LOW=20000
      - SPRING_CLOUD_SKIPPER_SERVER_PLATFORM_LOCAL_ACCOUNTS_DEFAULT_PORTRANGE_HIGH=20190
      - SPRING_DATASOURCE_URL=jdbc:mysql://mysql:3306/dataflow
      - SPRING_DATASOURCE_USERNAME=root
      - SPRING_DATASOURCE_PASSWORD=rootpw
      - SPRING_DATASOURCE_DRIVER_CLASS_NAME=org.mariadb.jdbc.Driver
      - LOGGING_LEVEL_ORG_SPRINGFRAMEWORK_CLOUD_SKIPPER_SERVER_DEPLOYER=ERROR
    entrypoint: >
      bin/sh -c "
      apt-get update && apt-get install --no-install-recommends -y wget &&
      wget --no-check-certificate -P /tmp/ https://raw.githubusercontent.com/vishnubob/wait-for-it/master/wait-for-it.sh &&
      chmod a+x /tmp/wait-for-it.sh &&
      /tmp/wait-for-it.sh mysql:3306 -- /cnb/process/web"
    restart: always
    volumes:
      - ${HOST_MOUNT_PATH:-.}:${DOCKER_MOUNT_PATH:-/home/cnb/scdf}
    networks:
      - sdf

  mysql:
    image: mysql:5.7.25
    container_name: mysql
    environment:
      MYSQL_DATABASE: dataflow
      MYSQL_USER: root
      MYSQL_ROOT_PASSWORD: rootpw
    expose:
      - 3306
    networks:
      - sdf
```

I was able to add Elasticsearch to this docker-compose and connect to it successfully (using the elasticsearch sink), but here I'm keeping the focus on this problem.

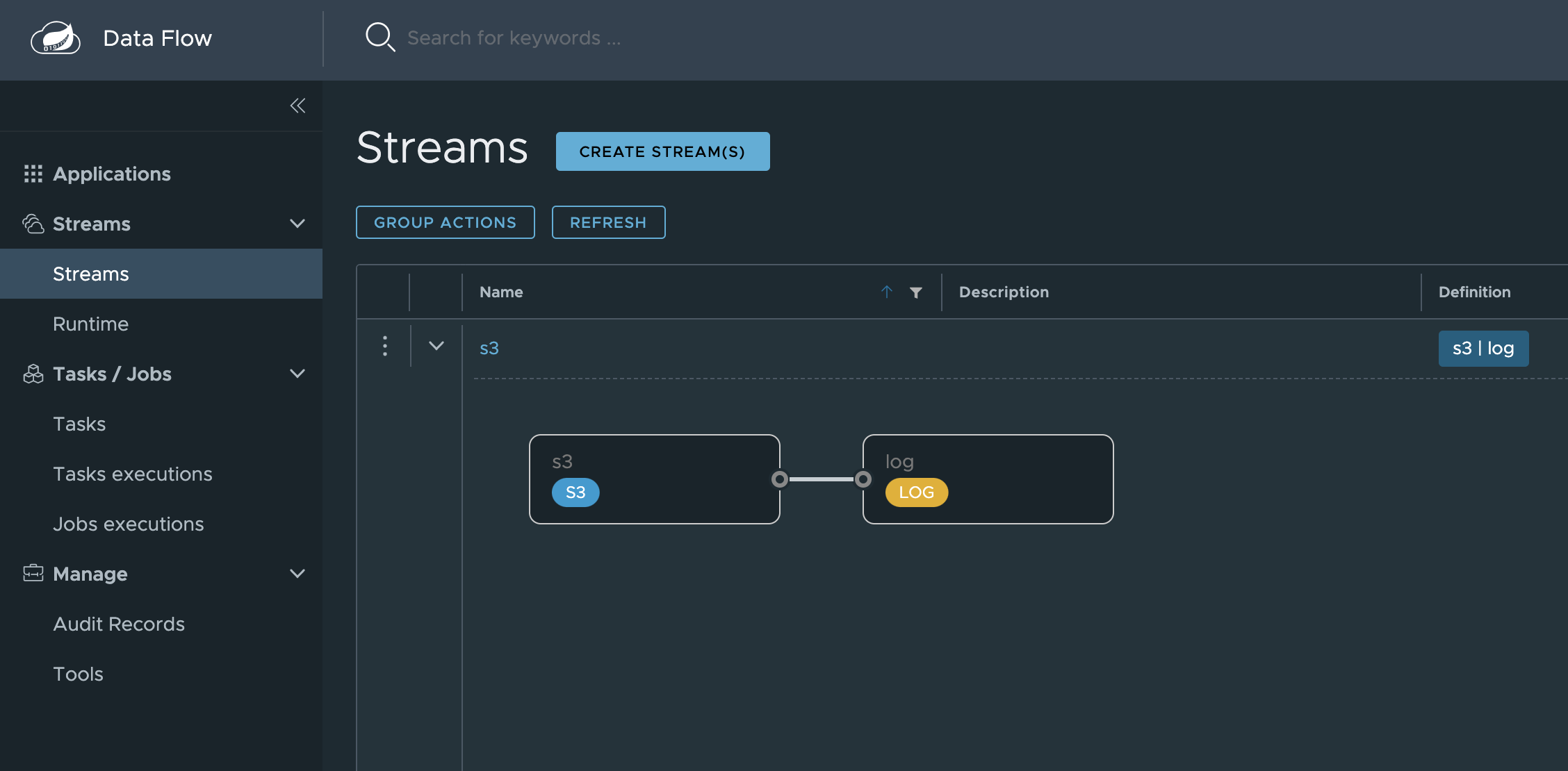

This is how I configured the s3 source:

```properties
app.s3.cloud.aws.credentials.accessKey=093DrIkcXK8J3SC1
app.s3.cloud.aws.credentials.secretKey=CfjqeNxAtDLnUK8Fbhka8RwzfZTNlrf5
app.s3.cloud.aws.region.static=us-west-1
app.s3.cloud.aws.stack.auto=false
app.s3.common.endpoint-url=http://minio:9000
app.s3.supplier.remote-dir=/kafka-connect/topics
app.s3.logging.level.org.apache.http=DEBUG
```
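The log that follows shows the client contacting the host `kafka-connect.minio`, which suggests the source treats the first segment of `remote-dir` as the bucket name and the remainder as a key prefix. The sketch below illustrates that split under this assumption; `RemoteDirSplit` and `splitBucketAndPrefix` are hypothetical names for illustration, not part of the spring-integration-aws API.

```java
class RemoteDirSplit {

    // Hypothetical helper (not the library's API): splits a remote-dir value such as
    // "/kafka-connect/topics" into { bucket, keyPrefix }, assuming the first path
    // segment names the bucket and everything after it is the key prefix.
    static String[] splitBucketAndPrefix(String remoteDir) {
        String path = remoteDir.startsWith("/") ? remoteDir.substring(1) : remoteDir;
        int slash = path.indexOf('/');
        if (slash < 0) {
            // No prefix: the whole value is the bucket name.
            return new String[] {path, ""};
        }
        return new String[] {path.substring(0, slash), path.substring(slash + 1)};
    }

    public static void main(String[] args) {
        String[] parts = splitBucketAndPrefix("/kafka-connect/topics");
        // prints: bucket=kafka-connect, prefix=topics
        System.out.println("bucket=" + parts[0] + ", prefix=" + parts[1]);
    }
}
```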

This is the error I'm getting:

```
2022-07-14 14:11:32.625 DEBUG [s3-source,,] 470 --- [oundedElastic-4] o.a.h.client.protocol.RequestAddCookies : CookieSpec selected: default
2022-07-14 14:11:32.625 DEBUG [s3-source,,] 470 --- [oundedElastic-4] o.a.h.client.protocol.RequestAuthCache : Auth cache not set in the context
2022-07-14 14:11:32.625 DEBUG [s3-source,,] 470 --- [oundedElastic-4] h.i.c.PoolingHttpClientConnectionManager : Connection request: [route: {}->http://kafka-connect.minio:9000][total available: 0; route allocated: 0 of 50; total allocated: 0 of 50]
2022-07-14 14:11:32.626 DEBUG [s3-source,,] 470 --- [oundedElastic-4] h.i.c.PoolingHttpClientConnectionManager : Connection leased: [id: 44][route: {}->http://kafka-connect.minio:9000][total available: 0; route allocated: 1 of 50; total allocated: 1 of 50]
2022-07-14 14:11:32.626 DEBUG [s3-source,,] 470 --- [oundedElastic-4] o.a.http.impl.execchain.MainClientExec : Opening connection {}->http://kafka-connect.minio:9000
2022-07-14 14:11:32.626 DEBUG [s3-source,,] 470 --- [oundedElastic-4] h.i.c.DefaultManagedHttpClientConnection : http-outgoing-44: Shutdown connection
2022-07-14 14:11:32.626 DEBUG [s3-source,,] 470 --- [oundedElastic-4] o.a.http.impl.execchain.MainClientExec : Connection discarded
2022-07-14 14:11:32.626 DEBUG [s3-source,,] 470 --- [oundedElastic-4] h.i.c.PoolingHttpClientConnectionManager : Connection released: [id: 44][route: {}->http://kafka-connect.minio:9000][total available: 0; route allocated: 0 of 50; total allocated: 0 of 50]
2022-07-14 14:11:32.678 DEBUG [s3-source,,] 470 --- [oundedElastic-4] o.a.h.client.protocol.RequestAddCookies : CookieSpec selected: default
2022-07-14 14:11:32.679 DEBUG [s3-source,,] 470 --- [oundedElastic-4] o.a.h.client.protocol.RequestAuthCache : Auth cache not set in the context
2022-07-14 14:11:32.679 DEBUG [s3-source,,] 470 --- [oundedElastic-4] h.i.c.PoolingHttpClientConnectionManager : Connection request: [route: {}->http://kafka-connect.minio:9000][total available: 0; route allocated: 0 of 50; total allocated: 0 of 50]
2022-07-14 14:11:32.679 DEBUG [s3-source,,] 470 --- [oundedElastic-4] h.i.c.PoolingHttpClientConnectionManager : Connection leased: [id: 45][route: {}->http://kafka-connect.minio:9000][total available: 0; route allocated: 1 of 50; total allocated: 1 of 50]
2022-07-14 14:11:32.679 DEBUG [s3-source,,] 470 --- [oundedElastic-4] o.a.http.impl.execchain.MainClientExec : Opening connection {}->http://kafka-connect.minio:9000
2022-07-14 14:11:32.679 DEBUG [s3-source,,] 470 --- [oundedElastic-4] h.i.c.DefaultManagedHttpClientConnection : http-outgoing-45: Shutdown connection
2022-07-14 14:11:32.679 DEBUG [s3-source,,] 470 --- [oundedElastic-4] o.a.http.impl.execchain.MainClientExec : Connection discarded
2022-07-14 14:11:32.679 DEBUG [s3-source,,] 470 --- [oundedElastic-4] h.i.c.PoolingHttpClientConnectionManager : Connection released: [id: 45][route: {}->http://kafka-connect.minio:9000][total available: 0; route allocated: 0 of 50; total allocated: 0 of 50]
2022-07-14 14:11:32.704 DEBUG [s3-source,,] 470 --- [oundedElastic-4] o.a.h.client.protocol.RequestAddCookies : CookieSpec selected: default
2022-07-14 14:11:32.704 DEBUG [s3-source,,] 470 --- [oundedElastic-4] o.a.h.client.protocol.RequestAuthCache : Auth cache not set in the context
2022-07-14 14:11:32.704 DEBUG [s3-source,,] 470 --- [oundedElastic-4] h.i.c.PoolingHttpClientConnectionManager : Connection request: [route: {}->http://kafka-connect.minio:9000][total available: 0; route allocated: 0 of 50; total allocated: 0 of 50]
2022-07-14 14:11:32.704 DEBUG [s3-source,,] 470 --- [oundedElastic-4] h.i.c.PoolingHttpClientConnectionManager : Connection leased: [id: 46][route: {}->http://kafka-connect.minio:9000][total available: 0; route allocated: 1 of 50; total allocated: 1 of 50]
2022-07-14 14:11:32.705 DEBUG [s3-source,,] 470 --- [oundedElastic-4] o.a.http.impl.execchain.MainClientExec : Opening connection {}->http://kafka-connect.minio:9000
2022-07-14 14:11:32.705 DEBUG [s3-source,,] 470 --- [oundedElastic-4] h.i.c.DefaultManagedHttpClientConnection : http-outgoing-46: Shutdown connection
2022-07-14 14:11:32.705 DEBUG [s3-source,,] 470 --- [oundedElastic-4] o.a.http.impl.execchain.MainClientExec : Connection discarded
2022-07-14 14:11:32.705 DEBUG [s3-source,,] 470 --- [oundedElastic-4] h.i.c.PoolingHttpClientConnectionManager : Connection released: [id: 46][route: {}->http://kafka-connect.minio:9000][total available: 0; route allocated: 0 of 50; total allocated: 0 of 50]
2022-07-14 14:11:33.021 DEBUG [s3-source,,] 470 --- [oundedElastic-4] o.a.h.client.protocol.RequestAddCookies : CookieSpec selected: default
2022-07-14 14:11:33.021 DEBUG [s3-source,,] 470 --- [oundedElastic-4] o.a.h.client.protocol.RequestAuthCache : Auth cache not set in the context
2022-07-14 14:11:33.021 DEBUG [s3-source,,] 470 --- [oundedElastic-4] h.i.c.PoolingHttpClientConnectionManager : Connection request: [route: {}->http://kafka-connect.minio:9000][total available: 0; route allocated: 0 of 50; total allocated: 0 of 50]
2022-07-14 14:11:33.021 DEBUG [s3-source,,] 470 --- [oundedElastic-4] h.i.c.PoolingHttpClientConnectionManager : Connection leased: [id: 47][route: {}->http://kafka-connect.minio:9000][total available: 0; route allocated: 1 of 50; total allocated: 1 of 50]
2022-07-14 14:11:33.021 DEBUG [s3-source,,] 470 --- [oundedElastic-4] o.a.http.impl.execchain.MainClientExec : Opening connection {}->http://kafka-connect.minio:9000
2022-07-14 14:11:33.021 DEBUG [s3-source,,] 470 --- [oundedElastic-4] h.i.c.DefaultManagedHttpClientConnection : http-outgoing-47: Shutdown connection
2022-07-14 14:11:33.021 DEBUG [s3-source,,] 470 --- [oundedElastic-4] o.a.http.impl.execchain.MainClientExec : Connection discarded
2022-07-14 14:11:33.022 DEBUG [s3-source,,] 470 --- [oundedElastic-4] h.i.c.PoolingHttpClientConnectionManager : Connection released: [id: 47][route: {}->http://kafka-connect.minio:9000][total available: 0; route allocated: 0 of 50; total allocated: 0 of 50]
```

```
2022-07-14 14:11:33.026 ERROR [s3-source,,] 470 --- [oundedElastic-4] o.s.i.util.IntegrationReactiveUtils : Error from Flux for : org.springframework.integration.aws.inbound.S3InboundFileSynchronizingMessageSource@3d20e22e

org.springframework.messaging.MessagingException: Problem occurred while synchronizing '/kafka-connect/topics' to local directory; nested exception is org.springframework.messaging.MessagingException: Failed to execute on session; nested exception is com.amazonaws.SdkClientException: Unable to execute HTTP request: kafka-connect.minio
	at org.springframework.integration.file.remote.synchronizer.AbstractInboundFileSynchronizer.synchronizeToLocalDirectory(AbstractInboundFileSynchronizer.java:348) ~[spring-integration-file-5.5.12.jar!/:5.5.12]
	at org.springframework.integration.file.remote.synchronizer.AbstractInboundFileSynchronizingMessageSource.doReceive(AbstractInboundFileSynchronizingMessageSource.java:267) ~[spring-integration-file-5.5.12.jar!/:5.5.12]
	at org.springframework.integration.file.remote.synchronizer.AbstractInboundFileSynchronizingMessageSource.doReceive(AbstractInboundFileSynchronizingMessageSource.java:69) ~[spring-integration-file-5.5.12.jar!/:5.5.12]
	at org.springframework.integration.endpoint.AbstractFetchLimitingMessageSource.doReceive(AbstractFetchLimitingMessageSource.java:47) ~[spring-integration-core-5.5.12.jar!/:5.5.12]
	at org.springframework.integration.endpoint.AbstractMessageSource.receive(AbstractMessageSource.java:142) ~[spring-integration-core-5.5.12.jar!/:5.5.12]
	at org.springframework.integration.util.IntegrationReactiveUtils.lambda$messageSourceToFlux$0(IntegrationReactiveUtils.java:83) ~[spring-integration-core-5.5.12.jar!/:5.5.12]
	at reactor.core.publisher.MonoCreate$DefaultMonoSink.onRequest(MonoCreate.java:221) ~[reactor-core-3.4.18.jar!/:3.4.18]
	at org.springframework.integration.util.IntegrationReactiveUtils.lambda$messageSourceToFlux$1(IntegrationReactiveUtils.java:83) ~[spring-integration-core-5.5.12.jar!/:5.5.12]
	at reactor.core.publisher.MonoCreate.subscribe(MonoCreate.java:58) ~[reactor-core-3.4.18.jar!/:3.4.18]
	at reactor.core.publisher.Mono.subscribe(Mono.java:4400) ~[reactor-core-3.4.18.jar!/:3.4.18]
	at reactor.core.publisher.MonoSubscribeOn$SubscribeOnSubscriber.run(MonoSubscribeOn.java:126) ~[reactor-core-3.4.18.jar!/:3.4.18]
	at org.springframework.cloud.sleuth.instrument.reactor.ReactorSleuth.lambda$null$6(ReactorSleuth.java:324) ~[spring-cloud-sleuth-instrumentation-3.1.3.jar!/:3.1.3]
	at reactor.core.scheduler.WorkerTask.call(WorkerTask.java:84) ~[reactor-core-3.4.18.jar!/:3.4.18]
	at reactor.core.scheduler.WorkerTask.call(WorkerTask.java:37) ~[reactor-core-3.4.18.jar!/:3.4.18]
	at java.base/java.util.concurrent.FutureTask.run(Unknown Source) ~[na:na]
	at java.base/java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(Unknown Source) ~[na:na]
	at java.base/java.util.concurrent.ThreadPoolExecutor.runWorker(Unknown Source) ~[na:na]
	at java.base/java.util.concurrent.ThreadPoolExecutor$Worker.run(Unknown Source) ~[na:na]
	at java.base/java.lang.Thread.run(Unknown Source) ~[na:na]
Caused by: org.springframework.messaging.MessagingException: Failed to execute on session; nested exception is com.amazonaws.SdkClientException: Unable to execute HTTP request: kafka-connect.minio
	at org.springframework.integration.file.remote.RemoteFileTemplate.execute(RemoteFileTemplate.java:461) ~[spring-integration-file-5.5.12.jar!/:5.5.12]
	at org.springframework.integration.file.remote.synchronizer.AbstractInboundFileSynchronizer.synchronizeToLocalDirectory(AbstractInboundFileSynchronizer.java:341) ~[spring-integration-file-5.5.12.jar!/:5.5.12]
	... 18 common frames omitted
Caused by: com.amazonaws.SdkClientException: Unable to execute HTTP request: kafka-connect.minio
	at com.amazonaws.http.AmazonHttpClient$RequestExecutor.handleRetryableException(AmazonHttpClient.java:1207) ~[aws-java-sdk-core-1.11.792.jar!/:na]
	at com.amazonaws.http.AmazonHttpClient$RequestExecutor.executeHelper(AmazonHttpClient.java:1153) ~[aws-java-sdk-core-1.11.792.jar!/:na]
	at com.amazonaws.http.AmazonHttpClient$RequestExecutor.doExecute(AmazonHttpClient.java:802) ~[aws-java-sdk-core-1.11.792.jar!/:na]
	at com.amazonaws.http.AmazonHttpClient$RequestExecutor.executeWithTimer(AmazonHttpClient.java:770) ~[aws-java-sdk-core-1.11.792.jar!/:na]
	at com.amazonaws.http.AmazonHttpClient$RequestExecutor.execute(AmazonHttpClient.java:744) ~[aws-java-sdk-core-1.11.792.jar!/:na]
	at com.amazonaws.http.AmazonHttpClient$RequestExecutor.access$500(AmazonHttpClient.java:704) ~[aws-java-sdk-core-1.11.792.jar!/:na]
	at com.amazonaws.http.AmazonHttpClient$RequestExecutionBuilderImpl.execute(AmazonHttpClient.java:686) ~[aws-java-sdk-core-1.11.792.jar!/:na]
	at com.amazonaws.http.AmazonHttpClient.execute(AmazonHttpClient.java:550) ~[aws-java-sdk-core-1.11.792.jar!/:na]
	at com.amazonaws.http.AmazonHttpClient.execute(AmazonHttpClient.java:530) ~[aws-java-sdk-core-1.11.792.jar!/:na]
	at com.amazonaws.services.s3.AmazonS3Client.invoke(AmazonS3Client.java:5062) ~[aws-java-sdk-s3-1.11.792.jar!/:na]
	at com.amazonaws.services.s3.AmazonS3Client.invoke(AmazonS3Client.java:5008) ~[aws-java-sdk-s3-1.11.792.jar!/:na]
	at com.amazonaws.services.s3.AmazonS3Client.invoke(AmazonS3Client.java:5002) ~[aws-java-sdk-s3-1.11.792.jar!/:na]
	at com.amazonaws.services.s3.AmazonS3Client.listObjects(AmazonS3Client.java:898) ~[aws-java-sdk-s3-1.11.792.jar!/:na]
	at org.springframework.integration.aws.support.S3Session.list(S3Session.java:91) ~[spring-integration-aws-2.3.4.RELEASE.jar!/:na]
	at org.springframework.integration.aws.support.S3Session.list(S3Session.java:52) ~[spring-integration-aws-2.3.4.RELEASE.jar!/:na]
	at org.springframework.integration.file.remote.synchronizer.AbstractInboundFileSynchronizer.transferFilesFromRemoteToLocal(AbstractInboundFileSynchronizer.java:356) ~[spring-integration-file-5.5.12.jar!/:5.5.12]
	at org.springframework.integration.file.remote.synchronizer.AbstractInboundFileSynchronizer.lambda$synchronizeToLocalDirectory$0(AbstractInboundFileSynchronizer.java:342) ~[spring-integration-file-5.5.12.jar!/:5.5.12]
	at org.springframework.integration.file.remote.RemoteFileTemplate.execute(RemoteFileTemplate.java:452) ~[spring-integration-file-5.5.12.jar!/:5.5.12]
	... 19 common frames omitted
Caused by: java.net.UnknownHostException: kafka-connect.minio
	at java.base/java.net.InetAddress$CachedAddresses.get(Unknown Source) ~[na:na]
	at java.base/java.net.InetAddress.getAllByName0(Unknown Source) ~[na:na]
	at java.base/java.net.InetAddress.getAllByName(Unknown Source) ~[na:na]
	at java.base/java.net.InetAddress.getAllByName(Unknown Source) ~[na:na]
	at com.amazonaws.SystemDefaultDnsResolver.resolve(SystemDefaultDnsResolver.java:27) ~[aws-java-sdk-core-1.11.792.jar!/:na]
	at com.amazonaws.http.DelegatingDnsResolver.resolve(DelegatingDnsResolver.java:38) ~[aws-java-sdk-core-1.11.792.jar!/:na]
	at org.apache.http.impl.conn.DefaultHttpClientConnectionOperator.connect(DefaultHttpClientConnectionOperator.java:112) ~[httpclient-4.5.13.jar!/:4.5.13]
	at org.apache.http.impl.conn.PoolingHttpClientConnectionManager.connect(PoolingHttpClientConnectionManager.java:376) ~[httpclient-4.5.13.jar!/:4.5.13]
	at jdk.internal.reflect.GeneratedMethodAccessor73.invoke(Unknown Source) ~[na:na]
	at java.base/jdk.internal.reflect.DelegatingMethodAccessorImpl.invoke(Unknown Source) ~[na:na]
	at java.base/java.lang.reflect.Method.invoke(Unknown Source) ~[na:na]
	at com.amazonaws.http.conn.ClientConnectionManagerFactory$Handler.invoke(ClientConnectionManagerFactory.java:76) ~[aws-java-sdk-core-1.11.792.jar!/:na]
	at com.amazonaws.http.conn.$Proxy145.connect(Unknown Source) ~[na:na]
	at org.apache.http.impl.execchain.MainClientExec.establishRoute(MainClientExec.java:393) ~[httpclient-4.5.13.jar!/:4.5.13]
	at org.apache.http.impl.execchain.MainClientExec.execute(MainClientExec.java:236) ~[httpclient-4.5.13.jar!/:4.5.13]
	at org.apache.http.impl.execchain.ProtocolExec.execute(ProtocolExec.java:186) ~[httpclient-4.5.13.jar!/:4.5.13]
	at org.apache.http.impl.client.InternalHttpClient.doExecute(InternalHttpClient.java:185) ~[httpclient-4.5.13.jar!/:4.5.13]
	at org.apache.http.impl.client.CloseableHttpClient.execute(CloseableHttpClient.java:83) ~[httpclient-4.5.13.jar!/:4.5.13]
	at org.apache.http.impl.client.CloseableHttpClient.execute(CloseableHttpClient.java:56) ~[httpclient-4.5.13.jar!/:4.5.13]
	at com.amazonaws.http.apache.client.impl.SdkHttpClient.execute(SdkHttpClient.java:72) ~[aws-java-sdk-core-1.11.792.jar!/:na]
	at com.amazonaws.http.AmazonHttpClient$RequestExecutor.executeOneRequest(AmazonHttpClient.java:1330) ~[aws-java-sdk-core-1.11.792.jar!/:na]
	at com.amazonaws.http.AmazonHttpClient$RequestExecutor.executeHelper(AmazonHttpClient.java:1145) ~[aws-java-sdk-core-1.11.792.jar!/:na]
	... 35 common frames omitted
```

Debugging the HTTP connection it is trying to open:

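```
Connection request: [route: {}->http://kafka-connect.minio:9000][total available: 0; route allocated: 0 of 50; total allocated: 0 of 50]
```

This results in an unknown host, because the host `kafka-connect.minio` obviously does not exist. However, I can successfully curl http://minio:9000 from the container that runs the s3 connector (skipper-server).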

So my question is:

Why does it try to connect to http://{BUCKET_NAME}.minio:9000 instead of connecting to http://minio:9000 and then going into the bucket?

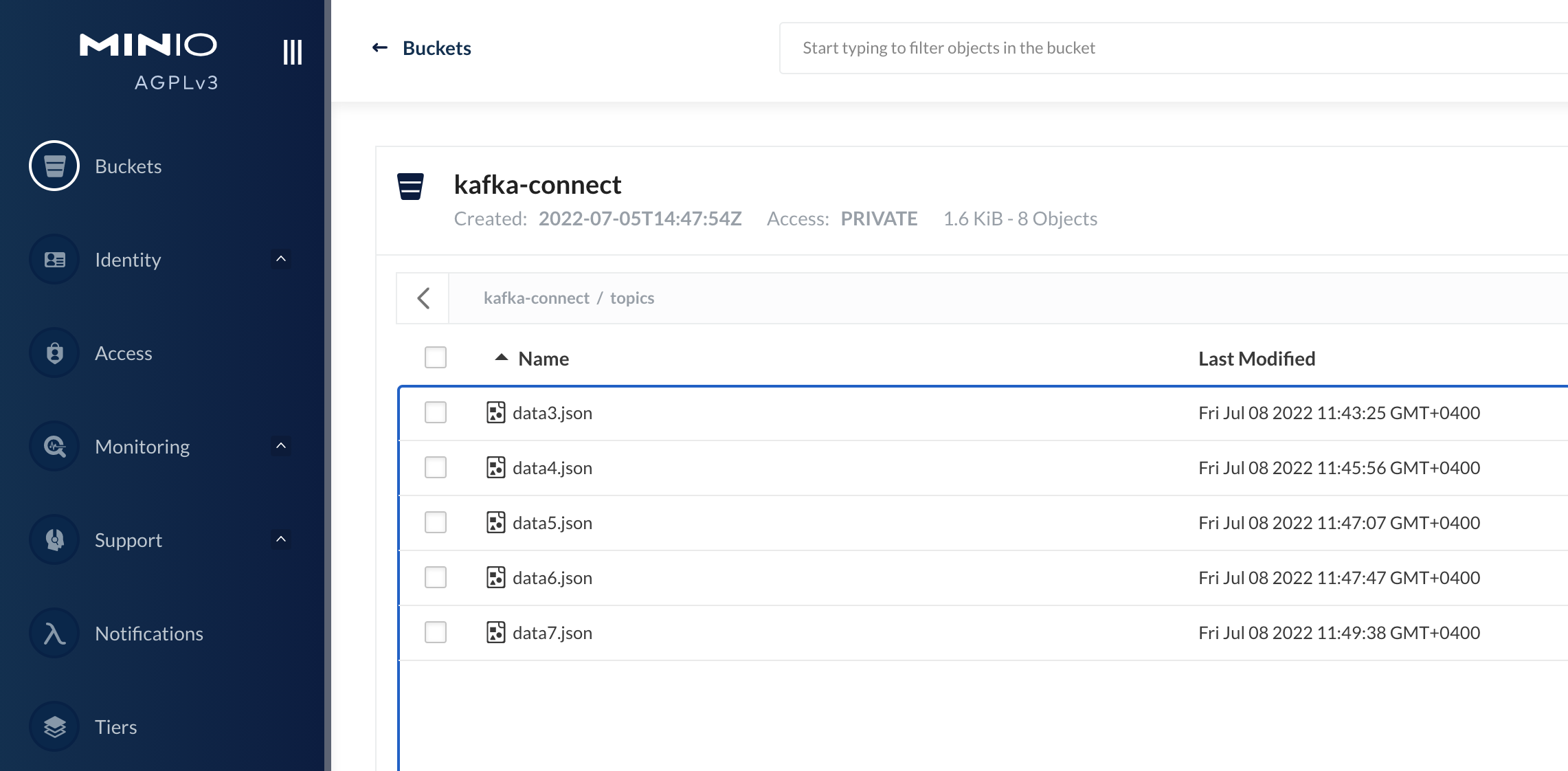

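I was able to connect to the same MinIO instance and the same bucket with Kafka Connect (s3-source) using a similar setup: kafka-connect is a separate container in the same docker-compose, and it can reach the minio container and fetch the files. You can see how that was done here: kafka connect s3 source not working with Minio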

1 Answer

Stack Overflow user

Answered 2022-07-21 06:22:14

Adding this property solved the problem:

```properties
app.s3.path-style-access=true
```

It is `false` by default, whereas in the Kafka Connect case it is `true` by default.
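The debug log in the question shows what this property changes. Without path-style access, the S3 client used virtual-hosted-style addressing, prepending the bucket name to the endpoint host (`kafka-connect` + `minio:9000` → `kafka-connect.minio:9000`), a name the Docker network's DNS cannot resolve. With path-style access the host stays `minio` and the bucket moves into the URL path. A minimal sketch of the two URL shapes, assuming plain string composition; `S3AddressingDemo` and `listObjectsUri` are hypothetical names, and the real SDK additionally handles request signing, regions, and bucket-name validation.

```java
import java.net.URI;

class S3AddressingDemo {

    // Hypothetical helper: returns the base URL a ListObjects request would target
    // for the given endpoint and bucket, in either addressing style.
    static URI listObjectsUri(String endpoint, String bucket, boolean pathStyle) {
        URI base = URI.create(endpoint);
        String port = base.getPort() == -1 ? "" : ":" + base.getPort();
        if (pathStyle) {
            // Path-style: host unchanged, bucket becomes the first path segment.
            return URI.create(base.getScheme() + "://" + base.getHost() + port + "/" + bucket);
        }
        // Virtual-hosted style: bucket name is prepended to the host name.
        return URI.create(base.getScheme() + "://" + bucket + "." + base.getHost() + port + "/");
    }

    public static void main(String[] args) {
        String endpoint = "http://minio:9000"; // value of app.s3.common.endpoint-url
        String bucket = "kafka-connect";       // first segment of app.s3.supplier.remote-dir
        System.out.println(listObjectsUri(endpoint, bucket, false)); // http://kafka-connect.minio:9000/
        System.out.println(listObjectsUri(endpoint, bucket, true));  // http://minio:9000/kafka-connect
    }
}
```

The first URL matches the route in the failing log; the second is the shape MinIO on a single Docker hostname can serve without extra DNS entries.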

Original page content provided by Stack Overflow. Translation support provided by the Tencent Cloud Xiaowei IT-domain engine.

Original link:

https://stackoverflow.com/questions/72982927
