Is there a way to plot training and validation losses on the same graph with the HuggingFace Trainer API?

I'm fine-tuning a HuggingFace transformer model (PyTorch version) with the HF Seq2SeqTrainingArguments and Seq2SeqTrainer, and I want to show the train and validation losses in TensorBoard on the same chart.

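As far as I understand, to plot the two losses together I need to use a SummaryWriter. The HF callbacks documentation describes a TensorBoardCallback that can receive a tb_writer argument.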

However, I can't figure out the right way to use it, or whether it is even meant to be used with the Trainer API.

My code looks like this:

```python
from transformers import Seq2SeqTrainer, Seq2SeqTrainingArguments

args = Seq2SeqTrainingArguments(
    output_dir=output_dir,
    evaluation_strategy='epoch',
    learning_rate=1e-5,
    per_device_train_batch_size=batch_size,
    per_device_eval_batch_size=batch_size,
    weight_decay=0.01,
    save_total_limit=3,
    num_train_epochs=num_train_epochs,
    predict_with_generate=True,
    logging_steps=logging_steps,
    report_to='tensorboard',
    push_to_hub=False,
)

trainer = Seq2SeqTrainer(
    model,
    args,
    train_dataset=tokenized_train_data,
    eval_dataset=tokenized_val_data,
    data_collator=data_collator,
    tokenizer=tokenizer,
    compute_metrics=compute_metrics,
)
```

I assume I should include a TensorBoard callback in the trainer, e.g.,

```python
callbacks = [TensorBoardCallback(tb_writer=tb_writer)]
```

but I can't find a complete example of how to use it or what to import.

I also found this feature request on GitHub,

https://github.com/huggingface/transformers/pull/4020

but it has no usage example, so I'm confused.

Any insights would be appreciated.

2 Answers

Stack Overflow user

Posted on 2022-10-07 00:51:31

It's fairly simple. You specify it in Seq2SeqTrainingArguments; there is no need to define it explicitly in Seq2SeqTrainer.

```python
from transformers import Seq2SeqTrainingArguments

model_arguments = Seq2SeqTrainingArguments(
    output_dir="./best_model/",
    num_train_epochs=EPOCHS,
    overwrite_output_dir=True,
    do_train=True,
    do_eval=True,
    do_predict=True,
    auto_find_batch_size=True,
    evaluation_strategy='epoch',
    warmup_steps=10000,
    logging_dir="./log_files/",
    disable_tqdm=False,
    load_best_model_at_end=True,
    save_strategy='epoch',
    save_total_limit=1,
    per_device_eval_batch_size=BATCH_SIZE,
    per_device_train_batch_size=BATCH_SIZE,
    predict_with_generate=True,
    report_to='wandb',
    run_name="rober_based_encoder_decoder_text_summarisation",
)
```

You can also add other callbacks:

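```python
from transformers import EarlyStoppingCallback

early_stopping = EarlyStoppingCallback(early_stopping_patience=5,
                                       early_stopping_threshold=0.001)
```

Then pass the arguments, and the callbacks as a list, to the trainer: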

```python
trainer = Seq2SeqTrainer(
    model=model,
    compute_metrics=compute_metrics,
    args=model_arguments,
    train_dataset=Train,
    eval_dataset=Val,
    tokenizer=tokenizer,
    callbacks=[early_stopping],
)
```

Train the model. Make sure you are logged in to wandb before training.

```python
trainer.train()
```

Stack Overflow user

Posted on 2022-10-28 01:43:34

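The only way I know of to plot two values on the same TensorBoard graph is to use two separate SummaryWriters under the same root directory. For example, the log directories could be log_dir/train and log_dir/eval.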

This approach is used in this answer, but for TensorFlow rather than PyTorch.
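A minimal PyTorch sketch of the same trick, outside the Trainer, assuming torch and the tensorboard package are installed. Two SummaryWriters log the same tag, combined/loss, into sibling train and eval directories under one root. The temporary root directory and the loss values are placeholders for illustration only.

```python
import os
import tempfile

from torch.utils.tensorboard import SummaryWriter

# Placeholder root; replace with your own log dir. Each subdirectory is a separate run.
root = os.path.join(tempfile.mkdtemp(), "demo")
writers = {
    "train": SummaryWriter(log_dir=os.path.join(root, "train")),
    "eval": SummaryWriter(log_dir=os.path.join(root, "eval")),
}

# Made-up loss values, only to have something to plot.
train_losses = [2.0, 1.5, 1.2, 1.0]
eval_losses = [2.1, 1.7, 1.4, 1.3]

for step, (train_loss, eval_loss) in enumerate(zip(train_losses, eval_losses)):
    # Same tag in both runs, so TensorBoard overlays the two curves on one chart.
    writers["train"].add_scalar("combined/loss", train_loss, step)
    writers["eval"].add_scalar("combined/loss", eval_loss, step)

for writer in writers.values():
    writer.close()
```

Running `tensorboard --logdir` on the root directory then shows a single combined/loss chart with one curve per run (train and eval).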

To do this with the Trainer API, you need a custom callback that uses two SummaryWriters.

```python
import os

from transformers import TrainerCallback
from transformers.integrations import is_tensorboard_available
from transformers.utils import logging

logger = logging.get_logger(__name__)


def custom_rewrite_logs(d, mode):
    # Keep only the loss for the given mode and rename it to the shared tag "combined/loss".
    new_d = {}
    eval_prefix = "eval_"
    eval_prefix_len = len(eval_prefix)
    test_prefix = "test_"
    test_prefix_len = len(test_prefix)
    for k, v in d.items():
        if mode == 'eval' and k.startswith(eval_prefix):
            if k[eval_prefix_len:] == 'loss':
                new_d["combined/" + k[eval_prefix_len:]] = v
        elif mode == 'test' and k.startswith(test_prefix):
            if k[test_prefix_len:] == 'loss':
                new_d["combined/" + k[test_prefix_len:]] = v
        elif mode == 'train':
            if k == 'loss':
                new_d["combined/" + k] = v
    return new_d


class CombinedTensorBoardCallback(TrainerCallback):
    """
    A [`TrainerCallback`] that sends the logs to [TensorBoard](https://www.tensorflow.org/tensorboard).

    Args:
        tb_writers (`dict` of `SummaryWriter`, *optional*):
            The writers to use, keyed by mode ("train", "eval"). Will instantiate them if not set.
    """

    def __init__(self, tb_writers=None):
        if not is_tensorboard_available():
            raise RuntimeError(
                "CombinedTensorBoardCallback requires tensorboard to be installed. Either update your PyTorch version or"
                " install tensorboardX."
            )
        try:
            from torch.utils.tensorboard import SummaryWriter  # noqa: F401
            self._SummaryWriter = SummaryWriter
        except ImportError:
            try:
                from tensorboardX import SummaryWriter
                self._SummaryWriter = SummaryWriter
            except ImportError:
                self._SummaryWriter = None
        self.tb_writers = tb_writers

    def _init_summary_writer(self, args, log_dir=None):
        log_dir = log_dir or args.logging_dir
        if self._SummaryWriter is not None:
            # One writer per mode under the same root: <log_dir>/train and <log_dir>/eval.
            self.tb_writers = dict(train=self._SummaryWriter(log_dir=os.path.join(log_dir, 'train')),
                                   eval=self._SummaryWriter(log_dir=os.path.join(log_dir, 'eval')))

    def on_train_begin(self, args, state, control, **kwargs):
        if not state.is_world_process_zero:
            return
        log_dir = None
        if state.is_hyper_param_search:
            trial_name = state.trial_name
            if trial_name is not None:
                log_dir = os.path.join(args.logging_dir, trial_name)
        if self.tb_writers is None:
            self._init_summary_writer(args, log_dir)
        if self.tb_writers is None:
            return  # no SummaryWriter implementation available
        for k, tbw in self.tb_writers.items():
            tbw.add_text("args", args.to_json_string())
            if "model" in kwargs:
                model = kwargs["model"]
                if hasattr(model, "config") and model.config is not None:
                    model_config_json = model.config.to_json_string()
                    tbw.add_text("model_config", model_config_json)
            # Version of TensorBoard coming from tensorboardX does not have this method.
            if hasattr(tbw, "add_hparams"):
                tbw.add_hparams(args.to_sanitized_dict(), metric_dict={})

    def on_log(self, args, state, control, logs=None, **kwargs):
        if not state.is_world_process_zero:
            return
        if self.tb_writers is None:
            self._init_summary_writer(args)
        if self.tb_writers is None:
            return
        for tbk, tbw in self.tb_writers.items():
            # Each writer only receives its own mode's values, under the shared tag.
            logs_new = custom_rewrite_logs(logs, mode=tbk)
            for k, v in logs_new.items():
                if isinstance(v, (int, float)):
                    tbw.add_scalar(k, v, state.global_step)
                else:
                    logger.warning(
                        "Trainer is attempting to log a value of "
                        f'"{v}" of type {type(v)} for key "{k}" as a scalar. '
                        "This invocation of Tensorboard's writer.add_scalar() "
                        "is incorrect so we dropped this attribute."
                    )
            tbw.flush()

    def on_train_end(self, args, state, control, **kwargs):
        # tb_writers is None on non-main processes, so guard before closing.
        if self.tb_writers:
            for tbw in self.tb_writers.values():
                tbw.close()
            self.tb_writers = None
```

If you want to combine train and eval for metrics other than the loss, modify custom_rewrite_logs accordingly.
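One possible generalization of custom_rewrite_logs, sketched here as an assumption rather than the answerer's code. It keeps the same (d, mode) signature, so it can replace the function used by the callback, and adds a hypothetical keys parameter listing the un-prefixed metric names to combine. The rouge1 metric in the usage lines is hypothetical; use whatever names your compute_metrics returns.

```python
def custom_rewrite_logs(d, mode, keys=("loss",)):
    """Return the entries of `d` that belong to `mode`, renamed to "combined/<name>".

    mode: "train", "eval" or "test".
    keys: un-prefixed metric names to combine (hypothetical parameter).
    """
    prefixes = {"eval": "eval_", "test": "test_"}
    new_d = {}
    for k, v in d.items():
        if mode == "train":
            # Training logs use un-prefixed keys such as "loss".
            if k in keys:
                new_d["combined/" + k] = v
        else:
            prefix = prefixes[mode]
            name = k[len(prefix):]
            if k.startswith(prefix) and name in keys:
                new_d["combined/" + name] = v
    return new_d


train_logs = {"loss": 1.23, "learning_rate": 5e-6, "epoch": 0.5}
eval_logs = {"eval_loss": 1.40, "eval_rouge1": 0.31, "epoch": 1.0}
print(custom_rewrite_logs(train_logs, "train"))  # {'combined/loss': 1.23}
print(custom_rewrite_logs(eval_logs, "eval", keys=("loss", "rouge1")))
# {'combined/loss': 1.4, 'combined/rouge1': 0.31}
```

To use extra keys inside the callback, pass them in the on_log call, e.g. `custom_rewrite_logs(logs, mode=tbk, keys=("loss", "rouge1"))`.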

As usual, the callback goes in the Trainer constructor. In my test example it was:

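```python
trainer = Trainer(
    model=rnn,
    args=train_args,
    train_dataset=train_dataset,
    eval_dataset=validation_dataset,
    tokenizer=tokenizer,
    compute_metrics=compute_metrics,
    callbacks=[CombinedTensorBoardCallback],
)
```

You may also want to remove the default TensorBoardCallback; otherwise, in addition to the combined loss chart, the training and validation losses will also appear separately, as they do by default.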

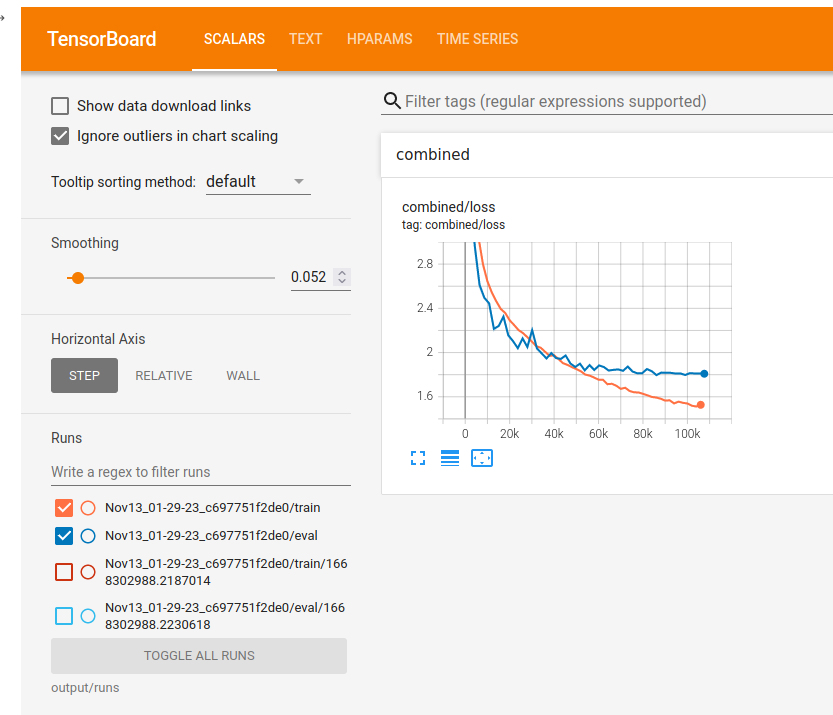

trainer.remove_callback(TensorBoardCallback)下面是生成的TensorBoard视图:

https://stackoverflow.com/questions/73281901
