How to improve object tracking speed (Yolov4 DeepSort)

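Recently I became interested in computer vision and went through several tutorials to solve some problems in my business. There are 4 buildings on the site, and the problem is how to monitor the number of people entering and leaving these buildings. The movement of service staff also has to be taken into account.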

I tried to use the following repository to solve these problems:

https://github.com/theAIGuysCode/yolov4-deepsort

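In my opinion, this would solve my problem. However, there is now an issue with the processing speed of video recordings from the CCTV cameras. I tried running a 10-second video clip, and the script took 211 seconds to finish. That seems like a very long time to me.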
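To put that number in perspective, here is a small back-of-the-envelope calculation. The clip length and processing time come from the run described above; the frame rate is not stated anywhere in this post, so the 25 FPS used here is only a placeholder assumption.

```python
# Numbers from the run described above: a 10-second clip took 211 seconds.
clip_seconds = 10
processing_seconds = 211

# How many times slower than real time the pipeline runs.
slowdown = processing_seconds / clip_seconds

# ASSUMPTION: the camera frame rate is not given; 25 FPS is a placeholder.
assumed_fps = 25
frames = clip_seconds * assumed_fps

# Average processing cost per frame and the resulting throughput.
seconds_per_frame = processing_seconds / frames
effective_fps = frames / processing_seconds

print(f"slowdown: {slowdown:.1f}x real time")
print(f"time per frame: {seconds_per_frame:.3f} s")
print(f"throughput: {effective_fps:.2f} frames/s")
```

Whatever the real frame rate is, the pipeline runs about 21 times slower than real time, so it cannot keep up with a live camera feed in this configuration.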

What can I do to increase the processing speed? Please point me to where I can find an answer.

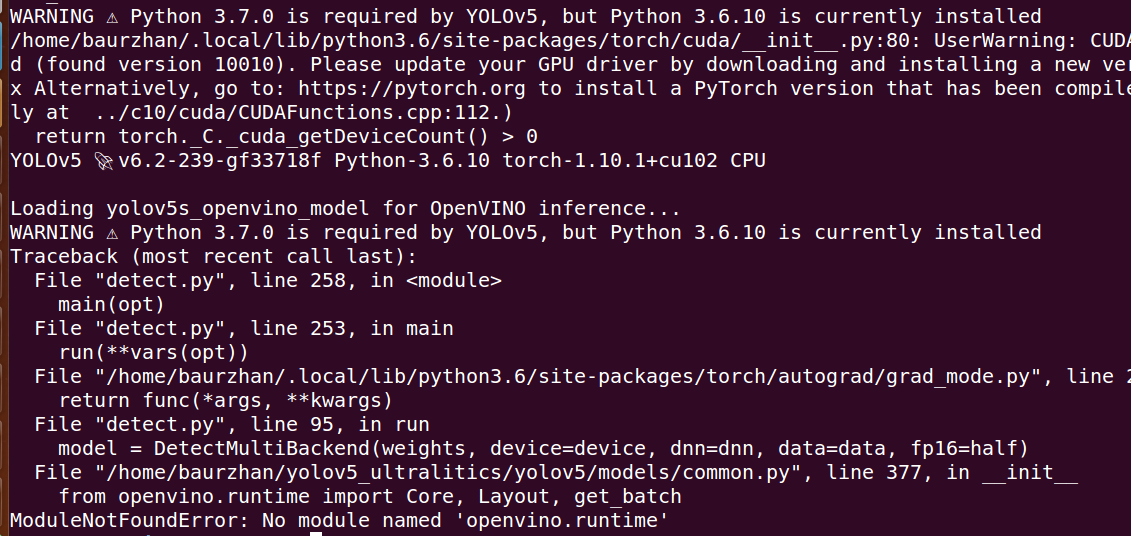

Error when trying to install openvino:

Building wheels for collected packages: tokenizers

Building wheel for tokenizers (pyproject.toml) ... error

ERROR: Command errored out with exit status 1:

command: /usr/bin/python3.6 /home/baurzhan/.local/lib/python3.6/site-packages/pip/_vendor/pep517/in_process/_in_process.py build_wheel /tmp/tmpr56p_xyt

cwd: /tmp/pip-install-pfzy6bre/tokenizers_dce0e65cae1e4e7c9325570d12cd6d63

Complete output (51 lines):

running bdist_wheel

running build

running build_py

creating build

creating build/lib.linux-x86_64-3.6

creating build/lib.linux-x86_64-3.6/tokenizers

copying py_src/tokenizers/__init__.py -> build/lib.linux-x86_64-3.6/tokenizers

creating build/lib.linux-x86_64-3.6/tokenizers/models

copying py_src/tokenizers/models/__init__.py -> build/lib.linux-x86_64-3.6/tokenizers/models

creating build/lib.linux-x86_64-3.6/tokenizers/decoders

copying py_src/tokenizers/decoders/__init__.py -> build/lib.linux-x86_64-3.6/tokenizers/decoders

creating build/lib.linux-x86_64-3.6/tokenizers/normalizers

copying py_src/tokenizers/normalizers/__init__.py -> build/lib.linux-x86_64-3.6/tokenizers/normalizers

creating build/lib.linux-x86_64-3.6/tokenizers/pre_tokenizers

copying py_src/tokenizers/pre_tokenizers/__init__.py -> build/lib.linux-x86_64-3.6/tokenizers/pre_tokenizers

creating build/lib.linux-x86_64-3.6/tokenizers/processors

copying py_src/tokenizers/processors/__init__.py -> build/lib.linux-x86_64-3.6/tokenizers/processors

creating build/lib.linux-x86_64-3.6/tokenizers/trainers

copying py_src/tokenizers/trainers/__init__.py -> build/lib.linux-x86_64-3.6/tokenizers/trainers

creating build/lib.linux-x86_64-3.6/tokenizers/implementations

copying py_src/tokenizers/implementations/sentencepiece_unigram.py -> build/lib.linux-x86_64-3.6/tokenizers/implementations

copying py_src/tokenizers/implementations/base_tokenizer.py -> build/lib.linux-x86_64-3.6/tokenizers/implementations

copying py_src/tokenizers/implementations/bert_wordpiece.py -> build/lib.linux-x86_64-3.6/tokenizers/implementations

copying py_src/tokenizers/implementations/__init__.py -> build/lib.linux-x86_64-3.6/tokenizers/implementations

copying py_src/tokenizers/implementations/sentencepiece_bpe.py -> build/lib.linux-x86_64-3.6/tokenizers/implementations

copying py_src/tokenizers/implementations/byte_level_bpe.py -> build/lib.linux-x86_64-3.6/tokenizers/implementations

copying py_src/tokenizers/implementations/char_level_bpe.py -> build/lib.linux-x86_64-3.6/tokenizers/implementations

creating build/lib.linux-x86_64-3.6/tokenizers/tools

copying py_src/tokenizers/tools/visualizer.py -> build/lib.linux-x86_64-3.6/tokenizers/tools

copying py_src/tokenizers/tools/__init__.py -> build/lib.linux-x86_64-3.6/tokenizers/tools

copying py_src/tokenizers/__init__.pyi -> build/lib.linux-x86_64-3.6/tokenizers

copying py_src/tokenizers/models/__init__.pyi -> build/lib.linux-x86_64-3.6/tokenizers/models

copying py_src/tokenizers/decoders/__init__.pyi -> build/lib.linux-x86_64-3.6/tokenizers/decoders

copying py_src/tokenizers/normalizers/__init__.pyi -> build/lib.linux-x86_64-3.6/tokenizers/normalizers

copying py_src/tokenizers/pre_tokenizers/__init__.pyi -> build/lib.linux-x86_64-3.6/tokenizers/pre_tokenizers

copying py_src/tokenizers/processors/__init__.pyi -> build/lib.linux-x86_64-3.6/tokenizers/processors

copying py_src/tokenizers/trainers/__init__.pyi -> build/lib.linux-x86_64-3.6/tokenizers/trainers

copying py_src/tokenizers/tools/visualizer-styles.css -> build/lib.linux-x86_64-3.6/tokenizers/tools

running build_ext

running build_rust

error: can't find Rust compiler

If you are using an outdated pip version, it is possible a prebuilt wheel is available for this package but pip is not able to install from it. Installing from the wheel would avoid the need for a Rust compiler.

To update pip, run:

pip install --upgrade pip

and then retry package installation.

If you did intend to build this package from source, try installing a Rust compiler from your system package manager and ensure it is on the PATH during installation. Alternatively, rustup (available at https://rustup.rs) is the recommended way to download and update the Rust compiler toolchain.

----------------------------------------

ERROR: Failed building wheel for tokenizers

Failed to build tokenizers

ERROR: Could not build wheels for tokenizers, which is required to install pyproject.toml-based projects

2 Answers

Stack Overflow user

Posted on 2022-09-26 14:02:25

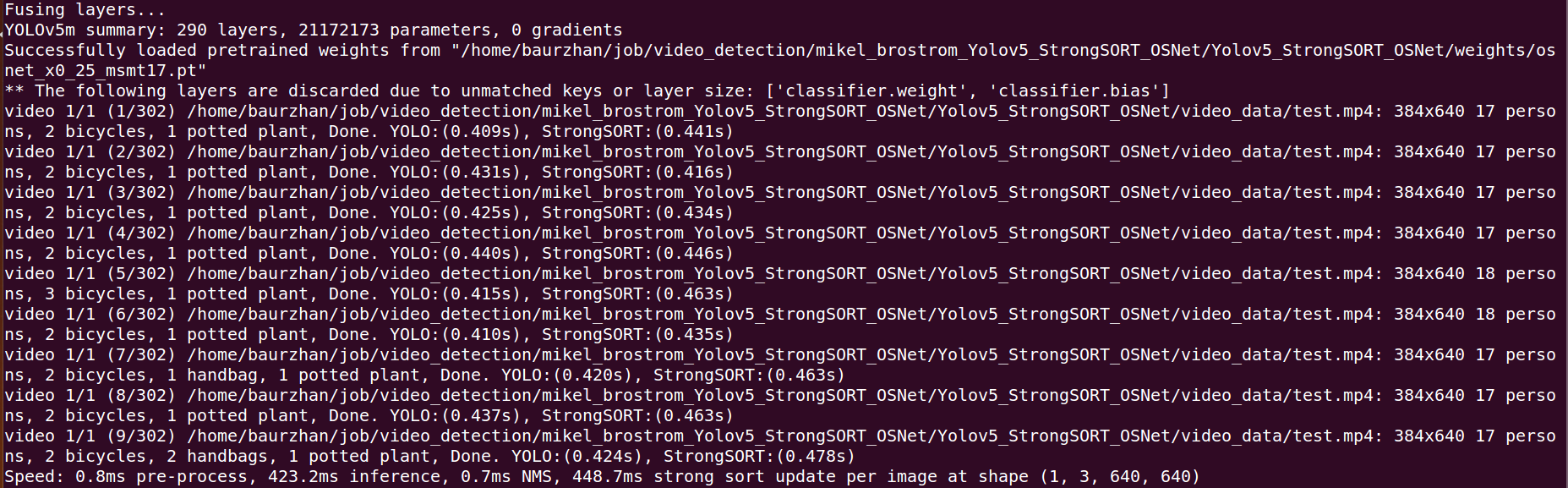

Disclaimer: I am the creator and maintainer of https://github.com/mikel-brostrom/Yolov5_StrongSORT_OSNet.

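I don't see any export functionality to CPU-friendly frameworks such as ONNX or OpenVINO in the repository you are using. Fast inference on a CPU comes from running models in a format that is fast on a CPU.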

You can easily achieve 10 FPS on a CPU using my repo. Some random results from running on a CPU (Intel Core™ i7-8850H CPU @ 2.60GHz × 12) with OpenVINO models:

0: 640x640 2 persons, 1 chair, 1 refrigerator, Done. YOLO:(0.079s), StrongSORT:(0.025s)

0: 640x640 2 persons, 1 chair, 1 refrigerator, Done. YOLO:(0.080s), StrongSORT:(0.022s)

0: 640x640 2 persons, 1 chair, 1 refrigerator, Done. YOLO:(0.075s), StrongSORT:(0.022s)

0: 640x640 2 persons, 1 chair, 1 refrigerator, Done. YOLO:(0.078s), StrongSORT:(0.022s)

0: 640x640 2 persons, 1 chair, 1 refrigerator, Done. YOLO:(0.080s), StrongSORT:(0.022s)

0: 640x640 2 persons, 1 chair, 1 refrigerator, Done. YOLO:(0.112s), StrongSORT:(0.022s)

0: 640x640 2 persons, 1 chair, 1 refrigerator, Done. YOLO:(0.083s), StrongSORT:(0.022s)

0: 640x640 2 persons, 1 chair, 1 refrigerator, Done. YOLO:(0.078s), StrongSORT:(0.022s)

0: 640x640 2 persons, 1 chair, 1 refrigerator, Done. YOLO:(0.078s), StrongSORT:(0.024s)

0: 640x640 2 persons, 1 chair, 1 refrigerator, Done. YOLO:(0.085s), StrongSORT:(0.023s)

Stack Overflow user

Posted on 2022-10-19 05:46:15

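Have you had a chance to measure how long each part of your code takes, to find out where the bottleneck is? There are ways to improve grabbing and capturing frames from the camera and to improve decoding of the compressed frames (e.g. from H.264 to a raw format), as well as ways to avoid copying pixel data from the GPU video codec to the inference engine by using GPU zero-copy mechanisms.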
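A minimal sketch of that kind of measurement, using only the Python standard library. The three stage functions are hypothetical stand-ins that just sleep; in a real script they would be replaced by the actual frame decoding, detection, and tracking calls.

```python
import time
from collections import defaultdict
from contextlib import contextmanager

# Accumulated wall-clock seconds per pipeline stage.
stage_totals = defaultdict(float)


@contextmanager
def timed(stage):
    """Add the time spent inside the `with` block to stage_totals[stage]."""
    start = time.perf_counter()
    try:
        yield
    finally:
        stage_totals[stage] += time.perf_counter() - start


# HYPOTHETICAL stand-ins for the real steps; they only sleep.
def decode_frame(index):
    time.sleep(0.001)
    return index


def detect(frame):
    time.sleep(0.003)
    return [frame]


def track(detections):
    time.sleep(0.001)
    return detections


for index in range(5):
    with timed("decode"):
        frame = decode_frame(index)
    with timed("detect"):
        detections = detect(frame)
    with timed("track"):
        tracks = track(detections)

# Print stages from slowest to fastest to expose the bottleneck.
for stage, total in sorted(stage_totals.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{stage:>6}: {total:.4f} s")
```

Once the totals are known, optimization effort can go to the slowest stage first instead of being spread across the whole pipeline.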

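Then there is the inference itself: there are many different pre-trained object, person, and pedestrian detection models. Models can be optimized (e.g. with OpenVINO's tools) to run well on a specific underlying accelerator (such as a CPU, GPU, VPU, or FPGA), for example by using INT8-optimized CPU instruction sets.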
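To illustrate what INT8 means here, the following is a minimal sketch of symmetric per-tensor quantization in plain Python. It only demonstrates the idea of storing weights as 8-bit integers plus a scale; it is not OpenVINO's API or its actual quantization algorithm, and the weight values are made up.

```python
def quantize_int8(values):
    """Map floats to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the INT8 codes."""
    return [q * scale for q in quantized]


# Made-up example weights, not taken from any real model.
weights = [0.5, -1.27, 0.003, 0.9]
codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)

# The round-trip error is bounded by half a quantization step.
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print(codes)
print(f"scale={scale:.4f}, max round-trip error={max_error:.4f}")
```

The small weight 0.003 collapses to zero, which shows the trade-off: integer arithmetic is cheaper, but precision is lost, so accuracy should be re-checked after quantizing a real model.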

Models can also be compressed; check whether the weights are sparse.
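A sparsity check can be as simple as counting the fraction of weights that are (near) zero. The sketch below assumes the weights have already been flattened into a plain list; the values and the tolerance are illustrative only.

```python
def sparsity(weights, tol=1e-8):
    """Return the fraction of weights whose magnitude is at most tol."""
    if not weights:
        return 0.0
    zeros = sum(1 for w in weights if abs(w) <= tol)
    return zeros / len(weights)


# Made-up flattened weights of one layer, not taken from a real model.
layer_weights = [0.0, 0.12, 0.0, -0.4, 0.0, 0.0, 0.07, 0.0]
print(f"sparsity: {sparsity(layer_weights):.1%}")  # sparsity: 62.5%
```

A high fraction of zeros suggests the model may benefit from sparsity-aware compression or runtimes, while a low fraction means pruning would have to be applied first.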

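After that comes post-processing of the multiple detected objects (filtering by confidence, then filtering overlapping boxes with NMS), and finally the tracking.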
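The post-processing step can be sketched in plain Python as a confidence filter followed by greedy non-maximum suppression. The thresholds (0.5 and 0.45) and the example boxes are assumptions for illustration; real detectors usually ship their own, faster implementation of this step.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def postprocess(detections, conf_threshold=0.5, iou_threshold=0.45):
    """Drop low-confidence boxes, then apply greedy NMS.

    detections is a list of (box, score) pairs.
    """
    candidates = [d for d in detections if d[1] >= conf_threshold]
    candidates.sort(key=lambda d: d[1], reverse=True)
    kept = []
    for box, score in candidates:
        # Keep a box only if it does not overlap a higher-scoring kept box.
        if all(iou(box, k[0]) <= iou_threshold for k in kept):
            kept.append((box, score))
    return kept


# Made-up detections for illustration.
detections = [
    ((10, 10, 110, 210), 0.92),    # person A
    ((14, 12, 112, 208), 0.80),    # near-duplicate of person A
    ((300, 40, 380, 220), 0.75),   # person B
    ((500, 500, 520, 540), 0.20),  # low-confidence box
]
kept = postprocess(detections)
for box, score in kept:
    print(box, score)
```

Only the boxes that survive this step are handed to the tracker, so stricter thresholds reduce the tracker's workload at the cost of possibly missing people.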

https://stackoverflow.com/questions/73498209
