log4j2 Loki appender on Databricks

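I am trying to send logs from a Databricks cluster to a Loki instance using this appender (the Tjahzi log4j2 appender, pl.tkowalcz.tjahzi).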

- 我添加了一个init脚本 (见下文),它修改驱动程序和执行器的

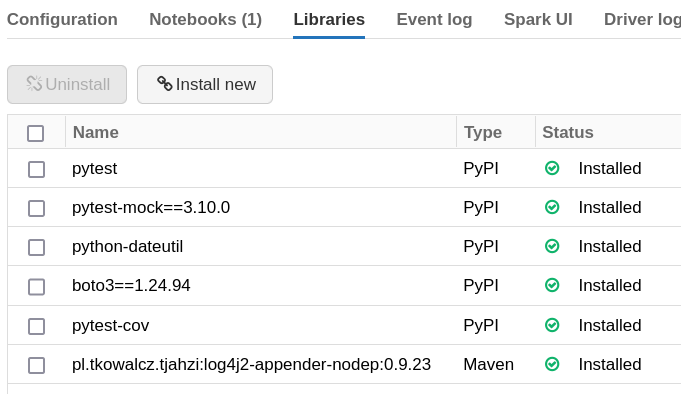

log4j2.xml文件。 - 我在集群配置的库选项卡中添加了一个库。作为maven库添加。我可以从日志中看到它是成功下载和安装的。

- Ran echo "" >> log4j2.xml and waited 30 seconds for log4j2 to re-read the configuration. Same error.

Init script:

for f in /databricks/spark/dbconf/log4j/executor/log4j2.xml /databricks/spark/dbconf/log4j/driver/log4j2.xml; do

sed -i 's/<Configuration /<Configuration monitorInterval="30" /' "$f"

sed -i 's/packages="com.databricks.logging"/packages="pl.tkowalcz.tjahzi.log4j2, com.databricks.logging"/' "$f"

sed -i 's~<Appenders>~<Appenders>\n <Loki name="Loki" bufferSizeMegabytes="64">\n <host>loki.atops.abc.com</host>\n <port>3100</port>\n\n <ThresholdFilter level="ALL"/>\n <PatternLayout>\n <Pattern>%X{tid} [%t] %d{MM-dd HH:mm:ss.SSS} %5p %c{1} - %m%n%exception{full}</Pattern>\n </PatternLayout>\n\n <Header name="X-Scope-OrgID" value="ABC"/>\n <Label name="server" value="Databricks"/>\n <Label name="foo" value="bar"/>\n <Label name="system" value="abc"/>\n <LogLevelLabel>log_level</LogLevelLabel>\n </Loki>\n~' "$f"

sed -i 's/\(<Root.*\)/\1\n <AppenderRef ref="Loki"\/>/' "$f"

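done

In the cluster's stdout I see the following errors (I think this means the jar is not on the classpath, or that log4j2 is somehow looking for the wrong class):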

ERROR Error processing element Loki ([Appenders: null]): CLASS_NOT_FOUND

ERROR Unable to locate appender "Loki" for logger config "root"

I also tried adding the class name to the appender definition:

<Loki name="Loki" class="pl.tkowalcz.tjahzi.log4j2.LokiAppender" bufferSizeMegabytes="64">

instead of

<Loki name="Loki" bufferSizeMegabytes="64">

Same error.

Searching for the jar with

find /databricks -name '*log4j2-appender-nodep*' -type f

found nothing.
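Since that search came up empty, a slightly broader check can show whether the appender jar is anywhere the JVM could load it from, and whether it actually contains the appender class. This is a minimal sketch, assuming bash and (optionally) unzip on the cluster; find_loki_jar is a hypothetical helper, and the path of the class inside the jar is inferred from the class name pl.tkowalcz.tjahzi.log4j2.LokiAppender used above:

```shell
#!/usr/bin/env bash
# Sketch: list every Tjahzi appender jar under the given directories and,
# when `unzip` is installed, report whether the jar contains the appender
# class named in the question (pl.tkowalcz.tjahzi.log4j2.LokiAppender).
# Returns 0 if at least one jar was found, 1 otherwise.
find_loki_jar() {
  local found=1 dir jar
  for dir in "$@"; do
    [ -d "$dir" ] || continue            # skip directories that do not exist
    while IFS= read -r jar; do
      found=0
      echo "jar: $jar"
      if command -v unzip >/dev/null 2>&1; then
        if unzip -l "$jar" 2>/dev/null | grep -q 'pl/tkowalcz/tjahzi/log4j2/LokiAppender.class'; then
          echo "  contains LokiAppender"
        else
          echo "  LokiAppender class not found in jar"
        fi
      fi
    done < <(find "$dir" -name '*log4j2-appender-nodep*.jar' -type f 2>/dev/null)
  done
  return "$found"
}

# Example (directories are assumptions; adjust to your setup):
# find_loki_jar /databricks/jars /dbfs/Shared || echo "appender jar not found"
```

A non-zero return here is consistent with the CLASS_NOT_FOUND error: log4j2 can only resolve the Loki element if the jar is on the classpath when the configuration is parsed.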

I also tried downloading the jar file, copying it to DBFS and installing it as a JAR library (instead of a Maven library):

$ databricks fs cp local/log4j2-appender-nodep-0.9.23.jar dbfs:/Shared/log4j2-appender-nodep-0.9.23.jar

$ databricks libraries install --cluster-id 1-2-345 --jar "dbfs:/Shared/log4j2-appender-nodep-0.9.23.jar"

Same error.

I can POST to Loki from the cluster with curl, and I can see the log entry in the Grafana GUI:

ds=$(date +%s%N) && \

echo $ds && \

curl -v -H "Content-Type: application/json" -XPOST -s "http://loki.atops.abc.com:3100/loki/api/v1/push" --data-raw '{"streams": [{ "stream": { "foo": "bar2", "system": "abc" }, "values": [ [ "'$ds'", "'$ds': testing, testing" ] ] }]}'

Here is the content of the config file after the init script has run:

cat /databricks/spark/dbconf/log4j/driver/log4j2.xml

<?xml version="1.0" encoding="UTF-8"?><Configuration monitorInterval="30" status="INFO" packages="pl.tkowalcz.tjahzi.log4j2, com.databricks.logging" shutdownHook="disable">

<Appenders>

<Loki name="Loki" bufferSizeMegabytes="64">

<host>loki.atops.abc.com</host>

<port>3100</port>

<ThresholdFilter level="ALL"/>

<PatternLayout>

<Pattern>%X{tid} [%t] %d{MM-dd HH:mm:ss.SSS} %5p %c{1} - %m%n%exception{full}</Pattern>

</PatternLayout>

<Header name="X-Scope-OrgID" value="ABC"/>

<Label name="server" value="Databricks"/>

<Label name="foo" value="bar"/>

<Label name="system" value="abc"/>

<LogLevelLabel>log_level</LogLevelLabel>

</Loki>

<RollingFile name="publicFile.rolling" fileName="logs/log4j-active.log" filePattern="logs/log4j-%d{yyyy-MM-dd-HH}.log.gz" immediateFlush="true" bufferedIO="false" bufferSize="8192" createOnDemand="true">

<Policies>

<TimeBasedTriggeringPolicy/>

</Policies>

<PatternLayout pattern="%d{yy/MM/dd HH:mm:ss} %p %c{1}: %m%n%ex"/>

</RollingFile>

---snip---

</Appenders>

<Loggers>

<Root level="INFO">

<AppenderRef ref="Loki"/>

<AppenderRef ref="publicFile.rolling.rewrite"/>

</Root>

<Logger name="privateLog" level="INFO" additivity="false">

<AppenderRef ref="privateFile.rolling.rewrite"/>

</Logger>

---snip---

</Loggers>

</Configuration>

Answer 1

Stack Overflow user

Posted on 2022-11-04 07:24:18

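This is most likely because log4j is initialized when the cluster starts, while the libraries you specify in the Libraries UI are installed only after the cluster has started, so the Loki library isn't available when log4j reads its configuration.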

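The solution is to install the Loki library from the same init script that you use for the log4j configuration: just copy the library and its dependencies into the /databricks/jars/ folder (for example from DBFS, which is available to the script as /dbfs/...).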
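The copy step described above can be written a little more defensively, so that a missing jar stops the init script instead of showing up later as CLASS_NOT_FOUND. This is a minimal sketch, assuming bash; install_jars is a hypothetical helper, and the source folder in the commented usage line is the one from the example that follows:

```shell
#!/usr/bin/env bash
# Sketch of the copy step: copy every .jar from a source folder (e.g. the
# /dbfs FUSE path) into the folder the JVM loads jars from at startup, so
# the appender is on the classpath before log4j2 is initialized.
# install_jars is a hypothetical helper name, not a Databricks API.
install_jars() {
  local src="$1" dest="$2" copied=0 jar
  mkdir -p "$dest" || return 1
  for jar in "$src"/*.jar; do
    [ -e "$jar" ] || continue            # the glob matched nothing
    cp "$jar" "$dest/" || return 1
    echo "copied $(basename "$jar") to $dest"
    copied=$((copied + 1))
  done
  if [ "$copied" -eq 0 ]; then
    echo "no jars found in $src" >&2
    return 1
  fi
}

# In the init script, before the sed edits to log4j2.xml:
# install_jars /dbfs/Shared/custom_jars /databricks/jars || exit 1
```

Copying every jar in the folder covers the "and its dependencies" part of the advice; for the nodep jar there may be nothing else to copy. Exiting non-zero should, as far as I know, make the cluster launch fail, which is easier to notice than an error line in stdout.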

For example, if you have uploaded your jar file to /dbfs/Shared/custom_jars/log4j2-appender-nodep-0.9.23.jar, then add the following to the init script:

#!/bin/bash

cp /dbfs/Shared/custom_jars/log4j2-appender-nodep-0.9.23.jar /databricks/jars/

# other code to update log4j2.xml etc...

https://stackoverflow.com/questions/74309819
