Sharing the weights of a group of layers in Keras


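Asked 2020-12-10 10:50:07

In another question it was shown that a Dense layer can be reused on different input layers to share its weights. I am now wondering how to extend this principle to a whole group of layers; my attempt is as follows: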

    from keras.layers import Input, Dense, BatchNormalization, PReLU
    from keras.initializers import Constant
    from keras import backend as K

    def embedding_block(dim):
        dense = Dense(dim, activation=None, kernel_initializer='glorot_normal')
        activ = PReLU(alpha_initializer=Constant(value=0.25))(dense)
        bnorm = BatchNormalization()(activ)
        return bnorm

    def embedding_stack():
        return embedding_block(32)(embedding_block(16)(embedding_block(8)))

    common_embedding = embedding_stack()

Here I create "embedding blocks", each with a single dense layer of variable dimension, and try to chain them into an "embedding stack" made of blocks of increasing dimension. I would then like to apply this "common embedding" to several input layers (which all have the same shape) so that the weights are shared.

The code above fails with:

    <ipython-input-33-835f06ed7bbb> in embedding_block(dim)
          1 def embedding_block(dim):
          2     dense = Dense(dim, activation=None, kernel_initializer='glorot_normal')
    ----> 3     activ = PReLU(alpha_initializer=Constant(value=0.25))(dense)
          4     bnorm = BatchNormalization()(activ)
          5     return bnorm

    /localenv/lib/python3.8/site-packages/tensorflow/python/keras/engine/base_layer.py in __call__(self, *args, **kwargs)
        980       with ops.name_scope_v2(name_scope):
        981         if not self.built:
    --> 982           self._maybe_build(inputs)
        983
        984         with ops.enable_auto_cast_variables(self._compute_dtype_object):

    /localenv/lib/python3.8/site-packages/tensorflow/python/keras/engine/base_layer.py in _maybe_build(self, inputs)
       2641         # operations.
       2642         with tf_utils.maybe_init_scope(self):
    -> 2643           self.build(input_shapes)  # pylint:disable=not-callable
       2644       # We must set also ensure that the layer is marked as built, and the build
       2645       # shape is stored since user defined build functions may not be calling

    /localenv/lib/python3.8/site-packages/tensorflow/python/keras/utils/tf_utils.py in wrapper(instance, input_shape)
        321     if input_shape is not None:
        322       input_shape = convert_shapes(input_shape, to_tuples=True)
    --> 323     output_shape = fn(instance, input_shape)
        324     # Return shapes from `fn` as TensorShapes.
        325     if output_shape is not None:

    /localenv/lib/python3.8/site-packages/tensorflow/python/keras/layers/advanced_activations.py in build(self, input_shape)
        138   @tf_utils.shape_type_conversion
        139   def build(self, input_shape):
    --> 140     param_shape = list(input_shape[1:])
        141     if self.shared_axes is not None:
        142       for i in self.shared_axes:

    TypeError: 'NoneType' object is not subscriptable

What is the correct way to do this? Thanks!

1 Answer

Stack Overflow user

Accepted answer

Answered 2020-12-10 13:12:47

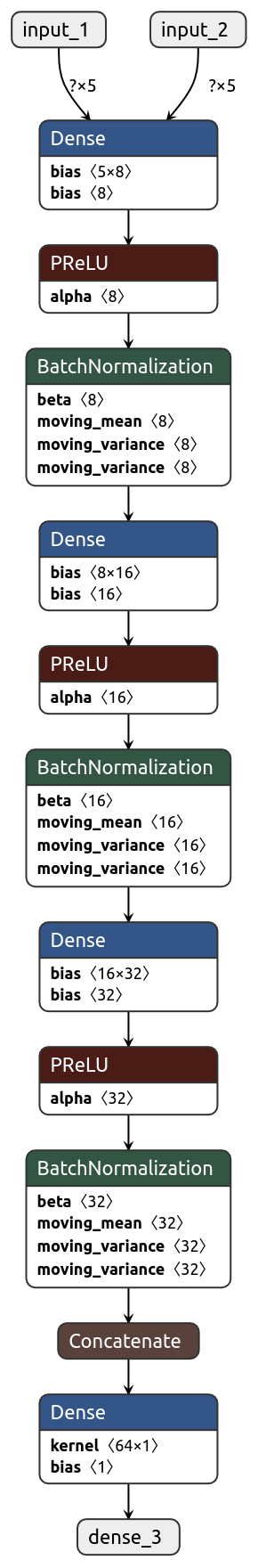

You need to separate layer instantiation from model creation.

Here is a simple approach using a for loop:

    from tensorflow.keras import layers, initializers, Model

    def embedding_block(dim):
        # Only instantiate the layers here; they are not called on any tensor yet.
        dense = layers.Dense(dim, activation=None, kernel_initializer='glorot_normal')
        activ = layers.PReLU(alpha_initializer=initializers.Constant(value=0.25))
        bnorm = layers.BatchNormalization()
        return [dense, activ, bnorm]

    # Flat list of layer objects, in the order they should be applied.
    stack = embedding_block(8) + embedding_block(16) + embedding_block(32)

    inp1 = layers.Input((5,))
    inp2 = layers.Input((5,))

    x, y = inp1, inp2
    for layer in stack:
        # Calling the same layer object on both branches shares its weights.
        x = layer(x)
        y = layer(y)

    concat_layer = layers.Concatenate()([x, y])
    pred = layers.Dense(1, activation="sigmoid")(concat_layer)

    model = Model(inputs=[inp1, inp2], outputs=pred)

We first create each layer, then iterate over them with the functional API to build the model.
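As a possible variation on the loop, the list of layers could also be wrapped in a single `Sequential` model, so that the whole stack becomes one callable object, which is closer to the `common_embedding` the question was aiming for. This is only a sketch under that assumption, not part of the original answer; the name `shared_embedding` is made up for illustration.

```python
from tensorflow.keras import layers, initializers, Model, Sequential

def embedding_block(dim):
    # Instantiate the layers only; nothing is called on a tensor here.
    return [
        layers.Dense(dim, activation=None, kernel_initializer="glorot_normal"),
        layers.PReLU(alpha_initializer=initializers.Constant(value=0.25)),
        layers.BatchNormalization(),
    ]

# Hypothetical name: one Sequential instance that holds the whole stack.
shared_embedding = Sequential(
    embedding_block(8) + embedding_block(16) + embedding_block(32),
    name="shared_embedding",
)

inp1 = layers.Input((5,))
inp2 = layers.Input((5,))

# Calling the same Sequential instance twice reuses the same weights.
x = shared_embedding(inp1)
y = shared_embedding(inp2)

merged = layers.Concatenate()([x, y])
pred = layers.Dense(1, activation="sigmoid")(merged)
model = Model(inputs=[inp1, inp2], outputs=pred)
```

The trade-off is mostly cosmetic: the loop keeps every layer visible at the top level of the model, while the `Sequential` wrapper shows the stack as a single nested layer in `model.summary()`.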

You can inspect the network in Netron to confirm that the weights are indeed shared.
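The sharing can also be checked in code, without a visualisation tool. The sketch below rebuilds the two branches from the answer as separate sub-models (the names `branch1`, `branch2`, `same_layers` and `same_outputs` are made up for illustration) and checks two things: that both branches are built from the very same layer objects, and that they produce the same output for the same input.

```python
import numpy as np
from tensorflow.keras import layers, initializers, Model

def embedding_block(dim):
    dense = layers.Dense(dim, activation=None, kernel_initializer="glorot_normal")
    activ = layers.PReLU(alpha_initializer=initializers.Constant(value=0.25))
    bnorm = layers.BatchNormalization()
    return [dense, activ, bnorm]

stack = embedding_block(8) + embedding_block(16) + embedding_block(32)

inp1 = layers.Input((5,))
inp2 = layers.Input((5,))
x, y = inp1, inp2
for layer in stack:
    x = layer(x)
    y = layer(y)

# Hypothetical helper models exposing each branch on its own.
branch1 = Model(inp1, x)
branch2 = Model(inp2, y)

# Skip the InputLayer at index 0; the remaining layers should be identical objects.
same_layers = all(a is b for a, b in zip(branch1.layers[1:], branch2.layers[1:]))

# Feeding the same data through both branches should give the same result.
data = np.random.rand(4, 5).astype("float32")
same_outputs = np.allclose(
    branch1.predict(data, verbose=0), branch2.predict(data, verbose=0)
)

print(same_layers, same_outputs)
```

If the weights are shared as intended, both flags should come out true; two independently created stacks would fail the identity check and, with random initialisation, almost certainly the output comparison as well.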

Original page content provided by Stack Overflow. Translation support provided by the Tencent Cloud Xiaowei IT-domain engine.

Original link:

https://stackoverflow.com/questions/65233116
