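Render to texture - ClearRenderTargetView() works, but no objects are rendered to the texture (rendering to the screen works fine)

I am trying to render the scene to a texture and then display that texture in a corner of the screen.

I thought I could do it this way: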

- 渲染场景(我的

Engine::render()方法将设置着色器并进行绘制调用)- works ok。 - 将渲染目标更改为纹理。

- 再次渲染场景-不工作。

context->ClearRenderTargetView(texture->getRenderTargetView(), { 1.0f, 0.0f, 0.0f, 1.0f } )to 将我的纹理设置为红色(对于步骤1中的场景,我使用不同的颜色),但是没有对象被呈现在上面。 - 将渲染目标更改为原始目标。

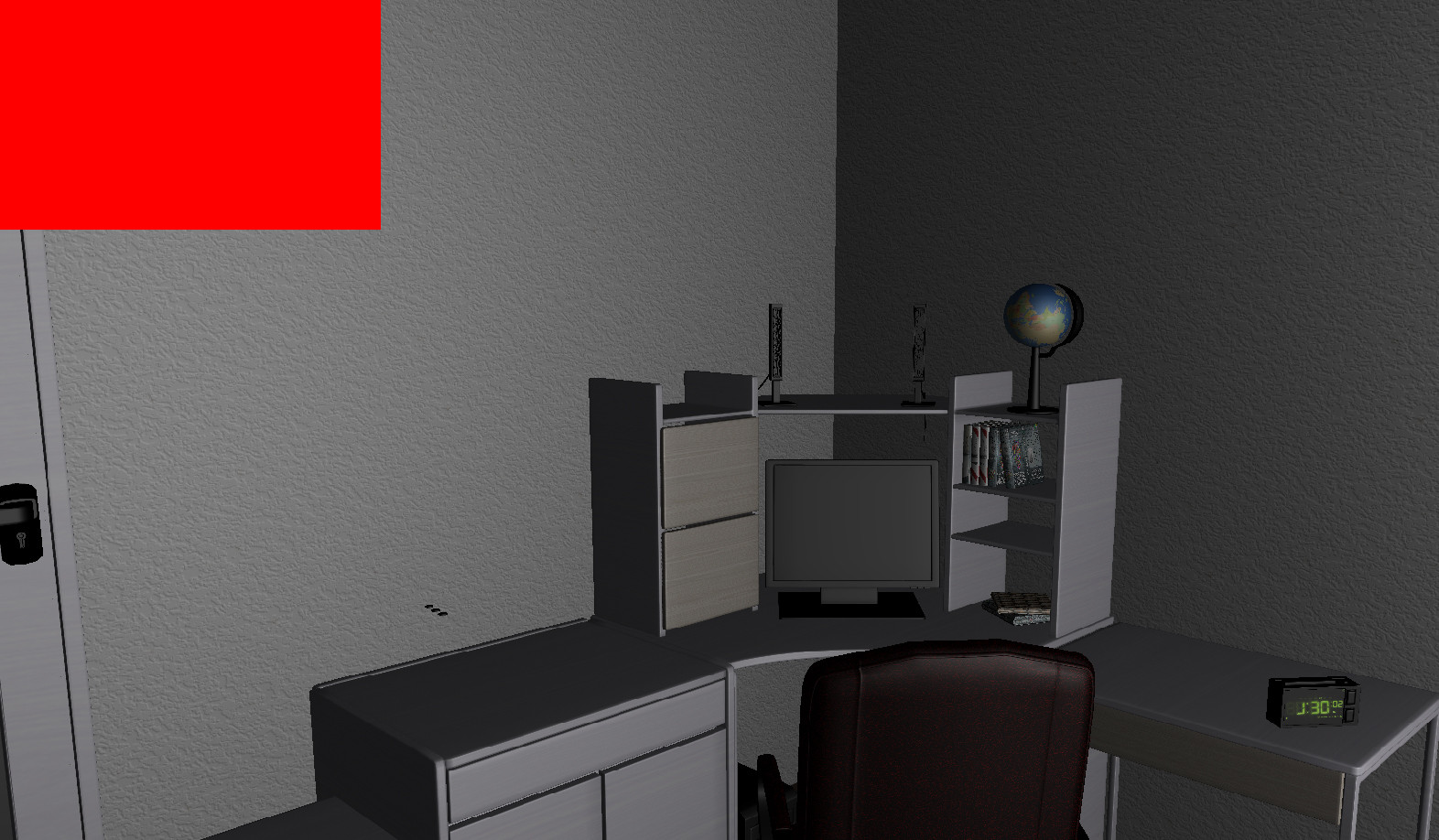

- 最后一次渲染场景,在具有我在步骤3中呈现的纹理的角处有矩形。- works ok。我看到了场景,角落里的小长方形。问题是,它只是红色的(我猜,在步骤3中呈现出了一些问题)。

结果(应该是“图像中的图像”而不是红色的矩形):

Code for steps 2-4:

context->OMSetRenderTargets(1, &textureRenderTargetView, depthStencilView);

float bg[4] = { 1.0f, 0.0f, 0.0f, 1.0f };

context->ClearRenderTargetView(textureRenderTargetView, bg); //backgroundColor - red, green, blue, alpha

render();

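context->OMSetRenderTargets(1, &myRenderTargetView, depthStencilView); //bind render target back to previous value (not to texture)

The render() method itself does not change (it works in step 1, so why does it not work when I render to the texture?), and it ends with swapChain->Present(0, 0).

I know that ClearRenderTargetView affects my texture (without it, the texture does not turn red). But the rest of the rendering either does not write to it, or there is some other problem.

Did I miss something?

I created the texture, its shader resource view, and its render target view based on this tutorial (maybe there is an error in my D3D11_TEXTURE2D_DESC?):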

D3D11_TEXTURE2D_DESC textureDesc;

D3D11_RENDER_TARGET_VIEW_DESC renderTargetViewDesc;

D3D11_SHADER_RESOURCE_VIEW_DESC shaderResourceViewDesc;

//1. create render target

ZeroMemory(&textureDesc, sizeof(textureDesc));

//setup the texture description

//we will need to have this texture bound as a render target AND a shader resource

textureDesc.Width = size.getX();

textureDesc.Height = size.getY();

textureDesc.MipLevels = 1;

textureDesc.ArraySize = 1;

textureDesc.Format = DXGI_FORMAT_R32G32B32A32_FLOAT;

textureDesc.SampleDesc.Count = 1;

textureDesc.Usage = D3D11_USAGE_DEFAULT;

textureDesc.BindFlags = D3D11_BIND_RENDER_TARGET | D3D11_BIND_SHADER_RESOURCE;

textureDesc.CPUAccessFlags = 0;

textureDesc.MiscFlags = 0;

//create the texture

device->CreateTexture2D(&textureDesc, NULL, &textureRenderTarget);

//2. create render target view

//setup the description of the render target view.

renderTargetViewDesc.Format = textureDesc.Format;

renderTargetViewDesc.ViewDimension = D3D11_RTV_DIMENSION_TEXTURE2D;

renderTargetViewDesc.Texture2D.MipSlice = 0;

//create the render target view

device->CreateRenderTargetView(textureRenderTarget, &renderTargetViewDesc, &textureRenderTargetView);

//3. create shader resource view

//setup the description of the shader resource view.

shaderResourceViewDesc.Format = textureDesc.Format;

shaderResourceViewDesc.ViewDimension = D3D11_SRV_DIMENSION_TEXTURE2D;

shaderResourceViewDesc.Texture2D.MostDetailedMip = 0;

shaderResourceViewDesc.Texture2D.MipLevels = 1;

//create the shader resource view.

device->CreateShaderResourceView(textureRenderTarget, &shaderResourceViewDesc, &texture);

The depth buffer:

D3D11_TEXTURE2D_DESC descDepth;

ZeroMemory(&descDepth, sizeof(descDepth));

descDepth.Width = width;

descDepth.Height = height;

descDepth.MipLevels = 1;

descDepth.ArraySize = 1;

descDepth.Format = DXGI_FORMAT_D24_UNORM_S8_UINT;

descDepth.SampleDesc.Count = sampleCount;

descDepth.SampleDesc.Quality = maxQualityLevel;

descDepth.Usage = D3D11_USAGE_DEFAULT;

descDepth.BindFlags = D3D11_BIND_DEPTH_STENCIL;

descDepth.CPUAccessFlags = 0;

descDepth.MiscFlags = 0;

The swap chain is set up like this:

DXGI_SWAP_CHAIN_DESC sd;

ZeroMemory(&sd, sizeof(sd));

sd.BufferCount = 1;

sd.BufferDesc.Width = width;

sd.BufferDesc.Height = height;

sd.BufferDesc.Format = DXGI_FORMAT_R8G8B8A8_UNORM;

sd.BufferDesc.RefreshRate.Numerator = numerator; //60

sd.BufferDesc.RefreshRate.Denominator = denominator; //1

sd.BufferUsage = DXGI_USAGE_RENDER_TARGET_OUTPUT;

sd.OutputWindow = *hwnd;

sd.SampleDesc.Count = sampleCount; //1 (and 0 for quality) to turn off multisampling

sd.SampleDesc.Quality = maxQualityLevel;

sd.Windowed = fullScreen ? FALSE : TRUE;

sd.Flags = DXGI_SWAP_CHAIN_FLAG_ALLOW_MODE_SWITCH; //allow full-screen switching

// Set the scan line ordering and scaling to unspecified.

sd.BufferDesc.ScanlineOrdering = DXGI_MODE_SCANLINE_ORDER_UNSPECIFIED;

sd.BufferDesc.Scaling = DXGI_MODE_SCALING_UNSPECIFIED;

// Discard the back buffer contents after presenting.

sd.SwapEffect = DXGI_SWAP_EFFECT_DISCARD;

I create the default render target view this way:

//create a render target view

ID3D11Texture2D* pBackBuffer = NULL;

result = swapChain->GetBuffer(0, __uuidof(ID3D11Texture2D), (LPVOID*)&pBackBuffer);

ERROR_HANDLE(SUCCEEDED(result), L"The swapChain->GetBuffer() failed.", MOD_GRAPHIC);

//Create the render target view with the back buffer pointer.

result = device->CreateRenderTargetView(pBackBuffer, NULL, &myRenderTargetView);

Answer 1

Stack Overflow user

Posted on 2015-08-11 17:34:34

As @Gnietschow suggested, after some debugging I found an error:

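D3D11 ERROR: ID3D11DeviceContext::OMSetRenderTargets: The RenderTargetView at slot 0 is not compatible with the DepthStencilView. DepthStencilViews may only be used with RenderTargetViews if the effective dimensions of the Views are equal, as well as the Resource types, multisample count, and multisample quality. The RenderTargetView at slot 0 has (w:1680,h:1050,as:1), while the Resource is a Texture2D with (mc:1,mq:0). The DepthStencilView has (w:1680,h:1050,as:1), while the Resource is a Texture2D with (mc:8,mq:16).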
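The rule quoted in that message can be modeled in a few lines of plain C++. This is only an illustrative sketch: ViewInfo and isCompatible are hypothetical names, not part of Direct3D, and the resource-type comparison that the real runtime also performs is assumed to pass and is not modeled.

```cpp
// Hypothetical stand-in for the values printed by the debug layer:
// w/h/as describe the view, mc/mq describe the underlying resource.
struct ViewInfo {
    unsigned width;
    unsigned height;
    unsigned arraySize;
    unsigned sampleCount;   // "mc" in the message
    unsigned sampleQuality; // "mq" in the message
};

// True when a render target view and a depth-stencil view satisfy the
// dimension and multisample parts of the rule quoted in the error message.
// Assumption: both resources have the same type (not checked here).
constexpr bool isCompatible(const ViewInfo& rtv, const ViewInfo& dsv) {
    return rtv.width == dsv.width
        && rtv.height == dsv.height
        && rtv.arraySize == dsv.arraySize
        && rtv.sampleCount == dsv.sampleCount
        && rtv.sampleQuality == dsv.sampleQuality;
}

// Values taken from the error message above.
constexpr ViewInfo textureRtv{1680, 1050, 1, 1, 0};
constexpr ViewInfo msaaDepth{1680, 1050, 1, 8, 16};

static_assert(!isCompatible(textureRtv, msaaDepth),
              "mismatched multisample settings must be rejected");
```

With the values from the message, the dimensions match but the multisample settings do not, which is why the texture could be cleared (clearing does not involve the depth buffer) while draw calls produced nothing.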

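So basically, my render target (the texture) was not using anti-aliasing, while my back buffer and depth buffer were.

I had to set SampleDesc.Count to 1 and SampleDesc.Quality to 0 in the D3D11_TEXTURE2D_DESC of the depth buffer (which shares its sampleCount and maxQualityLevel values with the swap chain) so that it matches the texture I render to. In other words, I had to turn off anti-aliasing when rendering to the texture.

I wonder why anti-aliasing is not supported here. When I set SampleDesc.Count and SampleDesc.Quality for the texture render target to my standard values (8 and 16, which work fine on my GPU when rendering the scene), device->CreateTexture2D(...) fails, even though I use the same values everywhere.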
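One possible cause, which this thread does not confirm: multisample quality levels are reported per format, and the texture uses DXGI_FORMAT_R32G32B32A32_FLOAT while the back buffer uses DXGI_FORMAT_R8G8B8A8_UNORM. ID3D11Device::CheckMultisampleQualityLevels reports the number of quality levels for a given format and sample count; a result of 0 means the combination is unsupported, and otherwise the valid quality values run from 0 to that number minus one. The sketch below models only that range check in plain C++. isSampleDescUsable is a hypothetical helper, and the level counts in the usage note are made-up illustration values, not measurements from any GPU.

```cpp
// Hypothetical helper, not part of Direct3D: decides whether a
// (count, quality) pair is usable, given the number of quality levels
// the device reported for one specific format and sample count.
constexpr bool isSampleDescUsable(unsigned sampleCount,
                                  unsigned sampleQuality,
                                  unsigned numQualityLevels) {
    // Zero levels means the format/count combination is unsupported;
    // otherwise valid quality values are 0 .. numQualityLevels - 1.
    return sampleCount >= 1
        && numQualityLevels > 0
        && sampleQuality < numQualityLevels;
}
```

For example, if a device reported 17 levels for one format at 8 samples, quality 16 would be accepted for that format; if it reported fewer levels (or 0) for another format, the same (8, 16) pair would be rejected there. A multisampled texture would presumably also need the D3D11_RTV_DIMENSION_TEXTURE2DMS and D3D11_SRV_DIMENSION_TEXTURE2DMS view dimensions instead of the TEXTURE2D ones used in the question.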

https://stackoverflow.com/questions/31931194
