Transforming a slide in a photo with OpenCV


Asked 2015-09-22 12:58:29

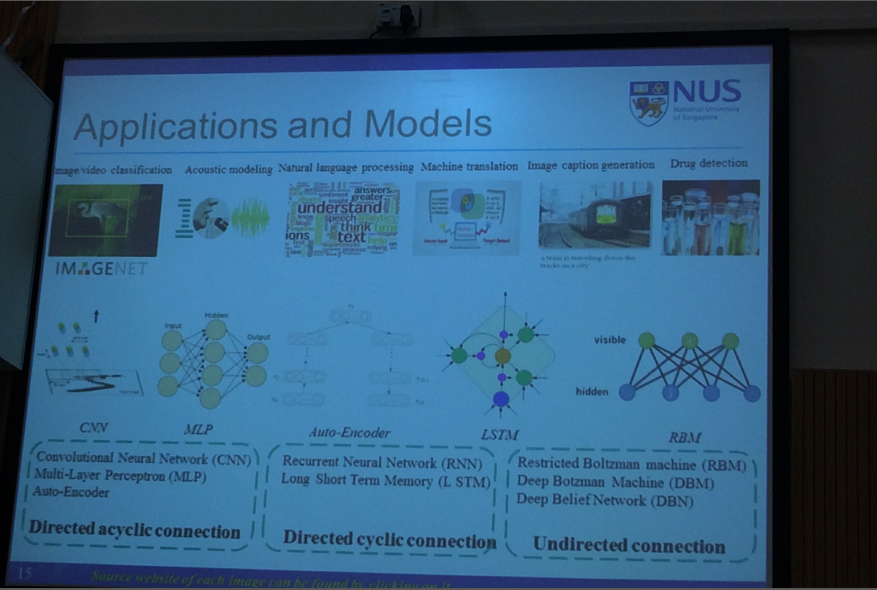

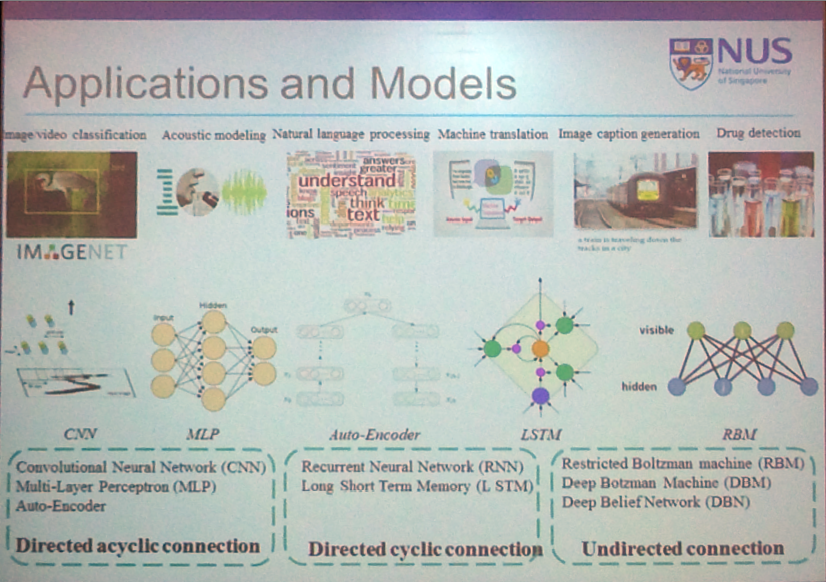

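How can I use OpenCV, or another suitable image processing tool, to automatically improve a photographed slide? I guess it needs to detect the projected area, auto-adjust contrast and color, and apply a perspective warp.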

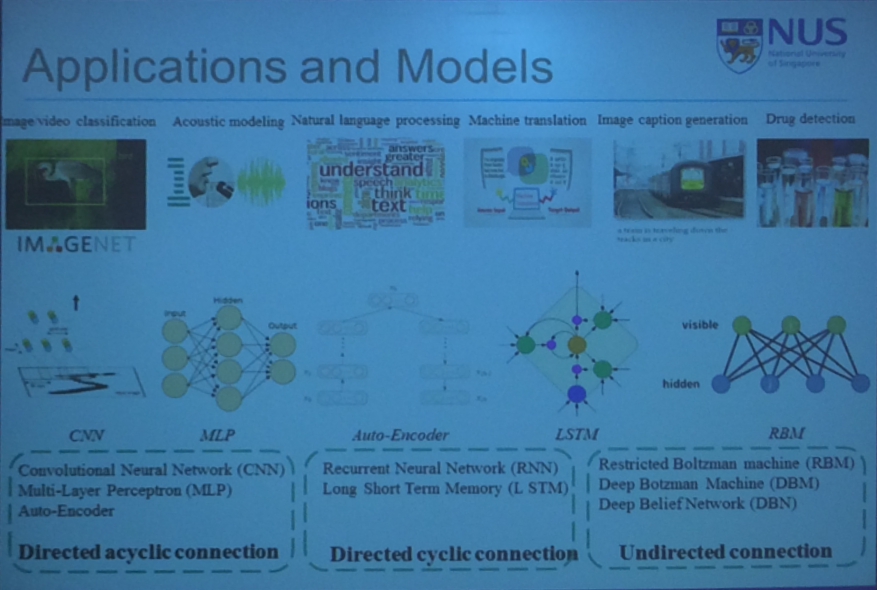

I used Photoshop to convert it manually

to

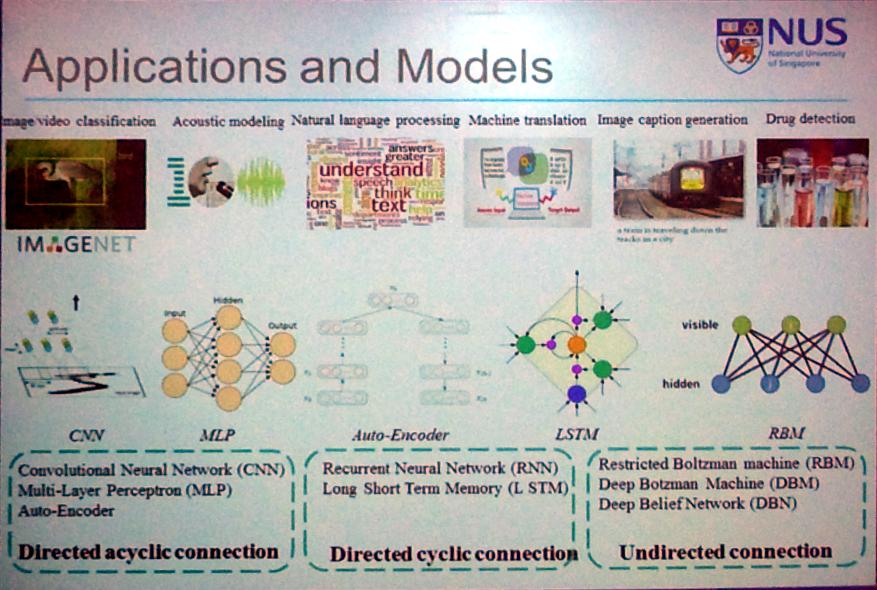

by using 1) Auto Contrast and 2) Perspective Warp in Photoshop.

1 Answer

Stack Overflow user

Accepted answer

Answered 2015-09-27 11:24:58

You can:

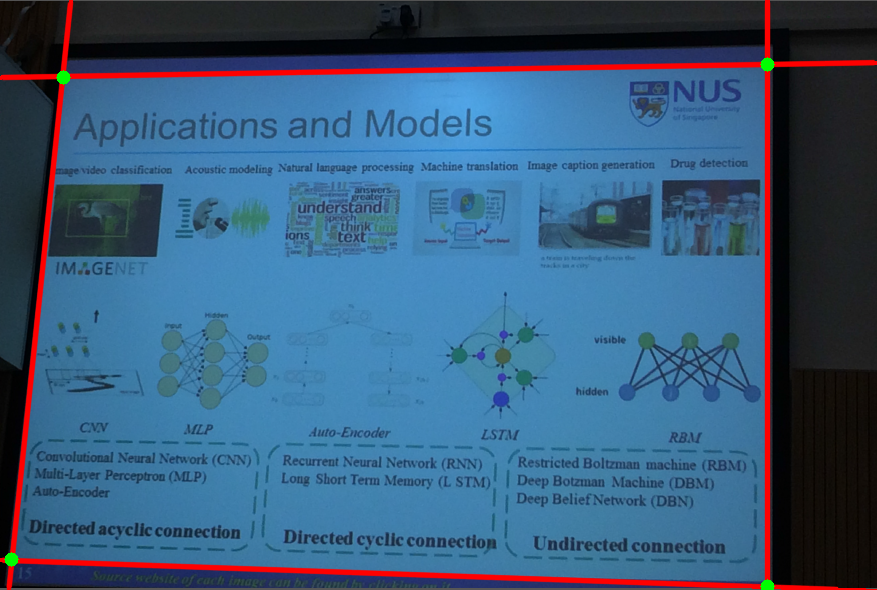

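1) Threshold the HSV image on an almost-blue color to get the poster mask:

2) Find the external lines and their intersections:

3) Apply a perspective transform:

4) Apply some color enhancement. Here I used an equivalent of Matlab's imadjust, ported to OpenCV (the imadjust function in the code below).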
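
To make step 1 more concrete, here is a minimal sketch of what an "almost blue" HSV threshold tests for a single pixel. It assumes OpenCV's 8-bit HSV scaling (H stored as degrees / 2, so 0 to 179; S and V scaled to 0 to 255). The names `Hsv8`, `bgrToHsv8` and `inHsvRange` are hypothetical helpers written for illustration, not OpenCV functions, and the rounding may differ by one level from what `cvtColor` produces.

```cpp
#include <algorithm>
#include <cmath>
#include <cstdint>

// 8-bit HSV triple, assuming OpenCV's COLOR_BGR2HSV scaling:
// H in [0, 179] (degrees / 2), S and V in [0, 255].
struct Hsv8 { int h, s, v; };

// Convert one 8-bit BGR pixel to 8-bit HSV (illustrative helper).
Hsv8 bgrToHsv8(std::uint8_t b, std::uint8_t g, std::uint8_t r)
{
    const float bf = b / 255.0f, gf = g / 255.0f, rf = r / 255.0f;
    const float vmax = std::max({bf, gf, rf});
    const float vmin = std::min({bf, gf, rf});
    const float delta = vmax - vmin;

    // Hue in degrees; grey pixels (delta == 0) get hue 0.
    float hDeg = 0.0f;
    if (delta > 0.0f) {
        if (vmax == rf)      hDeg = 60.0f * (gf - bf) / delta;
        else if (vmax == gf) hDeg = 120.0f + 60.0f * (bf - rf) / delta;
        else                 hDeg = 240.0f + 60.0f * (rf - gf) / delta;
        if (hDeg < 0.0f) hDeg += 360.0f;
    }
    const float s = (vmax > 0.0f) ? delta / vmax : 0.0f;

    return { static_cast<int>(std::lround(hDeg / 2.0f)) % 180,
             static_cast<int>(std::lround(s * 255.0f)),
             static_cast<int>(std::lround(vmax * 255.0f)) };
}

// True when every channel lies inside [lo, hi], both ends inclusive.
// This is the per-pixel test that a range threshold applies.
bool inHsvRange(const Hsv8& p, const Hsv8& lo, const Hsv8& hi)
{
    return lo.h <= p.h && p.h <= hi.h &&
           lo.s <= p.s && p.s <= hi.s &&
           lo.v <= p.v && p.v <= hi.v;
}
```

With the bounds used in the answer, (100, 140, 120) to (110, 170, 200), the hue window 100 to 110 corresponds to roughly 200 to 220 degrees, a slightly cyan-leaning blue. A fully saturated pure blue (hue 240 degrees, stored as 120) falls outside that window, so the bounds would likely need retuning for a different slide background.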

Here is the full code. The comments should clarify each step. Please let me know if something is not clear.

    #include <opencv2/opencv.hpp>
    #include <algorithm>
    #include <iostream>
    #include <iterator>
    #include <vector>

    using namespace cv;
    using namespace std;

    // Contrast stretch modelled on Matlab's imadjust
    void imadjust(const Mat1b& src, Mat1b& dst, int tol = 1, Vec2i in = Vec2i(0, 255), Vec2i out = Vec2i(0, 255))
    {
        // src : input CV_8UC1 image
        // dst : output CV_8UC1 image
        // tol : tolerance, from 0 to 100
        // in  : src image bounds
        // out : dst image bounds

        dst = src.clone();

        tol = max(0, min(100, tol));

        if (tol > 0)
        {
            // Compute in and out limits

            // Histogram
            vector<int> hist(256, 0);
            for (int r = 0; r < src.rows; ++r) {
                for (int c = 0; c < src.cols; ++c) {
                    hist[src(r, c)]++;
                }
            }

            // Cumulative histogram
            vector<int> cum = hist;
            for (size_t i = 1; i < hist.size(); ++i) {
                cum[i] = cum[i - 1] + hist[i];
            }

            // Compute bounds
            int total = src.rows * src.cols;
            int low_bound = total * tol / 100;
            int upp_bound = total * (100 - tol) / 100;
            in[0] = int(distance(cum.begin(), lower_bound(cum.begin(), cum.end(), low_bound)));
            in[1] = int(distance(cum.begin(), lower_bound(cum.begin(), cum.end(), upp_bound)));
        }

        // A flat histogram would cause a division by zero below: leave dst as a copy of src
        if (in[1] <= in[0])
        {
            return;
        }

        // Stretching
        float scale = float(out[1] - out[0]) / float(in[1] - in[0]);
        for (int r = 0; r < dst.rows; ++r)
        {
            for (int c = 0; c < dst.cols; ++c)
            {
                int vs = max(src(r, c) - in[0], 0);
                int vd = min(int(vs * scale + 0.5f) + out[0], out[1]);
                dst(r, c) = saturate_cast<uchar>(vd);
            }
        }
    }

    int main()
    {
        // Load image
        Mat3b img = imread("path_to_image");
        if (img.empty())
        {
            cout << "Could not read the image" << endl;
            return -1;
        }
        Mat3b dbg = img.clone(); // Debug image

        // Convert to HSV
        Mat3b hsv;
        cvtColor(img, hsv, COLOR_BGR2HSV);

        // Threshold on HSV values
        Mat1b mask;
        inRange(hsv, Scalar(100, 140, 120), Scalar(110, 170, 200), mask);

        // Get the external boundaries
        Mat1b top(mask.rows, mask.cols, uchar(0));
        Mat1b bottom(mask.rows, mask.cols, uchar(0));
        Mat1b left(mask.rows, mask.cols, uchar(0));
        Mat1b right(mask.rows, mask.cols, uchar(0));

        for (int r = 0; r < mask.rows; ++r)
        {
            // Find first in row
            for (int c = 0; c < mask.cols; ++c)
            {
                if (mask(r, c))
                {
                    left(r, c) = 255;
                    break;
                }
            }

            // Find last in row
            for (int c = mask.cols - 1; c >= 0; --c)
            {
                if (mask(r, c))
                {
                    right(r, c) = 255;
                    break;
                }
            }
        }

        for (int c = 0; c < mask.cols; ++c)
        {
            // Find first in col
            for (int r = 0; r < mask.rows; ++r)
            {
                if (mask(r, c))
                {
                    top(r, c) = 255;
                    break;
                }
            }

            // Find last in col
            for (int r = mask.rows - 1; r >= 0; --r)
            {
                if (mask(r, c))
                {
                    bottom(r, c) = 255;
                    break;
                }
            }
        }

        // Find lines
        vector<Vec2f> linesTop, linesBottom, linesLeft, linesRight;
        HoughLines(top, linesTop, 1, CV_PI / 180.0, 100);
        HoughLines(bottom, linesBottom, 1, CV_PI / 180.0, 100);
        HoughLines(left, linesLeft, 1, CV_PI / 180.0, 100);
        HoughLines(right, linesRight, 1, CV_PI / 180.0, 100);

        // Find intersections
        Mat1b maskLines(mask.rows, mask.cols, uchar(0));

        if (linesTop.empty() || linesBottom.empty() || linesLeft.empty() || linesRight.empty())
        {
            cout << "Not enough lines detected" << endl;
            return -1;
        }

        // Keep only the first line detected for each side
        vector<Vec2f> lines{ linesTop[0], linesBottom[0], linesLeft[0], linesRight[0] };

        for (size_t i = 0; i < lines.size(); i++)
        {
            float rho = lines[i][0], theta = lines[i][1];

            // Get 2 points on each line
            Point pt1, pt2;
            double a = cos(theta), b = sin(theta);
            double x0 = a * rho, y0 = b * rho;
            pt1.x = cvRound(x0 + 1000 * (-b));
            pt1.y = cvRound(y0 + 1000 * (a));
            pt2.x = cvRound(x0 - 1000 * (-b));
            pt2.y = cvRound(y0 - 1000 * (a));

            // Draw lines: each line adds 1 to the pixels it covers
            Mat1b maskCurrentLine(mask.rows, mask.cols, uchar(0));
            line(maskCurrentLine, pt1, pt2, Scalar(1), 1);
            maskLines += maskCurrentLine;

            // LINE_AA is the OpenCV 3+ name; use CV_AA on OpenCV 2.4
            line(dbg, pt1, pt2, Scalar(0, 0, 255), 3, LINE_AA);
        }

        // Keep only intersections (pixels covered by more than one line)
        maskLines = maskLines > 1;

        // Get ordered set of vertices
        vector<Point2f> vertices;

        // Top left
        Mat1b tl(maskLines(Rect(0, 0, mask.cols / 2, mask.rows / 2)));
        for (int r = 0; r < tl.rows; ++r)
        {
            for (int c = 0; c < tl.cols; ++c)
            {
                if (tl(r, c))
                {
                    vertices.push_back(Point2f(c, r));
                }
            }
        }

        // Top right
        Mat1b tr(maskLines(Rect(mask.cols / 2, 0, mask.cols / 2, mask.rows / 2)));
        for (int r = 0; r < tr.rows; ++r)
        {
            for (int c = 0; c < tr.cols; ++c)
            {
                if (tr(r, c))
                {
                    vertices.push_back(Point2f(mask.cols / 2 + c, r));
                }
            }
        }

        // Bottom right
        Mat1b br(maskLines(Rect(mask.cols / 2, mask.rows / 2, mask.cols / 2, mask.rows / 2)));
        for (int r = 0; r < br.rows; ++r)
        {
            for (int c = 0; c < br.cols; ++c)
            {
                if (br(r, c))
                {
                    vertices.push_back(Point2f(mask.cols / 2 + c, mask.rows / 2 + r));
                }
            }
        }

        // Bottom left
        Mat1b bl(maskLines(Rect(0, mask.rows / 2, mask.cols / 2, mask.rows / 2)));
        for (int r = 0; r < bl.rows; ++r)
        {
            for (int c = 0; c < bl.cols; ++c)
            {
                if (bl(r, c))
                {
                    vertices.push_back(Point2f(c, mask.rows / 2 + r));
                }
            }
        }

        // Draw vertices (FILLED is the OpenCV 3+ name; use CV_FILLED on OpenCV 2.4)
        for (size_t i = 0; i < vertices.size(); ++i)
        {
            circle(dbg, vertices[i], 7, Scalar(0, 255, 0), FILLED);
        }

        // Init output image
        Mat3b result(img.rows, img.cols, Vec3b(0, 0, 0));

        // Output vertices
        vector<Point2f> verticesOut = { Point2f(0, 0), Point2f(img.cols, 0), Point2f(img.cols, img.rows), Point2f(0, img.rows) };

        // getPerspectiveTransform needs exactly 4 source points
        if (vertices.size() != 4)
        {
            cout << "Expected 4 vertices, found " << vertices.size() << endl;
            return -1;
        }

        // Get transformation
        Mat M = getPerspectiveTransform(vertices, verticesOut);
        warpPerspective(img, result, M, result.size());

        // Imadjust, applied to each color plane separately
        vector<Mat1b> planes;
        split(result, planes);
        for (size_t i = 0; i < planes.size(); ++i)
        {
            imadjust(planes[i], planes[i]);
        }

        Mat3b adjusted;
        merge(planes, adjusted);

        imshow("Result", result);
        imshow("Adjusted", adjusted);
        waitKey();

        return 0;
    }
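
The code above finds the four corners by drawing the Hough lines into a mask and looking for pixels covered by two lines. As a possible alternative, the intersection of two lines in (rho, theta) form can be computed in closed form by solving the 2x2 system x·cos(theta) + y·sin(theta) = rho for both lines. The sketch below is not part of the original answer; `HoughLine`, `Pt` and `intersect` are hypothetical names, and it assumes the same (rho, theta) convention that `HoughLines` returns.

```cpp
#include <cmath>

// A line in Hough normal form: x*cos(theta) + y*sin(theta) = rho.
struct HoughLine { double rho, theta; };

// Plain 2D point with sub-pixel coordinates.
struct Pt { double x, y; };

// Intersect two Hough lines using Cramer's rule.
// Returns false when the lines are (nearly) parallel, in which case
// 'out' is left untouched.
bool intersect(const HoughLine& a, const HoughLine& b, Pt& out, double eps = 1e-9)
{
    const double ca = std::cos(a.theta), sa = std::sin(a.theta);
    const double cb = std::cos(b.theta), sb = std::sin(b.theta);

    // Determinant of [[ca, sa], [cb, sb]]; equals sin(b.theta - a.theta).
    const double det = ca * sb - sa * cb;
    if (std::fabs(det) < eps) {
        return false;
    }

    out.x = (a.rho * sb - b.rho * sa) / det;
    out.y = (b.rho * ca - a.rho * cb) / det;
    return true;
}
```

Intersecting top with left, top with right, bottom with right, and bottom with left would give the four corners directly in the order the perspective transform expects. It always yields exactly one point per corner and does not depend on two rasterized lines happening to share a pixel.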

Original question:

https://stackoverflow.com/questions/32717546
