How to find black squares with an arbitrary rotation angle in an image using Emgu CV

Asked 2016-02-07 07:39:00

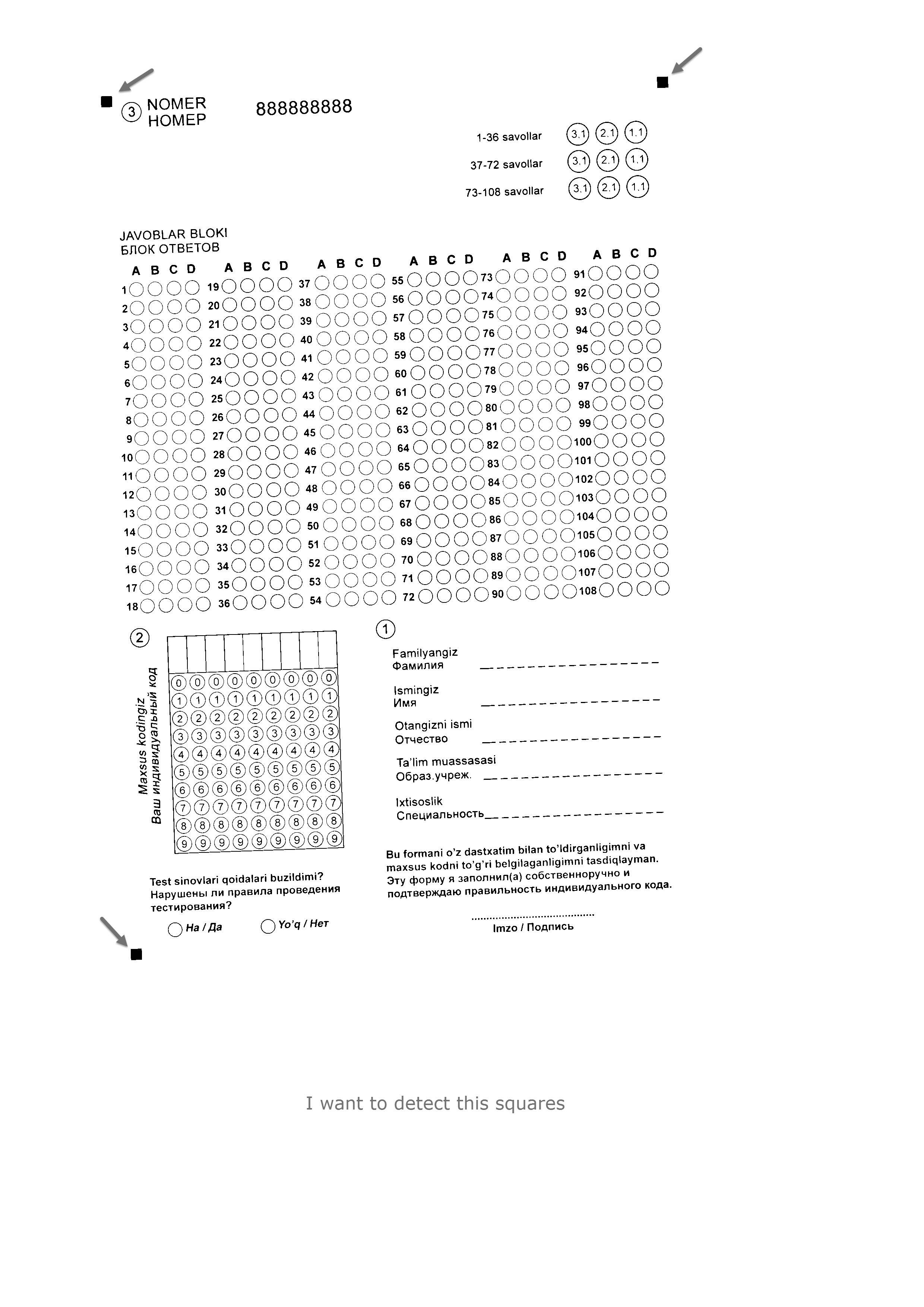

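I need to find the coordinates of the three black squares in a test form. I took the sample code from emgu.com and modified it slightly, but it does not find what I need. The image is A4 size and the test form is A5 size. I hope you can help :) I almost forgot: the squares are 30 pixels in size.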

    private void DetectRectangles(Image<Gray, byte> img)
    {
        var size = new Size(3, 3);
        CvInvoke.GaussianBlur(img, img, size, 0);
        CvInvoke.AdaptiveThreshold(img, img, 255, AdaptiveThresholdType.MeanC, ThresholdType.Binary, 75, 100);

        UMat cannyEdges = new UMat();
        CvInvoke.Canny(img, cannyEdges, 180, 120);

        var boxList = new List<RotatedRect>();

        using (VectorOfVectorOfPoint contours = new VectorOfVectorOfPoint())
        {
            CvInvoke.FindContours(cannyEdges, contours, null, RetrType.Tree, ChainApproxMethod.ChainApproxSimple);
            int count = contours.Size;
            for (int i = 0; i < count; i++)
            {
                using (VectorOfPoint contour = contours[i])
                using (VectorOfPoint approxContour = new VectorOfPoint())
                {
                    CvInvoke.ApproxPolyDP(contour, approxContour, CvInvoke.ArcLength(contour, true) * 0.05, true);
                    var area = CvInvoke.ContourArea(approxContour);
                    if (area > 800 && area < 1000)
                    {
                        if (approxContour.Size == 4)
                        {
                            bool isRectangle = true;
                            Point[] pts = approxContour.ToArray();
                            LineSegment2D[] edges = PointCollection.PolyLine(pts, true);

                            for (int j = 0; j < edges.Length; j++)
                            {
                                double angle = Math.Abs(edges[(j + 1) % edges.Length].GetExteriorAngleDegree(edges[j]));
                                if (angle < 75 || angle > 94)
                                {
                                    isRectangle = false;
                                    break;
                                }
                            }

                            if (isRectangle)
                                boxList.Add(CvInvoke.MinAreaRect(approxContour));
                        }
                    }
                }
            }
        }

        var resultimg = new Image<Bgr,byte>(img.Width, img.Height);
        CvInvoke.CvtColor(img, resultimg, ColorConversion.Gray2Bgr);

        foreach (RotatedRect box in boxList)
        {
            CvInvoke.Polylines(resultimg, Array.ConvertAll(box.GetVertices(), Point.Round), true, new Bgr(Color.Red).MCvScalar, 2);
        }

        imageBox1.Image = resultimg;
        resultimg.Save("result_img.jpg");
    }

The input image is the one shown above the question text.

1 Answer

Stack Overflow user

Accepted answer

Answered 2016-02-07 08:33:04

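Since you are looking for a very specific object, you can use the following algorithm:

1. Invert the image so that the foreground becomes white and the background black.
2. Find the contours of the connected components.
3. For each contour:
   a. Compute the minimum-area rectangle `box`.
   b. Compute the area of `box`: `barea`.
   c. Compute the area of the contour: `carea`.
   d. Apply some constraints to make sure the contour is the square you are looking for.

The constraints for step 3d are:

1. The ratio `carea / barea` should be high (say, above 0.9), meaning that the contour belongs to an almost rectangular blob.
2. The aspect ratio of `box` should be close to 1, meaning that `box` is basically a square.
3. The size of the square should be close to 30 pixels, to reject other smaller or larger squares in the image.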
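The three constraints can be sketched as a small predicate on plain numbers, independent of OpenCV. This is only an illustration: `SquareLimits` and `looksLikeTargetSquare` are hypothetical names that do not appear in the answer or in any library, and the default thresholds (0.9, 0.95, 25 to 35 pixels) are the ones used in the answer's code, which may need tuning for other scans.

```cpp
#include <algorithm>

// Hypothetical helper, not part of OpenCV or of the answer's code.
// Thresholds default to the values used in the answer.
struct SquareLimits {
    double minFill = 0.9;     // constraint 1: contour area / box area
    double minAspect = 0.95;  // constraint 2: short side / long side
    double minSide = 25.0;    // constraint 3: the expected side is about 30 px
    double maxSide = 35.0;
};

// Returns true when a contour with the given area, enclosed by a rotated box of
// the given width and height, passes all three constraints.
bool looksLikeTargetSquare(double contourArea, double boxWidth, double boxHeight,
                           const SquareLimits& lim = SquareLimits{})
{
    const double boxArea = boxWidth * boxHeight;
    if (boxArea <= 0.0) return false;  // degenerate box, nothing to test

    // Constraint 1: the contour fills almost all of its minimum-area box.
    if (contourArea / boxArea <= lim.minFill) return false;

    // Constraint 2: the box is nearly square.
    const double shortSide = std::min(boxWidth, boxHeight);
    const double longSide = std::max(boxWidth, boxHeight);
    if (shortSide / longSide <= lim.minAspect) return false;

    // Constraint 3: the side length is close to the expected 30 px.
    return boxWidth > lim.minSide && boxWidth < lim.maxSide;
}
```

With OpenCV, the arguments would come from `contourArea(contours[i])` and from the `size.width` and `size.height` of the `RotatedRect` returned by `minAreaRect`. Because the tests use only ratios and side lengths of the rotated box, the result does not depend on the rotation angle of the square.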

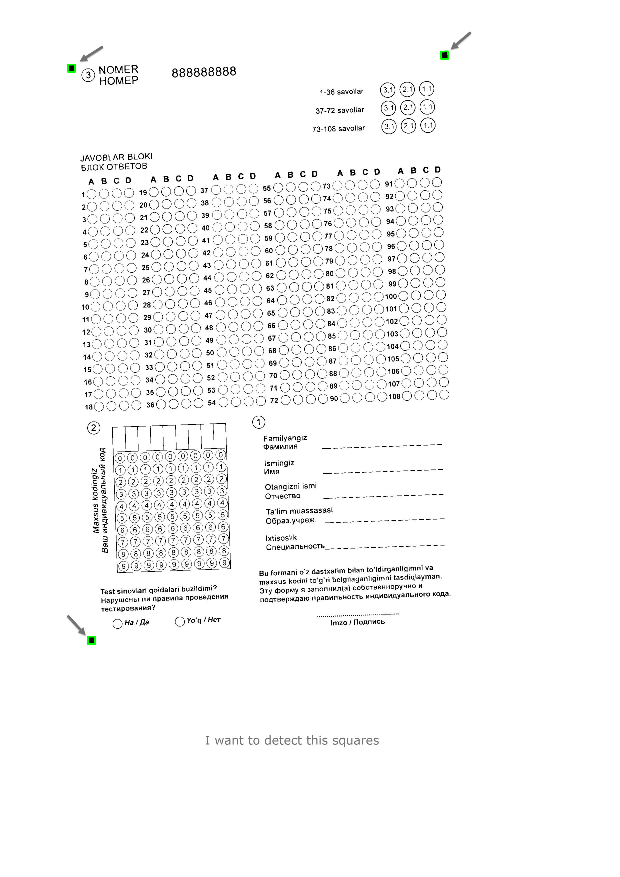

我运行的结果是:

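Here is the code. Sorry, it is C++, but since it consists entirely of OpenCV function calls, you should be able to port it to C# easily. At the very least, you can use it as a reference: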

    #include <opencv2/opencv.hpp>
    #include <iostream>

    using namespace cv;
    using namespace std;

    int main()
    {
        // Load image
        Mat1b img = imread("path_to_image", IMREAD_GRAYSCALE);

        // Create the output image
        Mat3b out;
        cvtColor(img, out, COLOR_GRAY2BGR);

        // Create debug image
        Mat3b dbg = out.clone();

        // Binarize (to remove JPEG artifacts)
        img = img > 200;

        // Invert image
        img = ~img;

        // Find connected components
        vector<vector<Point>> contours;
        findContours(img.clone(), contours, RETR_EXTERNAL, CHAIN_APPROX_SIMPLE);

        vector<RotatedRect> squares;

        // For each contour
        for (int i = 0; i < static_cast<int>(contours.size()); ++i)
        {
            // Find rotated bounding box
            RotatedRect box = minAreaRect(contours[i]);

            // Compute the area of the contour
            double carea = contourArea(contours[i]);

            // Compute the area of the box
            double barea = box.size.area();

            // Constraint #1: the contour fills most of its box
            if ((carea / barea) > 0.9)
            {
                drawContours(dbg, contours, i, Scalar(0, 0, 255), 7);

                // Constraint #2: the box is almost a square
                if (min(box.size.height, box.size.width) / max(box.size.height, box.size.width) > 0.95)
                {
                    drawContours(dbg, contours, i, Scalar(255, 0, 0), 5);

                    // Constraint #3: the side is about 30 pixels
                    if (box.size.width > 25 && box.size.width < 35)
                    {
                        drawContours(dbg, contours, i, Scalar(0, 255, 0), 3);

                        // Found the square!
                        squares.push_back(box);
                    }
                }
            }
        }

        // Draw output
        for (int i = 0; i < static_cast<int>(squares.size()); ++i)
        {
            Point2f pts[4];
            squares[i].points(pts);

            for (int j = 0; j < 4; ++j)
            {
                line(out, pts[j], pts[(j + 1) % 4], Scalar(0, 255, 0), 5);
            }
        }

        // Resize for better visualization
        resize(out, out, Size(), 0.25, 0.25);
        resize(dbg, dbg, Size(), 0.25, 0.25);

        // Show images
        imshow("Steps", dbg);
        imshow("Result", out);
        waitKey();

        return 0;
    }

Original page content provided by Stack Overflow.

Original link:

https://stackoverflow.com/questions/35250994
