How do I log a single scalar value from a TensorFlow Variable?

Asked 2016-04-24 14:50:08

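How can I log (with SummaryWriter, e.g. for TensorBoard) a single scalar element of a tensor Variable? For example, how can I log the individual weights of a given layer or node in the network?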

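In my example code, I have pressed a general feed-forward neural network into service to do simple linear regression, and I want (in this case) to log the weights of the lone node in the lone hidden layer as learning progresses.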

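I can get these values explicitly during a session with, for example,

sess.run(layer_weights)[0][i][0]

for the i-th weight, where layer_weights is a list of the weight Variables; but I can't figure out how to log the corresponding scalar values. If I try

w1 = tf.slice(layer_weights[0], [0], [1])[0]
tf.scalar_summary('w1', w1)

or

w1 = layer_weights[0][1][0]
tf.scalar_summary('w1', w1)

I get

ValueError: Shape (5, 1) must have rank 1

How do I log a single scalar value from a TensorFlow Variable?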

from __future__ import (absolute_import, print_function, division, unicode_literals)

import numpy as np
import tensorflow as tf

# Basic model parameters as external flags
flags = tf.app.flags
FLAGS = flags.FLAGS
flags.DEFINE_float('network_nodes', [5, 1], 'The number of nodes in each layer, including input and output.')
flags.DEFINE_float('epochs', 250, 'Epochs to run')
flags.DEFINE_float('learning_rate', 0.15, 'Initial learning rate.')
flags.DEFINE_string('data_dir', './data', 'Directory to hold training and test data.')
flags.DEFINE_string('train_dir', './_tmp/train', 'Directory to log training (and the network def).')
flags.DEFINE_string('test_dir', './_tmp/test', 'Directory to log testing.')

def variable_summaries(var, name):
    with tf.name_scope("summaries"):
        mean = tf.reduce_mean(var)
        tf.scalar_summary('mean/' + name, mean)
        with tf.name_scope('stddev'):
            stddev = tf.sqrt(tf.reduce_sum(tf.square(var - mean)))
        tf.scalar_summary('sttdev/' + name, stddev)
        tf.scalar_summary('max/' + name, tf.reduce_max(var))
        tf.scalar_summary('min/' + name, tf.reduce_min(var))
        tf.histogram_summary(name, var)

def add_layer(input_tensor, input_dim, output_dim, neuron_fn, layer_name):
    with tf.name_scope(layer_name):
        with tf.name_scope("weights"):
            weights = tf.Variable(tf.truncated_normal([input_dim, output_dim], stddev=0.1))
        with tf.name_scope("biases"):
            biases = tf.Variable(tf.constant(0.1, shape=[output_dim]))
        with tf.name_scope('activations'):
            with tf.name_scope('weighted_inputs'):
                weighted_inputs = tf.matmul(input_tensor, weights) + biases
                tf.histogram_summary(layer_name + '/weighted_inputs', weighted_inputs)
            output = neuron_fn(weighted_inputs)
    return output, weights, biases

def make_ff_network(nodes, input_activation, hidden_activation_fn=tf.nn.sigmoid, output_activation_fn=tf.nn.softmax):
    layer_activations = [input_activation]
    layer_weights = []
    layer_biases = []
    n_layers = len(nodes)
    for l in range(1, n_layers):
        a, w, b = add_layer(layer_activations[l - 1], nodes[l - 1], nodes[l],
                            output_activation_fn if l == n_layers - 1 else hidden_activation_fn,
                            'output_layer' if l == n_layers - 1 else 'hidden_layer' + (
                                '_{}'.format(l) if n_layers > 3 else ''))
        layer_activations += [a]
        layer_weights += [w]
        layer_biases += [b]
    with tf.name_scope('output'):
        net_activation = tf.identity(layer_activations[-1], name='network_activation')
    return net_activation, layer_weights, layer_biases

# Inputs and outputs
with tf.name_scope('data'):
    x = tf.placeholder(tf.float32, shape=[None, FLAGS.network_nodes[0]], name='inputs')
    y_ = tf.placeholder(tf.float32, shape=[None, FLAGS.network_nodes[-1]], name='correct_outputs')

# Network structure
y, layer_weights, layer_biases = make_ff_network(FLAGS.network_nodes, x, output_activation_fn=tf.identity)

# Metrics and operations
with tf.name_scope('accuracy'):
    with tf.name_scope('loss'):
        loss = tf.reduce_mean(tf.square(y - y_))
    # NONE OF THESE WORK:
    #w1 = tf.slice(layer_weights[0], [0], [1])[0]
    #tf.scalar_summary('w1', w1)
    #w1 = layer_weights[0][1][0]
    #tf.scalar_summary('w1', w1)
    tf.scalar_summary('loss', loss)

train_step = tf.train.GradientDescentOptimizer(FLAGS.learning_rate).minimize(loss)

# Logging
train_writer = tf.train.SummaryWriter(FLAGS.train_dir, tf.get_default_graph())
test_writer = tf.train.SummaryWriter(FLAGS.test_dir)
merged = tf.merge_all_summaries()

W = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
train_x = np.random.rand(100000, FLAGS.network_nodes[0])
train_y = np.array([np.dot(W, train_x.T)+ 6.0]).T
test_x = np.random.rand(1000, FLAGS.network_nodes[0])
test_y = np.array([np.dot(W, test_x.T)+ 6.0]).T

with tf.Session() as sess:
    sess.run(tf.initialize_all_variables())
    for ep in range(FLAGS.epochs):
        sess.run(train_step, feed_dict={x: train_x, y_: train_y})
        summary = sess.run(merged, feed_dict={x: test_x, y_: test_y})
        test_writer.add_summary(summary, ep+1)
    # THESE WORK
    print('w1 = {}'.format(sess.run(layer_weights)[0][0][0]))
    print('w2 = {}'.format(sess.run(layer_weights)[0][1][0]))
    print('w3 = {}'.format(sess.run(layer_weights)[0][2][0]))
    print('w4 = {}'.format(sess.run(layer_weights)[0][3][0]))
    print('w5 = {}'.format(sess.run(layer_weights)[0][4][0]))
    print(' b = {}'.format(sess.run(layer_biases)[0][0]))

1 Answer

Stack Overflow user

Accepted answer

Answered 2016-04-24 21:14:02

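There are several errors in the code.

The main problem is that you are passing a Python list of tensors to scalar_summary. The error, which says that the tensor you passed does not have rank 1, comes from the slice operation.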
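The rank complaint can be reproduced without TensorFlow. The sketch below is a plain-Python stand-in, not TensorFlow code: `shape_of` and `slice_like` are hypothetical helpers that mimic the rule that a slice needs one `begin` entry and one `size` entry per dimension. It assumes this rule is what triggers the error in the question, where a single-entry `begin` of `[0]` is applied to the (5, 1) weight matrix.

```python
def shape_of(nested):
    """Return the shape of a rectangular nested list, e.g. [[1], [2]] -> (2, 1)."""
    shape = []
    while isinstance(nested, list):
        shape.append(len(nested))
        nested = nested[0]
    return tuple(shape)


def slice_like(nested, begin, size):
    """Hypothetical stand-in for a tensor slice: one begin/size entry per dimension."""
    shape = shape_of(nested)
    if len(begin) != len(shape) or len(size) != len(shape):
        raise ValueError(
            "Shape {} must have rank {}".format(shape, len(begin)))

    def cut(block, dim):
        # Past the last dimension we have reached a single element.
        if dim == len(shape):
            return block
        rows = block[begin[dim]:begin[dim] + size[dim]]
        return [cut(row, dim + 1) for row in rows]

    return cut(nested, 0)


# A 5x1 weight matrix like layer_weights[0] in the question (values made up).
weights = [[0.1], [0.2], [0.3], [0.4], [0.5]]

try:
    slice_like(weights, [0], [1])      # one index for a rank-2 value
except ValueError as err:
    message = str(err)
    print(message)

one_by_one = slice_like(weights, [1, 0], [1, 1])  # still rank 2
w2 = one_by_one[0][0]                             # a plain scalar
print(w2)
```

In TensorFlow terms this suggests supplying both indices, for example `tf.slice(layer_weights[0], [1, 0], [1, 1])` followed by a `tf.reshape(..., [])` to get a rank-0 tensor. Treat that as an untested suggestion for the 2016-era API rather than a confirmed fix.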

您希望传递权重,并记录每一层的权重。这样做的一种方法是记录每一层的每个权重:

for weight in layer_weights:

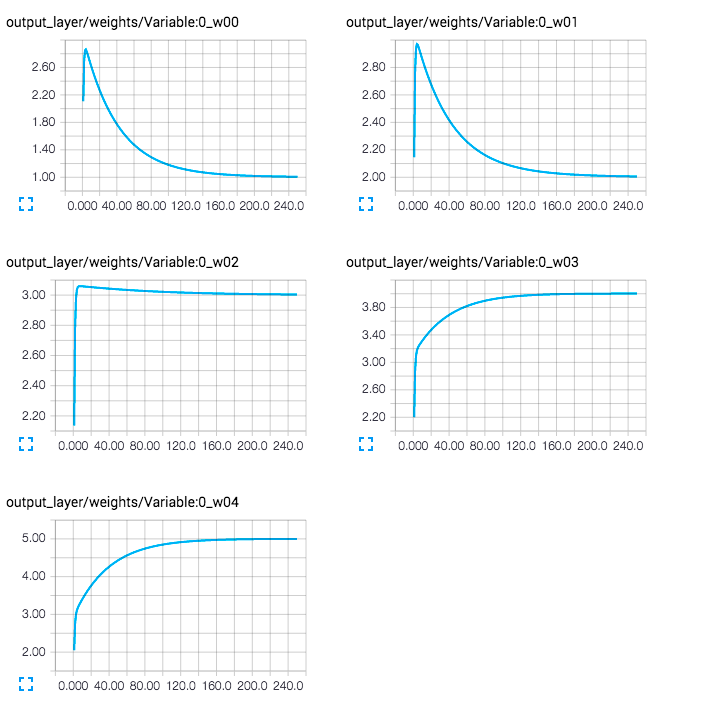

tf.scalar_summary([ ['%s_w%d%d' % (weight.name, i,j) for i in xrange(len(layer_weights))] for j in xrange(5) ], weight)这将在拉伸板tensorboard --logdir=./_tmp/test中生成这些漂亮的图形。
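The nested list of tag names in the loop above has to line up with the shape of each weight matrix, one name per element. As a sketch in plain Python 3 (where `xrange` becomes `range`), the hypothetical helper `weight_tags` below builds such a grid for a 5x1 layer, keeping the answer's index order. The variable name `hidden_layer/weights/Variable:0` is only an assumed example of what `weight.name` might return.

```python
def weight_tags(var_name, n_rows, n_cols):
    """Build one summary tag per weight, laid out like the weight matrix.

    Mirrors the answer's naming: the inner index i runs over columns,
    the outer index j over rows, and the tag ends in 'w<i><j>'.
    """
    return [
        ['%s_w%d%d' % (var_name, i, j) for i in range(n_cols)]
        for j in range(n_rows)
    ]


# Assumed variable name; a real one would come from weight.name.
tags = weight_tags('hidden_layer/weights/Variable:0', 5, 1)

for row in tags:
    print(row[0])
```

Note that the answer uses `len(layer_weights)` for the inner range, which equals the column count here only because the network has a single layer with one output. In TensorFlow 1.0 and later, `tf.scalar_summary` was replaced by `tf.summary.scalar`, which takes a single name and a scalar tensor, so each weight element would need its own call.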

Original page content provided by Stack Overflow.

Original link:

https://stackoverflow.com/questions/36824659
