AVFoundation - Add a blurred background to a video

Asked 2017-05-22 06:08:48

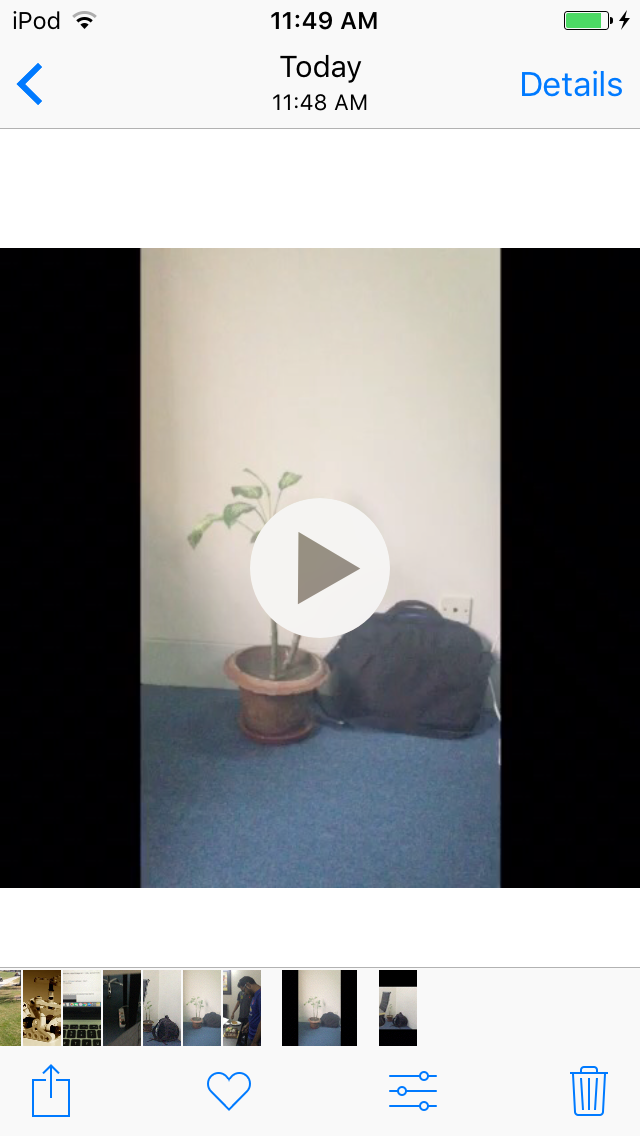

I am developing a video editing app in Swift. In my case, the output video looks like this:

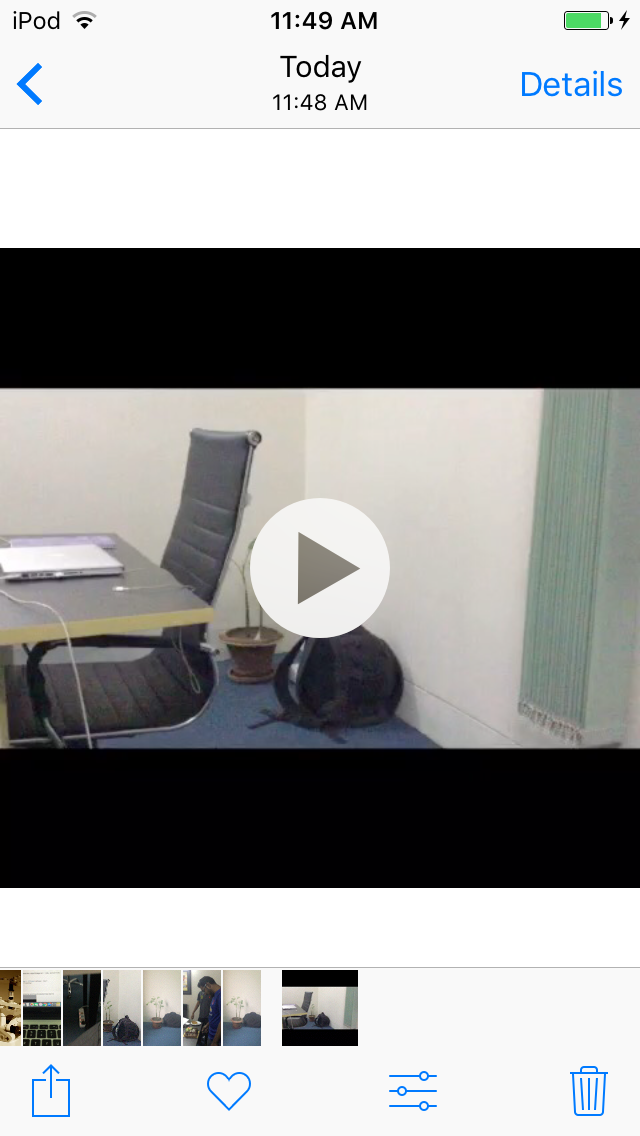

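I am trying to fill the black areas with a blur effect, like this:

I searched but did not find any working solution. Any help would be greatly appreciated.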

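4 Answers

Stack Overflow user

Accepted answer

Posted on 2018-08-31 05:42:11

Swift 4 - Add a blurred background to a video

I may be late with this answer, but I still have not found any solution for this requirement, so I am sharing my work: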

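Features

- Single video support

- Multiple video merge support

- Supports any canvas in any aspect ratio

- Saves the final video to the camera roll

- Handles all video orientations

Steps to add a blurred background to a video

- Merge all videos without audio. (a) This needs the size of the rendering area. (b) It also needs the scale and position of each video within that area, computed for aspectFill.

- Add a blur effect to the merged video.

- Place the videos one after another at the center of the blurred video.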
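Step (b) relies on helpers (`scaleAndPositionInAspectFillMode` and `scaleAndPositionInAspectFitMode`) that are not shown in this answer. As a rough illustration of the underlying arithmetic only, here is a minimal sketch. It assumes an unrotated video and a uniform scale factor, and the names `Placement`, `ContentMode`, and `placement(of:in:mode:)` are hypothetical, not taken from the sample code.

```swift
import Foundation

/// Hypothetical result type: a uniform scale factor plus the offset
/// of the scaled video's origin inside the render area.
struct Placement {
    let scale: CGFloat
    let position: CGPoint
}

enum ContentMode { case aspectFit, aspectFill }

/// Computes how to scale and center a video of `videoSize` inside `area`.
/// Assumption: the video is not rotated (no preferredTransform handling).
func placement(of videoSize: CGSize, in area: CGSize, mode: ContentMode) -> Placement {
    let widthRatio = area.width / videoSize.width
    let heightRatio = area.height / videoSize.height
    // aspectFit keeps the whole video visible; aspectFill covers the whole area.
    let scale = mode == .aspectFit ? min(widthRatio, heightRatio)
                                   : max(widthRatio, heightRatio)
    let scaledSize = CGSize(width: videoSize.width * scale,
                            height: videoSize.height * scale)
    // Center the scaled video. With aspectFill the offset can be negative,
    // which means the overflowing part is cropped by the render area.
    let position = CGPoint(x: (area.width - scaledSize.width) / 2,
                           y: (area.height - scaledSize.height) / 2)
    return Placement(scale: scale, position: position)
}

// A 1920x1080 video on a square 1080x1080 canvas.
let canvas = CGSize(width: 1080, height: 1080)
let video = CGSize(width: 1920, height: 1080)
let fit = placement(of: video, in: canvas, mode: .aspectFit)   // scale 0.5625, position (0, 236.25)
let fill = placement(of: video, in: canvas, mode: .aspectFill) // scale 1, position (-420, 0)
print(fit.scale, fit.position.x, fit.position.y)
print(fill.scale, fill.position.x, fill.position.y)
```

The aspectFill result is what the blurred background layer needs (no black bars), while the aspectFit result is what the foreground video needs (nothing cropped). The code in this answer additionally combines these values with a rotation that depends on the track's orientation.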

Merge videos

func mergeVideos(_ videos: Array<AVURLAsset>, inArea area:CGSize, completion: @escaping (_ error: Error?, _ url:URL?) -> Swift.Void) {

// Create the AVMutableComposition object. It will hold our multiple AVMutableCompositionTrack objects.

let mixComposition = AVMutableComposition()

var instructionLayers : Array<AVMutableVideoCompositionLayerInstruction> = []

for asset in videos {

// Here we are creating the AVMutableCompositionTrack. See how we are adding a new track to our AVMutableComposition.

let track = mixComposition.addMutableTrack(withMediaType: AVMediaType.video, preferredTrackID: kCMPersistentTrackID_Invalid)

// Insert the asset's full time range into the newly created track at the current end of the composition (kCMTimeZero for the first video), so the videos play one after another.

if let videoTrack = asset.tracks(withMediaType: AVMediaType.video).first {

/// Hide time for this video's layer

let opacityStartTime: CMTime = CMTimeMakeWithSeconds(0, asset.duration.timescale)

let opacityEndTime: CMTime = CMTimeAdd(mixComposition.duration, asset.duration)

let hideAfter: CMTime = CMTimeAdd(opacityStartTime, opacityEndTime)

let timeRange = CMTimeRangeMake(kCMTimeZero, asset.duration)

try? track?.insertTimeRange(timeRange, of: videoTrack, at: mixComposition.duration)

/// Layer instruction

let layerInstruction = AVMutableVideoCompositionLayerInstruction(assetTrack: track!)

layerInstruction.setOpacity(0.0, at: hideAfter)

/// Add logic for aspectFill in the given area

let properties = scaleAndPositionInAspectFillMode(forTrack: videoTrack, inArea: area)

/// Checking for orientation

let videoOrientation: UIImageOrientation = self.getVideoOrientation(forTrack: videoTrack)

let assetSize = self.assetSize(forTrack: videoTrack)

if (videoOrientation == .down) {

/// Rotate

let defaultTransfrom = asset.preferredTransform

let rotateTransform = CGAffineTransform(rotationAngle: -CGFloat(Double.pi/2.0))

// Scale

let scaleTransform = CGAffineTransform(scaleX: properties.scale.width, y: properties.scale.height)

// Translate

var ytranslation: CGFloat = assetSize.height

var xtranslation: CGFloat = 0

if properties.position.y == 0 {

xtranslation = -(assetSize.width - ((size.width/size.height) * assetSize.height))/2.0

}

else {

ytranslation = assetSize.height - (assetSize.height - ((size.height/size.width) * assetSize.width))/2.0

}

let translationTransform = CGAffineTransform(translationX: xtranslation, y: ytranslation)

// Final transformation - Concatenation

let finalTransform = defaultTransfrom.concatenating(rotateTransform).concatenating(translationTransform).concatenating(scaleTransform)

layerInstruction.setTransform(finalTransform, at: kCMTimeZero)

}

else if (videoOrientation == .left) {

/// Rotate

let defaultTransfrom = asset.preferredTransform

let rotateTransform = CGAffineTransform(rotationAngle: -CGFloat(Double.pi))

// Scale

let scaleTransform = CGAffineTransform(scaleX: properties.scale.width, y: properties.scale.height)

// Translate

var ytranslation: CGFloat = assetSize.height

var xtranslation: CGFloat = assetSize.width

if properties.position.y == 0 {

xtranslation = assetSize.width - (assetSize.width - ((size.width/size.height) * assetSize.height))/2.0

}

else {

ytranslation = assetSize.height - (assetSize.height - ((size.height/size.width) * assetSize.width))/2.0

}

let translationTransform = CGAffineTransform(translationX: xtranslation, y: ytranslation)

// Final transformation - Concatenation

let finalTransform = defaultTransfrom.concatenating(rotateTransform).concatenating(translationTransform).concatenating(scaleTransform)

layerInstruction.setTransform(finalTransform, at: kCMTimeZero)

}

else if (videoOrientation == .right) {

/// No need to rotate

// Scale

let scaleTransform = CGAffineTransform(scaleX: properties.scale.width, y: properties.scale.height)

// Translate

let translationTransform = CGAffineTransform(translationX: properties.position.x, y: properties.position.y)

let finalTransform = scaleTransform.concatenating(translationTransform)

layerInstruction.setTransform(finalTransform, at: kCMTimeZero)

}

else {

/// Rotate

let defaultTransfrom = asset.preferredTransform

let rotateTransform = CGAffineTransform(rotationAngle: CGFloat(Double.pi/2.0))

// Scale

let scaleTransform = CGAffineTransform(scaleX: properties.scale.width, y: properties.scale.height)

// Translate

var ytranslation: CGFloat = 0

var xtranslation: CGFloat = assetSize.width

if properties.position.y == 0 {

xtranslation = assetSize.width - (assetSize.width - ((size.width/size.height) * assetSize.height))/2.0

}

else {

ytranslation = -(assetSize.height - ((size.height/size.width) * assetSize.width))/2.0

}

let translationTransform = CGAffineTransform(translationX: xtranslation, y: ytranslation)

// Final transformation - Concatenation

let finalTransform = defaultTransfrom.concatenating(rotateTransform).concatenating(translationTransform).concatenating(scaleTransform)

layerInstruction.setTransform(finalTransform, at: kCMTimeZero)

}

instructionLayers.append(layerInstruction)

}

}

let mainInstruction = AVMutableVideoCompositionInstruction()

mainInstruction.timeRange = CMTimeRangeMake(kCMTimeZero, mixComposition.duration)

mainInstruction.layerInstructions = instructionLayers

let mainCompositionInst = AVMutableVideoComposition()

mainCompositionInst.instructions = [mainInstruction]

mainCompositionInst.frameDuration = CMTimeMake(1, 30)

mainCompositionInst.renderSize = area

//let url = URL(fileURLWithPath: "/Users/enacteservices/Desktop/final_video.mov")

let url = self.videoOutputURL

try? FileManager.default.removeItem(at: url)

let exporter = AVAssetExportSession(asset: mixComposition, presetName: AVAssetExportPresetHighestQuality)

exporter?.outputURL = url

exporter?.outputFileType = .mp4

exporter?.videoComposition = mainCompositionInst

exporter?.shouldOptimizeForNetworkUse = true

exporter?.exportAsynchronously(completionHandler: {

if let anError = exporter?.error {

completion(anError, nil)

}

else if exporter?.status == AVAssetExportSessionStatus.completed {

completion(nil, url)

}

})

}

Add the blur effect

func addBlurEffect(toVideo asset:AVURLAsset, completion: @escaping (_ error: Error?, _ url:URL?) -> Swift.Void) {

let filter = CIFilter(name: "CIGaussianBlur")

let composition = AVVideoComposition(asset: asset, applyingCIFiltersWithHandler: { request in

// Clamp to avoid blurring transparent pixels at the image edges

let source: CIImage? = request.sourceImage.clampedToExtent()

filter?.setValue(source, forKey: kCIInputImageKey)

filter?.setValue(10.0, forKey: kCIInputRadiusKey)

// Crop the blurred output to the bounds of the original image

let output: CIImage? = filter?.outputImage?.cropped(to: request.sourceImage.extent)

// Provide the filter output to the composition

if let anOutput = output {

request.finish(with: anOutput, context: nil)

}

})

//let url = URL(fileURLWithPath: "/Users/enacteservices/Desktop/final_video.mov")

let url = self.videoOutputURL

// Remove any previous video at that path

try? FileManager.default.removeItem(at: url)

let exporter = AVAssetExportSession(asset: asset, presetName: AVAssetExportPresetHighestQuality)

// Assign the video composition that carries the processing instructions (in this case, the blur filter)

exporter?.videoComposition = composition

exporter?.outputFileType = .mp4

exporter?.outputURL = url

exporter?.exportAsynchronously(completionHandler: {

if let anError = exporter?.error {

completion(anError, nil)

}

else if exporter?.status == AVAssetExportSessionStatus.completed {

completion(nil, url)

}

})

}

Place the videos one after another at the center of the blurred video

This will give you the URL of the final video.

func addAllVideosAtCenterOfBlur(videos: Array<AVURLAsset>, blurVideo: AVURLAsset, completion: @escaping (_ error: Error?, _ url:URL?) -> Swift.Void) {

// Create the AVMutableComposition object. It will hold our multiple AVMutableCompositionTrack objects.

let mixComposition = AVMutableComposition()

var instructionLayers : Array<AVMutableVideoCompositionLayerInstruction> = []

// Add blur video first

let blurVideoTrack = mixComposition.addMutableTrack(withMediaType: AVMediaType.video, preferredTrackID: kCMPersistentTrackID_Invalid)

// Blur layer instruction

if let videoTrack = blurVideo.tracks(withMediaType: AVMediaType.video).first {

let timeRange = CMTimeRangeMake(kCMTimeZero, blurVideo.duration)

try? blurVideoTrack?.insertTimeRange(timeRange, of: videoTrack, at: kCMTimeZero)

}

/// Add other videos at center of the blur video

var startAt = kCMTimeZero

for asset in videos {

/// Time Range of asset

let timeRange = CMTimeRangeMake(kCMTimeZero, asset.duration)

// Here we are creating the AVMutableCompositionTrack. See how we are adding a new track to our AVMutableComposition.

let track = mixComposition.addMutableTrack(withMediaType: AVMediaType.video, preferredTrackID: kCMPersistentTrackID_Invalid)

// Insert the asset's full time range into the newly created track at startAt (kCMTimeZero for the first video), so the videos play one after another.

if let videoTrack = asset.tracks(withMediaType: AVMediaType.video).first {

/// Hide time for this video's layer

let opacityStartTime: CMTime = CMTimeMakeWithSeconds(0, asset.duration.timescale)

let opacityEndTime: CMTime = CMTimeAdd(startAt, asset.duration)

let hideAfter: CMTime = CMTimeAdd(opacityStartTime, opacityEndTime)

/// Adding video track

try? track?.insertTimeRange(timeRange, of: videoTrack, at: startAt)

/// Layer instruction

let layerInstruction = AVMutableVideoCompositionLayerInstruction(assetTrack: track!)

layerInstruction.setOpacity(0.0, at: hideAfter)

/// Add logic for aspectFit in given area

let properties = scaleAndPositionInAspectFitMode(forTrack: videoTrack, inArea: size)

/// Checking for orientation

let videoOrientation: UIImageOrientation = self.getVideoOrientation(forTrack: videoTrack)

let assetSize = self.assetSize(forTrack: videoTrack)

if (videoOrientation == .down) {

/// Rotate

let defaultTransfrom = asset.preferredTransform

let rotateTransform = CGAffineTransform(rotationAngle: -CGFloat(Double.pi/2.0))

// Scale

let scaleTransform = CGAffineTransform(scaleX: properties.scale.width, y: properties.scale.height)

// Translate

var ytranslation: CGFloat = assetSize.height

var xtranslation: CGFloat = 0

if properties.position.y == 0 {

xtranslation = -(assetSize.width - ((size.width/size.height) * assetSize.height))/2.0

}

else {

ytranslation = assetSize.height - (assetSize.height - ((size.height/size.width) * assetSize.width))/2.0

}

let translationTransform = CGAffineTransform(translationX: xtranslation, y: ytranslation)

// Final transformation - Concatenation

let finalTransform = defaultTransfrom.concatenating(rotateTransform).concatenating(translationTransform).concatenating(scaleTransform)

layerInstruction.setTransform(finalTransform, at: kCMTimeZero)

}

else if (videoOrientation == .left) {

/// Rotate

let defaultTransfrom = asset.preferredTransform

let rotateTransform = CGAffineTransform(rotationAngle: -CGFloat(Double.pi))

// Scale

let scaleTransform = CGAffineTransform(scaleX: properties.scale.width, y: properties.scale.height)

// Translate

var ytranslation: CGFloat = assetSize.height

var xtranslation: CGFloat = assetSize.width

if properties.position.y == 0 {

xtranslation = assetSize.width - (assetSize.width - ((size.width/size.height) * assetSize.height))/2.0

}

else {

ytranslation = assetSize.height - (assetSize.height - ((size.height/size.width) * assetSize.width))/2.0

}

let translationTransform = CGAffineTransform(translationX: xtranslation, y: ytranslation)

// Final transformation - Concatenation

let finalTransform = defaultTransfrom.concatenating(rotateTransform).concatenating(translationTransform).concatenating(scaleTransform)

layerInstruction.setTransform(finalTransform, at: kCMTimeZero)

}

else if (videoOrientation == .right) {

/// No need to rotate

// Scale

let scaleTransform = CGAffineTransform(scaleX: properties.scale.width, y: properties.scale.height)

// Translate

let translationTransform = CGAffineTransform(translationX: properties.position.x, y: properties.position.y)

let finalTransform = scaleTransform.concatenating(translationTransform)

layerInstruction.setTransform(finalTransform, at: kCMTimeZero)

}

else {

/// Rotate

let defaultTransfrom = asset.preferredTransform

let rotateTransform = CGAffineTransform(rotationAngle: CGFloat(Double.pi/2.0))

// Scale

let scaleTransform = CGAffineTransform(scaleX: properties.scale.width, y: properties.scale.height)

// Translate

var ytranslation: CGFloat = 0

var xtranslation: CGFloat = assetSize.width

if properties.position.y == 0 {

xtranslation = assetSize.width - (assetSize.width - ((size.width/size.height) * assetSize.height))/2.0

}

else {

ytranslation = -(assetSize.height - ((size.height/size.width) * assetSize.width))/2.0

}

let translationTransform = CGAffineTransform(translationX: xtranslation, y: ytranslation)

// Final transformation - Concatenation

let finalTransform = defaultTransfrom.concatenating(rotateTransform).concatenating(translationTransform).concatenating(scaleTransform)

layerInstruction.setTransform(finalTransform, at: kCMTimeZero)

}

instructionLayers.append(layerInstruction)

}

/// Adding audio

if let audioTrack = asset.tracks(withMediaType: AVMediaType.audio).first {

let aTrack = mixComposition.addMutableTrack(withMediaType: AVMediaType.audio, preferredTrackID: kCMPersistentTrackID_Invalid)

try? aTrack?.insertTimeRange(timeRange, of: audioTrack, at: startAt)

}

// Increase the startAt time

startAt = CMTimeAdd(startAt, asset.duration)

}

/// Blur layer instruction

let layerInstruction = AVMutableVideoCompositionLayerInstruction(assetTrack: blurVideoTrack!)

instructionLayers.append(layerInstruction)

let mainInstruction = AVMutableVideoCompositionInstruction()

mainInstruction.timeRange = CMTimeRangeMake(kCMTimeZero, blurVideo.duration)

mainInstruction.layerInstructions = instructionLayers

let mainCompositionInst = AVMutableVideoComposition()

mainCompositionInst.instructions = [mainInstruction]

mainCompositionInst.frameDuration = CMTimeMake(1, 30)

mainCompositionInst.renderSize = size

//let url = URL(fileURLWithPath: "/Users/enacteservices/Desktop/final_video.mov")

let url = self.videoOutputURL

try? FileManager.default.removeItem(at: url)

let exporter = AVAssetExportSession(asset: mixComposition, presetName: AVAssetExportPresetHighestQuality)

exporter?.outputURL = url

exporter?.outputFileType = .mp4

exporter?.videoComposition = mainCompositionInst

exporter?.shouldOptimizeForNetworkUse = true

exporter?.exportAsynchronously(completionHandler: {

if let anError = exporter?.error {

completion(anError, nil)

}

else if exporter?.status == AVAssetExportSessionStatus.completed {

completion(nil, url)

}

})

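}

For the helper methods used in the code above, please download the attached sample code.

Also, if there is a shorter way to do this, I would be glad to hear about it, because I have to export the video three times to achieve this.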
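One thing to watch when chaining the three exports: each function above writes to `self.videoOutputURL` and first deletes whatever is at that URL. That property is one of the helpers not shown here. If it returned the same path on every call, the second and third passes would delete their own input file before exporting. A minimal sketch of one way around this, assuming each pass gets its own temporary file (`makeTemporaryVideoURL` and `removeIntermediates` are hypothetical names, not part of the sample code):

```swift
import Foundation

/// Hypothetical helper: returns a fresh file URL in the temporary directory,
/// so no export pass overwrites or deletes a file another pass still needs.
func makeTemporaryVideoURL(prefix: String, fileExtension: String = "mp4") -> URL {
    let name = "\(prefix)-\(UUID().uuidString)"
    return FileManager.default.temporaryDirectory
        .appendingPathComponent(name)
        .appendingPathExtension(fileExtension)
}

/// Hypothetical helper: deletes intermediate files once the final export is done.
/// Errors are ignored because a missing file is not a problem here.
func removeIntermediates(_ urls: [URL]) {
    for url in urls {
        try? FileManager.default.removeItem(at: url)
    }
}

// One distinct output URL per export pass.
let mergedURL = makeTemporaryVideoURL(prefix: "merged")
let blurredURL = makeTemporaryVideoURL(prefix: "blurred")
let finalURL = makeTemporaryVideoURL(prefix: "final")

// After the third export has completed, only finalURL needs to be kept.
removeIntermediates([mergedURL, blurredURL])
```

With this layout, the merge pass would export to `mergedURL`, the blur pass would read `mergedURL` and export to `blurredURL`, and the last pass would export to `finalURL`.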

Stack Overflow user

Posted on 2017-06-04 08:02:09

Stack Overflow user

Posted on 2018-06-28 07:32:56

You can add a blur to a video using AVVideoComposition. This has been tested.

-(void)applyBlurOnAsset:(AVAsset *)asset Completion:(void(^)(BOOL success, NSError* error, NSURL* videoUrl))completion{

CIFilter *filter = [CIFilter filterWithName:@"CIGaussianBlur"];

AVVideoComposition *composition = [AVVideoComposition videoCompositionWithAsset: asset

applyingCIFiltersWithHandler:^(AVAsynchronousCIImageFilteringRequest *request){

// Clamp to avoid blurring transparent pixels at the image edges

CIImage *source = [request.sourceImage imageByClampingToExtent];

[filter setValue:source forKey:kCIInputImageKey];

[filter setValue:[NSNumber numberWithDouble:10.0] forKey:kCIInputRadiusKey];

// Crop the blurred output to the bounds of the original image

CIImage *output = [filter.outputImage imageByCroppingToRect:request.sourceImage.extent];

// Provide the filter output to the composition

[request finishWithImage:output context:nil];

}];

NSURL *outputUrl = [[NSURL alloc] initWithString:@"Your Output path"];

// Remove any previous video at that path

[[NSFileManager defaultManager] removeItemAtURL:outputUrl error:nil];

AVAssetExportSession *exporter = [[AVAssetExportSession alloc] initWithAsset:asset presetName:AVAssetExportPreset960x540] ;

// Assign the video composition that carries the processing instructions (in this case, the blur filter)

exporter.videoComposition = composition;

exporter.outputFileType = AVFileTypeMPEG4;

if (outputUrl){

exporter.outputURL = outputUrl;

[exporter exportAsynchronouslyWithCompletionHandler:^{

switch ([exporter status]) {

case AVAssetExportSessionStatusFailed:

NSLog(@"crop Export failed: %@", [[exporter error] localizedDescription]);

if (completion){

dispatch_async(dispatch_get_main_queue(), ^{

completion(NO,[exporter error],nil);

});

return;

}

break;

case AVAssetExportSessionStatusCancelled:

NSLog(@"crop Export canceled");

if (completion){

dispatch_async(dispatch_get_main_queue(), ^{

completion(NO,nil,nil);

});

return;

}

break;

default:

break;

}

if (completion){

dispatch_async(dispatch_get_main_queue(), ^{

completion(YES,nil,outputUrl);

});

}

}];

}}

Original page content provided by Stack Overflow.

Original link:

https://stackoverflow.com/questions/44105717
