Sql按最新重复字段分组

Sql按最新重复字段分组

提问于 2018-02-28 10:00:24

我甚至不知道这个问题的好标题是什么。

但我有张桌子

create table trans

(

[transid] INT IDENTITY (1, 1) NOT NULL,

[customerid] int not null,

[points] decimal(10,2) not null,

[date] datetime not null

)和记录:

--cus1

INSERT INTO trans ( customerid , points , date )

VALUES ( 1, 10, '2016-01-01' ) , ( 1, 20, '2017-02-01' ) , ( 1, 22, '2017-03-01' ) ,

( 1, 24, '2018-02-01' ) , ( 1, 50, '2018-02-25' ) , ( 2, 44, '2016-02-01' ) ,

( 2, 20, '2017-02-01' ) , ( 2, 32, '2017-03-01' ) , ( 2, 15, '2018-02-01' ) ,

( 2, 10, '2018-02-25' ) , ( 3, 10, '2018-02-25' ) , ( 4, 44, '2015-02-01' ) ,

( 4, 20, '2015-03-01' ) , ( 4, 32, '2016-04-01' ) , ( 4, 15, '2016-05-01' ) ,

( 4, 10, '2017-02-25' ) , ( 4, 10, '2018-02-27' ) ,( 4, 20, '2018-02-28' ) ,

( 5, 44, '2015-02-01' ) , ( 5, 20, '2015-03-01' ) , ( 5, 32, '2016-04-01' ) ,

( 5, 15, '2016-05-01' ) ,( 5, 10, '2017-02-25' );

-- selecting the data

select * from trans生产:

transid customerid points date

----------- ----------- --------------------------------------- -----------------------

1 1 10.00 2016-01-01 00:00:00.000

2 1 20.00 2017-02-01 00:00:00.000

3 1 22.00 2017-03-01 00:00:00.000

4 1 24.00 2018-02-01 00:00:00.000

5 1 50.00 2018-02-25 00:00:00.000

6 2 44.00 2016-02-01 00:00:00.000

7 2 20.00 2017-02-01 00:00:00.000

8 2 32.00 2017-03-01 00:00:00.000

9 2 15.00 2018-02-01 00:00:00.000

10 2 10.00 2018-02-25 00:00:00.000

11 3 10.00 2018-02-25 00:00:00.000

12 4 44.00 2015-02-01 00:00:00.000

13 4 20.00 2015-03-01 00:00:00.000

14 4 32.00 2016-04-01 00:00:00.000

15 4 15.00 2016-05-01 00:00:00.000

16 4 10.00 2017-02-25 00:00:00.000

17 4 10.00 2018-02-27 00:00:00.000

18 4 20.00 2018-02-28 00:00:00.000

19 5 44.00 2015-02-01 00:00:00.000

20 5 20.00 2015-03-01 00:00:00.000

21 5 32.00 2016-04-01 00:00:00.000

22 5 15.00 2016-05-01 00:00:00.000

23 5 10.00 2017-02-25 00:00:00.000我正试着把所有的顾客分类,并总结他们的观点。但这是一个陷阱,如果反式在1年内不活跃(下一次转换是1年及以上),那么积分将过期。

对于这种情况,每个客户的积分应该是:

Customer1 20+22+24+50

Customer2 20+32+15+10

Customer3 10

Customer4 10+20

Customer5 0到目前为止,我的情况如下:

select

t1.transid as transid1,

t1.customerid as customerid1,

t1.date as date1,

t1.points as points1,

t1.rank1 as rank1,

t2.transid as transid2,

t2.customerid as customerid2,

t2.points as points2,

isnull(t2.date,getUTCDate()) as date2,

isnull(t2.rank2,t1.rank1+1) as rank2,

cast(case when(t1.date > dateadd(year,-1,isnull(t2.date,getUTCDate()))) Then 0 ELSE 1 END as bit) as ShouldExpire

from

(

select transid,CustomerID,Date,points,

RANK() OVER(PARTITION BY CustomerID ORDER BY date ASC) AS RANK1

from trans

)t1

left join

(

select transid,CustomerID,Date,points,

RANK() OVER(PARTITION BY CustomerID ORDER BY date ASC) AS RANK2

from trans

)t2 on t1.RANK1=t2.RANK2-1

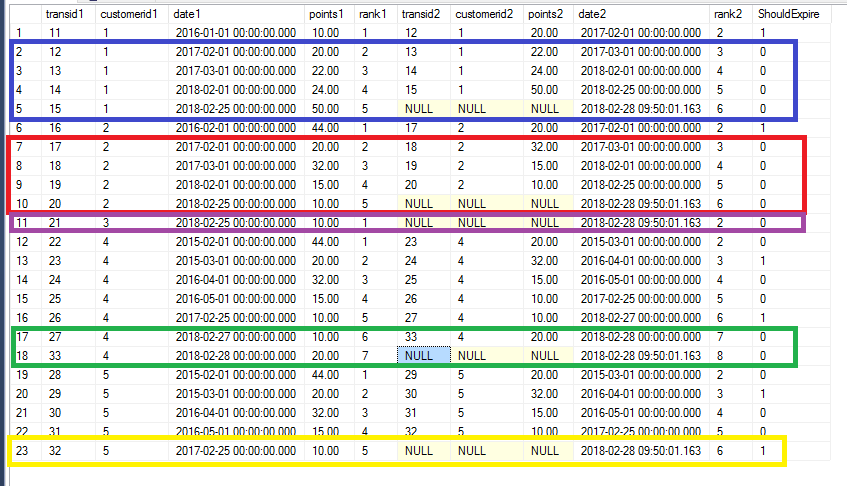

and t1.customerid=t2.customerid这给

从上表中,如何检查ShouldExpire字段的最大值(Rank1)是否为客户,如果为1,则总分为0,否则,所有连续的0之和,直到没有更多的记录或满足1?

还是有更好的方法来解决这个问题?

回答 4

Stack Overflow用户

回答已采纳

发布于 2018-02-28 13:19:34

下面的查询使用LEAD获取下一条记录的日期--使用相同的CustomerID片:

;WITH CTE AS (

SELECT transid, CustomerID, [Date], points,

LEAD([Date]) OVER (PARTITION BY CustomerID

ORDER BY date ASC) AS nextDate,

CASE

WHEN [date] > DATEADD(YEAR,

-1,

-- same LEAD() here as above

ISNULL(LEAD([Date]) OVER (PARTITION BY CustomerID

ORDER BY date ASC),

getUTCDate()))

THEN 0

ELSE 1

END AS ShouldExpire

FROM trans

)

SELECT transid, CustomerID, [Date], points, nextDate, ShouldExpire

FROM CTE

ORDER BY CustomerID, [Date]输出:

transid CustomerID Date points nextDate ShouldExpire

-------------------------------------------------------------

1 1 2016-01-01 10.00 2017-02-01 1 <-- last exp. for 1

2 1 2017-02-01 20.00 2017-03-01 0

3 1 2017-03-01 22.00 2018-02-01 0

4 1 2018-02-01 24.00 2018-02-25 0

5 1 2018-02-25 50.00 NULL 0

6 2 2016-02-01 44.00 2017-02-01 1 <-- last exp. for 2

7 2 2017-02-01 20.00 2017-03-01 0

8 2 2017-03-01 32.00 2018-02-01 0

9 2 2018-02-01 15.00 2018-02-25 0

10 2 2018-02-25 10.00 NULL 0

11 3 2018-02-25 10.00 NULL 0 <-- no exp. for 3

12 4 2015-02-01 44.00 2015-03-01 0

13 4 2015-03-01 20.00 2016-04-01 1

14 4 2016-04-01 32.00 2016-05-01 0

15 4 2016-05-01 15.00 2017-02-25 0

16 4 2017-02-25 10.00 2018-02-27 1 <-- last exp. for 4

17 4 2018-02-27 10.00 2018-02-28 0

18 4 2018-02-28 20.00 NULL 0

19 5 2015-02-01 44.00 2015-03-01 0

20 5 2015-03-01 20.00 2016-04-01 1

21 5 2016-04-01 32.00 2016-05-01 0

22 5 2016-05-01 15.00 2017-02-25 0

23 5 2017-02-25 10.00 NULL 1 <-- last exp. for 5现在,您似乎想要计算上一次过期后的点数之和。

使用上述CTE作为基础,您可以通过以下方法获得所需的结果:

;WITH CTE AS (

... above query here ...

)

SELECT CustomerID,

SUM(CASE WHEN rnk = 0 THEN points ELSE 0 END) AS sumOfPoints

FROM (

SELECT transid, CustomerID, [Date], points, nextDate, ShouldExpire,

SUM(ShouldExpire) OVER (PARTITION BY CustomerID ORDER BY [Date] DESC) AS rnk

FROM CTE

) AS t

GROUP BY CustomerID输出:

CustomerID sumOfPoints

-----------------------

1 116.00

2 77.00

3 10.00

4 30.00

5 0.00Demo here

Stack Overflow用户

发布于 2018-02-28 10:27:57

这里棘手的部分是在所有点过期时转储,然后重新开始累积它们。我以为,如果只有一笔交易,直到有一笔新的交易,我们才能到期,即使第一笔交易是一年前的事了?

对于客户#5,我也得到了一个不同的答案,因为他们似乎有一个尚未过期的“交易链”?

以下是我的查询:

WITH ordered AS (

SELECT

*,

ROW_NUMBER() OVER (PARTITION BY customerid ORDER BY [date]) AS order_id

FROM

trans),

max_transid AS (

SELECT

customerid,

MAX(transid) AS max_transid

FROM

trans

GROUP BY

customerid),

not_expired AS (

SELECT

t1.customerid,

t1.points,

t1.[date] AS t1_date,

CASE

WHEN m.customerid IS NOT NULL THEN GETDATE()

ELSE t2.[date]

END AS t2_date

FROM

ordered t1

LEFT JOIN ordered t2 ON t2.customerid = t1.customerid AND t1.transid != t2.transid AND t2.order_id = t1.order_id + 1 AND t1.[date] > DATEADD(YEAR, -1, t2.[date])

LEFT JOIN max_transid m ON m.customerid = t1.customerid AND m.max_transid = t1.transid

),

max_not_expired AS (

SELECT

customerid,

MAX(t1_date) AS max_expired

FROM

not_expired

WHERE

t2_date IS NULL

GROUP BY

customerid)

SELECT

n.customerid,

SUM(n.points) AS points

FROM

not_expired n

LEFT JOIN max_not_expired m ON m.customerid = n.customerid

WHERE

ISNULL(m.max_expired, '19000101') < n.t1_date

GROUP BY

n.customerid;可以将其重构为更简单,但我想说明得出最终答案的步骤:

customerid points

1 116.00

2 77.00

3 10.00

4 30.00

5 57.00Stack Overflow用户

发布于 2018-02-28 10:12:33

你能试试这个吗?

SELECT customerid,

Sum(t1.points)

FROM trans t1

WHERE NOT EXISTS (SELECT 1

FROM trans t2

WHERE Datediff(year, t1.date, t2.date) >= 1)

GROUP BY t1.customerid 希望能帮上忙!

页面原文内容由Stack Overflow提供。腾讯云小微IT领域专用引擎提供翻译支持

原文链接:

https://stackoverflow.com/questions/49026695

复制相关文章

相似问题