GCP语音到文本- Java不起作用

GCP语音到文本- Java不起作用

提问于 2019-08-13 09:50:04

我在Chrome中使用.webm记录了一个示例MediaRecorder文件。当我使用Google客户端获取视频的转录时,它会返回空的转录。下面是我的代码

SpeechSettings settings = null;

Path path = Paths.get("D:\\scrap\\gcp_test.webm");

byte[] content = null;

try {

content = Files.readAllBytes(path);

settings = SpeechSettings.newBuilder().setCredentialsProvider(credentialsProvider).build();

} catch (IOException e1) {

throw new IllegalStateException(e1);

}

try (SpeechClient speech = SpeechClient.create(settings)) {

// Builds the request for remote FLAC file

RecognitionConfig config = RecognitionConfig.newBuilder()

.setEncoding(AudioEncoding.LINEAR16)

.setLanguageCode("en-US")

.setUseEnhanced(true)

.setModel("video")

.setEnableAutomaticPunctuation(true)

.setSampleRateHertz(48000)

.build();

RecognitionAudio audio = RecognitionAudio.newBuilder().setContent(ByteString.copyFrom(content)).build();

// RecognitionAudio audio = RecognitionAudio.newBuilder().setUri("gs://xxxx/gcp_test.webm") .build();

// Use blocking call for getting audio transcript

RecognizeResponse response = speech.recognize(config, audio);

List<SpeechRecognitionResult> results = response.getResultsList();

for (SpeechRecognitionResult result : results) {

SpeechRecognitionAlternative alternative = result.getAlternativesList().get(0);

System.out.printf("Transcription: %s%n", alternative.getTranscript());

}

} catch (Exception e) {

e.printStackTrace();

System.err.println(e.getMessage());

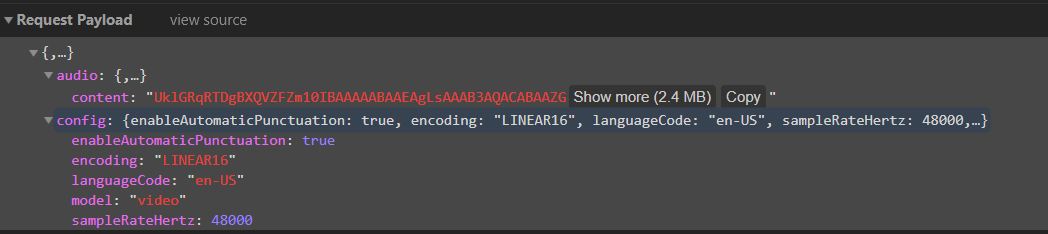

}如果,我使用相同的文件并访问演示部分中的https://cloud.google.com/speech-to-text/和upload文件。它似乎运作良好,并显示转录。我对这里出了什么问题一无所知。我验证了演示发送的请求,这里是什么样子?

我正在发送一组确切的参数,但这不起作用。试图上传文件到云存储,但这也带来了同样的结果(没有转录)。

回答 2

Stack Overflow用户

回答已采纳

发布于 2019-08-23 06:47:44

在经历了错误和测试(以及查看javascript示例)之后,我可以解决这个问题。音频的序列化版本应采用FLAC格式。我将视频文件(Webm)发送到Google。站点上的演示使用Javascript提取音频流,然后以base64格式发送数据以使其工作。

下面是我执行的获得输出的步骤。

- 使用FFMPEG从webm中提取音频流为FLAC格式。

ffmpeg -i sample.webm -vn -acodec flac sample.flac - 提取的文件应该使用存储云或作为ByteString发送。

- 在调用speech时设置适当的模型(对于英语语言

video模型有效,而对于法语command_and_search)。我对此没有任何合乎逻辑的理由。我在Google云站点上的演示尝试和错误之后才意识到这一点。

Stack Overflow用户

发布于 2020-04-18 07:03:43

我得到了flac编码文件的结果。

示例代码显示带有时间戳的单词,

public class SpeechToTextSample {

public static void main(String... args) throws Exception {

try (SpeechClient speechClient = SpeechClient.create()) {

String gcsUriFlac = "gs://yourfile.flac";

RecognitionConfig config =

RecognitionConfig.newBuilder()

.setEncoding(AudioEncoding.FLAC)

.setEnableWordTimeOffsets(true)

.setLanguageCode("en-US")

.build();

RecognitionAudio audio = RecognitionAudio.newBuilder().setUri(gcsUriFlac).build(); //for large files

OperationFuture<LongRunningRecognizeResponse, LongRunningRecognizeMetadata> response = speechClient.longRunningRecognizeAsync(config, audio);

while (!response.isDone()) {

System.out.println("Waiting for response...");

Thread.sleep(1000);

}

// Performs speech recognition on the audio file

List<SpeechRecognitionResult> results = response.get().getResultsList();

for (SpeechRecognitionResult result : results) {

SpeechRecognitionAlternative alternative = result.getAlternativesList().get(0);

System.out.printf("Transcription: %s%n", alternative.getTranscript());

for (WordInfo wordInfo : alternative.getWordsList()) {

System.out.println(wordInfo.getWord());

System.out.printf(

"\t%s.%s sec - %s.%s sec\n",

wordInfo.getStartTime().getSeconds(),

wordInfo.getStartTime().getNanos() / 100000000,

wordInfo.getEndTime().getSeconds(),

wordInfo.getEndTime().getNanos() / 100000000);

}

}

}

}

}GCP支持不同的语言,我用了"en-US“作为我的例子。请参考以下链接文档以了解语言列表。

页面原文内容由Stack Overflow提供。腾讯云小微IT领域专用引擎提供翻译支持

原文链接:

https://stackoverflow.com/questions/57475225

复制相关文章

相似问题