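This guide describes how to run the official LLaMA model demo with the HuggingFace TGI (Text Generation Inference) framework on TencentOS Server 3, launched through Docker.

Preparing the HuggingFace TGI Environment

Pull the HuggingFace TGI image and, in the same command, configure the mirror site used to download the model. This guide tests the LLaMA-7b model: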

docker run -it --name HFTGI_llama_7b --gpus all -e HF_ENDPOINT="https://hf-mirror.com" -e HF_HUB_OFFLINE=1 -p 8080:80 -v $PWD/data:/data ghcr.io/huggingface/text-generation-inference:latest --model-id openlm-research/open_llama_7b --trust-remote-code
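For scripted deployments, the flags in the command above can also be assembled as an argument list. The following is a minimal sketch and not part of the official demo: build_tgi_command and its parameter names are hypothetical, the flags are the ones in the command above plus the optional HF_TOKEN variable for gated models, and the data directory is passed as an absolute path because $PWD is only expanded by a shell.

```python
import os
import shlex

IMAGE = "ghcr.io/huggingface/text-generation-inference:latest"


def build_tgi_command(model_id, name, data_dir=None, host_port=8080,
                      endpoint="https://hf-mirror.com", hf_token=None):
    """Assemble the docker run argument list (hypothetical helper)."""
    if data_dir is None:
        # Equivalent of $PWD/data in the shell command.
        data_dir = os.path.join(os.getcwd(), "data")
    cmd = [
        "docker", "run", "-it", "--name", name, "--gpus", "all",
        "-e", f"HF_ENDPOINT={endpoint}",   # download from the mirror site
        "-e", "HF_HUB_OFFLINE=1",          # skip the Hugging Face API call
    ]
    if hf_token:
        # Only needed for gated models.
        cmd += ["-e", f"HF_TOKEN={hf_token}"]
    cmd += [
        "-p", f"{host_port}:80",
        "-v", f"{data_dir}:/data",         # keep downloaded weights on the host
        IMAGE,
        "--model-id", model_id,
        "--trust-remote-code",
    ]
    return cmd


command = build_tgi_command("openlm-research/open_llama_7b", "HFTGI_llama_7b")
print(shlex.join(command))
```

From an interactive terminal, passing the list to subprocess.run(command) would start the container without going through a shell.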

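Notes on this command:

1. Hugging Face models cannot be downloaded directly from mainland China, so the download source is switched to the HF-Mirror site. -e HF_ENDPOINT="https://hf-mirror.com" points model downloads at the mirror; the -e flag is required.
2. If the model is one of Hugging Face's Gated Models (which require a User access token), see the User access tokens section and pass the token with another -e flag. The options then become -e HF_ENDPOINT="https://hf-mirror.com" -e HF_TOKEN=example_token, where example_token is the token generated on the website.
3. Because the Hugging Face hub is unreachable from mainland China, the code would call the Hugging Face API, wait a long time for a response, and still fail to connect. To avoid the wait, set the environment variable HF_HUB_OFFLINE=1, which is checked at line 212 of router/src/main.rs: if std::env::var("HF_HUB_OFFLINE") == Ok("1".to_string()). With it set, the router skips the Hugging Face API call and runs inference offline.
4. After pulling the image, the command downloads the model. To avoid downloading the model again whenever the container is deleted, -v $PWD/data:/data mounts the container's /data directory, where the model is stored, to $PWD/data on the host.
5. The image is pulled from ghcr.io/huggingface/text-generation-inference:latest. There are almost no alternative mirrors for ghcr.io in mainland China, so the download may take a while. Errors such as docker: unexpected EOF are caused by a slow download; retry until it succeeds. If the download fails too many times, replacing ghcr.io with ghcr.chenby.cn can speed it up (for reference only; this mirror is not guaranteed to remain available).
6. The --model-id argument is openlm-research/open_llama_7b and must match the model name on the Hugging Face site. This guide tests LLaMA-7b. Note that, in our tests, the LLaMA-3b-V2 model cannot currently be run with the HuggingFace TGI inference framework and fails with RuntimeError: Unsupported head size: 100.

Once both the image and the model are ready, output similar to the following appears (for reference):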

...
INFO hf_hub: Token file not found "/root/.cache/huggingface/token"
INFO text_generation_launcher: Default `max_input_tokens` to 4095
INFO text_generation_launcher: Default `max_total_tokens` to 4096
INFO text_generation_launcher: Default `max_batch_prefill_tokens` to 4145
INFO text_generation_launcher: Using default cuda graphs [1, 2, 4, 8, 16, 32]
INFO download: text_generation_launcher: Starting check and download process for openlm-research/open_llama_7b
INFO text_generation_launcher: Detected system cuda
INFO text_generation_launcher: Files are already present on the host. Skipping download.
INFO download: text_generation_launcher: Successfully downloaded weights for openlm-research/open_llama_7b
INFO shard-manager: text_generation_launcher: Starting shard rank=0
INFO text_generation_launcher: Detected system cuda
INFO text_generation_launcher: Server started at unix:///tmp/text-generation-server-0
INFO shard-manager: text_generation_launcher: Shard ready in 5.904282524s rank=0
INFO text_generation_launcher: Starting Webserver
WARN text_generation_router: router/src/main.rs:218: Offline mode active using cache defaults
INFO text_generation_router: router/src/main.rs:349: Using config Some(Llama)
WARN text_generation_router: router/src/main.rs:351: Could not find a fast tokenizer implementation for openlm-research/open_llama_7b
WARN text_generation_router: router/src/main.rs:352: Rust input length validation and truncation is disabled
WARN text_generation_router: router/src/main.rs:358: no pipeline tag found for model openlm-research/open_llama_7b
WARN text_generation_router: router/src/main.rs:376: Invalid hostname, defaulting to 0.0.0.0
INFO text_generation_router::server: router/src/server.rs:1577: Warming up model
INFO text_generation_launcher: Cuda Graphs are enabled for sizes [32, 16, 8, 4, 2, 1]
INFO text_generation_router::server: router/src/server.rs:1604: Using scheduler V3
INFO text_generation_router::server: router/src/server.rs:1656: Setting max batch total tokens to 58928
INFO text_generation_router::server: router/src/server.rs:1894: Connected

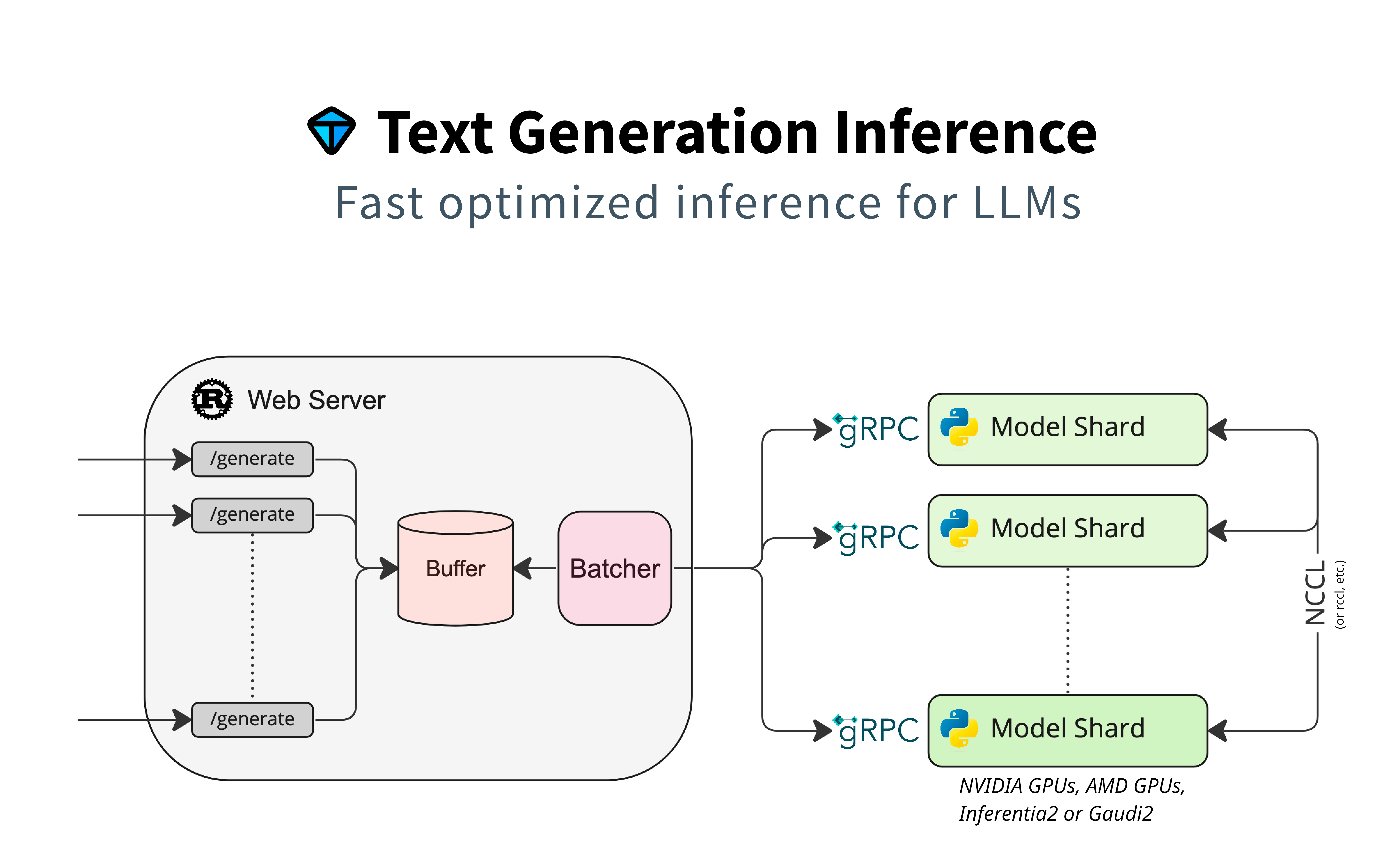

HuggingFace TGI is accessed through a Web Server (see the figure). When the last log line shows Connected, the Web Server has started and is accepting connections.
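Instead of watching the log for the Connected line, readiness can also be polled over HTTP. The sketch below assumes the TGI router's GET /health route and the host port 8080 mapped by the docker command; the function names, retry count, and delay are illustrative choices rather than values from this guide.

```python
import time
import urllib.request


def probe_health(base_url, timeout=5.0):
    """Return True if GET <base_url>/health answers with HTTP 200."""
    try:
        with urllib.request.urlopen(f"{base_url}/health", timeout=timeout) as resp:
            return resp.status == 200
    except OSError:
        # Covers connection refused, timeouts, and HTTP error responses.
        return False


def wait_until_ready(base_url, attempts=60, delay=5.0,
                     probe=probe_health, sleep=time.sleep):
    """Poll the server until the probe succeeds or the attempts run out."""
    for _ in range(attempts):
        if probe(base_url):
            return True
        sleep(delay)
    return False


# Example call once the container has been started:
# wait_until_ready("http://localhost:8080")
```

The probe and sleep parameters are injectable so that the polling loop can be exercised without a running server.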

Note:

If the following error appears:

torch.cuda.OutOfMemoryError: CUDA out of memory. Tried to allocate 64.00 MiB. GPU

the GPU does not have enough memory and the model cannot run.
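A rough lower bound on the GPU memory required can be estimated from the parameter count. The sketch below is only an approximation under stated assumptions: a nominal 7 billion parameters for a 7b model and 2 bytes per parameter (16-bit weights). It counts the weights alone; the KV cache, CUDA graphs, and activations need additional memory on top of this.

```python
def weight_memory_gib(num_params, bytes_per_param=2):
    """Approximate memory taken by the model weights alone, in GiB."""
    return num_params * bytes_per_param / 2**30


# Assumed nominal size of a 7b model: 7e9 parameters stored in 16 bits each.
print(f"weights only: {weight_memory_gib(7e9):.1f} GiB")
```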

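Restarting the Web Server After Exiting the Container

If you exit the container and it stops running, restarting the container also restarts the Web Server. Use the following commands:

# Restart the container
docker start HFTGI_llama_7b
# Follow the Web Server status in real time
docker logs -f HFTGI_llama_7b

After the restart, the Web Server process starts automatically, and requests can again be sent from the server itself to run the model.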

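Running the Model

Once the Web Server has connected in the current window, do not close that window or do anything else that might stop the Web Server. Checking GPU memory usage shows whether the Web Server is still running.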
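GPU memory usage can also be checked from a script. The sketch below is one possible approach, assuming nvidia-smi is on the PATH; it uses nvidia-smi's --query-gpu CSV output, and the function names and sample numbers are illustrative only.

```python
import subprocess


def parse_gpu_memory(csv_text):
    """Parse 'used, total' rows (MiB) from nvidia-smi CSV output."""
    rows = []
    for line in csv_text.strip().splitlines():
        used, total = (int(field.strip()) for field in line.split(","))
        rows.append((used, total))
    return rows


def query_gpu_memory():
    """Return one (used_mib, total_mib) tuple per GPU by calling nvidia-smi."""
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=memory.used,memory.total",
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    )
    return parse_gpu_memory(result.stdout)


# Made-up sample output for two GPUs, used here only to show the parsing step.
sample = "15000, 46068\n3, 46068\n"
for index, (used, total) in enumerate(parse_gpu_memory(sample)):
    print(f"GPU {index}: {used} / {total} MiB in use")
```

A GPU whose used memory drops back to a few MiB after having held the model suggests that the Web Server process has exited.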

Interacting from bash

Open another window and enter the following command:

curl -v http://localhost:8080/generate -X POST -d '{"inputs":"What is Deep Learning?","parameters":{"max_new_tokens":20}}' -H 'Content-Type: application/json'

The inputs field is the prompt passed to the model. The window returns output similar to the following (for reference):

Note: Unnecessary use of -X or --request, POST is already inferred.
*   Trying ::1...
* TCP_NODELAY set
* Connected to localhost (::1) port 8080 (#0)
> POST /generate HTTP/1.1
> Host: localhost:8080
> User-Agent: curl/7.61.1
> Accept: */*
> Content-Type: application/json
> Content-Length: 70
>
* upload completely sent off: 70 out of 70 bytes
< HTTP/1.1 200 OK
< content-type: application/json
< x-compute-type: 1-nvidia-l40
< x-compute-time: 0.426205521
< x-compute-characters: 22
< x-total-time: 426
< x-validation-time: 0
< x-queue-time: 0
< x-inference-time: 426
< x-time-per-token: 21
< x-prompt-tokens: 4075
< x-generated-tokens: 20
< content-length: 126
< vary: origin, access-control-request-method, access-control-request-headers
< access-control-allow-origin: *
< date: Thu, 18 Jul 2024 08:14:28 GMT
<
* Connection #0 to host localhost left intact
{"generated_text":"\nDeep Learning is a subfield of Machine Learning that uses artificial neural networks to solve problems."}

This shows that the model ran successfully under the HuggingFace TGI inference framework. The Web Server window also shows the following:

INFO generate{parameters=GenerateParameters { best_of: None, temperature: None, repetition_penalty: None, frequency_penalty: None, top_k: None, top_p: None, typical_p: None, do_sample: false, max_new_tokens: Some(20), return_full_text: None, stop: [], truncate: None, watermark: false, details: false, decoder_input_details: false, seed: None, top_n_tokens: None, grammar: None, adapter_id: None } total_time="426.205521ms" validation_time="37.499µs" queue_time="64.159µs" inference_time="426.104203ms" time_per_token="21.30521ms" seed="None"}: text_generation_router::server: router/src/server.rs:322: Success

This also confirms that HuggingFace TGI ran LLaMA-7b successfully and returned output normally.
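The same request can be sent from Python using only the standard library. The sketch below mirrors the curl call (same /generate path, JSON body, and Content-Type header); the function names and the 60-second timeout are assumptions made for illustration.

```python
import json
import urllib.request


def build_generate_request(prompt, max_new_tokens=20,
                           base_url="http://localhost:8080"):
    """Build the POST request that the curl command sends to /generate."""
    payload = {"inputs": prompt, "parameters": {"max_new_tokens": max_new_tokens}}
    return urllib.request.Request(
        f"{base_url}/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt, max_new_tokens=20, base_url="http://localhost:8080",
             timeout=60):
    """Send the request and return the generated_text field of the reply."""
    request = build_generate_request(prompt, max_new_tokens, base_url)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read())["generated_text"]


# Show the JSON body without contacting the server.
print(build_generate_request("What is Deep Learning?").data.decode("utf-8"))
# With the Web Server running: print(generate("What is Deep Learning?"))
```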

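Running with the TGI Client

Open another window and first install the package the TGI Client needs on the server, using pip:

# Switch pip to the Tsinghua mirror to speed up downloads
pip config set global.index-url https://pypi.tuna.tsinghua.edu.cn/simple
# Install the text-generation package
pip install text-generation

After installation, create a file named TGI_Client.py on the server with the following code: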

from text_generation import Client

# Generate
client = Client("http://localhost:8080")
output = client.generate("Why is the sky blue?", max_new_tokens=92).generated_text
print(f"Generate Output: {output}")

# Generate stream
text = ""
for response in client.generate_stream("Why is the sky blue?", max_new_tokens=92):
    if not response.token.special:
        text += response.token.text
print(f"Generate stream Output: {text}")

Run the file:

python TGI_Client.py

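The code first prints the output returned by client.generate, then the text assembled token by token from the stream returned by client.generate_stream. The output is as follows (for reference):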

Generate Output:
The sky is blue because of the scattering of light by the atmosphere.
The scattering of light by the atmosphere is caused by the presence of molecules in the atmosphere.
The molecules in the atmosphere are made up of atoms.
The atoms in the atmosphere are made up of protons, neutrons, and electrons.
The protons, neutrons, and electrons in the atmosphere are arranged in a way that allows them to scatter light.
Generate stream Output:
The sky is blue because of the scattering of light by the atmosphere.
The scattering of light by the atmosphere is caused by the presence of molecules in the atmosphere.
The molecules in the atmosphere are made up of atoms.
The atoms in the atmosphere are made up of protons, neutrons, and electrons.
The protons, neutrons, and electrons in the atmosphere are arranged in a way that allows them to scatter light.

Meanwhile, the Web Server window outputs:

INFO compat_generate{default_return_full_text=true compute_type=Extension(ComputeType("1-nvidia-l40"))}:generate_stream{parameters=GenerateParameters { best_of: None, temperature: None, repetition_penalty: None, frequency_penalty: None, top_k: None, top_p: None, typical_p: None, do_sample: false, max_new_tokens: Some(92), return_full_text: Some(false), stop: [], truncate: None, watermark: false, details: true, decoder_input_details: false, seed: None, top_n_tokens: None, grammar: None, adapter_id: None } total_time="1.942765325s" validation_time="22.049µs" queue_time="40.4µs" inference_time="1.942703056s" time_per_token="21.116337ms" seed="None"}: text_generation_router::server: router/src/server.rs:511: Success

This indicates that the run succeeded.
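The inference_time and time_per_token fields in the Web Server log lines are related by a simple division, which also gives the generation throughput. The sketch below redoes that arithmetic with the values logged for the curl request (20 tokens) and the streaming client request (92 tokens); the function names are illustrative.

```python
def ms_per_token(inference_seconds, generated_tokens):
    """Average time spent per generated token, in milliseconds."""
    return inference_seconds * 1000 / generated_tokens


def tokens_per_second(inference_seconds, generated_tokens):
    """Generation throughput over the whole request."""
    return generated_tokens / inference_seconds


# (inference_time in seconds, max_new_tokens) copied from the two log lines.
runs = {
    "generate": (0.426104203, 20),
    "generate_stream": (1.942703056, 92),
}
for name, (seconds, tokens) in runs.items():
    print(f"{name}: {ms_per_token(seconds, tokens):.3f} ms/token, "
          f"{tokens_per_second(seconds, tokens):.1f} tokens/s")
```

The per-token results agree with the time_per_token values in the logs (21.30521ms and 21.116337ms).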

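Running LangChain

Open another window and first install the packages LangChain needs on the server, using pip:

# Switch pip to the Tsinghua mirror to speed up downloads
pip config set global.index-url https://pypi.tuna.tsinghua.edu.cn/simple
# Install the required packages
pip install langchain transformers langchain_community

After installation, create a file named LangChain.py on the server with the following code: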

# Wrapper to TGI client with langchain
from langchain.llms import HuggingFaceTextGenInference
from langchain import PromptTemplate, LLMChain

inference_server_url_local = "http://localhost:8080"

llm_local = HuggingFaceTextGenInference(
    inference_server_url=inference_server_url_local,
    max_new_tokens=400,
    top_k=10,
    top_p=0.95,
    typical_p=0.95,
    temperature=0.7,
    repetition_penalty=1.03,
)

question = "whats 2 * (1 + 2)"

template = """Question: {question}

Answer: Let's think step by step."""

prompt = PromptTemplate(template=template, input_variables=["question"])

llm_chain_local = LLMChain(prompt=prompt, llm=llm_local)
# Note: {question} is a Python set literal, so the chain receives a set as the
# "question" value; pass {"question": question} to send the plain string.
output = llm_chain_local({question})
print(output)

Run the file:

python LangChain.py

The model is asked to compute 2 * (1 + 2) and show its steps. The model output is as follows (for reference):

{'question': {'whats 2 * (1 + 2)'}, 'text': '\nStep 1. We start with 2 * (1 + 2)\nStep 2. Now, we multiply the first number by 2 and add it to the second number.\nStep 3. So we have 2 * (1 + 2) = 2 * 3\nStep 4. Now we multiply the first number by 2 again and add it to the third number.\nStep 5. So we have 2 * 3 = 6\nStep 6. Now, we add the first number to the third number.\nStep 7. So we have 6 = 2 + 3\nStep 8. Now we add the first number to the second number.\nStep 9. So we have 6 = 2 + 3 + 3\nStep 10. Now we add the first number to the first number.\nStep 11. So we have 6 = 2 + 3 + 3 + 3\nStep 12. Now we add the first number to the second number.\nStep 13. So we have 6 = 2 + 3 + 3 + 3 + 3\nStep 14. Now we add the first number to the first number.\nStep 15. So we have 6 = 2 + 3 + 3 + 3 + 3 + 3\nStep 16. Now we add the first number to the second number.\nStep 17. So we have 6 = 2 + 3 + 3 + 3 + 3 + 3 + 3\nStep 18. Now we add the first number to the first number.\nStep 19. So we have 6 = 2 + 3 + 3 + 3 + 3 + 3 + 3 +'}

The model returns output normally, which indicates that the run succeeded. Meanwhile, the Web Server window outputs:

INFO compat_generate{default_return_full_text=true compute_type=Extension(ComputeType("1-nvidia-l40"))}:generate{parameters=GenerateParameters { best_of: None, temperature: Some(0.7), repetition_penalty: Some(1.03), frequency_penalty: None, top_k: Some(10), top_p: Some(0.95), typical_p: Some(0.95), do_sample: false, max_new_tokens: Some(400), return_full_text: Some(false), stop: [], truncate: None, watermark: false, details: true, decoder_input_details: false, seed: None, top_n_tokens: None, grammar: None, adapter_id: None } total_time="8.646906066s" validation_time="20.12µs" queue_time="65.869µs" inference_time="8.646820347s" time_per_token="21.61705ms" seed="Some(15119903715577785552)"}: text_generation_router::server: router/src/server.rs:322: Success
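In the reference output, the 'question' value is printed as {'whats 2 * (1 + 2)'}, which is a Python set: the script calls the chain with {question}, a set literal, rather than with a dictionary. The sketch below uses plain str.format to illustrate how such a value would be rendered into the prompt, on the assumption that the prompt template is filled in the same way as a Python format string. The blank line inside the template is also an assumption.

```python
question = "whats 2 * (1 + 2)"
template = "Question: {question}\n\nAnswer: Let's think step by step."

# Passing a set: its repr, braces and quotes included, ends up in the prompt.
prompt_from_set = template.format(question={question})
# Passing the plain string: the prompt contains only the question text.
prompt_from_str = template.format(question=question)

print(prompt_from_set)
print("---")
print(prompt_from_str)
```

Calling the chain with {"question": question} instead would send the plain question text.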

Notes

Note:

Because OpenCloudOS is the open-source version of TencentOS Server, all operations in this document should, in theory, also apply to OpenCloudOS.

References