LangChain Study Notes: Chat with Your Data


Michael阿明

Published 2023-07-25 14:51:47


1. Loading Documents

1.1 PDF

Set up the LLM. `config` is a local module holding the Azure OpenAI credentials:

```python
from config import api_type, api_key, api_base, api_version, model_name
from langchain.chat_models import AzureChatOpenAI

llm = AzureChatOpenAI(
    openai_api_base=api_base,
    openai_api_version=api_version,
    deployment_name=model_name,
    openai_api_key=api_key,
    openai_api_type=api_type,
    temperature=0.5,
)
```

We load the following PDF document:

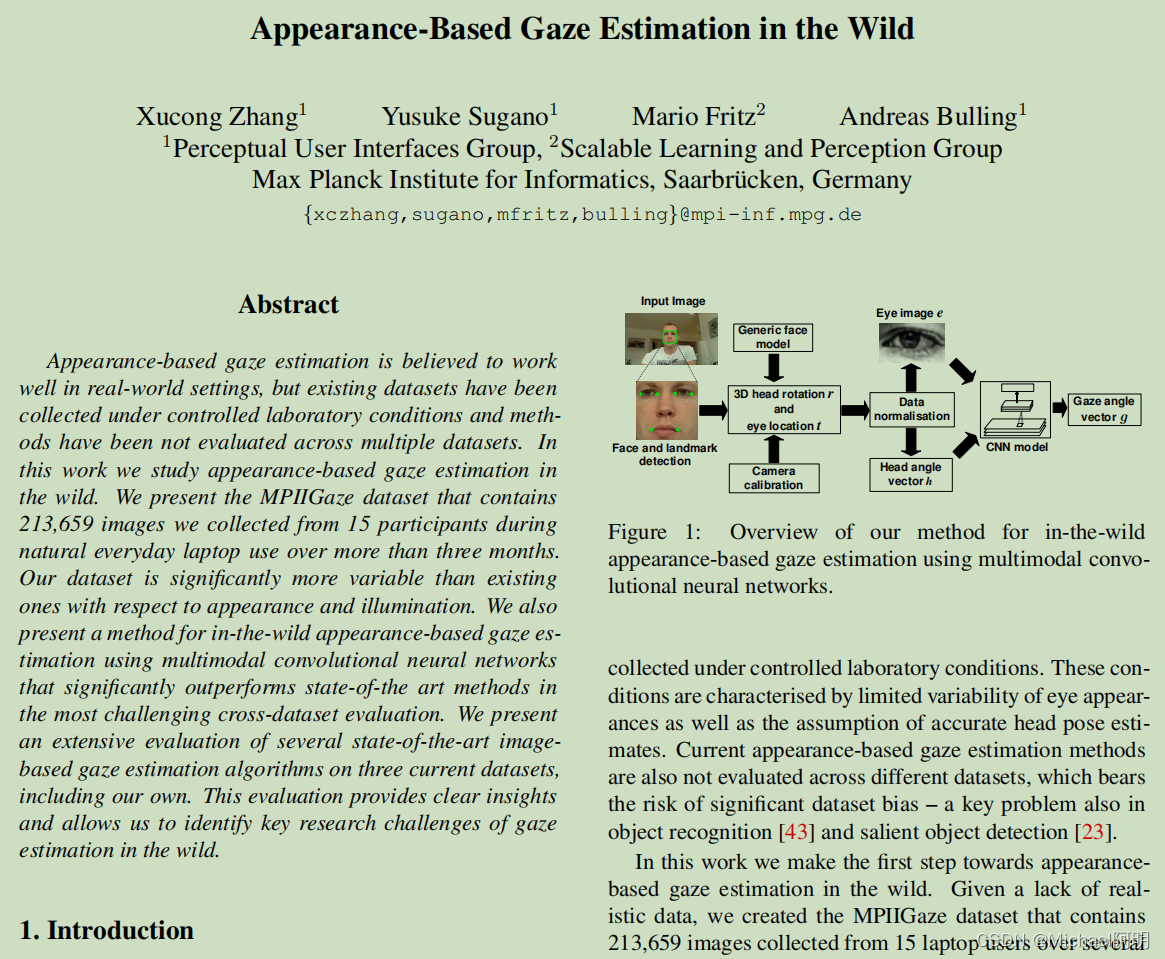

```python
# Load a PDF (PyPDFLoader requires: pip install pypdf)
from langchain.document_loaders import PyPDFLoader

loader = PyPDFLoader("CVPR2015-Appearance-Based-Gaze-Estimation-in-the-Wild.pdf")
pages = loader.load()  # one Document per PDF page
print(len(pages))

page = pages[0]
print(page.page_content[0:500])
print(page.metadata)
```

Output:

```
Appearance-Based Gaze Estimation in the Wild
Xucong Zhang1Yusuke Sugano1Mario Fritz2Andreas Bulling1
1Perceptual User Interfaces Group,2Scalable Learning and Perception Group
Max Planck Institute for Informatics, Saarbr ¨ucken, Germany
{xczhang,sugano,mfritz,bulling }@mpi-inf.mpg.de
Abstract
Appearance-based gaze estimation is believed to work
well in real-world settings, but existing datasets have been
collected under controlled laboratory conditions and meth-
ods have been not evaluated across
{'source': 'CVPR2015-Appearance-Based-Gaze-Estimation-in-the-Wild.pdf', 'page': 0}
```

Only the text in the PDF is extracted.

1.2 YouTube Videos

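```python
# Requires: pip install yt_dlp pydub
from langchain.document_loaders.generic import GenericLoader
from langchain.document_loaders.parsers import OpenAIWhisperParser
from langchain.document_loaders.blob_loaders.youtube_audio import YoutubeAudioLoader

url = "https://www.youtube.com/watch?v=jGwO_UgTS7I"
save_dir = "youtube/"
loader = GenericLoader(
    YoutubeAudioLoader([url], save_dir),  # download the video's audio track
    OpenAIWhisperParser()                 # transcribe the audio with OpenAI Whisper
)
docs = loader.load()
docs[0].page_content[0:500]
```

I did not get this part to run. In outline, it downloads the video, extracts the audio, and calls OpenAIWhisperParser to transcribe it into text.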

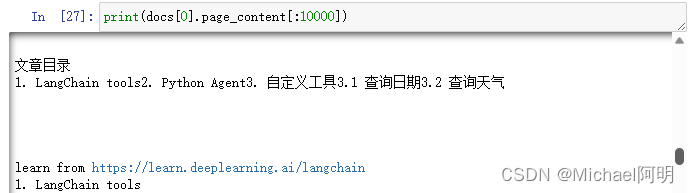

1.3 URL

```python
from langchain.document_loaders import WebBaseLoader

loader = WebBaseLoader("https://michael.blog.csdn.net/article/details/131733577")
docs = loader.load()
print(docs[0].page_content[:500])
```

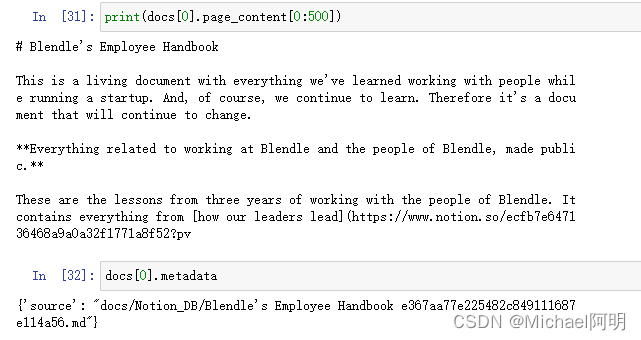

1.4 Notion

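You first need to export the Notion pages to a local directory:

```python
from langchain.document_loaders import NotionDirectoryLoader

loader = NotionDirectoryLoader("docs/Notion_DB")  # directory holding the exported Notion pages
docs = loader.load()
```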

2. Splitting Documents

2.1 CharacterTextSplitter

```python
from langchain.text_splitter import RecursiveCharacterTextSplitter, CharacterTextSplitter

chunk_size = 26
chunk_overlap = 4

r_splitter = RecursiveCharacterTextSplitter(
    chunk_size=chunk_size,
    chunk_overlap=chunk_overlap
)
c_splitter = CharacterTextSplitter(
    chunk_size=chunk_size,
    chunk_overlap=chunk_overlap
)

text1 = 'abcdefghijklmnopqrstuvwxyz'
print(r_splitter.split_text(text1))

text2 = 'abcdefghijklmnopqrstuvwxyzabcdefg'
print(r_splitter.split_text(text2))
```

Output:

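```
['abcdefghijklmnopqrstuvwxyz']
['abcdefghijklmnopqrstuvwxyz', 'wxyzabcdefg']
```

The first string is no longer than chunk_size (26 characters), so it yields a single chunk.

The second string is longer (33 characters). Because chunk_overlap=4, the second chunk begins by repeating the last 4 characters before the split point (`wxyz`).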
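When a string contains no separators to break on, as in this case, chunk_size and chunk_overlap behave like a sliding window. Each new chunk starts chunk_size - chunk_overlap characters after the previous one. The sketch below implements only that windowing. `sliding_window_chunks` is a hypothetical helper and not part of LangChain, whose splitters also try to break on separators first. It does reproduce both outputs above.

```python
def sliding_window_chunks(text: str, chunk_size: int, chunk_overlap: int) -> list[str]:
    """Cut text into windows of chunk_size chars; neighbouring windows share chunk_overlap chars."""
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("need 0 <= chunk_overlap < chunk_size")
    if not text:
        return []
    step = chunk_size - chunk_overlap  # how far each window advances
    chunks = []
    start = 0
    while True:
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):  # this window already reaches the end
            break
        start += step
    return chunks


print(sliding_window_chunks("abcdefghijklmnopqrstuvwxyz", 26, 4))
# ['abcdefghijklmnopqrstuvwxyz']
print(sliding_window_chunks("abcdefghijklmnopqrstuvwxyzabcdefg", 26, 4))
# ['abcdefghijklmnopqrstuvwxyz', 'wxyzabcdefg']
```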

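```python
text3 = "a b c d e f g h i j k l m n o p q r s t u v w x y z"
print(r_splitter.split_text(text3))
```

Output (spaces at the start or end of a chunk are stripped):

```
['a b c d e f g h i j k l m', 'l m n o p q r s t u v w x', 'w x y z']
```

```python
print(c_splitter.split_text(text3))
```

Output: CharacterTextSplitter splits only on its `separator`, which defaults to `"\n\n"`. text3 contains no such separator, so it is returned as a single chunk even though it is longer than chunk_size:

```
['a b c d e f g h i j k l m n o p q r s t u v w x y z']
```

Now split the following text: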

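```python
some_text = (
    "When writing documents, writers will use document structure to group content. "
    "This can convey to the reader, which idea's are related. "
    "For example, closely related ideas are in sentances. "
    "Similar ideas are in paragraphs. Paragraphs form a document. \n\n "
    "Paragraphs are often delimited with a carriage return or two carriage returns. "
    'Carriage returns are the "backslash n" you see embedded in this string. '
    "Sentences have a period at the end, but also, have a space."
    "and words are separated by space."
)

# Assumption: the post does not show it, but the splitters must have been re-created
# with a larger chunk size before this step; these values are consistent with the output below.
c_splitter = CharacterTextSplitter(chunk_size=450, chunk_overlap=0, separator=" ")
r_splitter = RecursiveCharacterTextSplitter(chunk_size=450, chunk_overlap=0,
                                            separators=["\n\n", "\n", " ", ""])

print(c_splitter.split_text(some_text))
```

This produces 2 chunks:

```
['When writing documents, writers will use document structure to group content. This can convey to the reader, which idea\'s are related. For example, closely related ideas are in sentances. Similar ideas are in paragraphs. Paragraphs form a document. \n\n Paragraphs are often delimited with a carriage return or two carriage returns. Carriage returns are the "backslash n" you see embedded in this string. Sentences have a period at the end, but also,',
 'have a space.and words are separated by space.']
```

The split is poor: the first chunk runs across the paragraph break and cuts the last sentence in half.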

2.2 RecursiveCharacterTextSplitter (recommended)
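RecursiveCharacterTextSplitter tries separators from coarsest to finest. It splits on `"\n\n"` (paragraphs) first. Only pieces still longer than chunk_size fall back to `"\n"`, then to spaces, and finally to individual characters. Small neighboring pieces are then merged back up to chunk_size.

The sketch below simplifies that recursion. `recursive_split` is a hypothetical name, and the sketch differs from LangChain in two ways: it drops the separators and it ignores chunk_overlap. Its output therefore differs from the library's in detail.

```python
def recursive_split(text, chunk_size, separators=("\n\n", "\n", " ", "")):
    """Simplified recursive splitter: no overlap, separators are dropped between chunks."""
    if len(text) <= chunk_size:
        return [text.strip()] if text.strip() else []
    # Use the first (coarsest) separator that occurs in the text; "" always matches.
    sep = next((s for s in separators if s in text), "")
    if sep == "":
        # Last resort: hard cut every chunk_size characters.
        return [text[i:i + chunk_size] for i in range(0, len(text), chunk_size)]
    finer = separators[separators.index(sep) + 1:]
    chunks, current = [], ""
    for piece in text.split(sep):
        candidate = piece if not current else current + sep + piece
        if len(candidate) <= chunk_size:
            current = candidate  # keep merging small pieces
            continue
        if current:
            chunks.append(current)
        if len(piece) <= chunk_size:
            current = piece
        else:
            # Piece is still too big: split it with the finer separators.
            chunks.extend(recursive_split(piece, chunk_size, finer))
            current = ""
    if current:
        chunks.append(current)
    return [c.strip() for c in chunks if c.strip()]


print(recursive_split("a b c d e f g h i j k l m n o p q r s t u v w x y z", 26))
# ['a b c d e f g h i j k l m', 'n o p q r s t u v w x y z']
```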

```python
print(r_splitter.split_text(some_text))
```

This also produces 2 chunks:

```
["When writing documents, writers will use document structure to group content. This can convey to the reader, which idea's are related. For example, closely related ideas are in sentances. Similar ideas are in paragraphs. Paragraphs form a document.",
 'Paragraphs are often delimited with a carriage return or two carriage returns. Carriage returns are the "backslash n" you see embedded in this string. Sentences have a period at the end, but also, have a space.and words are separated by space.']
```

RecursiveCharacterTextSplitter splits more cleanly (here exactly at the paragraph break), so it is the recommended choice.

- Reduce the chunk size and add a sentence separator

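```python
r_splitter = RecursiveCharacterTextSplitter(
    chunk_size=150,
    chunk_overlap=0,
    # r"\. " is a regex; newer LangChain versions also need is_separator_regex=True
    separators=["\n\n", "\n", r"\. ", " ", ""]
)
r_splitter.split_text(some_text)
```

This produces 5 chunks: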

```
When writing documents, writers will use document structure to group content. This can convey to the reader, which idea's are related
. For example, closely related ideas are in sentances. Similar ideas are in paragraphs. Paragraphs form a document.
Paragraphs are often delimited with a carriage return or two carriage returns
. Carriage returns are the "backslash n" you see embedded in this string
. Sentences have a period at the end, but also, have a space.and words are separated by space.
```

- Use a regex separator so that each period stays with its sentence

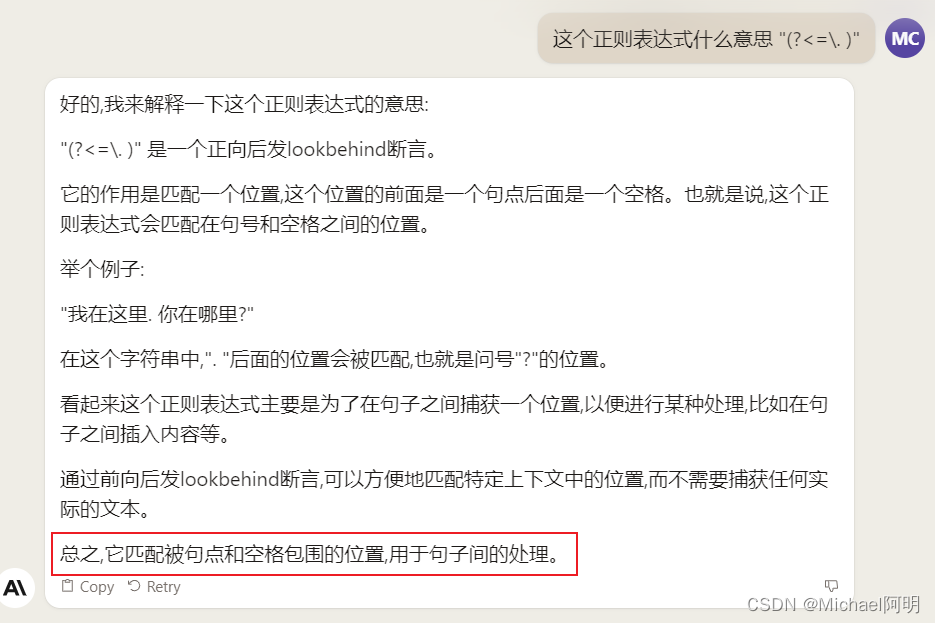
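Two questions come up here. Why does the period end up at the start of the next chunk with `"\. "`? And why does a lookbehind fix it? Python's `re` module shows the mechanics.

The middle step captures the separator and glues it onto the following piece. That step is an assumption about how LangChain's default `keep_separator=True` behaves, but it is consistent with the `. For example...` chunks above.

```python
import re

text = "Ideas are related. For example, this one. Last."

# Plain separator: the ". " is consumed by the split.
plain = re.split(r"\. ", text)
print(plain)  # ['Ideas are related', 'For example, this one', 'Last.']

# Capture the separator and glue it to the *following* piece
# (assumed to mirror keep_separator=True): the period moves to the next chunk.
parts = re.split(r"(\. )", text)
glued = [parts[0]] + [parts[i] + parts[i + 1] for i in range(1, len(parts), 2)]
print(glued)  # ['Ideas are related', '. For example, this one', '. Last.']

# Zero-width lookbehind: split right *after* ". ", so each period stays with its sentence.
lookbehind = re.split(r"(?<=\. )", text)
print(lookbehind)  # ['Ideas are related. ', 'For example, this one. ', 'Last.']
```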

r_splitter = RecursiveCharacterTextSplitter(

chunk_size=150,

chunk_overlap=0,

separators=["\n\n", "\n", "(?<=\. )", " ", ""]

)When writing documents, writers will use document structure to group content. This can convey to the reader, which idea's are related.

For example, closely related ideas are in sentances. Similar ideas are in paragraphs. Paragraphs form a document.

Paragraphs are often delimited with a carriage return or two carriage returns.

Carriage returns are the "backslash n" you see embedded in this string.

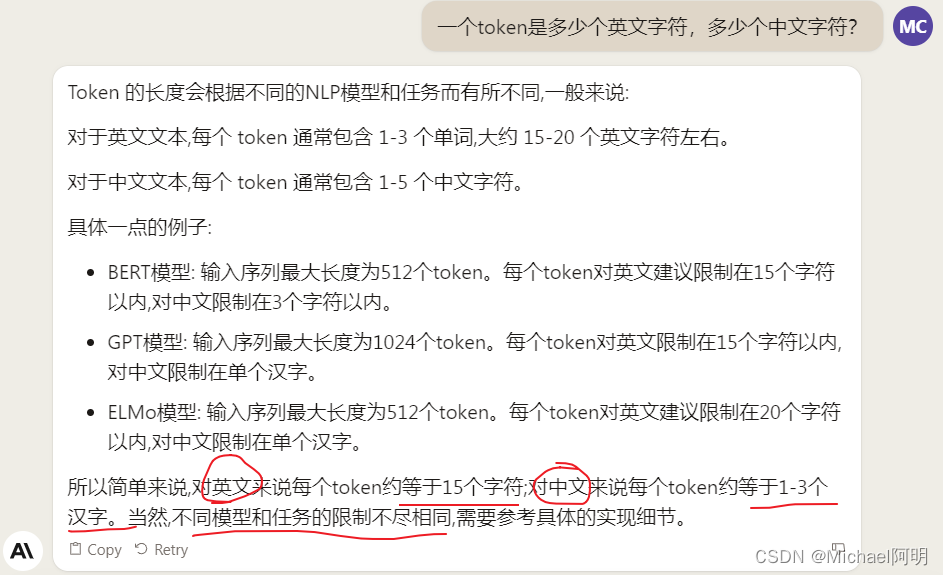

Sentences have a period at the end, but also, have a space.and words are separated by space.2.3 Token splitting

LLMs limit the number of input tokens, so it can be useful to split text by token count. A token is typically about 4 characters of English text.
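The tokenizers used by OpenAI models work on UTF-8 bytes, and TokenTextSplitter uses tiktoken under the hood. Most Chinese characters take 3 bytes in UTF-8, so a chunk boundary can land inside a character. That is the likely source of the � in the Chinese output of the example that follows.

The sketch below uses plain Python bytes rather than a real tokenizer. It only shows what decoding such a fragment looks like.

```python
text = "我喜欢学习"
data = text.encode("utf-8")
print(len(text), len(data))  # 5 15  (each of these characters is 3 bytes)

# Cut the byte string after 4 bytes, i.e. inside the second character (喜).
head, tail = data[:4], data[4:]
head_text = head.decode("utf-8", errors="replace")
tail_text = tail.decode("utf-8", errors="replace")
print(head_text)  # 我�  (the incomplete character becomes U+FFFD)
print(tail_text)  # the leftover bytes of 喜 also become �, followed by 欢学习
```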

from langchain.text_splitter import TokenTextSplitter

text_splitter = TokenTextSplitter(chunk_size=1, chunk_overlap=0)

text1 = "foo bar bazzyfoo"

print(text_splitter.split_text(text1))

# ['foo', ' bar', ' b', 'az', 'zy', 'foo']

text1 = "我喜欢学习深度学习的知识"

print(text_splitter.split_text(text1))

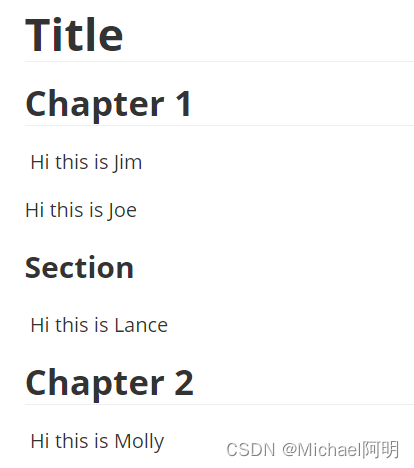

# ['我喜欢学', '习深度学�', '�的知识']2.4 MarkDown切分

```python
from langchain.text_splitter import MarkdownHeaderTextSplitter

markdown_document = """# Title\n\n \
## Chapter 1\n\n \
Hi this is Jim\n\n Hi this is Joe\n\n \
### Section \n\n \
Hi this is Lance \n\n
## Chapter 2\n\n \
Hi this is Molly"""
print(markdown_document)  # preview the document

headers_to_split_on = [
    ("#", "Header 1"),
    ("##", "Header 2"),
    ("###", "Header 3"),
]
markdown_splitter = MarkdownHeaderTextSplitter(
    headers_to_split_on=headers_to_split_on
)
md_header_splits = markdown_splitter.split_text(markdown_document)
for x in md_header_splits:
    print(x)
```

输出了3段:

page_content='Hi this is Jim \nHi this is Joe' metadata={'Header 1': 'Title', 'Header 2': 'Chapter 1'}

page_content='Hi this is Lance' metadata={'Header 1': 'Title', 'Header 2': 'Chapter 1', 'Header 3': 'Section'}

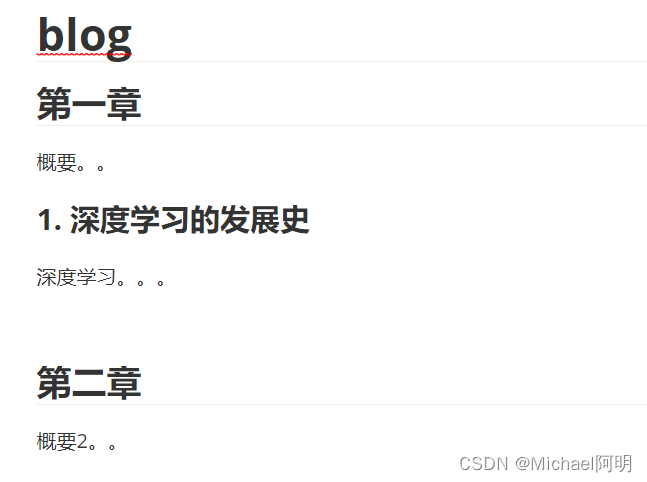

page_content='Hi this is Molly' metadata={'Header 1': 'Title', 'Header 2': 'Chapter 2'}MD文件切分

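```python
from langchain.document_loaders import NotionDirectoryLoader
from langchain.text_splitter import MarkdownHeaderTextSplitter

loader = NotionDirectoryLoader("./md/")  # reads every .md file under ./md/
docs = loader.load()
txt = ' '.join([d.page_content for d in docs])

headers_to_split_on = [
    ("#", "Header 1"),
    ("##", "Header 2"),
]
markdown_splitter = MarkdownHeaderTextSplitter(
    headers_to_split_on=headers_to_split_on
)
md_header_splits = markdown_splitter.split_text(txt)
for x in md_header_splits:
    print(x)
```

Output: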

page_content='概要。。 \n### 1. 深度学习的发展史 \n深度学习。。。' metadata={'Header 1': 'blog', 'Header 2': '第一章'}

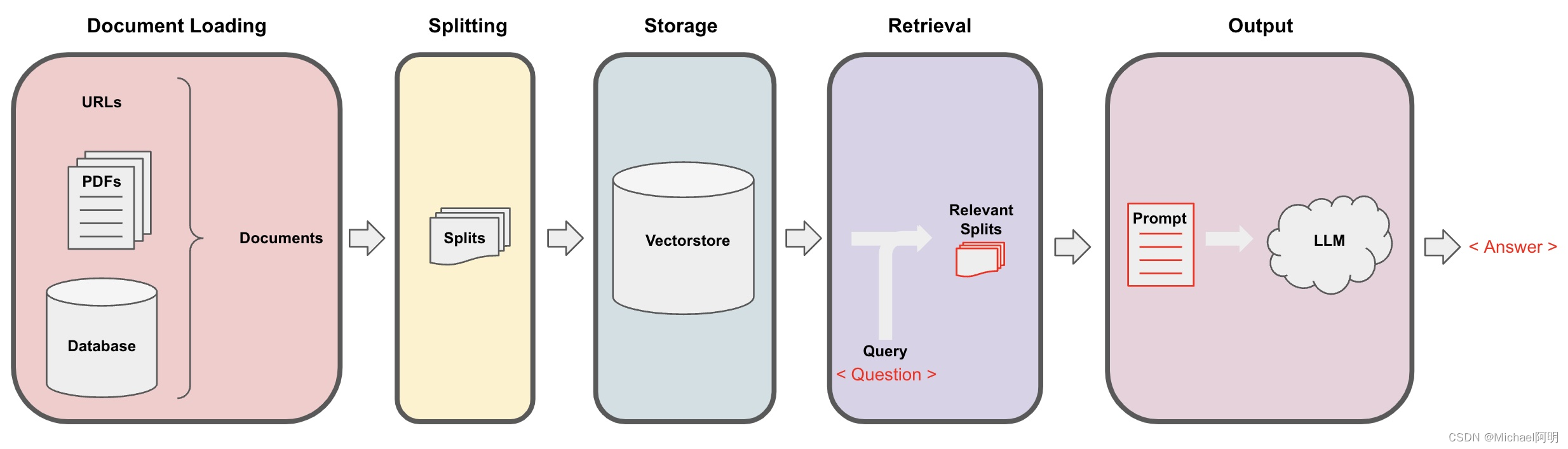

page_content='概要2。。' metadata={'Header 1': 'blog', 'Header 2': '第二章'}3. 向量存储、embeddings

- Load the documents

```python
from langchain.document_loaders import PyPDFLoader

# Load PDF
loaders = [
    # Duplicate documents on purpose - messy data
    PyPDFLoader("docs/cs229_lectures/MachineLearning-Lecture01.pdf"),
    PyPDFLoader("docs/cs229_lectures/MachineLearning-Lecture01.pdf"),
    PyPDFLoader("docs/cs229_lectures/MachineLearning-Lecture02.pdf"),
    PyPDFLoader("docs/cs229_lectures/MachineLearning-Lecture03.pdf")
]
docs = []
for loader in loaders:
    docs.extend(loader.load())
```

- Split the documents

```python
# Split
from langchain.text_splitter import RecursiveCharacterTextSplitter

text_splitter = RecursiveCharacterTextSplitter(
    chunk_size=1500,
    chunk_overlap=150
)
splits = text_splitter.split_documents(docs)
```

- Store the vectors

```python
# pip install chromadb
from langchain.embeddings.openai import OpenAIEmbeddings
from langchain.vectorstores import Chroma

embedding = OpenAIEmbeddings()
persist_directory = 'docs/chroma/'

vectordb = Chroma.from_documents(
    documents=splits,
    embedding=embedding,
    persist_directory=persist_directory
)
print(vectordb._collection.count())  # number of chunks (splits) stored
```

- Similarity search

```python
question = "is there an email i can ask for help"
docs = vectordb.similarity_search(question, k=3)
print(docs[0].page_content)

vectordb.persist()  # save to disk
```

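Because one PDF was loaded twice, the query can return two identical results.

Similarly, if you set question = "what did they say about regression in the third lecture?" to ask about the third lecture specifically, some results come from other lectures. Plain similarity search compares content only and has no notion of "the third lecture".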
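similarity_search embeds the question and returns the k chunks whose embeddings are closest to it. This sketch uses cosine similarity for simplicity. That is an assumption: the actual metric depends on the vector store's configuration, and as far as I know Chroma defaults to L2 distance.

The sketch shows why a duplicated chunk comes back twice: identical texts get identical embeddings and therefore identical scores. The 3-dimensional vectors and chunk texts are made-up stand-ins for real embeddings, and `top_k` is a hypothetical helper.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def top_k(query_vec, chunks, k):
    """Return the k (text, score) pairs whose vectors are most similar to query_vec."""
    scored = [(text, cosine(query_vec, vec)) for text, vec in chunks]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]


# Toy "embeddings": lecture 1 was loaded twice, so its chunk appears twice.
chunks = [
    ("lecture01: email the TAs for help", [0.9, 0.1, 0.0]),
    ("lecture01: email the TAs for help", [0.9, 0.1, 0.0]),  # duplicate
    ("lecture02: linear regression", [0.1, 0.9, 0.2]),
    ("lecture03: logistic regression", [0.2, 0.8, 0.3]),
]
query = [1.0, 0.0, 0.0]  # stand-in for the embedded question

results = top_k(query, chunks, k=3)
for text, score in results:
    print(f"{score:.3f}  {text}")  # the duplicate fills two of the three slots
```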

4. Retrieval

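max_marginal_relevance_search (MMR) addresses the duplicate-results problem above. It picks results that are relevant to the query but different from one another.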
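As I understand LangChain's implementation, max_marginal_relevance_search works in two stages:

1. It fetches `fetch_k` candidates (20 by default) by plain similarity.
2. It greedily picks `k` of them. Each candidate is scored as `lambda_mult` × (similarity to the query) − (1 − `lambda_mult`) × (highest similarity to anything already picked), with `lambda_mult` defaulting to 0.5.

Below is a pure-Python sketch of that greedy step. The vectors are made-up unit vectors, the first two candidates are duplicates, and `mmr` is a hypothetical helper.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def mmr(query_vec, candidates, k, lambda_mult=0.5):
    """Greedily pick k candidate indices, trading relevance against redundancy."""
    selected, remaining = [], list(range(len(candidates)))
    while remaining and len(selected) < k:
        def score(i):
            relevance = cosine(query_vec, candidates[i])
            redundancy = max((cosine(candidates[i], candidates[j]) for j in selected), default=0.0)
            return lambda_mult * relevance - (1 - lambda_mult) * redundancy
        best = max(remaining, key=score)
        selected.append(best)
        remaining.remove(best)
    return selected


query = [0.8, 0.6, 0.0]
candidates = [
    [1.0, 0.0, 0.0],  # 0: relevant
    [1.0, 0.0, 0.0],  # 1: exact duplicate of 0
    [0.6, 0.8, 0.0],  # 2: most relevant
    [0.0, 0.6, 0.8],  # 3: less relevant but different
]

plain = sorted(range(len(candidates)), key=lambda i: cosine(query, candidates[i]), reverse=True)[:3]
picked = mmr(query, candidates, k=3)
print(plain)   # [2, 0, 1]  plain similarity keeps the duplicate
print(picked)  # [2, 0, 3]  MMR swaps the duplicate for a diverse candidate
```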

```python
docs_mmr = vectordb.max_marginal_relevance_search(question, k=3)
```

- To restrict the search to a specific document, pass a `filter` dict:

```python
docs = vectordb.similarity_search(
    question,
    k=3,
    filter={"source": "docs/cs229_lectures/MachineLearning-Lecture03.pdf"}  # match on chunk metadata
)
```

- Let the model infer which file to search:

```python
# Requires: pip install lark
from langchain.llms import OpenAI
from langchain.retrievers.self_query.base import SelfQueryRetriever
from langchain.chains.query_constructor.base import AttributeInfo

# Describe the metadata fields the LLM is allowed to filter on
metadata_field_info = [
    AttributeInfo(
        name="source",
        description="The lecture the chunk is from, should be one of `docs/cs229_lectures/MachineLearning-Lecture01.pdf`, `docs/cs229_lectures/MachineLearning-Lecture02.pdf`, or `docs/cs229_lectures/MachineLearning-Lecture03.pdf`",
        type="string",
    ),
    AttributeInfo(
        name="page",
        description="The page from the lecture",
        type="integer",
    ),
]
document_content_description = "Lecture notes"
llm = OpenAI(temperature=0)
retriever = SelfQueryRetriever.from_llm(
    llm,
    vectordb,
    document_content_description,
    metadata_field_info,
    verbose=True
)

question = "what did they say about regression in the third lecture?"
docs = retriever.get_relevant_documents(question)
```

The model then works out on its own which file to search.

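- Contextual compression: another way to improve the quality of retrieved documents is compression. The information most relevant to a query may be buried in a document that contains a lot of irrelevant text. Passing whole documents along makes LLM calls more expensive and can lead to worse responses.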
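LLMChainExtractor asks the LLM to pull out only the parts of each retrieved document that are relevant to the question. To show the shape of the idea without an LLM, the sketch below swaps in a crude keyword-overlap filter. This is a different, much weaker technique and not what LLMChainExtractor does internally.

Documents go in and shorter, relevant excerpts come out; documents with nothing relevant are dropped. `compress`, the stop-word list and the sample texts are all hypothetical.

```python
import re

STOPWORDS = {"what", "did", "they", "say", "about", "the", "in", "a", "is", "of", "to"}


def words(s):
    """Lower-cased word tokens of s."""
    return set(re.findall(r"\w+", s.lower()))


def compress(question, documents, min_overlap=1):
    """Keep only sentences sharing at least min_overlap non-stop-words with the question."""
    q_words = words(question) - STOPWORDS
    compressed = []
    for doc in documents:
        sentences = re.split(r"(?<=[.!?])\s+", doc)
        keep = [s for s in sentences if len(q_words & words(s)) >= min_overlap]
        if keep:  # drop documents with nothing relevant
            compressed.append(" ".join(keep))
    return compressed


question = "what did they say about regression in the third lecture?"
documents = [
    "Welcome to the course. Today we cover linear regression. The homework is due Friday.",
    "Office hours are on Monday. Please bring your laptop.",
]
print(compress(question, documents))  # ['Today we cover linear regression.']
```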

```python
from langchain.retrievers import ContextualCompressionRetriever
from langchain.retrievers.document_compressors import LLMChainExtractor

compressor = LLMChainExtractor.from_llm(llm)  # the LLM extracts the relevant parts of each document
compression_retriever = ContextualCompressionRetriever(
    base_compressor=compressor,
    base_retriever=vectordb.as_retriever()
)
compressed_docs = compression_retriever.get_relevant_documents(question)
```

The results above contain duplicates. To remove them, pass `search_type="mmr"` to the base retriever:

```python
base_retriever=vectordb.as_retriever(search_type="mmr")
```

- Other retrievers

SVMRetriever and TFIDFRetriever:

```python
# Both retrievers require scikit-learn
from langchain.retrievers import SVMRetriever
from langchain.retrievers import TFIDFRetriever

# from_texts expects plain strings, so pass the chunk texts rather than the Document objects
texts = [d.page_content for d in splits]
svm_retriever = SVMRetriever.from_texts(texts, embedding)
tfidf_retriever = TFIDFRetriever.from_texts(texts)

docs_svm = svm_retriever.get_relevant_documents(question)
docs_tfidf = tfidf_retriever.get_relevant_documents(question)
```

5. Question Answering

RetrievalQA chain
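RetrievalQA.from_chain_type defaults to the "stuff" chain type. All retrieved chunks are stuffed into the `{context}` slot of a single prompt, like the template in the prompt subsection that follows, and sent to the LLM in one call.

Below is a minimal sketch of just that prompt assembly. The chunk texts and the `stuff_prompt` helper are hypothetical, and the real chain also handles document formatting and the LLM call itself.

```python
# Hypothetical stand-ins for chunks returned by vectordb.as_retriever()
retrieved_chunks = [
    "The course assumes familiarity with basic probability and statistics.",
    "Linear algebra at the level of an undergraduate course is also assumed.",
]

template = """Use the following context to answer the question.
{context}
Question: {question}
Helpful answer:"""


def stuff_prompt(question, chunks, template=template):
    """'Stuff' strategy: concatenate every retrieved chunk into one prompt."""
    context = "\n\n".join(chunks)
    return template.format(context=context, question=question)


print(stuff_prompt("Is probability a topic of this class?", retrieved_chunks))
```

Stuffing is simple and needs one LLM call, but it only works while the retrieved chunks fit in the model's context window.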

```python
from langchain.chains import RetrievalQA

qa_chain = RetrievalQA.from_chain_type(
    llm,
    retriever=vectordb.as_retriever()
)
result = qa_chain({"query": question})
```

- Prompt

from langchain.prompts import PromptTemplate

template = """使用以下context来回答问题。

如果你不知道答案,就说你不知道,不要试图编造答案。

最多使用三句话。尽量使答案简明扼要。

总是在回答的最后说“谢谢你的提问!”。

{context}

问题:{question}

有用的答案:"""

QA_CHAIN_PROMPT = PromptTemplate.from_template(template)

qa_chain = RetrievalQA.from_chain_type(

llm,

retriever=vectordb.as_retriever(),

return_source_documents=True,

chain_type_kwargs={"prompt": QA_CHAIN_PROMPT}

)

question = "概率论是课程的主题吗?"

result = qa_chain({"query": question})

print(result["result"])

# '是的,概率论是这门课程的主题之一,但不是唯一的主题。'

print(result["source_documents"][0])

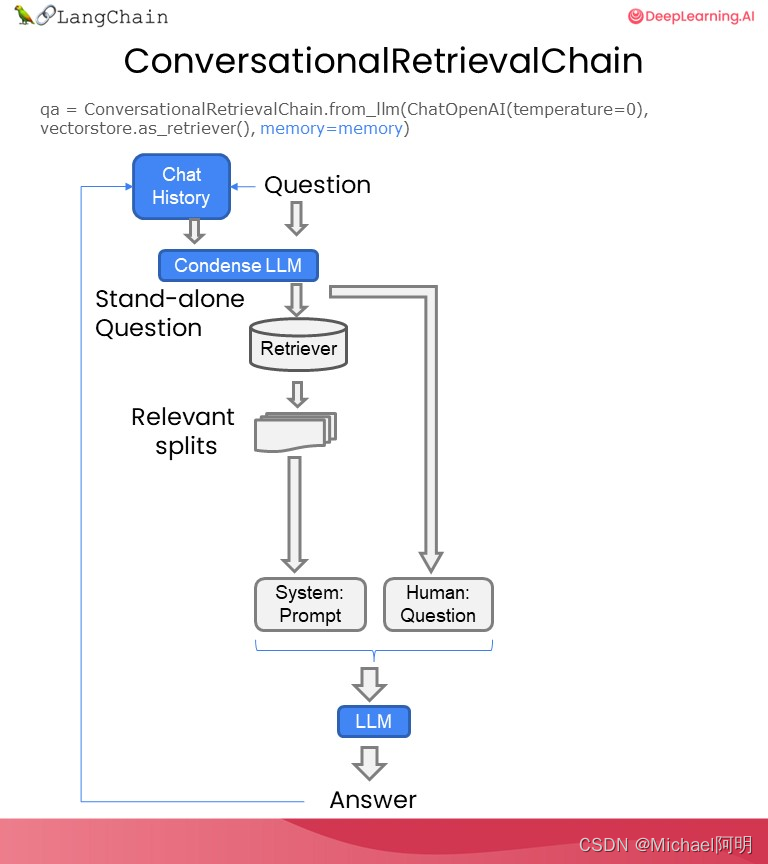

# 打印相关来源6. chat

Add conversation memory:

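```python
from langchain.memory import ConversationBufferMemory
from langchain.chains import ConversationalRetrievalChain

memory = ConversationBufferMemory(
    memory_key="chat_history",
    return_messages=True
)
retriever = vectordb.as_retriever()
qa = ConversationalRetrievalChain.from_llm(
    llm,
    retriever=retriever,
    memory=memory
)

question = "I haven't studied probability. Can I take this course?"
result = qa({"question": question})
print(result['answer'])
```

Output: This course requires basic knowledge of probability, statistics, and linear algebra. For probability and statistics, most undergraduate statistics courses (such as Stanford's Stat 116) are sufficient. For linear algebra, most undergraduate linear algebra courses (such as Stanford's Math 51, 103, Math 113, or CS205) are also sufficient. Specifically, students need to understand basic concepts such as random variables, expectation, variance, matrices, vectors, matrix multiplication, and matrix inversion. If students are not very familiar with this material, that is fine: the course covers it in review sessions.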
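When there is chat history, ConversationalRetrievalChain does not send a follow-up question to the retriever as-is. It first asks the LLM to rewrite the history plus the follow-up into a standalone question. That rewritten question is what `return_generated_question=True` exposes as `generated_question` in the `load_db` helper later in this post.

The sketch covers only the prompt-building step. The template wording is illustrative rather than LangChain's exact prompt, and `build_condense_prompt` is hypothetical.

```python
CONDENSE_TEMPLATE = (
    "Given the conversation below and a follow-up question, "
    "rephrase the follow-up question as a standalone question.\n\n"
    "Chat history:\n{chat_history}\n"
    "Follow-up question: {question}\n"
    "Standalone question:"
)


def build_condense_prompt(chat_history, question):
    """chat_history: list of (user_question, assistant_answer) tuples."""
    history = "\n".join(f"Human: {q}\nAssistant: {a}" for q, a in chat_history)
    return CONDENSE_TEMPLATE.format(chat_history=history, question=question)


history = [("Is probability a topic of this class?", "Yes, it is one of the topics.")]
print(build_condense_prompt(history, "Why are those prerequisites needed?"))
```

The LLM's answer to this prompt, not the raw follow-up, is what gets embedded and searched. That is why a vague follow-up like "why?" can still retrieve the right chunks.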

- A chatbot for your documents

```python
from langchain.embeddings.openai import OpenAIEmbeddings
from langchain.text_splitter import CharacterTextSplitter, RecursiveCharacterTextSplitter
from langchain.vectorstores import DocArrayInMemorySearch  # requires: pip install docarray
from langchain.document_loaders import TextLoader, PyPDFLoader
from langchain.chains import RetrievalQA, ConversationalRetrievalChain
from langchain.memory import ConversationBufferMemory
from langchain.chat_models import ChatOpenAI

# load_db uses llm_name, which is never defined in the post;
# "gpt-3.5-turbo" is an assumed value: replace it with the chat model you use.
llm_name = "gpt-3.5-turbo"
```

A helper function that loads the document, splits it, and builds the LLM chain:

def load_db(file, chain_type, k):

# load documents

loader = PyPDFLoader(file)

documents = loader.load()

# split documents

text_splitter = RecursiveCharacterTextSplitter(chunk_size=1000, chunk_overlap=150)

docs = text_splitter.split_documents(documents)

# define embedding

embeddings = OpenAIEmbeddings()

# create vector database from data

db = DocArrayInMemorySearch.from_documents(docs, embeddings)

# define retriever

retriever = db.as_retriever(search_type="similarity", search_kwargs={"k": k})

# create a chatbot chain. Memory is managed externally.

qa = ConversationalRetrievalChain.from_llm(

llm=ChatOpenAI(model_name=llm_name, temperature=0),

chain_type=chain_type,

retriever=retriever,

return_source_documents=True,

return_generated_question=True,

)

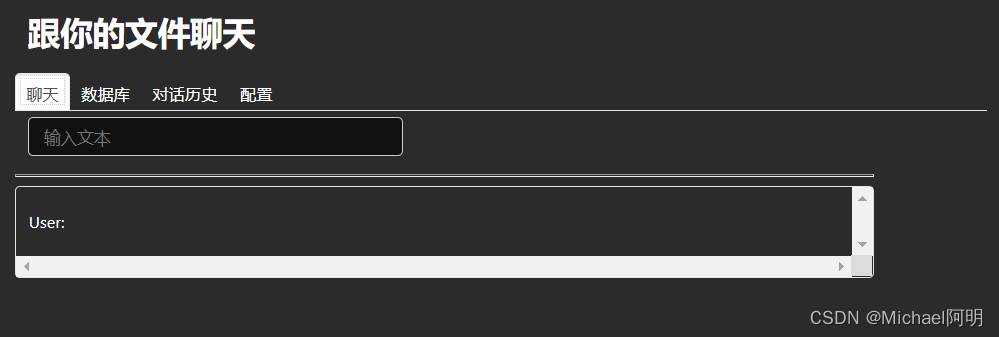

return qa 构建用户界面及其响应处理 使用了 https://panel.holoviz.org/ https://param.holoviz.org/

```python
import panel as pn
import param

pn.extension()  # load Panel's front-end resources before building any widgets


class cbfs(param.Parameterized):
    chat_history = param.List([])
    answer = param.String("")
    db_query = param.String("")
    db_response = param.List([])

    def __init__(self, **params):
        super().__init__(**params)
        self.panels = []
        self.loaded_file = "docs/cs229_lectures/MachineLearning-Lecture01.pdf"
        self.qa = load_db(self.loaded_file, "stuff", 4)

    def call_load_db(self, count):
        if count == 0 or file_input.value is None:  # init or no file specified
            return pn.pane.Markdown(f"Loaded File: {self.loaded_file}")
        else:
            file_input.save("temp.pdf")  # local copy
            self.loaded_file = file_input.filename
            button_load.button_style = "outline"
            self.qa = load_db("temp.pdf", "stuff", 4)
            button_load.button_style = "solid"
        self.clr_history()
        return pn.pane.Markdown(f"Loaded File: {self.loaded_file}")

    def convchain(self, query):
        if not query:
            return pn.WidgetBox(pn.Row('User:', pn.pane.Markdown("", width=600)), scroll=True)
        result = self.qa({"question": query, "chat_history": self.chat_history})
        self.chat_history.extend([(query, result["answer"])])
        self.db_query = result["generated_question"]
        self.db_response = result["source_documents"]
        self.answer = result['answer']
        self.panels.extend([
            pn.Row('User:', pn.pane.Markdown(query, width=600)),
            pn.Row('ChatBot:', pn.pane.Markdown(self.answer, width=600, styles={'background-color': '#F6F6F6'}))
        ])
        inp.value = ''  # clears loading indicator when cleared
        return pn.WidgetBox(*self.panels, scroll=True)

    @param.depends('db_query')
    def get_lquest(self):
        if not self.db_query:
            return pn.Column(
                pn.Row(pn.pane.Markdown("Last question to DB:", styles={'background-color': '#F6F6F6'})),
                pn.Row(pn.pane.Str("no DB accesses so far"))
            )
        return pn.Column(
            pn.Row(pn.pane.Markdown("DB query:", styles={'background-color': '#F6F6F6'})),
            pn.pane.Str(self.db_query)
        )

    @param.depends('db_response')
    def get_sources(self):
        if not self.db_response:
            return
        rlist = [pn.Row(pn.pane.Markdown("Result of DB lookup:", styles={'background-color': '#F6F6F6'}))]
        for doc in self.db_response:
            rlist.append(pn.Row(pn.pane.Str(doc)))
        return pn.WidgetBox(*rlist, width=600, scroll=True)

    @param.depends('convchain', 'clr_history')
    def get_chats(self):
        if not self.chat_history:
            return pn.WidgetBox(pn.Row(pn.pane.Str("No History Yet")), width=600, scroll=True)
        rlist = [pn.Row(pn.pane.Markdown("Current Chat History variable", styles={'background-color': '#F6F6F6'}))]
        for exchange in self.chat_history:
            rlist.append(pn.Row(pn.pane.Str(exchange)))
        return pn.WidgetBox(*rlist, width=600, scroll=True)

    def clr_history(self, count=0):
        self.chat_history = []
        return


cb = cbfs()

file_input = pn.widgets.FileInput(accept='.pdf')
button_load = pn.widgets.Button(name="Load DB", button_type='primary')
button_clearhistory = pn.widgets.Button(name="Clear History", button_type='warning')
button_clearhistory.on_click(cb.clr_history)
inp = pn.widgets.TextInput(placeholder='Enter text here')

bound_button_load = pn.bind(cb.call_load_db, button_load.param.clicks)
conversation = pn.bind(cb.convchain, inp)

jpg_pane = pn.pane.Image('./img/convchain.jpg')

tab1 = pn.Column(
    pn.Row(inp),
    pn.layout.Divider(),
    pn.panel(conversation, loading_indicator=True, height=300),
    pn.layout.Divider(),
)
tab2 = pn.Column(
    pn.panel(cb.get_lquest),
    pn.layout.Divider(),
    pn.panel(cb.get_sources),
)
tab3 = pn.Column(
    pn.panel(cb.get_chats),
    pn.layout.Divider(),
)
tab4 = pn.Column(
    pn.Row(file_input, button_load, bound_button_load),
    pn.Row(button_clearhistory, pn.pane.Markdown("Clear the chat history and start a new conversation")),
    pn.layout.Divider(),
    pn.Row(jpg_pane.clone(width=400))
)
dashboard = pn.Column(
    pn.Row(pn.pane.Markdown('# Chat with your files')),
    pn.Tabs(('Chat', tab1), ('Database', tab2), ('Chat History', tab3), ('Configure', tab4))
)
dashboard
```

This article was shared from the author's personal site/blog via the Tencent Cloud self-media sync program. Originally published: 2023-07-22.
