Maotouhu Shares: A Tutorial on Running and Using the Qwen2.5-Coder Model Series

Maotouhu (猫头虎)

Published 2024-11-18 09:12:17

Introduction

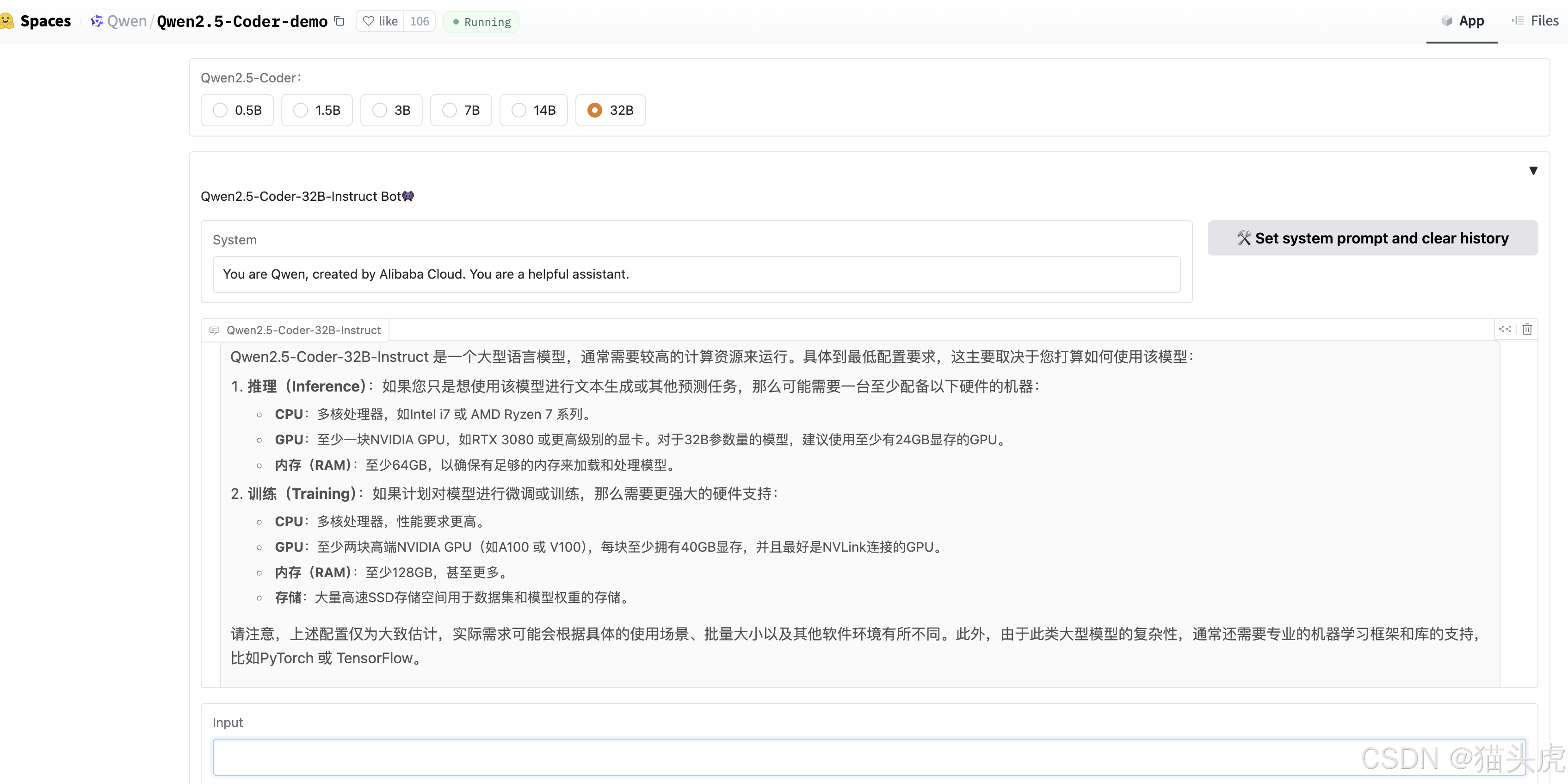

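Hi everyone, this is Maotouhu! Today we take a close look at Qwen2.5-Coder, the open-source code generation model series from Alibaba's Tongyi Qianwen (Qwen) team. The series is pitched as powerful, diverse, and practical, and its flagship, Qwen2.5-Coder-32B-Instruct, has been described as an open-source code model on par with GPT-4o. 🎉 Below is a step-by-step guide to running and using the models.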

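Main Content

📑 Overview of the Qwen2.5-Coder Series

The Qwen2.5-Coder series is built around three qualities: "powerful", "diverse", and "practical":

- Powerful: Qwen2.5-Coder-32B-Instruct shows strong code generation, math, and general capabilities, and performs especially well on code generation benchmarks.

- Diverse: the series comes in six sizes (0.5B, 1.5B, 3B, 7B, 14B, and 32B) to suit different developers' needs.

- Practical: the models perform well in scenarios such as code assistants and artifact generation, and support 92 programming languages.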

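💾 Basic Model Information

- Context length: up to 128K tokens for understanding and generation (the download table below lists 32K for the 0.5B model).

- Supported languages: 92 programming languages, covering mainstream development languages and frameworks.

- Special token update: the special tokens and their token ids were updated for consistency.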

Common Tokens

    {
        "<|fim_prefix|>": 151659,
        "<|fim_middle|>": 151660,
        "<|fim_suffix|>": 151661,
        "<|fim_pad|>": 151662,
        "<|repo_name|>": 151663,
        "<|file_sep|>": 151664,
        "<|im_start|>": 151644,
        "<|im_end|>": 151645
    }

📥 Model Download

| Model | Type | Context length | Download links |
|---|---|---|---|
| Qwen2.5-Coder-0.5B | Base model | 32K | 🤗 Hugging Face • 🤖 ModelScope |
| Qwen2.5-Coder-32B-Instruct | Instruct model | 128K | 🤗 Hugging Face • 🤖 ModelScope |
| Qwen2.5-Coder-14B-Instruct-AWQ | Instruct model | 128K | 🤗 Hugging Face • 🤖 ModelScope |
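The model names in the table follow a regular pattern, and the code in this article loads them under the `Qwen/` organization prefix (for example `Qwen/Qwen2.5-Coder-32B-Instruct`). As a minimal sketch, the helper below assembles such an identifier from a size, an instruct flag, and an optional quantization suffix. The function name `repo_id` is hypothetical, and it is an assumption that every combination it can produce is actually published; only the three rows in the table are confirmed here.

```python
# Sizes listed in this article for the Qwen2.5-Coder series.
SIZES = ("0.5B", "1.5B", "3B", "7B", "14B", "32B")


def repo_id(size, instruct=False, quant=None):
    """Build a hub-style identifier such as 'Qwen/Qwen2.5-Coder-32B-Instruct'.

    Hypothetical helper: it only follows the naming pattern seen in the table
    and does not check that the resulting repository exists.
    """
    if size not in SIZES:
        raise ValueError(f"unknown size {size!r}; expected one of {SIZES}")
    name = f"Qwen2.5-Coder-{size}"
    if instruct:
        name += "-Instruct"
    if quant:
        name += f"-{quant}"
    return f"Qwen/{name}"


# The three models from the table.
print(repo_id("0.5B"))
print(repo_id("32B", instruct=True))
print(repo_id("14B", instruct=True, quant="AWQ"))
```

The resulting string is what would be passed as the model name to `from_pretrained` in the examples later in this article.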

🚀 Runtime Requirements

Python version: >=3.9

Transformers library: >4.37.0 (needed for the Qwen2.5 dense models)
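A quick way to confirm both requirements before downloading any weights is to check the versions programmatically. The sketch below uses only the standard library; the helper names (`version_tuple`, `python_ok`, `transformers_ok`, `installed_transformers`) are illustrative, and the version parsing is deliberately simple (it keeps only the leading numeric components), so treat it as a rough check rather than a full version comparison.

```python
import sys
from importlib import metadata

MIN_PYTHON = (3, 9)             # article: Python >= 3.9
MIN_TRANSFORMERS = (4, 37, 0)   # article: transformers > 4.37.0 (strictly newer)


def version_tuple(version):
    """Parse leading numeric components, e.g. '4.46.2' -> (4, 46, 2)."""
    parts = []
    for piece in version.split("."):
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        if not digits:
            break  # stop at the first non-numeric component such as 'dev0'
        parts.append(int(digits))
    return tuple(parts)


def python_ok(info=sys.version_info):
    """True if the interpreter is at least Python 3.9."""
    return tuple(info[:2]) >= MIN_PYTHON


def transformers_ok(installed):
    """True if the given transformers version is strictly newer than 4.37.0."""
    return version_tuple(installed) > MIN_TRANSFORMERS


def installed_transformers():
    """Return the installed transformers version string, or None if missing."""
    try:
        return metadata.version("transformers")
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    found = installed_transformers()
    print("Python OK:", python_ok())
    print("transformers:", found, "OK:", bool(found) and transformers_ok(found))
```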

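Installing dependencies: run the following command in the root of the Qwen2.5-Coder repository to install the required packages:

    pip install -r requirements.txt

🏃 Quick Start Guide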

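Chat Example with Qwen2.5-Coder-32B-Instruct

The following shows how to chat about code with Qwen2.5-Coder-32B-Instruct:

    from transformers import AutoModelForCausalLM, AutoTokenizer

    model_name = "Qwen/Qwen2.5-Coder-32B-Instruct"

    # Load the weights with an automatically chosen dtype and device placement.
    model = AutoModelForCausalLM.from_pretrained(model_name, torch_dtype="auto", device_map="auto")
    tokenizer = AutoTokenizer.from_pretrained(model_name)

    prompt = "write a quick sort algorithm."
    messages = [
        {"role": "system", "content": "You are Qwen, created by Alibaba Cloud. You are a helpful assistant."},
        {"role": "user", "content": prompt},
    ]

    # Render the conversation into the prompt format the model expects.
    text = tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)
    model_inputs = tokenizer([text], return_tensors="pt").to(model.device)

    generated_ids = model.generate(**model_inputs, max_new_tokens=512)
    # Drop the prompt tokens so that only the newly generated reply is decoded.
    generated_ids = [
        output_ids[len(input_ids):]
        for input_ids, output_ids in zip(model_inputs.input_ids, generated_ids)
    ]

    response = tokenizer.batch_decode(generated_ids, skip_special_tokens=True)[0]
    print(response)

- apply_chat_template: converts the message list into the format the model understands.

- max_new_tokens: caps the number of tokens generated for the response.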
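To make the role of apply_chat_template more concrete, the sketch below renders a message list by hand using the `<|im_start|>` and `<|im_end|>` tokens listed earlier. This is a simplified approximation of the ChatML-style layout, written for illustration only: the real template ships with the tokenizer and may differ in details (for example default system prompts or tool-calling support), and `render_chatml` is a hypothetical name, not a library function.

```python
def render_chatml(messages, add_generation_prompt=True):
    """Render a list of {"role", "content"} dicts as a ChatML-style prompt string."""
    text = ""
    for message in messages:
        # Each turn is wrapped as <|im_start|>role\ncontent<|im_end|>.
        text += f"<|im_start|>{message['role']}\n{message['content']}<|im_end|>\n"
    if add_generation_prompt:
        # Open an assistant turn so the model writes the reply next.
        text += "<|im_start|>assistant\n"
    return text


messages = [
    {"role": "system", "content": "You are Qwen, created by Alibaba Cloud. You are a helpful assistant."},
    {"role": "user", "content": "write a quick sort algorithm."},
]
print(render_chatml(messages))
```

In practice the tokenizer's own apply_chat_template should be preferred, since it stays in sync with the model it was released with.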

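Basic Code Generation

The base model Qwen2.5-Coder-32B can be used for code completion:

    from transformers import AutoTokenizer, AutoModelForCausalLM

    device = "cuda"  # device that receives the tokenized inputs

    # Load the base (non-instruct) model and its tokenizer.
    TOKENIZER = AutoTokenizer.from_pretrained("Qwen/Qwen2.5-Coder-32B")
    MODEL = AutoModelForCausalLM.from_pretrained("Qwen/Qwen2.5-Coder-32B", device_map="auto").eval()

    # The prompt is plain text; the base model simply continues it.
    input_text = "#write a quick sort algorithm"
    model_inputs = TOKENIZER([input_text], return_tensors="pt").to(device)

    # Greedy decoding; keep the first (and only) sequence in the batch.
    generated_ids = MODEL.generate(model_inputs.input_ids, max_new_tokens=512, do_sample=False)[0]
    # Skip the prompt tokens and decode only the continuation.
    output_text = TOKENIZER.decode(generated_ids[len(model_inputs.input_ids[0]):], skip_special_tokens=True)

    print(f"Prompt: {input_text}\n\nGenerated text: {output_text}")

Handling Long Text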

The default configuration supports a context length of 32,768 tokens. To handle longer inputs, YaRN can be enabled by adding a rope_scaling entry to config.json.
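The effect of these settings is simple arithmetic. As a rough rule, YaRN stretches the usable window to about factor × original_max_position_embeddings, and with the values used in this section (4.0 and 32768) that gives 131,072 tokens, which is the 128K figure quoted earlier. The sketch below computes that number and adds the entry to an in-memory config dict. The helper names `with_yarn` and `extended_context` and the sample base config are illustrative assumptions, not part of any library.

```python
import copy


def with_yarn(config, factor=4.0, original_max=32768):
    """Return a copy of a config dict with a YaRN rope_scaling entry added."""
    patched = copy.deepcopy(config)  # leave the caller's dict untouched
    patched["rope_scaling"] = {
        "factor": factor,
        "original_max_position_embeddings": original_max,
        "type": "yarn",
    }
    return patched


def extended_context(rope_scaling):
    """Approximate context window after scaling: factor x original length."""
    return int(rope_scaling["factor"] * rope_scaling["original_max_position_embeddings"])


# Hypothetical minimal config; a real config.json has many more fields.
base = {"max_position_embeddings": 32768}
patched = with_yarn(base)
print(extended_context(patched["rope_scaling"]))  # 4.0 * 32768 = 131072
```

The rope_scaling entry as it would appear in config.json: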

    {
        ...,
        "rope_scaling": {
            "factor": 4.0,
            "original_max_position_embeddings": 32768,
            "type": "yarn"
        }
    }

File-Level Code Completion (Fill in the Middle)

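Code insertion tasks mark the surrounding code with the special tokens <|fim_prefix|>, <|fim_suffix|>, and <|fim_middle|>. The prefix and suffix carry the code before and after the gap, and the model generates the missing middle after <|fim_middle|>.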
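The prompt layout can be captured in a small helper, which also makes it easier to splice the generated middle back into the file afterwards. This is a minimal sketch: `build_fim_prompt` and `splice` are hypothetical names, and the "generated" middle below is written by hand purely for illustration, not produced by a model.

```python
def build_fim_prompt(prefix, suffix):
    """Wrap the code before and after the gap in the FIM special tokens."""
    return f"<|fim_prefix|>{prefix}<|fim_suffix|>{suffix}<|fim_middle|>"


def splice(prefix, middle, suffix):
    """Reassemble the full source once a middle section is available."""
    return prefix + middle + suffix


prefix = "def add(a, b):\n    "
suffix = "\n    return result\n"
prompt = build_fim_prompt(prefix, suffix)
print(prompt)

# Hand-written stand-in for what a model might return for the gap.
middle = "result = a + b"
print(splice(prefix, middle, suffix))
```

Run end to end against the base model, the same layout looks like this: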

    from transformers import AutoTokenizer, AutoModelForCausalLM

    device = "cuda"  # device that receives the tokenized inputs

    # Load the base model and its tokenizer.
    TOKENIZER = AutoTokenizer.from_pretrained("Qwen/Qwen2.5-Coder-32B")
    MODEL = AutoModelForCausalLM.from_pretrained("Qwen/Qwen2.5-Coder-32B", device_map="auto").eval()

    # Prefix and suffix surround the gap; the model fills in the line that defines `left`.
    input_text = """<|fim_prefix|>def quicksort(arr):
        if len(arr) <= 1:
            return arr
        pivot = arr[len(arr) // 2]
        <|fim_suffix|>
        middle = [x for x in arr if x == pivot]
        right = [x for x in arr if x > pivot]
        return quicksort(left) + middle + quicksort(right)<|fim_middle|>"""

    model_inputs = TOKENIZER([input_text], return_tensors="pt").to(device)

    # Greedy decoding; keep the first (and only) sequence in the batch.
    generated_ids = MODEL.generate(model_inputs.input_ids, max_new_tokens=512, do_sample=False)[0]
    # Skip the prompt tokens and decode only the generated middle section.
    output_text = TOKENIZER.decode(generated_ids[len(model_inputs.input_ids[0]):], skip_special_tokens=True)

    print(f"Prompt: {input_text}\n\nGenerated text: {output_text}")

Repository-Level Code Completion

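Repository-level completion uses <|repo_name|> and <|file_sep|> to mark how files relate. Given a repository named repo_name that contains the files file_path1 and file_path2, the prompt consists of one <|repo_name|> line followed by a <|file_sep|> block for each file.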
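For repositories with more than two files, the same layout can be assembled in a loop. The helper below is a minimal sketch under that reading of the format; `build_repo_prompt`, the repository name, and the file contents are all made up for illustration. The last file is left unfinished on purpose, on the assumption that the model is expected to continue from where the prompt ends.

```python
def build_repo_prompt(repo_name, files):
    """Build a repository-level prompt.

    files: iterable of (path, content) pairs, in the order they should appear.
    """
    parts = [f"<|repo_name|>{repo_name}"]
    for path, content in files:
        # Each file starts with <|file_sep|> and its path, then its content.
        parts.append(f"<|file_sep|>{path}\n{content}")
    return "\n".join(parts)


repo_name = "demo-repo"  # hypothetical repository
files = [
    ("utils.py", "def add(a, b):\n    return a + b\n"),
    ("main.py", "from utils import add\n\nprint(add("),  # left unfinished
]
input_text = build_repo_prompt(repo_name, files)
print(input_text)
```

For two files this produces the same string as the literal template: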

    input_text = f'''<|repo_name|>{repo_name}
    <|file_sep|>{file_path1}
    {file_content1}
    <|file_sep|>{file_path2}
    {file_content2}'''

Offline Batch Inference Example

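Qwen2.5-Coder supports offline inference with vLLM:

    from transformers import AutoTokenizer
    from vllm import LLM, SamplingParams

    # Tokenizer for the base model (not used further in this minimal example).
    tokenizer = AutoTokenizer.from_pretrained("Qwen/Qwen2.5-Coder-32B")

    # Sampling settings; max_tokens caps the length of each completion.
    sampling_params = SamplingParams(temperature=0.7, top_p=0.8, repetition_penalty=1.05, max_tokens=1024)

    # Load the model into the vLLM engine.
    llm = LLM(model="Qwen/Qwen2.5-Coder-32B")

    prompt = "#write a quick sort algorithm.\ndef quick_sort("

    # generate() takes a list of prompts, so several can be processed in one batch.
    outputs = llm.generate([prompt], sampling_params)

    # Print each prompt together with its first completion.
    for output in outputs:
        prompt = output.prompt
        generated_text = output.outputs[0].text
        print(f"Prompt: {prompt!r}, Generated text: {generated_text!r}")

📘 Summary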

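The Qwen2.5-Coder series offers strong and varied code generation capabilities, giving developers and researchers a flexible set of tools. With flexible API support and a wide range of model sizes, it covers needs from basic code completion to complex code generation.

Maotouhu: follow us for the latest news on AI models!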

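Fan Perks

👉 More information: if you have questions or want to discuss anything further, feel free to get in touch through the contact card at the end of the article. I'm Maotouhu, and I look forward to talking with you! 🦉💬

- Link: [Direct link](https://zhaimengpt1.kimi.asia/list)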

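This article is part of the Tencent Cloud self-media sync exposure program (腾讯云自媒体同步曝光计划) and is shared from the author's personal site/blog.

Originally published: 2024-11-18. In case of infringement, please contact cloudcommunity@tencent.com for removal.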
