Kafka - 3.x 分区分配策略及再平衡不完全指北

Kafka - 3.x 分区分配策略及再平衡不完全指北

生产经验——分区分配策略及再平衡

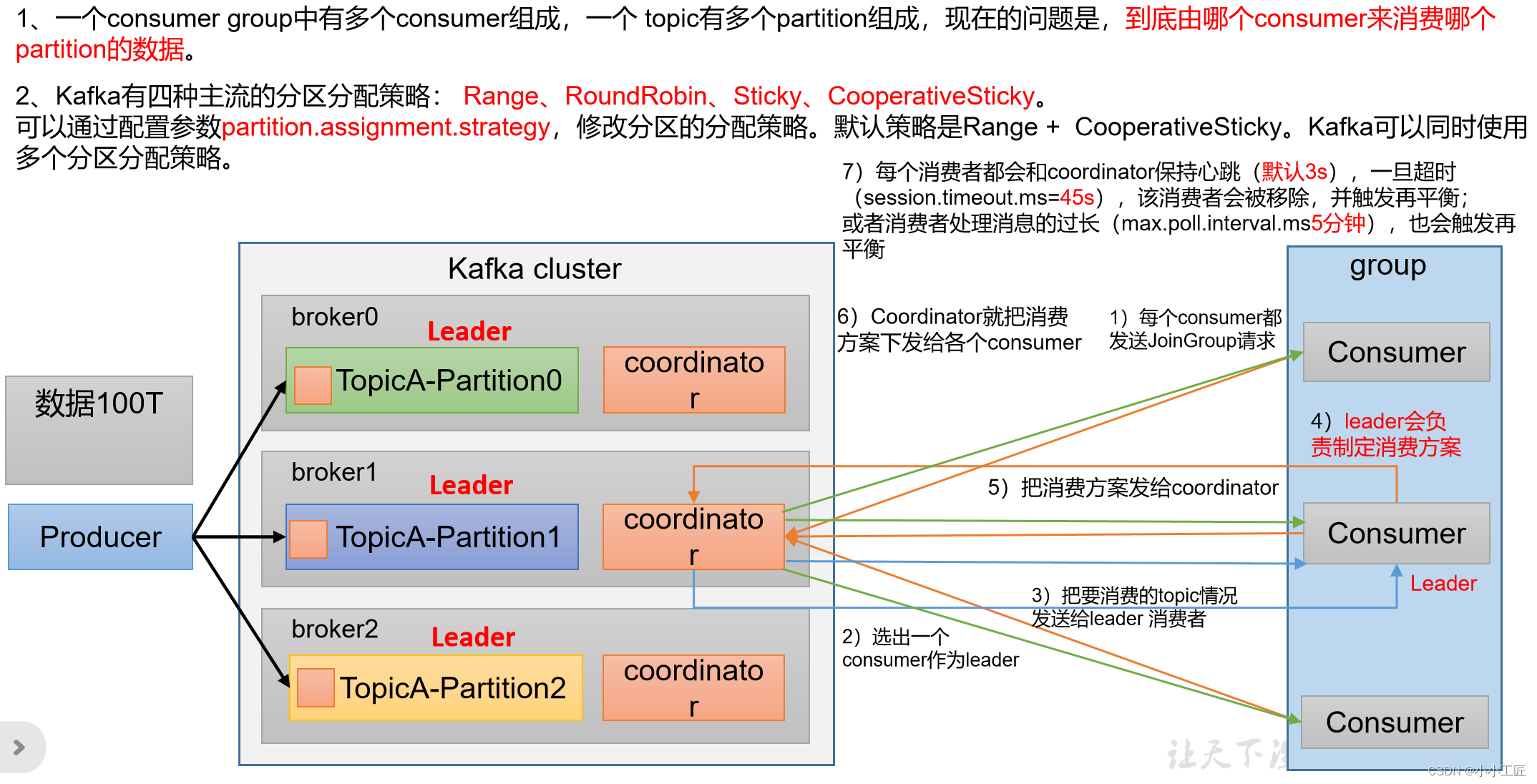

在Apache Kafka中,确定哪个Consumer消费哪个Partition的数据是由Kafka的Group Coordinator和Partition Assignment策略来管理的。以下是一些关于这个过程的详细解释:

- Consumer Group:Consumer Group是一组Consumer的集合,它们协作地消费一个或多个Kafka Topic中的数据。Consumer Group通常用于实现消息处理的负载均衡,确保每个消息被处理一次,而不被重复处理。

- Topic和Partition:Kafka Topic可以分成多个Partition,每个Partition是数据的一个子集。分割Topic成多个Partition有助于实现数据并行处理和提高吞吐量。

- Group Coordinator:Kafka集群中有一个Group Coordinator,它负责管理Consumer Group的活动。Consumer Group的成员定期与Group Coordinator通信,以确保它们的状态和分配情况。

- Partition Assignment策略:在Consumer Group启动时,Group Coordinator负责为Consumer Group的每个成员分配要消费的Partition。这个分配是根据Partition Assignment策略来完成的,Kafka提供了不同的策略来实现不同的分配方式,例如Round Robin、Range、或自定义分配策略。

- Round Robin:这是一种简单的策略,每个Consumer依次分配一个Partition,然后再循环。这样可以均匀分配Partition,但可能会导致不均衡,因为某些Partition可能比其他Partition更大或更活跃。

- Range:Range策略尝试将相邻的Partition分配给相同的Consumer,以最大程度地减少网络传输。

- 自定义策略:你也可以编写自定义的Partition Assignment策略来满足特定需求。

最终,每个Consumer会被分配一组Partition,它们将负责从这些Partition中消费数据。这个分配过程是动态的,因此如果Consumer Group的成员数目变化,或者有新的Partition被添加到Topic中,分配策略将会重新计算并分配Partition。

需要注意的是,Kafka的分配策略和Consumer Group的协调机制使得数据的消费和负载均衡变得相对容易管理,同时允许水平扩展,以适应不同规模的工作负载。

参数名称 | 描述 |

|---|---|

heartbeat.interval.ms | Kafka消费者和coordinator之间的心跳时间,默认3秒。必须小于session.timeout.ms,且不应该高于session.timeout.ms的1/3。 |

session.timeout.ms | Kafka消费者和coordinator之间连接的超时时间,默认45秒。超过该值,消费者被移除,消费者组执行再平衡。 |

max.poll.interval.ms | 消费者处理消息的最大时长,默认是5分钟。超过该值,消费者被移除,消费者组执行再平衡。 |

partition.assignment.strategy | 消费者分区分配策略,默认策略是Range + CooperativeSticky。Kafka可以同时使用多个分区分配策略。可选策略包括:Range、RoundRobin、Sticky、CooperativeSticky。 |

生产者分区分配之Range及再平衡

Range分区策略原理

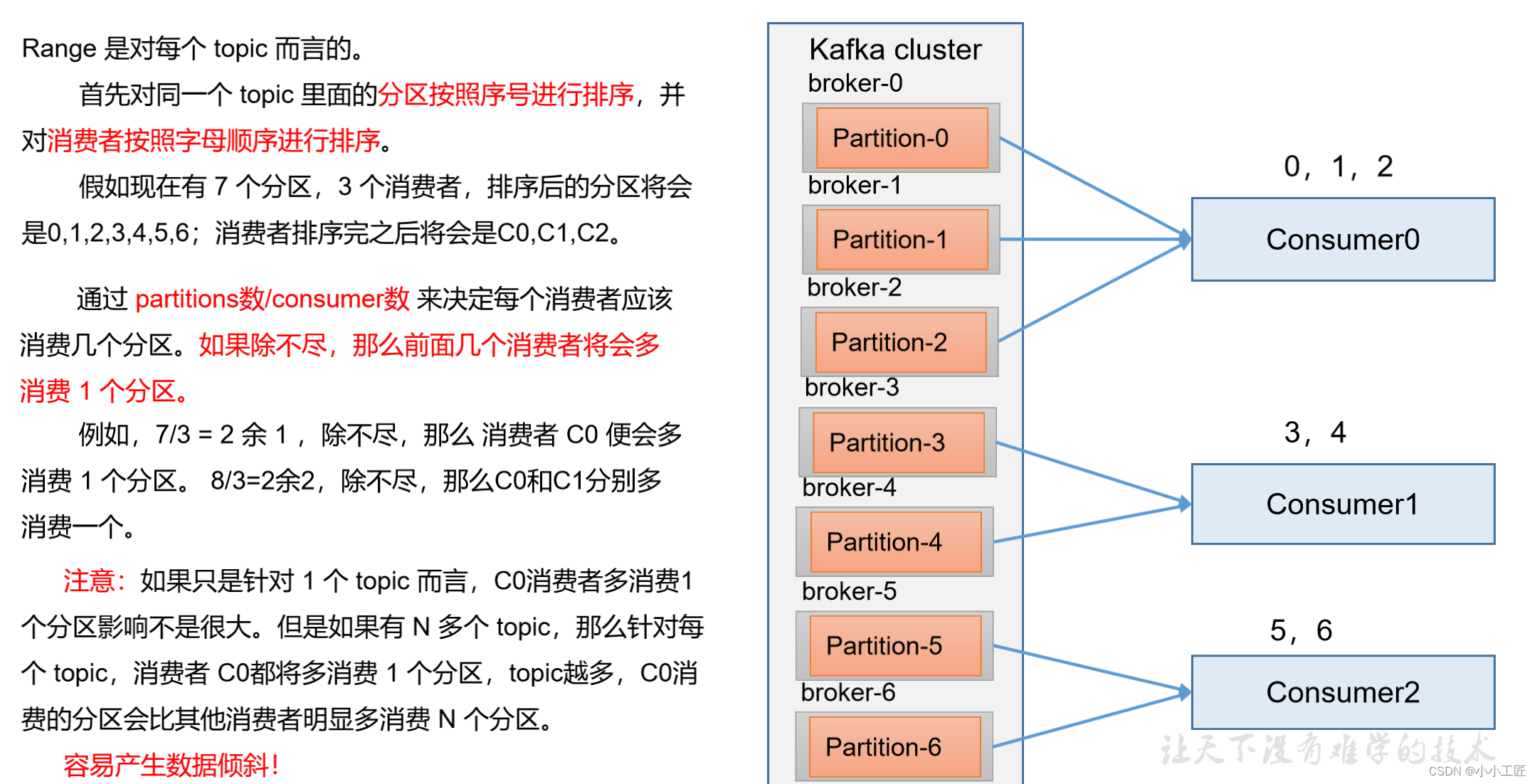

Range分区分配策略是Kafka中一种用于分配分区给消费者的策略。它的基本原理是将一组连续的分区分配给每个消费者,这样每个消费者负责一定范围内的连续分区。这种分配方式有助于减少网络传输和提高局部性,因为相邻的分区通常在相同的Broker上,并且减少了Leader的切换。

以下是Range分区分配策略的详细原理和工作流程:

- 确定可用分区:首先,消费者组需要确定可用的分区。这通常涉及到订阅一个或多个Kafka Topic,然后获取每个Topic的所有分区列表。

- 排序分区:接下来,消费者组对分区进行排序。这是为了确保所有消费者在分配分区时都能按照相同的顺序进行操作。通常,分区按照它们的分区号(Partition ID)进行升序排序。

- 确定消费者数目:消费者组需要知道有多少个消费者成员。这可以通过与Group Coordinator通信来获取成员列表,然后计算消费者的数量。

- 分配分区:现在,Range分区分配策略开始分配分区给消费者。它通过如下步骤完成: a. 将排序后的分区列表均匀地分割成若干区块,区块的数量等于消费者的数量。 b. 然后,每个消费者被分配一个区块,这个区块包含一组连续的分区。 c. 每个消费者被分配的区块是按照均匀分布的原则进行分配的,确保每个消费者的分区负载大致相等。 d. 如果分区数量无法被整除地分配给消费者数目,最后一个消费者可能会获得稍多于其他消费者的分区,以确保所有分区都分配出去。

- 分配完成:一旦分配完成,每个消费者就知道它需要消费哪些分区,以及如何访问这些分区。消费者会开始从这些分区中拉取数据,进行处理。

总的来说,Range分区分配策略通过将一组连续的分区分配给每个消费者来实现负载均衡。这有助于减少网络开销,并提高数据局部性,因为相邻的分区通常位于相同的Broker上,从而减少了Leader的切换次数,提高了整体性能。这是Kafka的默认分区分配策略之一,但你也可以选择其他策略来满足特定需求。

Range分区分配策略及再平衡案例

1)准备 ① 修改主题groupTest01的分区为7个分区

[xxx@hadoop102 kafka]$ bin/kafka-topics.sh --bootstrap-server hadoop102:9092 --alter --topic groupTest01--partitions 7② 创建3个消费者,并组成消费者组group02,消费主题groupTest01 ③ 观察分区分配情况

启动第一个消费者,观察分区分配情况

// consumer01

Successfully synced group in generation Generation{generationId=1, memberId='consumer-group02-1-4b019db6-948a-40d2-9d8d-8bbd79f59b14', protocol='range'}

Notifying assignor about the new Assignment(partitions=[groupTest01-0, groupTest01-1, groupTest01-2, groupTest01-3, groupTest01-4, groupTest01-5, groupTest01-6])

Adding newly assigned partitions: groupTest01-3, groupTest01-2, groupTest01-1, groupTest01-0, groupTest01-6, groupTest01-5, groupTest01-4

启动第二个消费者,观察分区分配情况

// consumer01

Successfully synced group in generation Generation{generationId=2, memberId='consumer-group02-1-4b019db6-948a-40d2-9d8d-8bbd79f59b14', protocol='range'}

Notifying assignor about the new Assignment(partitions=[groupTest01-0, groupTest01-1, groupTest01-2, groupTest01-3])

Adding newly assigned partitions: groupTest01-3, groupTest01-2, groupTest01-1, groupTest01-0

// consumer02

Successfully synced group in generation Generation{generationId=2, memberId='consumer-group02-1-60afd984-6916-4101-8e72-ae52fa8ded6c', protocol='range'}

Notifying assignor about the new Assignment(partitions=[groupTest01-4, groupTest01-5, groupTest01-6])

Adding newly assigned partitions: groupTest01-6, groupTest01-5, groupTest01-4

启动第三个消费者,观察分区分配情况

// consumer01

Successfully synced group in generation Generation{generationId=3, memberId='consumer-group02-1-4b019db6-948a-40d2-9d8d-8bbd79f59b14', protocol='range'}

Notifying assignor about the new Assignment(partitions=[groupTest01-0, groupTest01-1, groupTest01-2])

Adding newly assigned partitions: groupTest01-2, groupTest01-1, groupTest01-0

// consumer02

Successfully synced group in generation Generation{generationId=3, memberId='consumer-group02-1-60afd984-6916-4101-8e72-ae52fa8ded6c', protocol='range'}

Notifying assignor about the new Assignment(partitions=[groupTest01-3, groupTest01-4])

Adding newly assigned partitions: groupTest01-3, groupTest01-4

// consumer03

Successfully synced group in generation Generation{generationId=3, memberId='consumer-group02-1-fd15f50f-0a33-4e3a-8b8c-237252a41f4d', protocol='range'}

Notifying assignor about the new Assignment(partitions=[groupTest01-5, groupTest01-6])

Adding newly assigned partitions: groupTest01-6, groupTest01-5思考:如果有消费者退出,分区分配会是什么样子?

生产者分区分配之RoundRobin策略及再平衡

RoundRobin分区策略原理

RoundRobin分区分配策略是Kafka中一种常用的消费者分区分配策略,它的原理非常简单:每个消费者依次轮流分配Topic的分区,以确保分区分配是均匀的。这意味着每个消费者将依次处理不同分区的数据,然后再重新开始。

以下是RoundRobin分区分配策略的详细原理:

- 消费者加入Group:当一个新的消费者加入Consumer Group时,或者已经存在的消费者需要重新平衡分区,Group Coordinator(Kafka集群中的一个组协调器)会触发分区分配过程。

- 计算分区分配:在RoundRobin策略下,Group Coordinator会遍历Topic的所有分区,并将它们按顺序分配给消费者。假设有3个分区(Partition 0、Partition 1、Partition 2)和2个消费者(Consumer A和Consumer B),分配可能如下:

- Consumer A: Partition 0

- Consumer B: Partition 1

- Consumer A: Partition 2

- Consumer B: Partition 0

- Consumer A: Partition 1

- Consumer B: Partition 2

- 分区分配循环:这个过程会持续下去,循环分配分区,确保每个消费者都有机会消费所有分区。这也有助于实现负载均衡,因为每个消费者都会处理不同的分区,从而分散了负载。

- 动态负载均衡:如果新的消费者加入Consumer Group或有现有消费者离开,分区分配将会重新计算,以确保适应消费者的数量变化。

RoundRobin分区分配策略的优点是简单且公平,每个消费者都有相同的机会消费每个分区。然而,它可能不适用于所有情况,特别是当分区的大小或活跃度不均匀时。在这种情况下,其他分区分配策略如Range或Sticky可能更适合,它们会更精细地考虑分区的特性来实现更好的负载均衡。

RoundRobin分区分配策略及再平衡案例

- 修改消费者代码,消费者组都是group03。

- 修改分区分配策略为roundrobin

properties.put(ConsumerConfig.PARTITION_ASSIGNMENT_STRATEGY_CONFIG, RoundRobinAssignor.class.getName());依次启动3个消费者,观察控制台输出

启动消费者CustomConsumer01,观察控制台输出

// customConsumer01

Successfully synced group in generation Generation{generationId=1, memberId='consumer-group03-1-2d38c78b-b17d-4d43-93f5-4b676f703177', protocol='roundrobin'}

Notifying assignor about the new Assignment(partitions=[groupTest01-0, groupTest01-1, groupTest01-2, groupTest01-3, groupTest01-4, groupTest01-5, groupTest01-6])

Adding newly assigned partitions: groupTest01-3, groupTest01-2, groupTest01-1, groupTest01-0, groupTest01-6, groupTest01-5, groupTest01-4

启动消费者CustomConsumer02,观察控制台输出

// customConsumer01

Successfully synced group in generation Generation{generationId=2, memberId='consumer-group03-1-2d38c78b-b17d-4d43-93f5-4b676f703177', protocol='roundrobin'}

Notifying assignor about the new Assignment(partitions=[groupTest01-0, groupTest01-2, groupTest01-4, groupTest01-6])

Adding newly assigned partitions: groupTest01-2, groupTest01-0, groupTest01-6, groupTest01-4

// customConsumter02

Successfully synced group in generation Generation{generationId=2, memberId='consumer-group03-1-5771a594-4e99-47e8-9df6-ca820af6698b', protocol='roundrobin'}

Notifying assignor about the new Assignment(partitions=[groupTest01-1, groupTest01-3, groupTest01-5])

Adding newly assigned partitions: groupTest01-3, groupTest01-1, groupTest01-5

启动消费者CustomConsumer03,观察控制台输出

// customConsumer01

Successfully synced group in generation Generation{generationId=3, memberId='consumer-group03-1-2d38c78b-b17d-4d43-93f5-4b676f703177', protocol='roundrobin'}

Notifying assignor about the new Assignment(partitions=[groupTest01-0, groupTest01-3, groupTest01-6])

Adding newly assigned partitions: groupTest01-3, groupTest01-0, groupTest01-6

// customConsumter02

Successfully synced group in generation Generation{generationId=3, memberId='consumer-group03-1-5771a594-4e99-47e8-9df6-ca820af6698b', protocol='roundrobin'}

Notifying assignor about the new Assignment(partitions=[groupTest01-1, groupTest01-4])

Adding newly assigned partitions: groupTest01-1, groupTest01-4

// customConsumter03

Successfully synced group in generation Generation{generationId=3, memberId='consumer-group03-1-d493b011-d6ea-4c36-8ae5-3597db635219', protocol='roundrobin'}

Notifying assignor about the new Assignment(partitions=[groupTest01-2, groupTest01-5])

Adding newly assigned partitions: groupTest01-2, groupTest01-5思考:如果有消费者退出,分区分配会是什么样子?

生产者分区分配之Sticky及再平衡

Sticky分区策略原理

可以理解为分配的结果带有“粘性的”。即在执行一次新的分配之前,考虑上一次分配的结果,尽量少的调整分配的变动,可以节省大量的开销。粘性分区是Kafka从0.11.x版本开始引入这种分配策略,首先会尽量均衡的放置分区到消费者上面,在出现同一消费者组内消费者出现问题的时候,会尽量保持原有分配的分区不变化

"Sticky"分区分配策略是Kafka中的一种分区分配策略,用于将分区分配给消费者。它的主要目标是尽量减少分区再分配(rebalancing)的频率,以提高消费者组的稳定性。

Sticky分区分配策略的原理如下:

- 初始化分配:初始时,Kafka将分区均匀分配给消费者。每个消费者按顺序获取一个或多个分区,以确保尽可能平均地分配负载。

- 粘性分区分配:一旦分区被分配给某个消费者,该分区将尽量保持分配给同一消费者。这是策略名称"Sticky"的来源,因为它试图将分区"粘"在已经处理它的消费者上。

- 分区重新平衡:如果有新的消费者加入消费者组,或者有消费者离开,系统需要执行分区再分配。但是,Sticky策略会尽量减小重新平衡的频率。它不会立刻重新分配所有分区,而是尽量将已分配的分区保持不变,并只分配新增的分区。

- 重新平衡的触发:重新平衡可以由以下几种情况触发:

- 消费者加入或退出消费者组。

- 消费者心跳超时。

- 某个分区失去联系的情况下,可能会重新分配。

- 维持粘性:Sticky策略会尽力保持分区分配的稳定性,以减少分区再分配的次数,从而降低了整个消费者组的不稳定性。这有助于提高系统的可用性和性能。

Sticky策略的主要优点是减少了分区再分配的频率,减轻了系统的不稳定性,降低了重新平衡的成本。这对于大规模的Kafka集群和高吞吐量的消费者组特别有用。然而,它不一定适用于所有情况,因为在某些情况下,需要更频繁的重新平衡以确保公平的负载分配。因此,在选择分区分配策略时,需要根据具体的使用情况和需求来权衡。

Sticky分区分配策略及再平衡案例

- 修改分区分配策略,修改消费者组为groupTest03

// 修改分区分配策略

properties.put(ConsumerConfig.PARTITION_ASSIGNMENT_STRATEGY_CONFIG, StickyAssignor.class.getName());- 分别启动三个消费者后,查看第三次分配如下

启动消费者CustomConsumer01,观察控制台输出

// customConsumer01

Successfully synced group in generation Generation{generationId=1, memberId='consumer-group06-1-19e1e6a4-e2ca-467d-909f-3769dc527d34', protocol='sticky'}

Notifying assignor about the new Assignment(partitions=[groupTest01-0, groupTest01-1, groupTest01-2, groupTest01-3, groupTest01-4, groupTest01-5, groupTest01-6])

Adding newly assigned partitions: groupTest01-3, groupTest01-2, groupTest01-1, groupTest01-0, groupTest01-6, groupTest01-5, groupTest01-4

启动消费者CustomConsumer02,观察控制台输出

// customConsumer01

Successfully synced group in generation Generation{generationId=2, memberId='consumer-group06-1-19e1e6a4-e2ca-467d-909f-3769dc527d34', protocol='sticky'}

Notifying assignor about the new Assignment(partitions=[groupTest01-0, groupTest01-1, groupTest01-2, groupTest01-3])

Adding newly assigned partitions: groupTest01-3, groupTest01-2, groupTest01-1, groupTest01-0

// customConsumer02

Successfully synced group in generation Generation{generationId=2, memberId='consumer-group06-1-6ea3622c-bbe8-4e13-8803-d5431a224671', protocol='sticky'}

Notifying assignor about the new Assignment(partitions=[groupTest01-4, groupTest01-5, groupTest01-6])

Adding newly assigned partitions: groupTest01-6, groupTest01-5, groupTest01-4

启动消费者CustomConsumer03,观察控制台输出

// customConsumer01

Successfully synced group in generation Generation{generationId=3, memberId='consumer-group06-1-19e1e6a4-e2ca-467d-909f-3769dc527d34', protocol='sticky'}

Notifying assignor about the new Assignment(partitions=[groupTest01-0, groupTest01-1, groupTest01-2])

Adding newly assigned partitions: groupTest01-2, groupTest01-1, groupTest01-0

// customConsumer02

Successfully synced group in generation Generation{generationId=3, memberId='consumer-group06-1-6ea3622c-bbe8-4e13-8803-d5431a224671', protocol='sticky'}

Notifying assignor about the new Assignment(partitions=[groupTest01-4, groupTest01-5])

Adding newly assigned partitions: groupTest01-5, groupTest01-4

// customConsumer03

Successfully synced group in generation Generation{generationId=3, memberId='consumer-group06-1-eb0ac20c-5d94-43a7-b7c1-96a561513995', protocol='sticky'}

Notifying assignor about the new Assignment(partitions=[groupTest01-3, groupTest01-6])

Adding newly assigned partitions: groupTest01-3, groupTest01-6

3) 杀死消费者CustomConsumer01后,观察控制台输出

// customConsumer02

Successfully synced group in generation Generation{generationId=4, memberId='consumer-group06-1-6ea3622c-bbe8-4e13-8803-d5431a224671', protocol='sticky'}

Notifying assignor about the new Assignment(partitions=[groupTest01-4, groupTest01-5, groupTest01-0, groupTest01-2])

Adding newly assigned partitions: groupTest01-2, groupTest01-0, groupTest01-5, groupTest01-4

// customConsumer03

Successfully synced group in generation Generation{generationId=4, memberId='consumer-group06-1-eb0ac20c-5d94-43a7-b7c1-96a561513995', protocol='sticky'}

Notifying assignor about the new Assignment(partitions=[groupTest01-3, groupTest01-6, groupTest01-1])

Adding newly assigned partitions: groupTest01-3, groupTest01-1, groupTest01-6