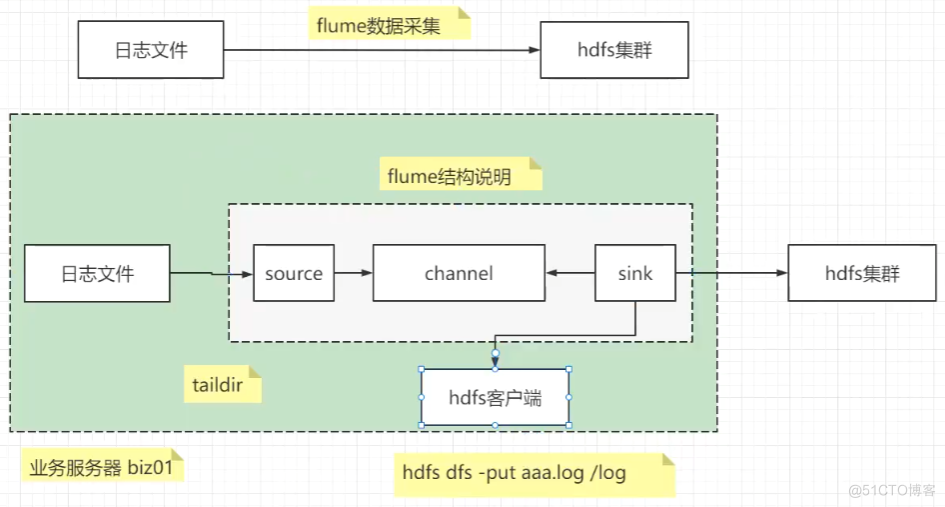

Installing the Flume Data Collection Software

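Prerequisites:

- The business server (biz01) must have the Hadoop client installed

Install the Hadoop cluster client

Copy the client from the hadoop01 node to biz01 directly with scp.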

# Run on hadoop01

cd /bigdata/server

scp -r hadoop/ biz01:$PWD

# Map the hostnames in /etc/hosts

vi /etc/hosts

192.168.113.145 hadoop01 hadoop01

192.168.113.146 hadoop02 hadoop02

192.168.113.147 hadoop03 hadoop03

192.168.113.148 biz01 biz01
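After editing the file, a quick check that every hostname is actually present can save a failed scp later. The following is a minimal sketch; the helper name `missing_hosts` is made up for this illustration and is not part of any tool.

```shell
#!/bin/bash
# Report which of the given hostnames are missing from a hosts file.
# Usage: missing_hosts FILE HOST...
# (missing_hosts is a hypothetical helper written for this guide.)
missing_hosts() {
  local file=$1
  shift
  local host
  for host in "$@"; do
    # Skip comment lines, then look for the name in any column after the IP.
    if ! awk -v h="$host" '
        /^[ \t]*#/ { next }
        { for (i = 2; i <= NF; i++) if ($i == h) found = 1 }
        END { exit found ? 0 : 1 }' "$file"; then
      echo "$host"
    fi
  done
}

# Check the four hosts used in this guide; prints nothing when all are present.
if [ -r /etc/hosts ]; then
  missing_hosts /etc/hosts hadoop01 hadoop02 hadoop03 biz01
fi
```

Any name that is printed still needs an entry in /etc/hosts on the machine where the check was run.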

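Install the Flume data collection software

Flume can be downloaded directly from the official site, https://flume.apache.org/ (choose Download in the left-hand menu).

Install Flume on biz01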

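# 1 Upload apache-flume-1.10.1-bin.tar.gz to the /bigdata/soft directory

# 2 Extract it to the target directory

cd /bigdata/soft

tar -zxvf apache-flume-1.10.1-bin.tar.gz -C /bigdata/server/

# 3 Rename the directory

cd /bigdata/server

mv apache-flume-1.10.1-bin/ flume

Configure environment variables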

vi /etc/profile.d/customer_env.sh

#!/bin/bash

#JAVA_HOME

export JAVA_HOME=/bigdata/server/jdk1.8

export PATH=$PATH:$JAVA_HOME/bin

#FLUME_HOME

export FLUME_HOME=/bigdata/server/flume

export PATH=$PATH:$FLUME_HOME/bin

#HADOOP_HOME

export HADOOP_HOME=/bigdata/server/hadoop

export PATH=$PATH:$HADOOP_HOME/bin

# Reload the profile

source /etc/profile

flume-ng

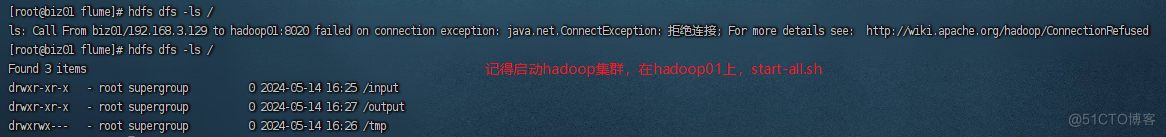

Test the environment

# Test the Hadoop environment

hdfs dfs -ls /
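If the listing fails with "command not found", the profile script was probably not loaded in the current shell. As a small sketch, the check below reports which commands do not resolve on PATH; `missing_commands` is a hypothetical helper name, and the only assumption is that `java`, `hdfs`, and `flume-ng` live in the `bin` directories exported earlier.

```shell
#!/bin/bash
# Print every command from the argument list that cannot be found on PATH.
# Returns 0 only when all of them resolve.
# (missing_commands is a hypothetical helper written for this guide.)
missing_commands() {
  local cmd
  local status=0
  for cmd in "$@"; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      echo "not on PATH: $cmd"
      status=1
    fi
  done
  return "$status"
}

# On biz01, after sourcing the profile, one would expect this to succeed:
#   missing_commands java hdfs flume-ng && hdfs dfs -ls /
```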

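Configure Flume to collect data

Add an ETL interceptor to the lib directory

- It handles data in standard JSON format; records that do not match the format are filtered out: {"key":"value","key2":"value2",...}

- It handles timestamp drift, so that each log record is stored in the partition it belongs to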
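The interceptor's source is not shown here, so the following is only a shell approximation of the two behaviours for experimenting with sample log lines. It assumes that "standard JSON" can be roughly pre-checked by looking for a line wrapped in braces (this is not real JSON validation), and that the event time is available as epoch milliseconds, which is the unit Flume's `timestamp` header uses. Both function names are invented for this sketch.

```shell
#!/bin/bash
# Crude stand-in for the ETL filter: keep only lines that start with "{" and
# end with "}". This is NOT full JSON validation, just a quick pre-check.
keep_json_like() {
  grep -E '^[[:space:]]*\{.*\}[[:space:]]*$'
}

# Map an event time in epoch milliseconds to a %Y-%m-%d partition name.
# Relies on GNU date (-d @SECONDS); the time zone is taken from TZ.
partition_for() {
  local millis=$1
  date -d "@$((millis / 1000))" +%Y-%m-%d
}
```

For example, with `TZ=UTC`, an event stamped 1716249599999 (one millisecond before midnight on 2024-05-20) maps to the 2024-05-20 partition even if it reaches the sink after midnight, which is the drift case the second bullet describes.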

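Directory: /bigdata/server/flume/lib

Add itercepter-etl.jar to Flume's lib directory on the business server.

After adding it, check that it is there: find iter*

Configuration for collecting data into HDFS files

Work in Flume's jobs directory; if the directory does not exist, create it.

Create the file jobs/log_file_to_hdfs.conf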
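A minimal sketch for creating the directories up front. Besides `jobs`, it also creates `logs`, because the background start command later in this guide redirects its output to `/bigdata/server/flume/logs/` and a shell redirect fails when the target directory is missing. The function name is hypothetical; the default path is the install location used in this guide.

```shell
#!/bin/bash
# Create the directories used by this guide if they are missing.
# The Flume home is taken from the first argument, then from FLUME_HOME,
# then from the install path used in this guide.
# (prepare_flume_dirs is a hypothetical helper, not a Flume command.)
prepare_flume_dirs() {
  local home="${1:-${FLUME_HOME:-/bigdata/server/flume}}"
  mkdir -p "$home/jobs" "$home/logs"
}

# Usage on biz01:
#   prepare_flume_dirs
```

`mkdir -p` does nothing when a directory already exists, so the call is safe to repeat.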

# Name the components

a1.sources = r1

a1.channels = c1

a1.sinks = k1

# Describe the source

a1.sources.r1.type = TAILDIR

a1.sources.r1.filegroups = f1

a1.sources.r1.filegroups.f1 = /bigdata/data/log/behavior/.*

a1.sources.r1.positionFile = /bigdata/server/flume/position/behavior/taildir_position.json

a1.sources.r1.interceptors = i1 i2

a1.sources.r1.interceptors.i1.type = cn.wolfcode.flume.interceptor.ETLInterceptor$Builder

a1.sources.r1.interceptors.i2.type = cn.wolfcode.flume.interceptor.TimeStampInterceptor$Builder

## channel1

a1.channels.c1.type = memory

a1.channels.c1.capacity = 1000

a1.channels.c1.transactionCapacity = 100

## sink1

a1.sinks.k1.type = hdfs

a1.sinks.k1.hdfs.path = /behavior/origin/log/%Y-%m-%d

a1.sinks.k1.hdfs.filePrefix = log-

a1.sinks.k1.hdfs.round = false

a1.sinks.k1.hdfs.rollInterval = 10

a1.sinks.k1.hdfs.rollSize = 134217728

a1.sinks.k1.hdfs.rollCount = 0

## Write the output as raw (uncompressed) data files.

a1.sinks.k1.hdfs.fileType = DataStream

## Wire the components together

a1.sources.r1.channels = c1

a1.sinks.k1.channel = c1

Run the data collection command
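Before starting the agent, it may be worth checking the config file for keys that are assigned more than once. A Flume agent file is a Java properties file, so when a key such as `a1.sources.r1.interceptors` appears twice only the last value is kept; an interceptor chain has to be listed under one key as space-separated names. The check below is a sketch with a made-up function name, and it only understands simple `key = value` lines.

```shell
#!/bin/bash
# Print every property key that is assigned more than once in a properties
# file. Comment lines (#) and blank lines are skipped.
# (duplicate_keys is a hypothetical helper written for this guide.)
duplicate_keys() {
  awk '
    /^[ \t]*(#|$)/ { next }
    {
      key = $0
      sub(/[ \t]*=.*/, "", key)          # drop "= value"
      sub(/^[ \t]+/, "", key)            # drop leading blanks
      if (seen[key]++ == 1) print key    # report once, on the second sighting
    }' "$1"
}

# Usage from the Flume directory:
#   duplicate_keys jobs/log_file_to_hdfs.conf
```

No output means every key is unique.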

# 进入到Flume的目录

cd /bigdata/server/flumebin/flume-ng agent --conf conf/ --name a1 --conf-file jobs/log_file_to_hdfs.conf -Dflume.root.logger=INFO,console # 后台启动运行

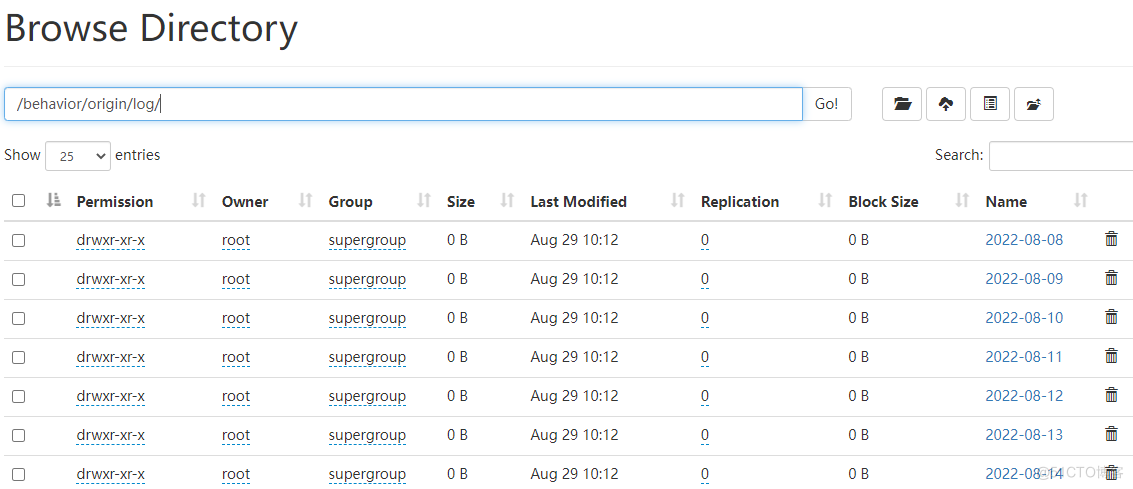

nohup bin/flume-ng agent --conf conf/ --name a1 --conf-file jobs/log_file_to_hdfs.conf -Dflume.root.logger=INFO,console >/bigdata/server/flume/logs/log_file_to_hdfs.log 2>&1 &日志采集效果
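One way to confirm the result from the command line, as a sketch: build the directory that the sink's `hdfs.path` pattern produces for a given day and list it. The helper name is invented, and the default of "today" assumes the collected records carry today's timestamps; pass another date for older logs.

```shell
#!/bin/bash
# Build the HDFS directory that the /behavior/origin/log/%Y-%m-%d pattern
# yields for a given date (default: today, in the local time zone).
# (hdfs_log_dir is a hypothetical helper written for this guide.)
hdfs_log_dir() {
  local day="${1:-$(date +%Y-%m-%d)}"
  echo "/behavior/origin/log/$day"
}

# List the collected files when the hdfs client is available on this machine.
if command -v hdfs >/dev/null 2>&1; then
  hdfs dfs -ls "$(hdfs_log_dir)"
fi
```

Files that the sink is still writing normally carry a `.tmp` suffix until they are rolled, so with `rollInterval = 10` it can take a few seconds before finished `log-` files appear.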

Originally published 2024-05-20 on the author's personal site/blog and shared through the Tencent Cloud self-media syndication program.
