[C++] Test code for onnxruntime_gpu after installing it on Ubuntu


云未归来

Published 2025-07-18 15:56:07


Test environment:

- Ubuntu 20.04
- onnxruntime_gpu 1.18.0
- CUDA 12.0 + cuDNN 8.9.2

Test code:

CMakeLists.txt

```cmake
cmake_minimum_required(VERSION 3.10)
project(onnxruntime_gpu_test)

set(CMAKE_CXX_STANDARD 17)

option(USE_CUDA "Enable CUDA support" ON)

if (NOT APPLE AND USE_CUDA)
    find_package(CUDA REQUIRED)
    include_directories(${CUDA_INCLUDE_DIRS})
    add_definitions(-DUSE_CUDA)
else ()
    set(USE_CUDA OFF)
endif ()

# Path to the extracted ONNX Runtime GPU release (adjust to your system)
set(ONNXRUNTIME_DIR /home/drose/onnxruntime-linux-x64-gpu-1.18.0)

# ONNX Runtime headers
include_directories(${ONNXRUNTIME_DIR}/include)

# Add the executable
add_executable(${PROJECT_NAME} main.cpp)

target_link_libraries(${PROJECT_NAME} ${ONNXRUNTIME_DIR}/lib/libonnxruntime.so)

if (USE_CUDA)
    target_link_libraries(${PROJECT_NAME} ${CUDA_LIBRARIES})
endif ()
```

main.cpp

#include <iostream>

#include <vector>

#include <onnxruntime_cxx_api.h>

int main() {

// 初始化ONNX Runtime环境

Ort::Env env(ORT_LOGGING_LEVEL_WARNING, "ONNXRuntimeGpuTest");

Ort::SessionOptions session_options;

// 配置GPU选项

OrtCUDAProviderOptions cuda_options;

session_options.AppendExecutionProvider_CUDA(cuda_options);

// 设置线程数(可选)

session_options.SetIntraOpNumThreads(1);

// 加载模型

const char* model_path = "model.onnx"; // 替换为你的模型路径

Ort::Session session(env, model_path, session_options);

size_t inputNodeCount= session.GetInputCount();

std::cout << "输入节点数量:" << inputNodeCount << "\n";

Ort::AllocatorWithDefaultOptions allocator;

std::shared_ptr<char> inputName = std::move(session.GetInputNameAllocated(0, allocator));

std::vector<char*> inputNodeNames;

inputNodeNames.push_back(inputName.get());

std::cout << "输入节点名称:" << inputName << "\n";

Ort::TypeInfo inputTypeInfo = session.GetInputTypeInfo(0);

auto input_tensor_info = inputTypeInfo.GetTensorTypeAndShapeInfo();

ONNXTensorElementDataType inputNodeDataType = input_tensor_info.GetElementType();

std::vector<int64_t> inputTensorShape = input_tensor_info.GetShape();

std::cout << "输入节点shape:";

for (int i = 0; i<inputTensorShape.size(); i++)

{

std::cout << inputTensorShape[i]<<" ";

}

std::cout << "\n";

//获取输出节点数量、名称和shape

size_t outputNodeCount = session.GetOutputCount();

std::cout << "输出节点数量:" << outputNodeCount << "\n";

std::shared_ptr<char> outputName = std::move(session.GetOutputNameAllocated(0, allocator));

std::vector<char*> outputNodeNames;

outputNodeNames.push_back(outputName.get());

std::cout << "输出节点名称:" << outputName << "\n";

Ort::TypeInfo type_info_output0(nullptr);

type_info_output0 = session.GetOutputTypeInfo(0); //output0

auto tensor_info_output0 = type_info_output0.GetTensorTypeAndShapeInfo();

ONNXTensorElementDataType outputNodeDataType = tensor_info_output0.GetElementType();

std::vector<int64_t> outputTensorShape = tensor_info_output0.GetShape();

std::cout << "输出节点shape:";

for (int i = 0; i<outputTensorShape.size(); i++)

{

std::cout << outputTensorShape[i]<<" ";

}

std::cout << "\n";

return 0;

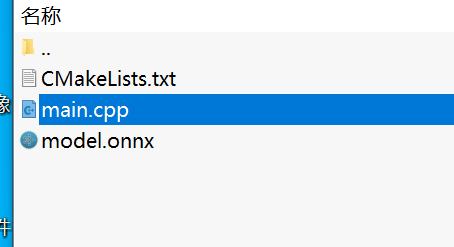

}文件结构:

model.onnx is the official YOLOv8 `.pt` model exported to ONNX; any other ONNX model works as well.
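Once main.cpp has printed the input and output shapes, a real inference program usually has to size buffers from them. The sketch below is only an illustration: `ElementCount` and `Yolov8CandidateCount` are hypothetical helpers, not ONNX Runtime APIs. It relies on ONNX Runtime reporting dynamic dimensions as -1, and the YOLOv8 part assumes a default detection export with a square input and strides 8, 16 and 32.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical helper (not part of ONNX Runtime): number of elements in a
// tensor whose shape came from GetTensorTypeAndShapeInfo().GetShape().
// Dynamic (symbolic) dimensions are reported as -1 and are replaced here by
// `dynamic_value`, e.g. the batch size you intend to feed.
int64_t ElementCount(const std::vector<int64_t>& shape, int64_t dynamic_value = 1) {
    int64_t count = 1;
    for (int64_t dim : shape) {
        assert(dim >= -1 && "unexpected negative dimension");
        count *= (dim == -1) ? dynamic_value : dim;
    }
    return count;
}

// Hypothetical helper: number of candidate boxes a YOLOv8 detection head emits
// for a square input of `input_size` pixels, assuming the default strides
// 8, 16 and 32 (one candidate per grid cell at each stride).
int64_t Yolov8CandidateCount(int64_t input_size) {
    const int64_t strides[] = {8, 16, 32};
    int64_t total = 0;
    for (int64_t stride : strides) {
        const int64_t cells = input_size / stride;  // grid cells per side
        total += cells * cells;
    }
    return total;
}
```

For a float32 model, `std::vector<float> input(ElementCount(inputTensorShape));` would size the input buffer from the shape main.cpp prints. Under the same YOLOv8 assumptions, an output shape of `1 84 8400` reads as 4 box coordinates plus 80 class scores (COCO classes) for each of the 80×80 + 40×40 + 20×20 = 8400 candidates at a 640×640 input; a model trained on a different number of classes will show a different second dimension.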

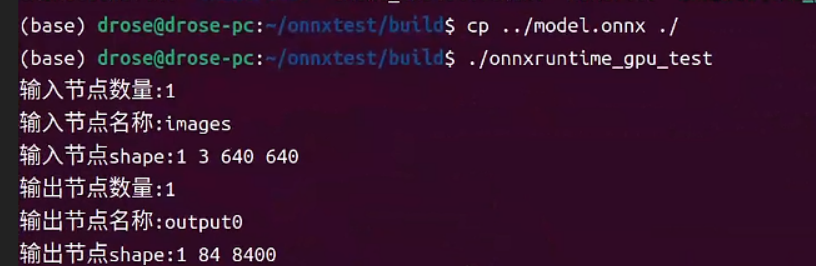

Final output:

This article is shared from the author's personal site/blog as part of the Tencent Cloud self-media sync program. Originally published: 2025-07-15.
