Cora Graph Neural Network with PyTorch


Tom2Code

Published 2024-02-01 12:37:47


I recently got my hands on some of Datawhale's internal study materials, so I'm sharing what I learn as I go.

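The first error came up right when loading the dataset. Planetoid tries to download the Cora dataset from https://github.com/kimiyoung/planetoid/raw/master/data/ind.cora.x, but without a VPN the site can't be reached, so the download fails with:

"TimeoutError: [WinError 10060] A connection attempt failed because the connected party did not properly respond after a period of time, or established connection failed because connected host has failed to respond."

So we have to download the dataset manually and extract it into the corresponding folder: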
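For reference, PyG's `Planetoid` loader reads eight raw files named `ind.cora.<suffix>` from `<root>/Cora/raw/` (with `root='D:/data/'` as in the code below, that is `D:/data/Cora/raw/`). The sketch below uses two hypothetical helpers, `expected_raw_files` and `missing_raw_files`, to list which of those files are still missing after a manual download. The suffix list is assumed to match the files in the planetoid repository's `data/` folder.

```python
import os

# Suffixes of the raw Planetoid files (ind.cora.x, ind.cora.tx, ...);
# assumed to match the file names the PyG Planetoid loader expects.
PLANETOID_SUFFIXES = ["x", "tx", "allx", "y", "ty", "ally", "graph", "test.index"]


def expected_raw_files(root, name="Cora"):
    """Hypothetical helper: full paths of the raw files under <root>/<name>/raw/."""
    raw_dir = os.path.join(root, name, "raw")
    return [os.path.join(raw_dir, f"ind.{name.lower()}.{s}") for s in PLANETOID_SUFFIXES]


def missing_raw_files(root, name="Cora"):
    """Hypothetical helper: the expected raw files that do not exist on disk yet."""
    return [p for p in expected_raw_files(root, name) if not os.path.isfile(p)]


if __name__ == "__main__":
    missing = missing_raw_files("D:/data/", "Cora")
    if missing:
        print("Still missing:")
        for path in missing:
            print("  " + path)
    else:
        print("All raw Cora files are in place.")
```

Once the list is empty, `Planetoid(root='D:/data/', name='Cora')` should skip the download step and go straight to processing.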

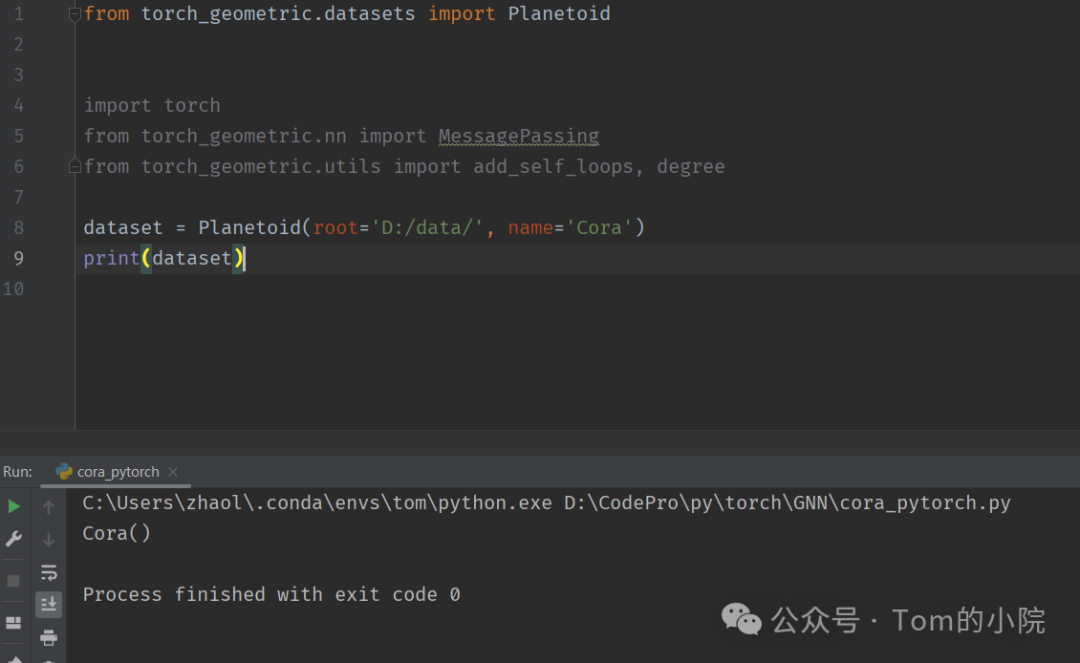

First, here is the code for loading the dataset:

```python
from torch_geometric.datasets import Planetoid
import torch
from torch_geometric.nn import MessagePassing
from torch_geometric.utils import add_self_loops, degree

dataset = Planetoid(root='D:/data/', name='Cora')
print(dataset)
```

Let's print some properties of the dataset:

```python
from torch_geometric.datasets import Planetoid
from torch_geometric.transforms import NormalizeFeatures

# NormalizeFeatures row-normalizes each node's feature vector so it sums to 1.
dataset = Planetoid(root='D:/data/', name='Cora', transform=NormalizeFeatures())

print()
print(f'Dataset: {dataset}')
print('======================')
print(f'Number of graphs: {len(dataset)}')
print(f'Number of features: {dataset.num_features}')
print(f'Number of classes: {dataset.num_classes}')

data = dataset[0]  # Get the first graph object.

print()
print(data)
print('======================')

# Gather some statistics about the graph.
print(f'Number of nodes: {data.num_nodes}')
print(f'Number of edges: {data.num_edges}')
print(f'Average node degree: {data.num_edges / data.num_nodes:.2f}')
print(f'Number of training nodes: {data.train_mask.sum()}')
print(f'Training node label rate: {int(data.train_mask.sum()) / data.num_nodes:.2f}')
# PyG 2.x names these has_*(); older releases used contains_isolated_nodes() / contains_self_loops().
print(f'Contains isolated nodes: {data.has_isolated_nodes()}')
print(f'Contains self-loops: {data.has_self_loops()}')
print(f'Is undirected: {data.is_undirected()}')
```

Output:

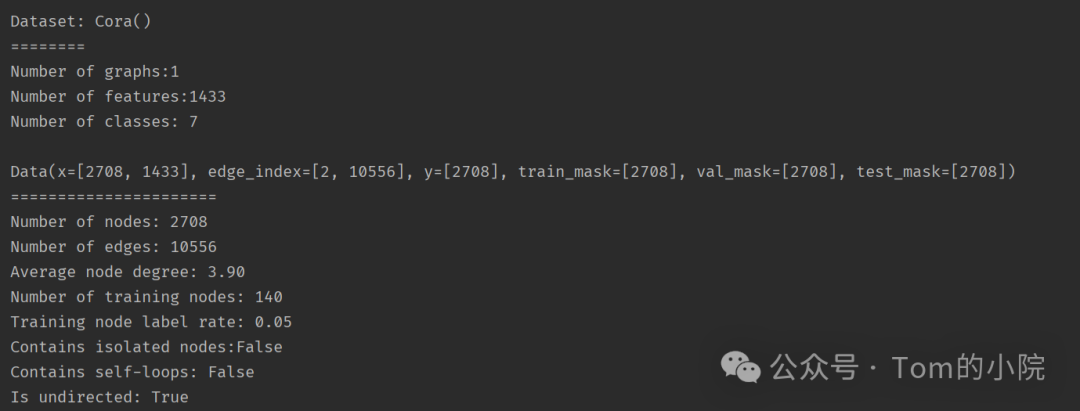

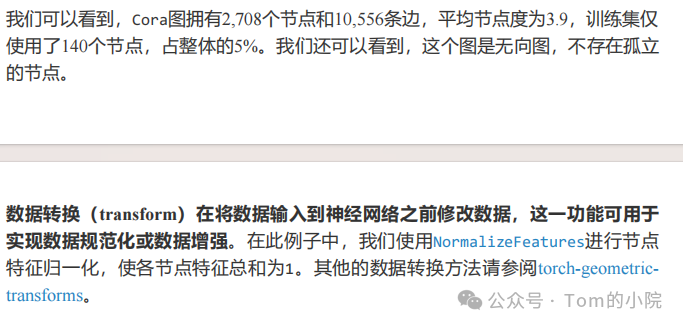

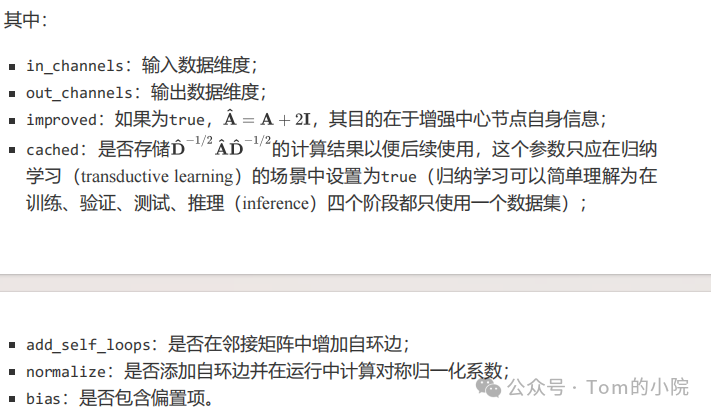
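The statistics above can be reproduced from `edge_index` alone. In PyG, `edge_index` holds (source, target) pairs in a 2 x E layout, and an undirected graph such as Cora stores every edge in both directions, which is why `num_edges / num_nodes` equals the average node degree. The pure-Python sketch below (hypothetical function `graph_stats`, plain lists instead of tensors, a made-up toy graph) computes the same quantities to show what the `has_isolated_nodes()`, `has_self_loops()` and `is_undirected()` checks look at. It is an illustration, not PyG's implementation.

```python
def graph_stats(edge_index, num_nodes):
    """Hypothetical helper mirroring some PyG Data statistics.

    edge_index: [sources, targets], two equal-length lists (PyG's 2 x E layout).
    """
    src, dst = edge_index
    num_edges = len(src)

    # Count outgoing edges per node; for an undirected graph stored in both
    # directions this equals the node degree.
    degree = [0] * num_nodes
    for s in src:
        degree[s] += 1

    # A node is isolated if it touches no edge; self-loops do not count as connections here.
    touched = {s for s, d in zip(src, dst) if s != d} | {d for s, d in zip(src, dst) if s != d}
    has_isolated = any(n not in touched for n in range(num_nodes))

    has_self_loops = any(s == d for s, d in zip(src, dst))

    # Undirected: every stored edge (s, d) has a matching reverse edge (d, s).
    edges = set(zip(src, dst))
    is_undirected = all((d, s) in edges for s, d in edges)

    return {
        "num_edges": num_edges,
        "avg_degree": num_edges / num_nodes,
        "degree": degree,
        "has_isolated_nodes": has_isolated,
        "has_self_loops": has_self_loops,
        "is_undirected": is_undirected,
    }


# Toy graph: undirected edges 0-1 and 1-2, each stored in both directions; node 3 is isolated.
toy_edge_index = [[0, 1, 1, 2], [1, 0, 2, 1]]
stats = graph_stats(toy_edge_index, num_nodes=4)
print(stats)
```

On this toy graph the four stored directed edges over four nodes give an average degree of 1.0, and node 3 is reported as isolated.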

The study material introduces it like this:

Next, let's try a plain MLP to see how it performs:

class MLP(torch.nn.Module):

def __init__(self, hidden_channels):

super(MLP, self).__init__()

torch.manual_seed(12345)

self.lin1 = Linear(dataset.num_features, hidden_channels)

self.lin2 = Linear(hidden_channels, dataset.num_classes)

def forward(self, x):

x = self.lin1(x)

x = x.relu()

x = F.dropout(x, p=0.5, training=self.training)

x = self.lin2(x)

return x

model = MLP(hidden_channels=16)

print(model)

model = MLP(hidden_channels=16)

criterion = torch.nn.CrossEntropyLoss() # Define loss criterion.

optimizer = torch.optim.Adam(model.parameters(), lr=0.01,weight_decay=5e-4) # Define optimizer.

def train():

model.train()

optimizer.zero_grad() # Clear gradients.

out = model(data.x) # Perform a single forward pass.

loss = criterion(out[data.train_mask],data.y[data.train_mask]) # Compute the loss solely based on the

loss.backward() # Derive gradients.

optimizer.step() # Update parameters based on gradients.

return loss

for epoch in range(1, 201):

loss = train()

print(f'Epoch: {epoch:03d}, Loss: {loss:.4f}')

def test():

model.eval()

out = model(data.x)

pred = out.argmax(dim=1) # Use the class with highest probability.

test_correct = pred[data.test_mask] == data.y[data.test_mask]

# Check against ground-truth labels.

test_acc = int(test_correct.sum()) / int(data.test_mask.sum())

# Derive ratio of correct predictions.

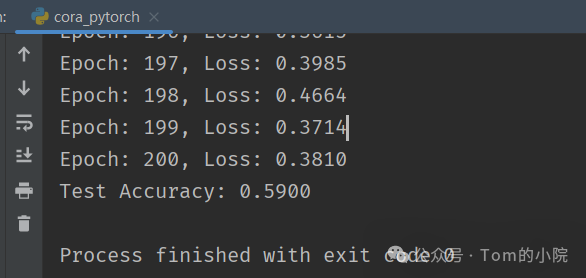

return test_acc输出:

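As we can see, the results are poor. An important reason is that only a small fraction of nodes are labeled (see the training node label rate printed above), so the model overfits during training.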
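One way to see the overfitting is to compute accuracy on the training mask and on the test mask with the same predictions: a large gap means the model has memorized the few labeled nodes rather than learned something that generalizes. The `test()` function above does the test-mask half of this with tensors. Below is a plain-Python sketch of the same masked-accuracy computation; the helper name `masked_accuracy` and the toy labels are made up for illustration.

```python
def masked_accuracy(pred, y, mask):
    """Fraction of correct predictions among the nodes where mask is True.

    Same logic as test(): int((pred[mask] == y[mask]).sum()) / int(mask.sum()).
    """
    selected = [p == t for p, t, m in zip(pred, y, mask) if m]
    if not selected:
        raise ValueError("mask selects no nodes")
    return sum(selected) / len(selected)


# Toy example with 6 nodes: nodes 0-1 play the role of training nodes, nodes 2-5 of test nodes.
pred = [0, 1, 2, 2, 1, 0]
y = [0, 1, 2, 0, 0, 0]
train_mask = [True, True, False, False, False, False]
test_mask = [False, False, True, True, True, True]

train_acc = masked_accuracy(pred, y, train_mask)
test_acc = masked_accuracy(pred, y, test_mask)
print(f"train acc = {train_acc:.2f}, test acc = {test_acc:.2f}")  # train acc = 1.00, test acc = 0.50
```

With the real model, passing `data.train_mask` instead of `data.test_mask` inside `test()` gives the training-side number for the same comparison.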

Next, let's introduce graph convolutional networks (GCNs):

Now let's build our graph neural network:
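For reference, the layer implemented by PyG's `GCNConv` follows the propagation rule of Kipf & Welling (2017): add self-loops to the adjacency matrix, normalize it symmetrically by node degree, then apply a learned linear transform. The ReLU and dropout in the model code are applied between layers, not inside `GCNConv` itself.

$$
\mathbf{X}' = \hat{\mathbf{D}}^{-1/2}\,\hat{\mathbf{A}}\,\hat{\mathbf{D}}^{-1/2}\,\mathbf{X}\,\mathbf{\Theta},
\qquad \hat{\mathbf{A}} = \mathbf{A} + \mathbf{I},
\qquad \hat{D}_{ii} = \sum_{j} \hat{A}_{ij}
$$

Written per node, this is

$$
\mathbf{x}'_i = \mathbf{\Theta}^{\top} \sum_{j \in \mathcal{N}(i) \cup \{i\}} \frac{1}{\sqrt{\hat{d}_j\,\hat{d}_i}}\,\mathbf{x}_j ,
$$

so each node averages its own features with its neighbors' features, weighting neighbors with many connections less.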

```python
import torch
import torch.nn.functional as F
from torch_geometric.nn import GCNConv

class GCN(torch.nn.Module):
    def __init__(self, hidden_channels):
        super(GCN, self).__init__()
        torch.manual_seed(12345)
        self.conv1 = GCNConv(dataset.num_features, hidden_channels)
        self.conv2 = GCNConv(hidden_channels, dataset.num_classes)

    def forward(self, x, edge_index):
        x = self.conv1(x, edge_index)
        x = x.relu()
        x = F.dropout(x, p=0.5, training=self.training)
        x = self.conv2(x, edge_index)
        return x
```
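To make the normalization inside `GCNConv` concrete, here is a plain-Python sketch of one propagation step written edge by edge, in the spirit of the `MessagePassing`, `add_self_loops` and `degree` utilities imported at the start: add self-loops, count each node's degree, then let every node sum its neighbors' transformed features scaled by 1/sqrt(deg_i * deg_j). The function name `gcn_propagate` is hypothetical, and bias and edge weights are deliberately left out.

```python
import math


def gcn_propagate(x, edge_index, weight):
    """One GCN step without bias: x'_i = sum over j in N(i) plus i of (W^T x_j) / sqrt(d_i * d_j).

    x: list of feature vectors (num_nodes x in_dim)
    edge_index: [sources, targets]; an undirected graph stores each edge in both directions
    weight: in_dim x out_dim matrix as nested lists
    """
    num_nodes = len(x)
    src, dst = list(edge_index[0]), list(edge_index[1])

    # 1) Add a self-loop to every node (A_hat = A + I).
    src += range(num_nodes)
    dst += range(num_nodes)

    # 2) Degree of each node, counted on the target side after adding self-loops.
    deg = [0] * num_nodes
    for d in dst:
        deg[d] += 1

    # 3) Linear transform first: h_j = W^T x_j.
    out_dim = len(weight[0])
    h = [[sum(xj[k] * weight[k][c] for k in range(len(xj))) for c in range(out_dim)] for xj in x]

    # 4) Messages: each edge j -> i adds h_j / sqrt(deg_j * deg_i) to node i.
    out = [[0.0] * out_dim for _ in range(num_nodes)]
    for j, i in zip(src, dst):
        norm = 1.0 / math.sqrt(deg[j] * deg[i])
        for c in range(out_dim):
            out[i][c] += norm * h[j][c]
    return out


# Two nodes joined by one undirected edge (stored as 0->1 and 1->0), 1-d features.
print(gcn_propagate([[1.0], [3.0]], [[0, 1], [1, 0]], [[1.0]]))  # [[2.0], [2.0]]
```

In the two-node example both nodes have degree 2 after self-loops, so each ends up with the plain average of the two feature values, which is the smoothing effect that lets a GCN use neighborhood information.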

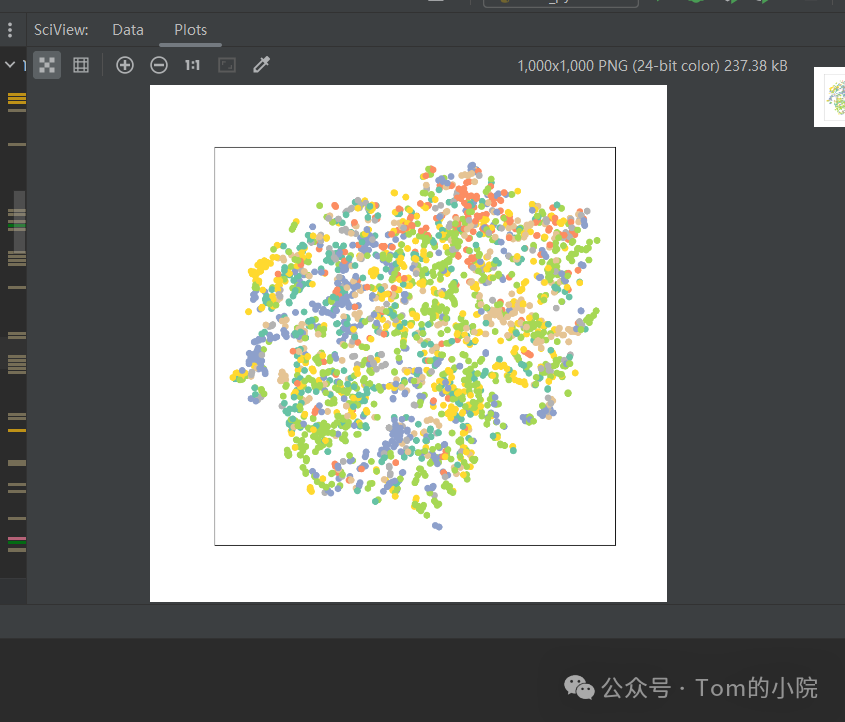

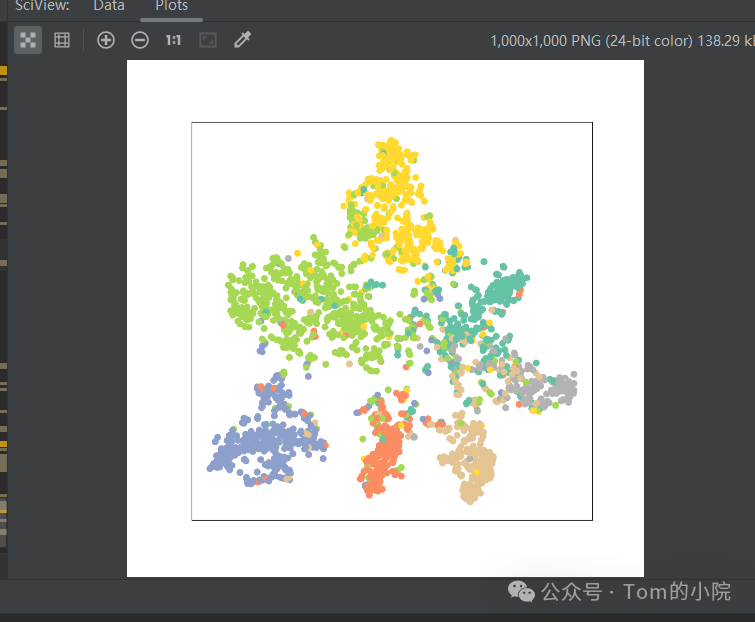

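Next, visualize the node representations produced by the untrained GCN:

After passing through the visualize function, the 7-dimensional node outputs (one dimension per class) are mapped onto a 2-D plane, and even before training we can see some clustering of nodes from the same class.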
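The post calls a `visualize` function without showing its definition. Below is a minimal sketch, assuming a t-SNE-based helper like the one used in the PyG introductory Colab notebooks: it projects each node's output vector to 2-D with scikit-learn's `TSNE` and colors the points by their true class. It needs scikit-learn and matplotlib installed, and the plotting settings (figure size, marker size, `Set2` colormap) are assumptions rather than the author's exact code.

```python
def visualize(h, color):
    """Project node representations h (num_nodes x dim) to 2-D with t-SNE and scatter-plot them.

    h and color may be torch tensors or array-likes; color holds one class label per node.
    """
    # Imported here so the helper can be defined even where the plotting libraries are absent.
    import numpy as np
    import matplotlib.pyplot as plt
    from sklearn.manifold import TSNE

    # Detach PyTorch tensors from the autograd graph and move them to CPU before converting.
    if hasattr(h, "detach"):
        h = h.detach().cpu().numpy()
    if hasattr(color, "detach"):
        color = color.detach().cpu().numpy()

    z = TSNE(n_components=2).fit_transform(np.asarray(h))

    plt.figure(figsize=(10, 10))
    plt.xticks([])
    plt.yticks([])
    plt.scatter(z[:, 0], z[:, 1], s=70, c=color, cmap="Set2")
    plt.show()
```

t-SNE is stochastic, so the layout will differ between runs; only the relative grouping of same-colored points is meaningful.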


```python
model = GCN(hidden_channels=16)
print("GCN model:")
print(model)

# Visualize the output of the untrained GCN.
model.eval()
out = model(data.x, data.edge_index)
visualize(out, color=data.y)
```

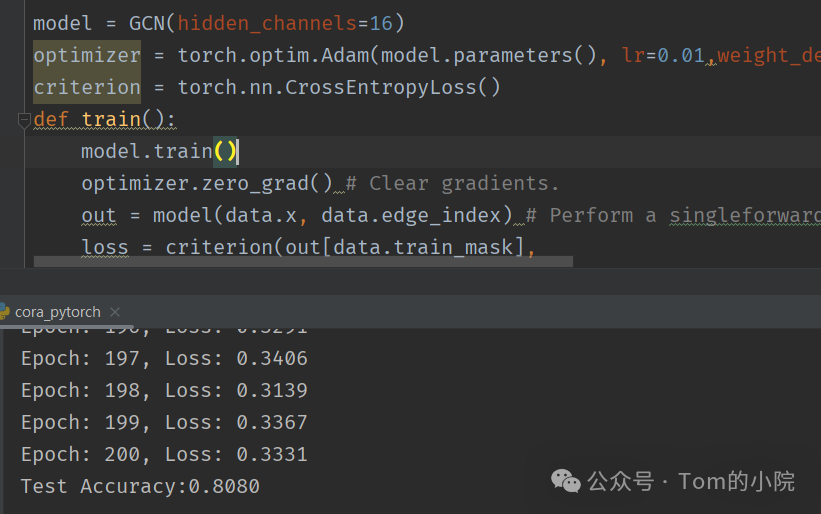

```python
model = GCN(hidden_channels=16)
optimizer = torch.optim.Adam(model.parameters(), lr=0.01, weight_decay=5e-4)
criterion = torch.nn.CrossEntropyLoss()

def train():
    model.train()
    optimizer.zero_grad()  # Clear gradients.
    out = model(data.x, data.edge_index)  # Perform a single forward pass.
    # Compute the loss solely based on the training nodes.
    loss = criterion(out[data.train_mask], data.y[data.train_mask])
    loss.backward()  # Derive gradients.
    optimizer.step()  # Update parameters based on gradients.
    return loss

for epoch in range(1, 201):
    loss = train()
    print(f'Epoch: {epoch:03d}, Loss: {loss:.4f}')

def test():
    model.eval()
    out = model(data.x, data.edge_index)
    pred = out.argmax(dim=1)  # Use the class with highest probability.
    test_correct = pred[data.test_mask] == data.y[data.test_mask]  # Check against ground-truth labels.
    test_acc = int(test_correct.sum()) / int(data.test_mask.sum())  # Derive ratio of correct predictions.
    return test_acc

test_acc = test()
print(f'Test Accuracy: {test_acc:.4f}')
```

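The test accuracy has now risen to 80%. Compared with the 59% test accuracy of the MLP earlier, the GCN is far more accurate, which shows that a node's neighborhood (adjacency) information plays a key role in achieving better accuracy.

Let's plot the representations again:

The plot shows that same-class clustering is now much more pronounced, meaning that after training the GCN produces higher-quality node representations.

(End)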

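This article is part of the Tencent Cloud self-media syndication program and was shared from a WeChat official account.

Originally published: 2024-01-29. In case of infringement, contact cloudcommunity@tencent.com for removal.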
