The Art of Listening in AI and Teaching Warmth in Voice Interaction: A System Walkthrough from Corpus Cleaning to Wake-Word Response


安全风信子

Published 2025-11-16 20:15:09


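The article includes working code; the complete files can be downloaded from the link below.

https://download.csdn.net/download/lxcxjxhx/92205029

I. Data and pipeline

- Corpus cleaning: denoising, duration normalization, and consistent labeling.

- Feature extraction: MFCC and mel spectrograms. NLU: intent recognition and slot extraction.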
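The duration-normalization step mentioned above can be sketched as follows. This is a minimal illustration, not the author's cleaning script: the function name `normalize_clip`, the 3-second target, and the 0.9 peak level are assumptions chosen for the example.

```python
import numpy as np

def normalize_clip(samples: np.ndarray, sample_rate: int = 16000,
                   target_sec: float = 3.0, peak: float = 0.9) -> np.ndarray:
    """Pad or trim a mono clip to a fixed duration and scale it to a target peak."""
    target_len = int(target_sec * sample_rate)
    clip = samples.astype(np.float32)
    if len(clip) >= target_len:
        clip = clip[:target_len]                           # trim the tail
    else:
        clip = np.pad(clip, (0, target_len - len(clip)))   # zero-pad the tail
    max_abs = float(np.max(np.abs(clip)))
    if max_abs > 0:
        clip = clip * (peak / max_abs)                     # peak-normalize
    return clip

if __name__ == "__main__":
    # Half a second of a quiet 440 Hz tone (hypothetical test signal)
    tone = 0.1 * np.sin(2 * np.pi * 440 * np.arange(8000) / 16000)
    out = normalize_clip(tone)
    print(len(out), float(np.max(np.abs(out))))
```

Fixing the clip length keeps batch shapes uniform for later feature extraction; padding with zeros is the simplest choice, although it does add artificial silence to short clips.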

II. Core code (runnable example)

    # Before running: install vosk, sounddevice, numpy and pyttsx3 from requirements.txt,
    # and download the Chinese model into ./models/vosk-cn-small (see the README).
    import csv
    import json
    import os
    import time

    import pyttsx3
    import sounddevice as sd  # sd.rec returns a NumPy array, so numpy must be installed
    from vosk import Model, KaldiRecognizer

    SAMPLE_RATE = 16000
    DURATION_SEC = 3
    MODEL_PATH = os.path.join(".", "models", "vosk-cn-small")

    # Simple emotion estimate (keyword mapping on the recognized Chinese text)
    def estimate_emotion(text: str) -> str:
        if any(k in text for k in ["累", "疲惫", "困"]):  # tired, exhausted, sleepy
            return "tired"
        if any(k in text for k in ["开心", "高兴", "不错"]):  # happy, glad, not bad
            return "happy"
        return "neutral"

    # Spoken response through pyttsx3
    def respond(text: str, rate=180, volume=0.7):
        engine = pyttsx3.init()
        engine.setProperty("rate", int(rate))
        engine.setProperty("volume", float(volume))  # 0.0-1.0
        engine.say(text)
        engine.runAndWait()

    # Record to bytes (int16, mono)
    def record_audio(seconds=DURATION_SEC, sample_rate=SAMPLE_RATE) -> bytes:
        frames = int(seconds * sample_rate)
        audio = sd.rec(frames, samplerate=sample_rate, channels=1, dtype="int16")
        sd.wait()
        return audio.tobytes()

    # Offline recognition with Vosk
    def recognize(audio_bytes: bytes, sample_rate=SAMPLE_RATE) -> str:
        assert os.path.isdir(MODEL_PATH), f"Vosk model not found: {MODEL_PATH}"
        model = Model(MODEL_PATH)
        rec = KaldiRecognizer(model, sample_rate)
        rec.SetWords(True)
        rec.AcceptWaveform(audio_bytes)
        # The whole clip is passed at once, so flush with FinalResult() rather than waiting for silence
        res = json.loads(rec.FinalResult())
        return res.get("text", "").strip()

    # Append metrics to a CSV file
    def log_metrics(lat_ms: float, emotion: str, tts_rate: int, tts_volume: float, csv_path="metrics.csv"):
        exists = os.path.exists(csv_path)
        with open(csv_path, "a", newline="", encoding="utf-8") as f:
            w = csv.writer(f)
            if not exists:
                w.writerow(["ts", "lat_ms", "emotion", "tts_rate", "tts_volume"])
            w.writerow([time.strftime("%Y-%m-%d %H:%M:%S"), round(lat_ms, 1), emotion, tts_rate, tts_volume])

    if __name__ == "__main__":
        print("Please speak (recording for about 3 seconds)...")
        audio_bytes = record_audio()
        # Start timing after the capture so lat_ms covers model loading and recognition,
        # not the fixed 3-second recording window
        t0 = time.time()
        text = recognize(audio_bytes)
        t1 = time.time()
        print("Recognized text:", text or "(empty)")
        emo = estimate_emotion(text)
        print("Emotion estimate:", emo)
        reply = "我在倾听,你的每一个字都在这里。"  # "I am listening; every word you say is here."
        rate = 170 if emo == "tired" else 190
        vol = 0.6 if emo == "tired" else 0.7
        respond(reply, rate=rate, volume=vol)
        log_metrics(lat_ms=(t1 - t0) * 1000, emotion=emo, tts_rate=rate, tts_volume=vol)
        print("Done. Metrics written to metrics.csv")

III. Interaction experience notes

- Polite wording and a steady rhythm give users the sense of being understood; emotion recognition should not over-label.

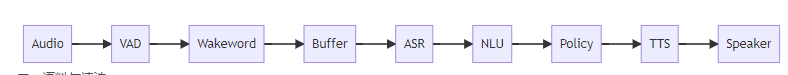

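IV. System topology (Mermaid)

Prologue: Sound is an unstable river. We wash the corpus clean among its grit, make judgments in the ripples of the wake word, talk with people on the bridge between ASR and NLU, and then use TTS to send a calm, gentle answer back into the air.

I. Overview of the system pipeline

- On-device: VAD (endpoint detection) → wake-word detection → temporary buffer → send to ASR.

- Cloud/local hybrid: ASR → NLU (intent/slots) → dialogue policy → TTS.

- Design goals:

  - Voice warmth: avoid being either over-enthusiastic or too cold; adjust speaking rate and volume dynamically.

  - Latency control: end-to-end latency ≤ 800 ms on edge devices, with as little time as possible spent waiting on the network.

  - False-wake handling: set a lower bound on the wake-word probability and add a second confirmation step.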
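The false-wake goal above (a lower bound on the wake-word probability plus a second confirmation) could be sketched as a three-way decision. The thresholds 0.5 and 0.85 and the names `WakeDecision` and `decide_wake` are illustrative assumptions, not values from the original project.

```python
from dataclasses import dataclass

@dataclass
class WakeDecision:
    accept: bool              # wake up immediately
    needs_confirmation: bool  # ask the user to repeat or confirm first

def decide_wake(prob: float, lower: float = 0.5, confident: float = 0.85) -> WakeDecision:
    """Reject below `lower`, accept at or above `confident`, otherwise ask to confirm."""
    if prob < lower:
        return WakeDecision(accept=False, needs_confirmation=False)
    if prob >= confident:
        return WakeDecision(accept=True, needs_confirmation=False)
    return WakeDecision(accept=False, needs_confirmation=True)

if __name__ == "__main__":
    for p in (0.2, 0.6, 0.9):
        print(p, decide_wake(p))
```

The middle band is where a short prompt such as "Did you call me?" costs little and avoids acting on a near-homophone.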


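II. Corpus and cleaning

- Noise handling:

  - Spectral subtraction or band-pass filtering that keeps the main frequency range of the human voice.

  - Baseline recordings of the environment (fans, air conditioning, keyboards) used to adapt to different scenes.

- Segmentation and labeling:

  - Combine rules with weak supervision (pauses longer than 300 ms, a volume threshold).

  - Emotion labels (happy/calm/tired) are for reference only and are not used to force a particular expression.

- Wake-word dataset:

  - Balance positive and negative samples; cover different accents and speaking rates.

  - Collect false-wake samples (near-homophones, environmental noise) separately to improve robustness.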
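The rule-based segmentation above (pauses longer than 300 ms combined with a volume threshold) might look like the sketch below. The function name `split_on_pauses`, the 20 ms frame size, and the 0.02 energy threshold are assumptions; the input is assumed to be a mono float array normalized to [-1, 1].

```python
import numpy as np

def split_on_pauses(samples, sample_rate=16000, frame_ms=20,
                    energy_th=0.02, min_pause_ms=300):
    """Return (start, end) sample indices of segments separated by long pauses."""
    frame_len = int(sample_rate * frame_ms / 1000)
    min_pause_frames = min_pause_ms // frame_ms
    n_frames = len(samples) // frame_len
    segments, start, silent_run = [], None, 0
    for i in range(n_frames):
        frame = samples[i * frame_len:(i + 1) * frame_len]
        loud = float(np.mean(np.abs(frame))) > energy_th
        if loud:
            if start is None:
                start = i          # a new segment begins
            silent_run = 0
        elif start is not None:
            silent_run += 1
            if silent_run > min_pause_frames:
                end = i - silent_run + 1   # index of the first silent frame
                segments.append((start * frame_len, end * frame_len))
                start, silent_run = None, 0
    if start is not None:
        # Close the last segment, dropping any trailing silence
        segments.append((start * frame_len, (n_frames - silent_run) * frame_len))
    return segments

if __name__ == "__main__":
    loud = np.full(8000, 0.5)    # 0.5 s of "speech"
    quiet = np.zeros(6400)       # 0.4 s pause
    print(split_on_pauses(np.concatenate([loud, quiet, loud])))
```

Pauses shorter than the limit stay inside a segment, so ordinary hesitations do not split an utterance.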

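III. VAD and wake-word detection (minimal implementation)

- Endpoint detection: use WebRTC VAD or a lightweight energy threshold.

- Wake word: a lightweight CNN, or keyword matching combined with a probability threshold.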

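    # Shared logging helper and false-wake filter
    def softly_log(*msg):
        print("[soft]", *msg)

    def miswake_filter(prob, th=0.3):
        # prob is read here as the estimated probability that the trigger is a false wake,
        # matching the 0.3 cut-off used for miswake_rate_hi in the metrics section
        if prob > th:
            softly_log("suspected false wake", prob)
            return False
        return True

    # WebRTC VAD example (requires the webrtcvad package)
    import webrtcvad

    vad = webrtcvad.Vad(2)  # 0-3: higher values filter out non-speech more aggressively

    def is_speech(frame_bytes, sample_rate=16000):
        # frame_bytes: 16-bit mono PCM, one 20 ms frame (16000 Hz -> 320 samples = 640 bytes)
        return vad.is_speech(frame_bytes, sample_rate)

    # Energy-threshold fallback (no third-party dependency)
    def energy_vad(frame, th=0.02):
        # frame: samples normalized to [-1.0, 1.0]
        e = sum(abs(x) for x in frame) / max(1, len(frame))
        return e > th

IV. ASR and NLU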

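- Choosing an ASR engine:

  - Local (small model): low latency and works offline.

  - Cloud (large model): higher accuracy, but needs a network connection and a privacy assessment.

- NLU intent recognition:

  - Combine keywords and rules with a small model.

  - Use a second confirmation (for example, "To be sure I got it right, please say that again") for high-risk operations.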
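As an illustration of the keyword/rule side of NLU described above, intents and slots can be pulled out with regular expressions, and each rule can carry a flag that marks it as high-risk so the caller knows to ask for confirmation. The rule table, the intent names, and the `parse` function are hypothetical; the patterns match Chinese utterances because the article's ASR output is Chinese.

```python
import re
from typing import Dict, Optional, Tuple

# Hypothetical rule table: intent name -> (pattern with named slots, is_high_risk)
RULES = {
    "set_alarm": (re.compile(r"(?P<hour>\d{1,2})点.*闹钟"), False),   # "... alarm at N o'clock"
    "delete_all": (re.compile(r"删除(?P<target>所有\S+)"), True),      # "delete all ..."
    "weather": (re.compile(r"天气"), False),                          # "weather"
}

def parse(text: str) -> Tuple[Optional[str], Dict[str, str], bool]:
    """Return (intent, slots, needs_confirmation) for the first matching rule."""
    for intent, (pattern, high_risk) in RULES.items():
        match = pattern.search(text)
        if match:
            return intent, match.groupdict(), high_risk
    return None, {}, False

if __name__ == "__main__":
    print(parse("帮我定一个7点的闹钟"))
    print(parse("删除所有录音"))
```

Rules like these are easy to audit, which is why they pair well with a small model: the model handles paraphrases while the rules guard the operations that must never be misread.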

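V. Dialogue policy and TTS warmth

- Policy: multi-turn dialogue with support for barge-in and error recovery (prompting the user to repeat or rephrase).

- Warmth and emotion:

  - Adjust prosody features (speaking rate, volume, pauses) dynamically.

  - A counter-example: an over-enthusiastic TTS voice tires users out. Use a restrained, clear speaking rate instead.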

    # TTS through pyttsx3, with adjustable warmth
    import pyttsx3

    _engine = pyttsx3.init()

    def synthesize(text, rate=180, vol=0.6):
        _engine.setProperty('rate', int(rate))
        _engine.setProperty('volume', float(vol))
        _engine.say(text)
        _engine.runAndWait()

    # Context-based tone adjustment
    def adapt_tone(context):
        base_rate, base_vol = 180, 0.6
        if context.get('error_repeats', 0) >= 2:
            base_rate = int(base_rate * 0.9)  # slow down to reduce cognitive load
        if context.get('user_emotion') == 'tired':
            base_vol = base_vol * 0.8  # speak more softly to a tired user
        return base_rate, base_vol

VI. Interaction and error recovery

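- Barge-in: when the user cuts in, stop the current TTS playback immediately and start a new turn.

- Error recovery:

  - Minor errors (low ASR confidence): ask the user to repeat.

  - Serious errors (a high-risk operation was misrecognized): ask for a second confirmation and offer a rollback.

- Second-confirmation template:

  - "I understood that you want to do X. Please reply 'confirm' or 'cancel'."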
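The two error tiers above can be expressed as a small decision function. This is a sketch under assumptions: the 0.6 confidence cut-off and the names `Recovery`, `choose_recovery`, and `confirm_prompt` are invented for the example.

```python
from enum import Enum

class Recovery(Enum):
    PROCEED = "proceed"          # confident and low-risk: just do it
    ASK_REPEAT = "ask_repeat"    # minor error: ask the user to say it again
    ASK_CONFIRM = "ask_confirm"  # high-risk: ask for confirm/cancel first

def choose_recovery(asr_confidence: float, high_risk: bool,
                    low_conf_th: float = 0.6) -> Recovery:
    """Pick a recovery step from ASR confidence and the risk level of the intent."""
    if asr_confidence < low_conf_th:
        return Recovery.ASK_REPEAT
    if high_risk:
        return Recovery.ASK_CONFIRM
    return Recovery.PROCEED

def confirm_prompt(action: str) -> str:
    """Fill the second-confirmation template with the understood action."""
    return f"I understood that you want to {action}. Please reply 'confirm' or 'cancel'."

if __name__ == "__main__":
    print(choose_recovery(0.4, high_risk=False))
    print(choose_recovery(0.9, high_risk=True), confirm_prompt("delete all recordings"))
```

Low confidence is checked first on purpose: confirming a command that was probably misheard would only put wrong words into the confirmation prompt.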

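VII. Evaluation and visualization

- Metrics:

  - WER (word error rate), SER (sentence error rate), latency (end-to-end, in ms), and satisfaction (questionnaire).

- Recording latency and false wakes: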

    import statistics as st

    class Metrics:
        def __init__(self):
            self.latencies = []
            self.miswake_probs = []

        def add_latency(self, t_start_ms, t_end_ms):
            self.latencies.append(t_end_ms - t_start_ms)

        def add_miswake(self, p):
            self.miswake_probs.append(p)

        def summary(self):
            lat = self.latencies or [0]
            return {
                'lat_ms_mean': st.mean(lat),
                # With few samples, fall back to the maximum as a conservative p95
                'lat_ms_p95': st.quantiles(lat, n=100)[94] if len(lat) > 100 else max(lat),
                # Share of triggers whose false-wake probability exceeds 0.3
                'miswake_rate_hi': sum(p > 0.3 for p in self.miswake_probs) / max(1, len(self.miswake_probs)),
            }

VIII. Privacy and security

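- Edge processing first: run VAD and wake-word detection locally and upload as little raw audio as possible.

- Disclosure and choice: users can turn off cloud recognition or opt for anonymized uploads (added noise or truncation).

- Logs: do not record raw audio; store only metrics and error types for later optimization.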
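One way to enforce the logging rule above is an explicit whitelist, so that raw audio or transcripts cannot slip into a log record by accident. The field names in `ALLOWED_FIELDS` and the function `build_log_record` are assumptions for this sketch.

```python
import json
import time

# Only these metric fields may be written to the log (hypothetical field names)
ALLOWED_FIELDS = {"lat_ms", "error_type", "wake_prob", "tts_rate", "tts_volume"}

def build_log_record(event: dict) -> str:
    """Keep whitelisted metric fields only; audio and transcripts are dropped."""
    record = {k: v for k, v in event.items() if k in ALLOWED_FIELDS}
    record["ts"] = time.strftime("%Y-%m-%d %H:%M:%S")
    return json.dumps(record, ensure_ascii=False)

if __name__ == "__main__":
    event = {"lat_ms": 120.5, "error_type": "asr_low_conf",
             "audio": b"\x00\x01", "transcript": "private words"}
    print(build_log_record(event))
```

A whitelist fails safe: a new field added elsewhere in the pipeline stays out of the logs until someone deliberately allows it.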

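IX. References and notes

- Many implementation paths for a speech pipeline coexist; choose based on the scenario, available compute, and privacy trade-offs.

- The code in this article is teaching material; deployment needs to take the specific framework and hardware into account.

X. Closing

- Understanding speech is a form an algorithm takes, and also an exercise in restraint. We keep enthusiastic flourishes to a minimum and let the system state its answer clearly at a gentle pace.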

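Advanced appendix: custom AI voice generation (vocal separation → voice cloning → API integration)

- Goal: something you can copy, paste, and run locally, covering "separate the vocals from reference audio → generate custom speech with AI → plug it into a voice assistant (STT + TTS)".

- Components:

  - Vocal separation: Demucs (recommended), or Spleeter as an alternative.

  - Voice cloning/generation: zero-shot cloning with YourTTS from Coqui TTS (speaker_wav).

  - API integration: FastAPI serves a local TTS endpoint that other scripts (such as the Azure/Vosk assistants) call over HTTP.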

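Overview (Mermaid)

    graph LR
    A[Reference audio reference.wav] -->|optional| B[Demucs vocal separation]
    A --> C[Normalize to 16 kHz mono]
    B --> C
    C --> D[YourTTS zero-shot cloning]
    D --> E[FastAPI local TTS /tts]
    E --> F["Voice assistant (local Vosk or cloud Azure STT)"]
    F --> G[Playback with simpleaudio]
    F --> H[Logging and metrics]
    H --> I[Ethics, compliance and user notice]

I. Additional directory layout and dependencies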

    模块03_语音交互\
    ├─ code\
    │  ├─ requirements.txt            # replaced/extended dependencies, see below
    │  ├─ config.json
    │  ├─ local_assistant.py
    │  ├─ azure_assistant.py
    │  ├─ utils_vad.py
    │  ├─ separate_vocals.py          # vocal separation (Demucs)
    │  ├─ custom_voice_clone.py       # custom voice cloning (YourTTS)
    │  ├─ custom_tts_api.py           # local TTS API service (FastAPI)
    │  ├─ azure_custom_assistant.py   # Azure STT + local custom TTS playback
    │  └─ tests\test_run.py
    └─ samples\
       └─ my_voice\
          └─ reference.wav            # your reference speech (spoken audio, 3-10 seconds)

II. requirements.txt (full replacement version, ready to copy)

# 语音助手基础

webrtcvad==2.0.10

sounddevice==0.4.6

numpy==1.26.4

vosk==0.3.45

pyttsx3==2.90

azure-cognitiveservices-speech==1.37.0

matplotlib==3.8.0

simpleaudio==1.0.4

# 语音剥离与语音克隆

librosa==0.10.1

soundfile==0.12.1

TTS==0.15.4

fastapi==0.111.0

uvicorn==0.30.0

# Demucs(源分离)

demucs==4.0.0

# PyTorch(CPU版)建议单独安装:

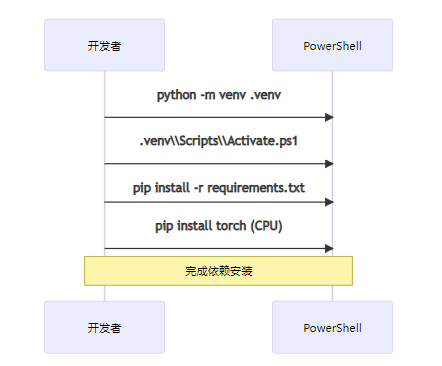

# pip install torch --index-url https://download.pytorch.org/whl/cpu安装步骤(PowerShell):

    cd "<PROJECT_ROOT>\模块03_语音交互\code"
    python -m venv .venv
    .venv\Scripts\Activate.ps1
    pip install -r requirements.txt
    pip install torch --index-url https://download.pytorch.org/whl/cpu

Installation flow diagram (Mermaid)

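III. Preparing and separating the reference speech (separate_vocals.py)

- If your reference.wav is already clean speech, skip this step.

- If it is a song or mixed audio, use Demucs to separate the vocals.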

    # separate_vocals.py
    # Separate vocals with Demucs. Takes an input audio path and writes to
    # ./separated_vocals/<model_name>/<track_name>/vocals.wav
    import subprocess
    import sys
    from pathlib import Path

    IN = Path(sys.argv[1]) if len(sys.argv) > 1 else Path("../samples/my_voice/reference.wav")
    OUT_DIR = Path("./separated_vocals")
    OUT_DIR.mkdir(parents=True, exist_ok=True)

    # Run the demucs command: it downloads the model to its cache, then writes under ./separated_vocals
    cmd = [
        sys.executable, "-m", "demucs", "--two-stems", "vocals", "--out", str(OUT_DIR), str(IN)
    ]
    print("Running:", " ".join(cmd))
    subprocess.run(cmd, check=True)

    # Locate the output file (Demucs nests it in subdirectories).
    # Match the exact file name so that no_vocals.wav is not picked up by mistake.
    vocals = next(OUT_DIR.rglob("vocals.wav"), None)
    if vocals:
        print("Vocals saved:", vocals)
    else:
        print("Separated vocals not found; check the Demucs output directory.")

Run:

    python separate_vocals.py ../samples/my_voice/reference.wav

The output path looks like ./separated_vocals/<model_name>/<track_name>/vocals.wav.

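IV. Custom voice cloning (YourTTS, custom_voice_clone.py)

- Generates speech with a similar timbre directly from a short reference clip, without any training.

- Recommended reference audio: 3 to 10 seconds, clean speech, quiet environment; either 16 kHz or 44.1 kHz is fine (the script resamples).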
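Before cloning, the 3 to 10 second recommendation above can be checked with the standard-library `wave` module. This is a small sketch rather than part of the original scripts; `check_reference` and its default limits are assumptions, and it only handles PCM WAV files.

```python
import wave

def check_reference(path: str, min_sec: float = 3.0, max_sec: float = 10.0):
    """Return (ok, duration_sec, channels, sample_rate) for a PCM WAV file."""
    with wave.open(path, "rb") as wf:
        duration = wf.getnframes() / wf.getframerate()
        return (min_sec <= duration <= max_sec, duration,
                wf.getnchannels(), wf.getframerate())

if __name__ == "__main__":
    import sys
    if len(sys.argv) > 1:
        ok, duration, channels, rate = check_reference(sys.argv[1])
        print(f"ok={ok} duration={duration:.2f}s channels={channels} rate={rate}")
```

A clip that is too short gives the model little to imitate, and a very long one mostly adds processing time, so a quick check saves a slow synthesis run.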

    # custom_voice_clone.py
    import os

    import librosa
    import soundfile as sf
    from TTS.api import TTS

    # 1) Path to the reference audio
    SPEAKER_WAV = os.path.join(os.path.dirname(__file__), "..", "samples", "my_voice", "reference.wav")
    # If you already separated the vocals with Demucs, point this at the separated file instead
    # SPEAKER_WAV = "./separated_vocals/<...>/vocals.wav"

    # 2) Normalize the reference audio (resample to 16 kHz, mono)
    def ensure_16k_mono(in_path: str) -> str:
        y, sr = librosa.load(in_path, sr=None, mono=True)
        y16 = librosa.resample(y, orig_sr=sr, target_sr=16000)
        out_path = os.path.join(os.path.dirname(in_path), "ref_16k.wav")
        sf.write(out_path, y16, 16000)
        return out_path

    ref_norm = ensure_16k_mono(SPEAKER_WAV)

    # 3) Load the YourTTS model (downloaded on first use)
    tts = TTS(model_name="tts_models/multilingual/multi-dataset/your_tts")

    # 4) Synthesize the text and save it to output_custom_voice.wav
    # Note: the public YourTTS checkpoint is usually listed with en, fr-fr and pt-br only.
    # Print tts.languages to check; if zh-cn is rejected, a model that lists Chinese is needed.
    OUT_WAV = os.path.join(os.path.dirname(__file__), "output_custom_voice.wav")
    # "Hello, this is a custom voice generated from your reference timbre. Let's practice voice interaction today."
    text = "你好,我是用你的参考音色生成的自定义语音。今天我们一起练习语音交互。"
    tts.tts_to_file(
        text=text,
        speaker_wav=ref_norm,
        language="zh-cn",
        file_path=OUT_WAV,
    )
    print("Saved:", OUT_WAV)

Run:

    python custom_voice_clone.py

The generated output_custom_voice.wav is the speech synthesized in your custom voice.

V. Local TTS API service (custom_tts_api.py)

- Exposes an HTTP endpoint that takes text plus a reference audio path and returns the path of the generated WAV file.

    # custom_tts_api.py
    import os
    import uuid

    import librosa
    import soundfile as sf
    from fastapi import FastAPI
    from pydantic import BaseModel
    from TTS.api import TTS

    app = FastAPI()
    tts = TTS(model_name="tts_models/multilingual/multi-dataset/your_tts")

    class TTSReq(BaseModel):
        text: str
        speaker_wav: str
        language: str = "zh-cn"

    @app.post("/tts")
    def synthesize(req: TTSReq):
        # Normalize the reference audio to 16 kHz mono
        y, sr = librosa.load(req.speaker_wav, sr=None, mono=True)
        y16 = librosa.resample(y, orig_sr=sr, target_sr=16000)
        tmp_ref = os.path.join(os.path.dirname(req.speaker_wav), f"ref16_{uuid.uuid4().hex}.wav")
        sf.write(tmp_ref, y16, 16000)
        # Return an absolute path so callers started from another directory can open the file
        out_path = os.path.abspath(f"tts_{uuid.uuid4().hex}.wav")
        try:
            tts.tts_to_file(text=req.text, speaker_wav=tmp_ref, language=req.language, file_path=out_path)
        finally:
            # Always clean up the temporary reference file, even if synthesis fails
            try:
                os.remove(tmp_ref)
            except OSError:
                pass
        return {"path": out_path}

    if __name__ == "__main__":
        import uvicorn
        # Bind to loopback: the endpoint reads file paths from the request, so keep it local
        uvicorn.run(app, host="127.0.0.1", port=8000)

Run:

    python custom_tts_api.py
    # Call it from another script or an HTTP client: POST http://localhost:8000/tts
    # Example body:
    # {"text": "你好,这是自定义音色的TTS。", "speaker_wav": "../samples/my_voice/reference.wav"}

VI. Azure STT + local custom TTS playback (azure_custom_assistant.py)

- Recognition uses Azure (stable, low latency), synthesis uses the local YourTTS service (custom voice), and playback uses simpleaudio.

    # azure_custom_assistant.py
    import os
    import time
    import wave

    import requests
    import simpleaudio as sa
    import azure.cognitiveservices.speech as speechsdk

    SPEECH_KEY = os.getenv('AZURE_SPEECH_KEY')
    SPEECH_REGION = os.getenv('AZURE_SPEECH_REGION')
    assert SPEECH_KEY and SPEECH_REGION, 'Set AZURE_SPEECH_KEY and AZURE_SPEECH_REGION as environment variables'

    speech_config = speechsdk.SpeechConfig(subscription=SPEECH_KEY, region=SPEECH_REGION)
    speech_config.speech_recognition_language = 'zh-CN'
    audio_config_in = speechsdk.audio.AudioConfig(use_default_microphone=True)
    recognizer = speechsdk.SpeechRecognizer(speech_config=speech_config, audio_config=audio_config_in)

    WAKE_WORD = '你好助手'  # "Hello assistant"
    # Absolute path, because the TTS server resolves it from its own working directory
    SPEAKER_WAV = os.path.abspath(
        os.path.join(os.path.dirname(__file__), '..', 'samples', 'my_voice', 'reference.wav'))
    TTS_API = 'http://localhost:8000/tts'

    INTENTS = {
        '天气': '今天晴,温度22度。',  # weather -> "Sunny today, 22 degrees."
        '时间': lambda: time.strftime('现在时间是%H点%M分。'),  # time -> "It is now HH:MM."
    }

    def play_wav(path: str):
        with wave.open(path, 'rb') as wf:
            data = wf.readframes(wf.getnframes())
            play_obj = sa.play_buffer(data, wf.getnchannels(), wf.getsampwidth(), wf.getframerate())
        play_obj.wait_done()

    def recognize_once():
        print('[info] Listening...')
        res = recognizer.recognize_once_async().get()
        if res.reason == speechsdk.ResultReason.RecognizedSpeech:
            return res.text
        return ''

    def speak_custom(text: str):
        payload = {"text": text, "speaker_wav": SPEAKER_WAV, "language": "zh-cn"}
        r = requests.post(TTS_API, json=payload, timeout=60)
        r.raise_for_status()
        path = r.json().get('path')
        print('[tts]', path)
        play_wav(path)

    def run():
        print('Start custom_tts_api.py before launching this script.')
        # "Cloud recognition with a local custom voice is ready. Try saying: hello assistant."
        speak_custom('云端识别与本地自定义音色的组合已就绪。试着说:你好助手。')
        while True:
            text = recognize_once()
            if not text:
                continue
            print('[asr]', text)
            if WAKE_WORD in text:
                speak_custom('我在,请说。')  # "I'm here, go ahead."
                cmd = recognize_once()
                print('[cmd]', cmd)
                reply = None
                for k, v in INTENTS.items():
                    if k in cmd:
                        reply = v() if callable(v) else v
                        break
                if not reply:
                    # "I got your meaning. If you need help, you can say weather or time."
                    reply = '我理解到你的意思了,如果需要帮助,可以说天气或时间。'
                speak_custom(reply)

    if __name__ == '__main__':
        run()

Run order:

    # 1) Start the local TTS API (in one terminal)
    python custom_tts_api.py
    # 2) In another terminal, run the Azure + custom TTS assistant script
    $env:AZURE_SPEECH_KEY="<YourKey>"; $env:AZURE_SPEECH_REGION="<YourRegion>"
    python azure_custom_assistant.py

VII. Suggested end-to-end workflow (vocal separation and custom voice production)

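- Recording and cleaning: record 3 to 10 seconds of clean speech (read aloud), free of background music and noise; sample rate at least 16 kHz, mono.

- Separation (optional): if all you have is a song or a mix, run separate_vocals.py first to separate the vocals, then check the result.

- Normalization: resample to 16 kHz, downmix to mono, and trim leading and trailing silence (librosa.effects.trim can do this).

- Synthesis check: generate a sample with custom_voice_clone.py and listen for clarity and timbre similarity; switch to a different reference clip if needed.

- API integration: start custom_tts_api.py and call it from the voice assistant for replies (see azure_custom_assistant.py for an example).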
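The silence-trimming step in the normalization bullet can be illustrated with plain NumPy. This sketch uses a simple amplitude threshold instead of the decibel-based logic in `librosa.effects.trim`, so it is a stand-in for the idea rather than a replacement; `trim_silence` and the 0.01 threshold are assumptions.

```python
import numpy as np

def trim_silence(y: np.ndarray, threshold: float = 0.01) -> np.ndarray:
    """Drop leading and trailing samples whose absolute amplitude is below threshold."""
    voiced = np.flatnonzero(np.abs(y) >= threshold)
    if voiced.size == 0:
        return y[:0]   # nothing above the threshold: return an empty clip
    return y[voiced[0]:voiced[-1] + 1]

if __name__ == "__main__":
    clip = np.concatenate([np.zeros(100), np.full(50, 0.5), np.zeros(30)])
    print(len(clip), "->", len(trim_silence(clip)))
```

Silence inside the clip is left alone, which preserves the natural pauses the cloning model uses to pick up speaking rhythm.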

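VIII. Legal and ethical reminders

- Clone a voice only with the explicit authorization of the person recorded; do not copy or distribute someone else's voice without permission.

- State the purpose and the retention period clearly; provide a way to delete data and withdraw consent; never use cloned voices for fraud or deception.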

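IX. Troubleshooting

- Demucs model download is slow: keep the network connection stable, since the model downloads automatically on first run; you can also download the model into the cache manually beforehand.

- YourTTS is slow to generate: it is slow on CPU, so use short text and a short reference clip; with a GPU, install the matching torch build to speed it up.

- Playback fails: confirm that simpleaudio is installed, or switch to the playsound library or the system player.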

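X. Extension points

- Replace YourTTS with more advanced options such as VITS, RVC, or So-VITS-SVC for style conversion (this needs more configuration and training).

- Add barge-in and latency/false-wake metric logging to the local assistant, and write the TTS parameters of every reply to CSV for visualization.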
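The barge-in extension mentioned above could be built around a stop flag that the playback loop checks between audio chunks. The sketch below simulates playback with `time.sleep` instead of a real audio device; `InterruptiblePlayback` and the chunk duration are assumptions, and a real implementation would write each chunk to the sound card and call `interrupt()` from the VAD thread.

```python
import threading
import time

class InterruptiblePlayback:
    """Chunked playback that stops as soon as interrupt() is called."""

    def __init__(self):
        self._stop = threading.Event()

    def interrupt(self):
        # Called from another thread, e.g. when VAD detects the user speaking
        self._stop.set()

    def play(self, chunks, chunk_sec: float = 0.02) -> int:
        """Play chunks until finished or interrupted; return how many were played."""
        self._stop.clear()
        played = 0
        for _ in chunks:
            if self._stop.is_set():
                break
            time.sleep(chunk_sec)  # stand-in for writing one chunk to the audio device
            played += 1
        return played

if __name__ == "__main__":
    player = InterruptiblePlayback()
    threading.Timer(0.05, player.interrupt).start()  # simulate the user cutting in
    print("chunks played:", player.play(range(100), chunk_sec=0.01))
```

Checking the flag once per chunk bounds the reaction time to one chunk length, which is the practical meaning of stopping "immediately".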

III. Dependency list (requirements.txt)

    webrtcvad==2.0.10
    sounddevice==0.4.6
    numpy==1.26.4
    vosk==0.3.45
    pyttsx3==2.90
    azure-cognitiveservices-speech==1.37.0
    matplotlib==3.8.0

IV. Configuration file (config.json)

    {
      "sample_rate": 16000,
      "wake_word": "你好助手",
      "voice_preferences": {
        "tts_rate": 1.0,
        "tts_volume": 0.6
      }
    }

V. VAD utilities (utils_vad.py)

    # utils_vad.py
    import collections

    import webrtcvad

    class Frame:
        def __init__(self, bytes_data: bytes, timestamp: float, duration: float):
            self.bytes = bytes_data
            self.timestamp = timestamp
            self.duration = duration

    # Yield fixed-duration frames for the VAD (WebRTC VAD accepts 10, 20 or 30 ms frames)
    def frame_generator(frame_duration_ms, audio_bytes: bytes, sample_rate: int):
        n = int(sample_rate * (frame_duration_ms / 1000.0) * 2)  # int16 -> 2 bytes per sample
        offset = 0
        timestamp = 0.0
        duration = float(n) / (sample_rate * 2)
        while offset + n <= len(audio_bytes):
            yield Frame(audio_bytes[offset:offset + n], timestamp, duration)
            timestamp += duration
            offset += n

    # Segment collector built on WebRTC VAD; yields the bytes of each speech segment.
    # vad_level: aggressiveness 0-3 (higher filters out non-speech more aggressively)
    # padding_ms: size of the window used to decide when speech starts and ends
    def vad_collector(sample_rate: int, frame_duration_ms: int, padding_ms: int, vad_level: int, audio_bytes: bytes):
        vad = webrtcvad.Vad(vad_level)
        frames = list(frame_generator(frame_duration_ms, audio_bytes, sample_rate))
        num_padding_frames = int(padding_ms / frame_duration_ms)
        ring_buffer = collections.deque(maxlen=num_padding_frames)
        triggered = False
        voiced_frames = []
        for frame in frames:
            is_speech = vad.is_speech(frame.bytes, sample_rate)
            if not triggered:
                ring_buffer.append((frame, is_speech))
                num_voiced = len([f for f, s in ring_buffer if s])
                # Enter the speech state once over 90% of the window is voiced
                if num_voiced > 0.9 * ring_buffer.maxlen:
                    triggered = True
                    voiced_frames.extend([f for f, s in ring_buffer])
                    ring_buffer.clear()
            else:
                voiced_frames.append(frame)
                ring_buffer.append((frame, is_speech))
                num_unvoiced = len([f for f, s in ring_buffer if not s])
                # Leave the speech state once over 90% of the window is unvoiced
                if num_unvoiced > 0.9 * ring_buffer.maxlen:
                    triggered = False
                    yield b"".join([f.bytes for f in voiced_frames])
                    ring_buffer.clear()
                    voiced_frames = []
        if voiced_frames:
            yield b"".join([f.bytes for f in voiced_frames])

VI. Offline local option: VAD + Vosk (STT) + pyttsx3 (TTS) (local_assistant.py)

    import json
    import os
    import queue
    import time

    import pyttsx3
    import sounddevice as sd
    from vosk import Model, KaldiRecognizer

    from utils_vad import vad_collector

    CFG_PATH = os.path.join(os.path.dirname(__file__), 'config.json')
    with open(CFG_PATH, 'r', encoding='utf-8') as f:
        CFG = json.load(f)

    SAMPLE_RATE = int(CFG.get('sample_rate', 16000))
    WAKE_WORD = CFG.get('wake_word', '你好助手')
    BLOCK_FRAMES = SAMPLE_RATE * 20 // 1000  # 20 ms per block (320 frames = 640 bytes at 16 kHz)

    # 1) TTS setup (pyttsx3; SAPI on Windows)
    engine = pyttsx3.init()
    engine.setProperty('rate', int(CFG.get('voice_preferences', {}).get('tts_rate', 1.0) * 200))
    engine.setProperty('volume', CFG.get('voice_preferences', {}).get('tts_volume', 0.6))

    # Try to pick a Chinese voice
    for v in engine.getProperty('voices'):
        name = (getattr(v, 'name', '') or '').lower()
        langs = getattr(v, 'languages', None) or ['']  # the list can be empty on some drivers
        lang = str(langs[0]).lower()
        if 'zh' in name or 'chinese' in name or 'zh' in lang:
            engine.setProperty('voice', v.id)
            break

    def speak(text: str):
        engine.say(text)
        engine.runAndWait()

    # 2) Load the Vosk model
    MODEL_DIR = os.path.join(os.path.dirname(__file__), '..', 'models', 'vosk-cn-small')
    assert os.path.exists(MODEL_DIR), f"Vosk model not found: {MODEL_DIR}"
    model = Model(MODEL_DIR)

    # 3) Audio capture (RawInputStream, 16 kHz / mono / int16)
    audio_q = queue.Queue()

    def audio_callback(indata, frames, time_info, status):
        if status:
            print(status)
        audio_q.put(bytes(indata))  # int16 bytes

    stream = sd.RawInputStream(samplerate=SAMPLE_RATE, blocksize=BLOCK_FRAMES, dtype='int16',
                               channels=1, callback=audio_callback)

    # 4) Minimal rule-based NLU (keywords: weather/temperature, time/what time, hello/are you there)
    INTENT_RULES = [
        (['天气', '温度'], lambda _: '今天晴,温度22度。'),
        (['时间', '几点'], lambda _: time.strftime('现在时间是%H点%M分。')),
        (['你好', '在吗'], lambda _: '我在,随时倾听。'),
    ]

    def nlu_respond(text: str) -> str:
        for keys, fn in INTENT_RULES:
            if any(k in text for k in keys):
                return fn(text)
        # "I got your meaning. If you need help, you can say weather or time."
        return '我理解到你的意思了,如果需要帮助,可以说“天气”或“时间”。'

    # 5) Main loop: wait for the wake word -> recognize the command -> reply with TTS
    def bytes_from_queue(timeout=1.0) -> bytes:
        try:
            return audio_q.get(timeout=timeout)
        except queue.Empty:
            return b''

    def collect_audio_seconds(seconds=2.0) -> bytes:
        chunks = []
        # Each queue item holds BLOCK_FRAMES frames, so count blocks in frames, not bytes
        target = int(seconds * SAMPLE_RATE / BLOCK_FRAMES)
        for _ in range(target):
            chunks.append(bytes_from_queue(timeout=0.5))
        return b''.join(chunks)

    def recognize_bytes(data: bytes) -> str:
        rec = KaldiRecognizer(model, SAMPLE_RATE)
        rec.SetWords(True)
        rec.AcceptWaveform(data)
        try:
            result = json.loads(rec.FinalResult())  # flush the whole segment
        except Exception:
            return ''
        # Vosk Chinese models typically separate tokens with spaces; remove them so that
        # substring checks such as `WAKE_WORD in text` can match
        return result.get('text', '').replace(' ', '')

    def run():
        print('[info] Offline local assistant started. Say "你好助手" to wake it.')
        speak('本地离线助手已就绪。试着说:你好助手。')  # "The offline assistant is ready. Try saying: hello assistant."
        with stream:
            while True:
                # Collect 1 second of audio and split it with VAD
                data = collect_audio_seconds(1.0)
                if not data:
                    continue
                segments = list(vad_collector(SAMPLE_RATE, 20, 400, 2, data))
                for seg in segments:
                    text = recognize_bytes(seg)
                    if not text:
                        continue
                    print('[asr]', text)
                    if WAKE_WORD in text:
                        speak('我在,请说。')  # "I'm here, go ahead."
                        # Collect another 3 seconds as the command
                        command_data = collect_audio_seconds(3.0)
                        # Keep only the speech parts according to VAD
                        cmd_segs = list(vad_collector(SAMPLE_RATE, 20, 400, 2, command_data))
                        cmd_text = ''
                        for cs in cmd_segs:
                            cmd_text += recognize_bytes(cs)
                        print('[cmd]', cmd_text)
                        reply = nlu_respond(cmd_text)
                        speak(reply)

    if __name__ == '__main__':
        run()

Run (offline, local):

    cd "<PROJECT_ROOT>/模块03_语音交互/code"
    .venv\Scripts\Activate.ps1
    python local_assistant.py

- Once the microphone is ready, say "你好助手", then a keyword such as 天气 (weather) or 时间 (time); the system recognizes the speech with ASR and answers through TTS.

VII. Cloud API option: Azure Speech (azure_assistant.py)

    # azure_assistant.py
    import os
    import time

    import azure.cognitiveservices.speech as speechsdk

    SPEECH_KEY = os.getenv('AZURE_SPEECH_KEY')
    SPEECH_REGION = os.getenv('AZURE_SPEECH_REGION')
    assert SPEECH_KEY and SPEECH_REGION, 'Set AZURE_SPEECH_KEY and AZURE_SPEECH_REGION as environment variables'

    speech_config = speechsdk.SpeechConfig(subscription=SPEECH_KEY, region=SPEECH_REGION)
    speech_config.speech_recognition_language = 'zh-CN'
    speech_config.speech_synthesis_language = 'zh-CN'
    speech_config.speech_synthesis_voice_name = 'zh-CN-XiaoxiaoNeural'

    audio_config_in = speechsdk.audio.AudioConfig(use_default_microphone=True)
    audio_config_out = speechsdk.audio.AudioOutputConfig(use_default_speaker=True)
    recognizer = speechsdk.SpeechRecognizer(speech_config=speech_config, audio_config=audio_config_in)
    synthesizer = speechsdk.SpeechSynthesizer(speech_config=speech_config, audio_config=audio_config_out)

    WAKE_WORD = '你好助手'  # "Hello assistant"

    INTENTS = {
        '天气': '今天晴,温度22度。',  # weather -> "Sunny today, 22 degrees."
        '时间': lambda: time.strftime('现在时间是%H点%M分。'),  # time -> "It is now HH:MM."
    }

    def speak(text: str):
        result = synthesizer.speak_text_async(text).get()
        if result.reason != speechsdk.ResultReason.SynthesizingAudioCompleted:
            print('TTS failed:', result.reason)

    def recognize_once():
        print('[info] Listening...')
        res = recognizer.recognize_once_async().get()
        if res.reason == speechsdk.ResultReason.RecognizedSpeech:
            return res.text
        return ''

    def run():
        speak('云端语音助手已就绪。试着说:你好助手。')  # "The cloud assistant is ready. Try saying: hello assistant."
        while True:
            text = recognize_once()
            if not text:
                continue
            print('[asr]', text)
            if WAKE_WORD in text:
                speak('我在,请说。')  # "I'm here, go ahead."
                cmd = recognize_once()
                print('[cmd]', cmd)
                reply = None
                for k, v in INTENTS.items():
                    if k in cmd:
                        reply = v() if callable(v) else v
                        break
                if not reply:
                    # "I got your meaning. If you need help, you can say weather or time."
                    reply = '我理解到你的意思了,如果需要帮助,可以说天气或时间。'
                speak(reply)

    if __name__ == '__main__':
        run()

Run (cloud API):

    cd "<PROJECT_ROOT>/模块03_语音交互/code"
    .venv\Scripts\Activate.ps1
    $env:AZURE_SPEECH_KEY="<YourKey>"; $env:AZURE_SPEECH_REGION="<YourRegion>"
    python azure_assistant.py

VIII. Minimal sanity check (tests\test_run.py)

    # tests/test_run.py
    import json
    import os

    CFG = os.path.join(os.path.dirname(__file__), '..', 'config.json')

    def test_cfg_exists():
        assert os.path.exists(CFG)
        with open(CFG, 'r', encoding='utf-8') as f:
            cfg = json.load(f)
        assert 'wake_word' in cfg and cfg['wake_word']

IX. Common problems and failure drills

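- Sound card or microphone unavailable: make sure Windows privacy settings allow apps to access the microphone, and enable the input device in Device Manager.

- sounddevice fails to install: try pip install pipwin followed by pipwin install pyaudio, and replace RawInputStream with a PyAudio implementation.

- Wrong Vosk model path: confirm that the models/vosk-cn-small directory exists and contains subdirectories such as conf and am.

- Azure timeouts or unstable recognition: check the key and region, check network connectivity, and switch from single-shot recognition to continuous recognition for a better experience.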

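X. Extension suggestions

- Wake-word engine: replace simple text matching with a more robust engine such as Porcupine or Snowboy (extra licenses or models required).

- Dialogue policy: introduce intent slots and a state machine; add a second confirmation and a rollback prompt for high-risk operations.

- Metrics logging: add latency, false-wake ratio, and TTS parameter logs to local_assistant, saved as CSV for visualization.

  - CSV columns: ts,event_id,lat_ms,miswake,tts_rate,tts_volume,wake_text,cmd_text

  - Quick look (PowerShell): Get-Content .\模块03_语音交互\code\metrics.csv -Head 5

  - Simple visualization (Python, optional):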

    import csv
    import os

    import matplotlib.pyplot as plt

    xs, ys = [], []
    csv_path = os.path.join('模块03_语音交互', 'code', 'metrics.csv')
    with open(csv_path, encoding='utf-8') as f:
        for i, row in enumerate(csv.DictReader(f)):
            xs.append(i)
            ys.append(float(row['lat_ms']))

    plt.plot(xs, ys)
    plt.title('Latency (ms)')
    plt.xlabel('Event Index')
    plt.ylabel('ms')
    plt.show()
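For the dialogue-policy suggestion in this section (a state machine with a second confirmation for high-risk operations), a minimal sketch might look like the following. The states, the returned action names, and the high-risk keyword 删除 ("delete") are assumptions made for illustration; the wake word and the confirmation keyword 确认 ("confirm") follow the article's examples.

```python
from enum import Enum, auto

class State(Enum):
    IDLE = auto()        # waiting for the wake word
    LISTENING = auto()   # waiting for a command
    CONFIRMING = auto()  # waiting for confirm/cancel on a high-risk command

class DialogPolicy:
    def __init__(self, wake_word="你好助手", high_risk=("删除",)):
        self.wake_word = wake_word
        self.high_risk = high_risk
        self.state = State.IDLE
        self.pending = None  # command awaiting confirmation

    def step(self, text: str) -> str:
        """Consume one recognized utterance and return the action to take."""
        if self.state is State.IDLE:
            if self.wake_word in text:
                self.state = State.LISTENING
                return "prompt"
            return "ignore"
        if self.state is State.LISTENING:
            if any(k in text for k in self.high_risk):
                self.pending, self.state = text, State.CONFIRMING
                return "ask_confirm"
            self.state = State.IDLE
            return "execute"
        # CONFIRMING: anything other than an explicit confirmation cancels
        confirmed = "确认" in text
        self.state, self.pending = State.IDLE, None
        return "execute" if confirmed else "rollback"

if __name__ == "__main__":
    policy = DialogPolicy()
    for utterance in ["你好助手", "删除所有录音", "取消"]:
        print(utterance, "->", policy.step(utterance))
```

Treating every non-confirmation as a cancel keeps the risky path opt-in: a misheard reply leaves the system unchanged instead of executing the pending command.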

Originally published: 2025-10-26. To report infringement, contact cloudcommunity@tencent.com for removal.
