[Paper Reading] BEVFormer: Learning BEV Representations from Multi-Camera Images (1)

YoungTimes

Published on 2023-09-01 08:55:19

Paper: https://arxiv.org/pdf/2203.17270.pdf

Code: https://github.com/fundamentalvision/BEVFormer

1. Image Feature Extraction

1.1 GridMask Data Augmentation

当前数据增广方式(Data Augmentation)主要分为三类:

- Spatial Transformation(Random Scale、Crop、 Flip、Random Rotation);

- Color Distortion(Changing Brightness, Hue);

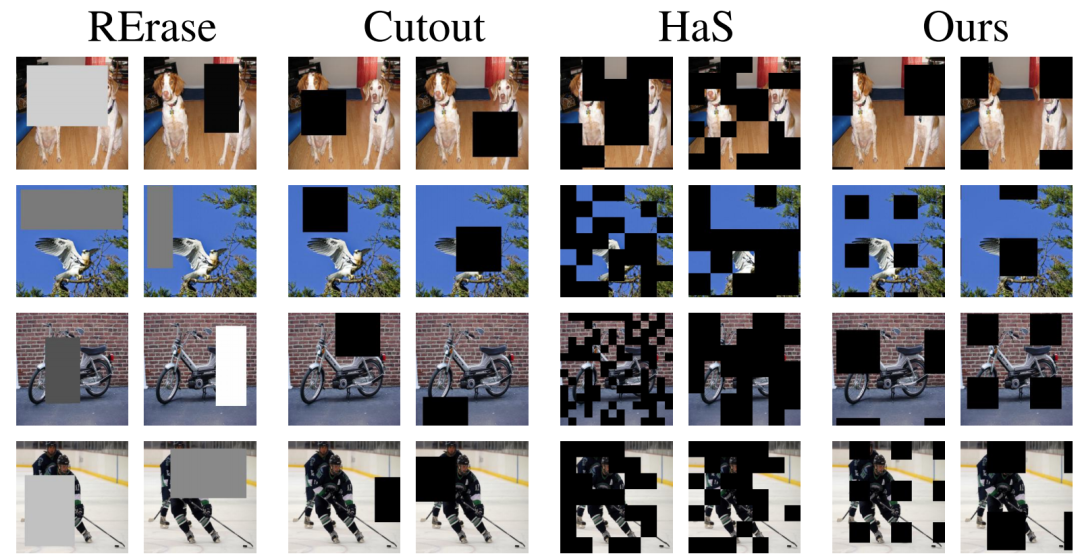

- Information Dropping(Random Erasing,Cutour,Hide-And-Seek);

GridMask属于Information Dropping的方法,它通过随机在图像上丢弃一块区域,作用相当于在网络上增加一个正则项,避免网络过拟合。

Comparison of different Information Dropping methods. Source: https://arxiv.org/pdf/2001.04086.pdf

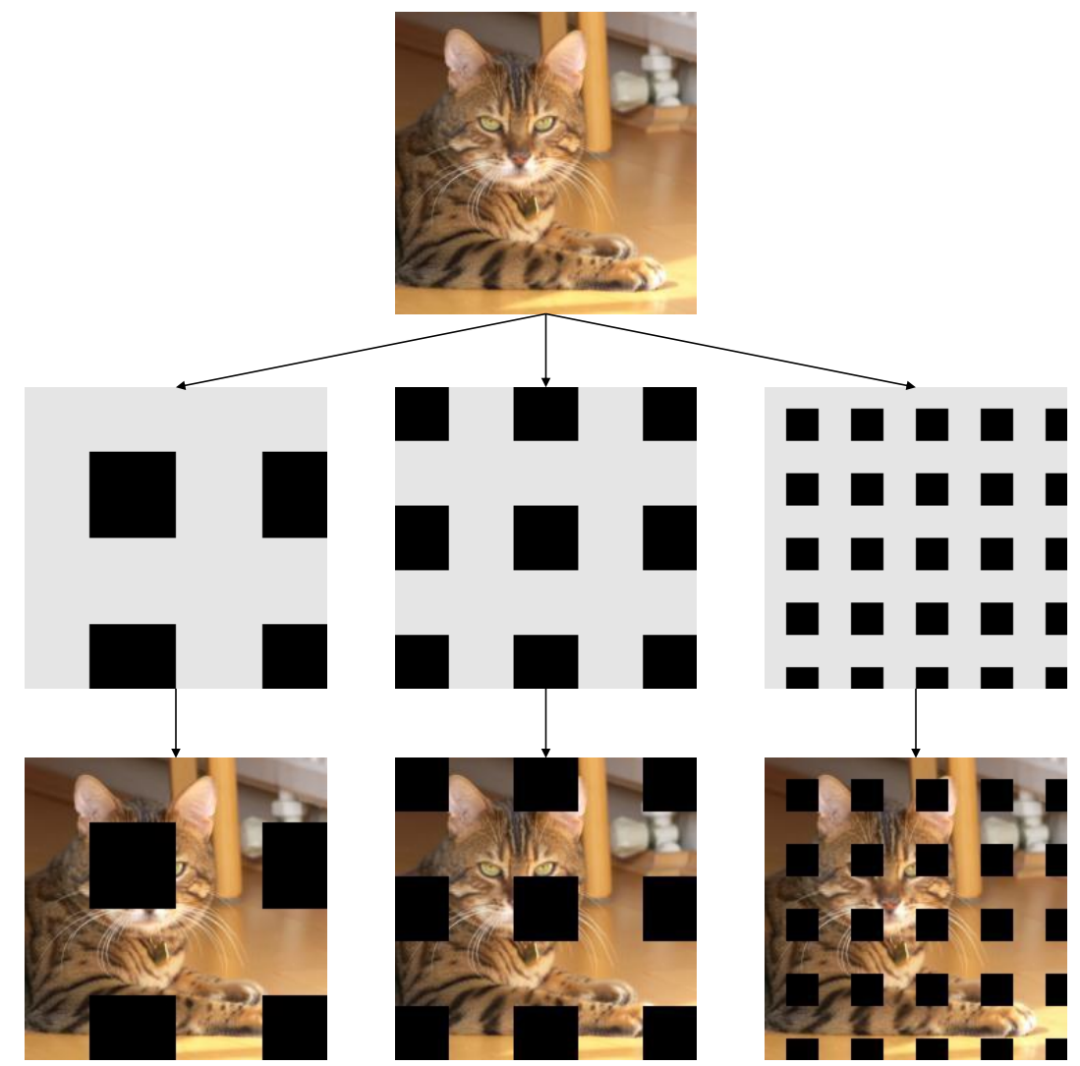

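GridMask generates a mask with the same resolution as the input image (shown below) and multiplies it element-wise with the image. Gray regions of the mask have value 1 and black regions have value 0: image pixels under a 1 are kept, and pixels under a 0 are removed.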

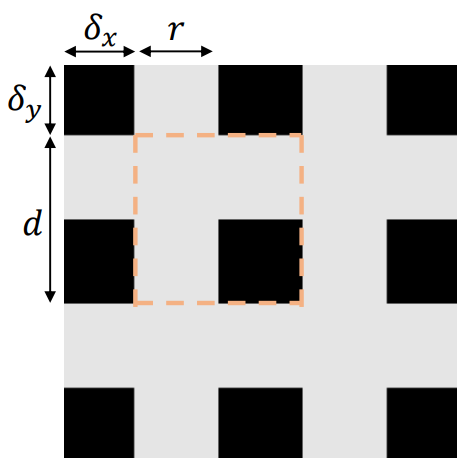

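GridMask is made up of repeated Mask Units (the orange dashed box in the figure below). Each Mask Unit is described by four parameters $(r, d, \delta_x, \delta_y)$: $r$ is the ratio of the Mask Unit that is kept, $d$ is the side length of a Mask Unit, and $\delta_x$ and $\delta_y$ are the offsets of the Mask Unit relative to the top-left corner of the image.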
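To make the four parameters concrete, here is a minimal sketch in plain Python (no NumPy, so it runs anywhere). The function names `grid_mask` and `apply_mask`, and the choice to place the dropped square in the bottom-right corner of each unit, are assumptions made for illustration; they are not taken from the BEVFormer code.

```python
def grid_mask(height, width, r, d, delta_x, delta_y):
    """Build a binary mask: 1 keeps a pixel, 0 drops it."""
    keep_len = round(r * d)  # length of the kept strip inside each unit
    mask = []
    for i in range(height):
        row = []
        for j in range(width):
            # Position of this pixel inside its mask unit, after the offset.
            in_y = (i - delta_y) % d
            in_x = (j - delta_x) % d
            # Assumed layout: the dropped square has side d - keep_len and
            # sits in the bottom-right corner of the unit.
            dropped = in_y >= keep_len and in_x >= keep_len
            row.append(0 if dropped else 1)
        mask.append(row)
    return mask


def apply_mask(image, mask):
    """Element-wise product of a 2D image and a mask of the same size."""
    return [[p * m for p, m in zip(img_row, mask_row)]
            for img_row, mask_row in zip(image, mask)]


mask = grid_mask(height=8, width=8, r=0.5, d=4, delta_x=1, delta_y=3)
image = [[10 * i + j for j in range(8)] for i in range(8)]
masked = apply_mask(image, mask)
kept = sum(map(sum, mask)) / 64
print(kept)  # 0.75
```

With r = 0.5 each 4x4 unit loses a 2x2 square, so three quarters of the pixels survive regardless of the offsets.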

    import math

    import numpy as np
    import torch
    import torch.nn as nn
    from PIL import Image


    class Grid(object):
        def __init__(self, d1, d2, rotate = 1, ratio = 0.5, mode=1, prob=0.8):
            self.d1 = d1
            self.d2 = d2
            self.rotate = rotate
            self.ratio = ratio
            self.mode = mode
            self.st_prob = self.prob = prob

        def set_prob(self, epoch, max_epoch):
            self.prob = self.st_prob * min(1, epoch / max_epoch)

        def __call__(self, img):
            # Apply GridMask only with probability self.prob
            if np.random.rand() > self.prob:
                return img
            h = img.size(1)
            w = img.size(2)

            # 1.5 * h, 1.5 * w works fine with the squared images
            # But with rectangular input, the mask might not be able to recover back to the input image shape
            # A square mask with edge length equal to the diagonal of the input image
            # will be able to cover all the image spot after the rotation. This is also the minimum square.
            hh = math.ceil((math.sqrt(h*h + w*w)))

            d = np.random.randint(self.d1, self.d2)  # side length of one mask unit
            # ratio is the fraction of image information kept;
            # it controls the size of the masked regions
            self.l = math.ceil(d*(1-math.sqrt(1-self.ratio)))  # see the GridMask paper for the formula

            # The mask is larger than the image: its side is the image diagonal,
            # so that it still covers the image after rotation
            mask = np.ones((hh, hh), np.float32)
            st_h = np.random.randint(d)
            st_w = np.random.randint(d)

            # The loops below zero out cross-shaped stripes; inverting the mask
            # afterwards zeroes the squares instead (the black boxes in Figure 4)
            for i in range(-1, hh//d+1):
                s = d*i + st_h
                t = s+self.l  # l is the stripe width
                s = max(min(s, hh), 0)  # clamp to the mask boundary
                t = max(min(t, hh), 0)
                mask[s:t,:] = 0
            for i in range(-1, hh//d+1):
                s = d*i + st_w
                t = s+self.l
                s = max(min(s, hh), 0)  # clamp to the mask boundary
                t = max(min(t, hh), 0)
                mask[:,s:t] = 0

            r = np.random.randint(self.rotate)  # rotation angle in degrees, in [0, rotate)
            mask = Image.fromarray(np.uint8(mask))
            mask = mask.rotate(r)
            mask = np.asarray(mask)
            # Crop the center of the mask to the image size
            mask = mask[(hh-h)//2:(hh-h)//2+h, (hh-w)//2:(hh-w)//2+w]

            if self.mode == 1:
                mask = 1-mask

            # Debug output: fraction of pixels kept, useful for checking ratio
            print(np.sum(mask)/(h*w))

            mask = torch.from_numpy(mask).float()
            mask = mask.expand_as(img)
            # Pixels where the mask is 1 are kept; pixels where it is 0 are removed
            img = img * mask

            return img
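The stripe-width formula can be checked with a little arithmetic. `Grid.__call__` zeroes horizontal and vertical stripes of width `l` in every period `d`, so before inversion a fraction `(1 - l/d)^2` of the mask is still 1. The sketch below evaluates that expression in plain Python; the helper names are hypothetical, and rotation and cropping are ignored, so the real mask only matches approximately.

```python
import math


def stripe_width(d, ratio):
    """Width of the zeroed stripes, as computed in Grid.__call__."""
    return math.ceil(d * (1 - math.sqrt(1 - ratio)))


def ones_fraction_mode1(d, ratio):
    """Fraction of ones in the final mask when mode == 1 (no rotation)."""
    l = stripe_width(d, ratio)
    untouched = (1 - l / d) ** 2   # squares still at 1 before inversion
    return 1 - untouched           # mode == 1 inverts the mask


for d in (10, 50, 200):
    print(d, stripe_width(d, 0.75), ones_fraction_mode1(d, 0.75))
```

For `ratio = 0.75` the stripes take up half of each period and the inverted mask keeps 75% of the pixels, so in mode 1 `ratio` behaves as the keep ratio. With `mode == 0` the mask is not inverted, and the fraction of ones is the `untouched` term instead.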

    class GridMask(nn.Module):
        def __init__(self, d1, d2, rotate = 1, ratio = 0.5, mode=0, prob=1.):
            super(GridMask, self).__init__()
            self.rotate = rotate
            self.ratio = ratio
            self.mode = mode
            self.st_prob = prob
            self.grid = Grid(d1, d2, rotate, ratio, mode, prob)

        def set_prob(self, epoch, max_epoch):
            # Set the GridMask probability according to the current epoch
            self.grid.set_prob(epoch, max_epoch)

        def forward(self, x):
            if not self.training:
                return x  # skip GridMask at test time
            n,c,h,w = x.size()
            y = []
            for i in range(n):
                y.append(self.grid(x[i]))  # apply GridMask to each image separately
            y = torch.cat(y).view(n,c,h,w)
            return y
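The `set_prob` schedule ramps the GridMask probability linearly from 0 up to its starting value and then holds it. A small sketch of the same expression as a standalone function follows; `grid_prob` is a hypothetical name and `max_epoch=24` is an arbitrary value chosen for illustration.

```python
def grid_prob(st_prob, epoch, max_epoch):
    """Same expression as Grid.set_prob, written as a pure function."""
    return st_prob * min(1, epoch / max_epoch)


for epoch in (0, 6, 12, 24, 30):
    print(epoch, grid_prob(0.8, epoch, max_epoch=24))
```

The probability reaches `st_prob` at `max_epoch` and stays there for any later epoch.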

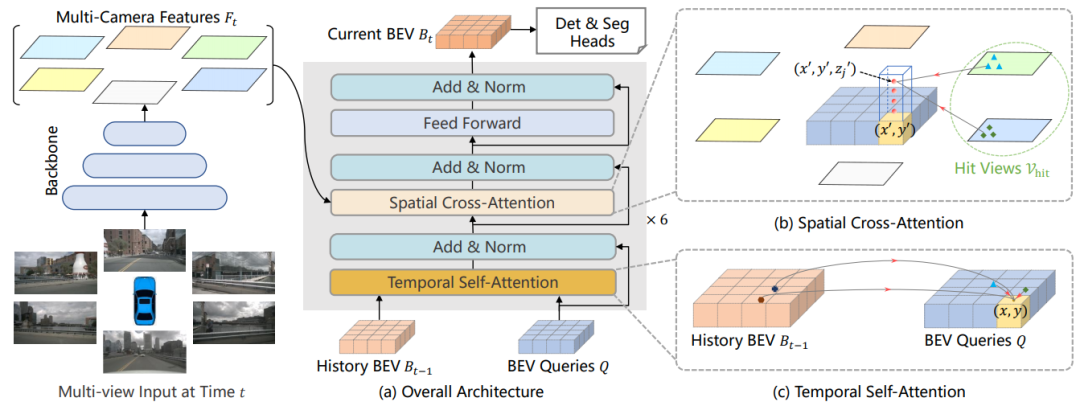

2. Spatial Cross-Attention

2.1 3D BEV Query

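BEVFormer's input for each frame is a set of high-resolution surround-view images, so applying vanilla Multi-Head Attention over them is too expensive. The authors therefore built Spatial Cross-Attention on top of Deformable Attention: each BEV Query interacts only with its regions of interest (ROIs) in the multi-camera views, which greatly reduces the computational cost.

Deformable Attention is designed for 2D, so it needs some adjustments for the 3D scene:

- Lift each query on the BEV plane to a pillar-like query, and sample 3D reference points from the pillar.

Each 2D BEV Query is lifted into a pillar, and points are sampled along the Z axis to obtain 3D points at different heights.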

    import torch


    def get_reference_points(H, W, Z=8, num_points_in_pillar=4, dim='3d', bs=1, device='cuda', dtype=torch.float):
        """Get the reference points used in SCA and TSA.

        Args:
            H, W: spatial shape of bev.
            Z: height of pillar.
            num_points_in_pillar: number of points sampled uniformly
                from each pillar.
            device (obj:`device`): The device where
                reference_points should be.
        Returns:
            Tensor: for dim='3d', normalized reference points with
                shape (bs, num_points_in_pillar, H * W, 3).
        """
        # ......
        if dim == '3d':
            # num_points_in_pillar: number of sampled points in each pillar
            zs = torch.linspace(0.5, Z - 0.5, num_points_in_pillar, dtype=dtype,
                                device=device).view(-1, 1, 1).expand(num_points_in_pillar, H, W) / Z
            xs = torch.linspace(0.5, W - 0.5, W, dtype=dtype,
                                device=device).view(1, 1, W).expand(num_points_in_pillar, H, W) / W
            ys = torch.linspace(0.5, H - 0.5, H, dtype=dtype,
                                device=device).view(1, H, 1).expand(num_points_in_pillar, H, W) / H
            ref_3d = torch.stack((xs, ys, zs), -1)
            ref_3d = ref_3d.permute(0, 3, 1, 2).flatten(2).permute(0, 2, 1)
            ref_3d = ref_3d[None].repeat(bs, 1, 1, 1)
            # [batch_size, num_points_in_pillar, H * W, 3]
            return ref_3d
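To see what the 3D branch produces, here is a minimal sketch that reproduces the same normalized coordinates in plain Python for a tiny BEV grid. The helpers `linspace` and `pillar_reference_points` are hypothetical stand-ins written for this example; the batch dimension is omitted.

```python
def linspace(start, stop, num):
    """Evenly spaced values including both endpoints."""
    if num == 1:
        return [start]
    step = (stop - start) / (num - 1)
    return [start + i * step for i in range(num)]


def pillar_reference_points(H, W, Z=8, num_points_in_pillar=4):
    """Normalized (x, y, z) points, indexed as [height_level][h * W + w]."""
    zs = [z / Z for z in linspace(0.5, Z - 0.5, num_points_in_pillar)]
    xs = [x / W for x in linspace(0.5, W - 0.5, W)]
    ys = [y / H for y in linspace(0.5, H - 0.5, H)]
    return [[(xs[w], ys[h], z) for h in range(H) for w in range(W)]
            for z in zs]


ref_3d = pillar_reference_points(H=2, W=3)
print(len(ref_3d), len(ref_3d[0]))   # 4 6
print(ref_3d[0][0])                  # first BEV cell, lowest sampled height
```

Every BEV cell gets the same four heights, and each coordinate is the center of its cell divided by the grid size, so all values fall strictly between 0 and 1.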

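- Project the 3D points in the pillar to 2D points in the views, and sample features from the ROIs in the hit views.

The 3D points are projected onto the 2D image planes using the camera parameters. Each projected 2D point lands in only some of the views, and the views it lands in are called Hit Views.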

Source: https://zhuanlan.zhihu.com/p/539925138
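The two steps above can be summarized by the Spatial Cross-Attention formula from the BEVFormer paper. The notation below is written from the paper's definitions as best recalled, so treat the exact symbols as a sketch rather than a quotation:

```latex
\mathrm{SCA}(Q_p, F_t) = \frac{1}{|\mathcal{V}_{hit}|}
  \sum_{i \in \mathcal{V}_{hit}} \sum_{j=1}^{N_{ref}}
  \mathrm{DeformAttn}\big(Q_p,\ \mathcal{P}(p, i, j),\ F_t^{i}\big)
```

Here $Q_p$ is the BEV Query at BEV position $p$, $\mathcal{V}_{hit}$ is the set of Hit Views, $N_{ref}$ is the number of 3D reference points sampled from the pillar, $\mathcal{P}(p, i, j)$ is the projection of the $j$-th 3D point onto the $i$-th view, and $F_t^{i}$ is the feature map of the $i$-th camera.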

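Converting BEV Query coordinates to pixel coordinates takes three steps: 1) from BEV Query coordinates to the Lidar coordinate system; 2) from the Lidar coordinate system to the Camera coordinate system; 3) from the Camera coordinate system to the pixel coordinate system.

The coordinate transformation formulas are widely available, so the derivation is skipped here. The final formulas are as follows.

Let a 3D point in the Lidar coordinate system be $(x_l, y_l, z_l)^T$, and let $R$ and $t$ be the rotation matrix and translation vector that take Lidar coordinates to Camera coordinates. Then:

$$
\begin{bmatrix} x_c \\ y_c \\ z_c \end{bmatrix} = R \begin{bmatrix} x_l \\ y_l \\ z_l \end{bmatrix} + t
$$

The transformation from the Camera coordinate system to the pixel coordinate system, with $K$ the camera intrinsic matrix, is:

$$
z_c \begin{bmatrix} u \\ v \\ 1 \end{bmatrix} = K \begin{bmatrix} x_c \\ y_c \\ z_c \end{bmatrix}
$$

All of the translations, rotations, and camera intrinsics above are combined into a single coordinate transformation matrix (lidar2img in the code).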

    # `info` and `input_dict` are defined by the surrounding code (not shown)
    image_paths = []
    lidar2img_rts = []
    lidar2cam_rts = []
    cam_intrinsics = []
    for cam_type, cam_info in info['cams'].items():
        image_paths.append(cam_info['data_path'])
        # obtain lidar to image transformation matrix
        lidar2cam_r = np.linalg.inv(cam_info['sensor2lidar_rotation'])
        lidar2cam_t = cam_info[
            'sensor2lidar_translation'] @ lidar2cam_r.T
        # Built in row-vector form here; transposed below before use
        lidar2cam_rt = np.eye(4)
        lidar2cam_rt[:3, :3] = lidar2cam_r.T
        lidar2cam_rt[3, :3] = -lidar2cam_t
        intrinsic = cam_info['cam_intrinsic']
        # Pad the intrinsic matrix to 4x4
        viewpad = np.eye(4)
        viewpad[:intrinsic.shape[0], :intrinsic.shape[1]] = intrinsic
        lidar2img_rt = (viewpad @ lidar2cam_rt.T)
        lidar2img_rts.append(lidar2img_rt)

        cam_intrinsics.append(viewpad)
        lidar2cam_rts.append(lidar2cam_rt.T)

    input_dict.update(
        dict(
            img_filename=image_paths,
            lidar2img=lidar2img_rts,
            cam_intrinsic=cam_intrinsics,
            lidar2cam=lidar2cam_rts,
        ))
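As a sanity check on how lidar2img is assembled, the sketch below builds the same matrix in plain Python for a made-up calibration and projects one point. The rotation, translation, and intrinsic values are hypothetical, and the inverse rotation is taken as a transpose, which is valid only because the input is a rotation matrix.

```python
def matmul(a, b):
    """Product of two matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def transpose(m):
    return [list(col) for col in zip(*m)]


# Hypothetical calibration: camera rotated 90 degrees about the lidar z axis.
sensor2lidar_rotation = [[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]
sensor2lidar_translation = [1.0, 2.0, 3.0]
intrinsic = [[500.0, 0.0, 320.0], [0.0, 500.0, 240.0], [0.0, 0.0, 1.0]]

# The inverse of a rotation matrix is its transpose.
lidar2cam_r = transpose(sensor2lidar_rotation)
# Translation part of the inverse transform: -R^{-1} t
lidar2cam_t = [-sum(lidar2cam_r[i][k] * sensor2lidar_translation[k]
                    for k in range(3)) for i in range(3)]
lidar2cam = [lidar2cam_r[i] + [lidar2cam_t[i]] for i in range(3)]
lidar2cam.append([0.0, 0.0, 0.0, 1.0])

# Pad the 3x3 intrinsics to 4x4, as viewpad does in the excerpt.
viewpad = [row + [0.0] for row in intrinsic] + [[0.0, 0.0, 0.0, 1.0]]
lidar2img = matmul(viewpad, lidar2cam)

# A point 10 m in front of the camera, expressed in the lidar frame.
point_cam = [1.0, 0.5, 10.0]
point_lidar = [sum(sensor2lidar_rotation[i][k] * point_cam[k] for k in range(3))
               + sensor2lidar_translation[i] for i in range(3)]
homogeneous = [[c] for c in point_lidar + [1.0]]
x, y, depth, _ = [row[0] for row in matmul(lidar2img, homogeneous)]
u, v = x / depth, y / depth
print(u, v, depth)  # 370.0 265.0 10.0
```

The third component of the product is the depth along the camera axis, which is why the projection code later divides by it and rejects points whose depth is not positive.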

The coordinate transformation is implemented as follows:

a) Map the reference points back to the Lidar coordinate system. pc_range is the real-world physical extent covered by the BEV features.

    reference_points[..., 0:1] = reference_points[..., 0:1] * \
        (pc_range[3] - pc_range[0]) + pc_range[0]
    reference_points[..., 1:2] = reference_points[..., 1:2] * \
        (pc_range[4] - pc_range[1]) + pc_range[1]
    reference_points[..., 2:3] = reference_points[..., 2:3] * \
        (pc_range[5] - pc_range[2]) + pc_range[2]

b) Convert to homogeneous coordinates.

    reference_points = torch.cat(
        (reference_points, torch.ones_like(reference_points[..., :1])), -1)

c) Project the reference points into the pixel coordinate system.

    # (bs, D, num_query, 4) -> (D, bs, num_query, 4)
    reference_points = reference_points.permute(1, 0, 2, 3)
    D, B, num_query = reference_points.size()[:3]
    num_cam = lidar2img.size(1)

    # Repeat every point for every camera: (D, B, num_cam, num_query, 4, 1)
    reference_points = reference_points.view(
        D, B, 1, num_query, 4).repeat(1, 1, num_cam, 1, 1).unsqueeze(-1)

    # (D, B, num_cam, num_query, 4, 4)
    lidar2img = lidar2img.view(
        1, B, num_cam, 1, 4, 4).repeat(D, 1, 1, num_query, 1, 1)

    reference_points_cam = torch.matmul(lidar2img.to(torch.float32),
                                        reference_points.to(torch.float32)).squeeze(-1)

Filter out invalid points, keeping only those that lie in front of the camera (z > 0) and fall inside the image.

    eps = 1e-5  # small positive depth threshold

    bev_mask = (reference_points_cam[..., 2:3] > eps)
    # Perspective divide, clamping the depth to at least eps
    reference_points_cam = reference_points_cam[..., 0:2] / torch.maximum(
        reference_points_cam[..., 2:3], torch.ones_like(reference_points_cam[..., 2:3]) * eps)

    # Normalize pixel coordinates by image width and height
    reference_points_cam[..., 0] /= img_metas[0]['img_shape'][0][1]
    reference_points_cam[..., 1] /= img_metas[0]['img_shape'][0][0]

    bev_mask = (bev_mask & (reference_points_cam[..., 1:2] > 0.0)
                & (reference_points_cam[..., 1:2] < 1.0)
                & (reference_points_cam[..., 0:1] < 1.0)
                & (reference_points_cam[..., 0:1] > 0.0))

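This completes the projection of the 3D BEV Queries onto the 2D images shown in the figure above, along with filtering out the points that fall outside the regions of interest.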
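The whole chain, from a normalized reference point to a validity flag, can be traced for a single point in plain Python. This is a minimal sketch under stated assumptions: the function name `project_reference_point`, the `pc_range` values, and the `lidar2img` matrix (a 640x480 front camera with focal length 500 px looking along lidar +x) are all hypothetical.

```python
def project_reference_point(ref, pc_range, lidar2img, img_w, img_h, eps=1e-5):
    """Return ((u, v) normalized by image size, valid) for one point."""
    # a) de-normalize from [0, 1] to metric lidar coordinates
    x = ref[0] * (pc_range[3] - pc_range[0]) + pc_range[0]
    y = ref[1] * (pc_range[4] - pc_range[1]) + pc_range[1]
    z = ref[2] * (pc_range[5] - pc_range[2]) + pc_range[2]
    # b) homogeneous coordinates
    point = (x, y, z, 1.0)
    # c) multiply by the 4x4 lidar2img matrix
    cam = [sum(row[k] * point[k] for k in range(4)) for row in lidar2img]
    depth = cam[2]
    valid = depth > eps
    # perspective divide, guarding against tiny or negative depth
    u = cam[0] / max(depth, eps) / img_w
    v = cam[1] / max(depth, eps) / img_h
    valid = valid and 0.0 < u < 1.0 and 0.0 < v < 1.0
    return (u, v), valid


# Hypothetical camera: principal point (320, 240), looking along lidar +x.
lidar2img = [[320.0, -500.0, 0.0, 0.0],
             [240.0, 0.0, -500.0, 0.0],
             [1.0, 0.0, 0.0, 0.0],
             [0.0, 0.0, 0.0, 1.0]]
pc_range = [-50.0, -50.0, -5.0, 50.0, 50.0, 3.0]

front = project_reference_point((0.6, 0.5, 0.5), pc_range, lidar2img, 640, 480)
behind = project_reference_point((0.4, 0.5, 0.5), pc_range, lidar2img, 640, 480)
print(front)
print(behind)
```

The first point sits 10 m ahead of the camera and lands inside the image, so it is valid. The second sits 10 m behind it, fails the depth test, and would be masked out for this view.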

References

- https://zhuanlan.zhihu.com/p/539925138

- https://blog.csdn.net/weixin_42108183/article/details/128433381

- https://arxiv.org/pdf/2001.04086.pdf

Originally published: 2023-02-18
