017_Explainability and Transparency in Embodied AI: From Black-Box Models to Understandable Agents


安全风信子

Published 2025-11-19 13:27:03


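Introduction

As embodied AI systems are deployed widely in critical domains such as healthcare, autonomous driving, and industrial control, the explainability and transparency of their decision processes have become a central issue for AI safety and trust in 2025. Research suggests that as many as 80% of users want to understand why an intelligent system made a particular decision, especially when that decision may affect personal safety or important interests. This chapter examines explainability and transparency techniques for embodied AI, analyzes the current challenges, introduces advanced methods, and offers a practical implementation framework to help developers build embodied systems that are both efficient and transparent.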

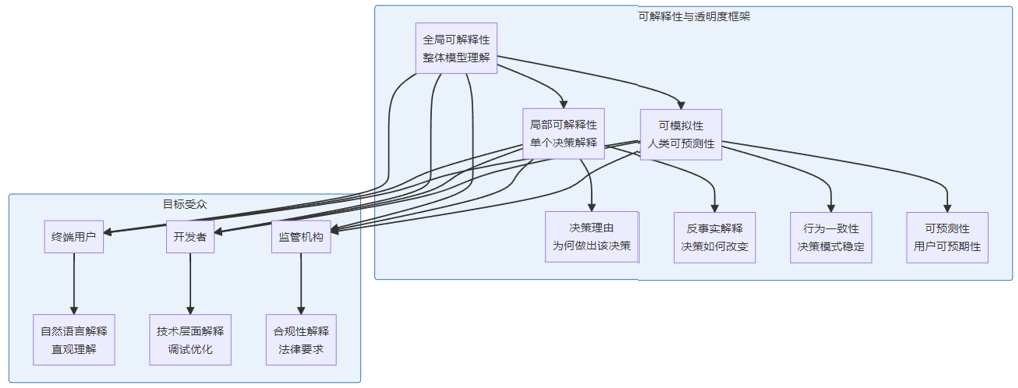

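A Conceptual Framework for Explainability and Transparency

1. Basic concepts and definitions

Explainability and transparency in embodied AI systems span several dimensions: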

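2. Challenges specific to embodied AI explainability

Compared with conventional AI systems, embodied AI faces particular explainability challenges:

- Multimodal perception fusion: the system must explain how data from multiple sensors is combined
- Physical-world interaction: the causal links between decisions and the physical environment are complex
- Real-time requirements: generating explanations must not delay the system's real-time response
- Security considerations: too much transparency can expose vulnerabilities
- Context dependence: the same behavior may call for different explanations in different situations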
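One way to reconcile the real-time requirement above with explanation generation is to take explanation work off the control loop entirely. The following is a minimal sketch under that assumption: the control loop only enqueues a snapshot of the decision, and a worker thread produces the explanation later. `DeferredExplainer` and `explain` are hypothetical names introduced here for illustration, not part of any framework described in this article.

```python
import queue
import threading


def explain(decision, context):
    """Placeholder for a slow explanation routine (hypothetical)."""
    return f"{decision} because of {sorted(context)}"


class DeferredExplainer:
    """Generates explanations on a worker thread, off the control loop."""

    def __init__(self, explain_fn, max_pending=100):
        self._explain_fn = explain_fn
        self._pending = queue.Queue(maxsize=max_pending)
        self.results = []   # filled in by the worker thread
        self.dropped = 0    # requests rejected because the queue was full
        self._worker = threading.Thread(target=self._run, daemon=True)
        self._worker.start()

    def submit(self, decision, context):
        """Called from the control loop; never blocks."""
        try:
            # Copy the context so later mutations do not change the record.
            self._pending.put_nowait((decision, dict(context)))
            return True
        except queue.Full:
            self.dropped += 1
            return False

    def _run(self):
        while True:
            item = self._pending.get()
            if item is None:  # sentinel: stop the worker
                break
            decision, context = item
            self.results.append(self._explain_fn(decision, context))

    def close(self):
        """Process everything still queued, then stop the worker."""
        self._pending.put(None)
        self._worker.join()


explainer = DeferredExplainer(explain)
explainer.submit("stop", {"lidar": 1, "camera": 2})
explainer.close()
print(explainer.results)
```

Dropping requests when the queue is full is a deliberate trade-off in this sketch: it keeps the control loop bounded at the cost of occasionally missing an explanation, which a safety-critical system might instead handle by logging the raw decision for later analysis.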

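3. Evaluation criteria for explainability

Key criteria for evaluating the explainability of an embodied AI system:

| Dimension | Metric | Measurement method | Typical applications |
|---|---|---|---|
| Accuracy | How closely the explanation matches the actual decision process | Expert review, controlled experiments | Medical diagnosis, autonomous driving |
| Completeness | Whether the explanation covers all key decision factors | Coverage analysis, counterexample testing | Safety-critical systems |
| Understandability | How easily users grasp the explanation | User testing, comprehension questionnaires | Consumer applications |
| Timeliness | How quickly the explanation is generated | Performance testing, latency measurement | Real-time control systems |
| Consistency | Whether similar situations receive similar explanations | Repeated testing, variability analysis | Business decision systems |
| Actionability | Whether users can act on the explanation | Action effectiveness testing | Industrial control systems |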

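Explainability Techniques: Categories and Methods

1. Intrinsically interpretable models

Model architectures designed to be interpretable by construction:

- Decision trees and rule systems: transparent decision paths and rule sets
- Linear and generalized linear models: feature weights directly reflect each feature's influence
- Sparse attention mechanisms: highlight the contribution of key inputs to a decision
- Capsule networks: provide explanations through the hierarchical relationships between capsules
- Neuro-symbolic systems: hybrid methods that combine neural networks with symbolic reasoning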
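To illustrate why linear models count as intrinsically interpretable, the sketch below decomposes a linear score into per-feature contributions `w_i * x_i`. The weights, feature names, and bias are made-up values chosen only for illustration.

```python
def linear_contributions(weights, bias, features):
    """Return the score and each feature's additive contribution w_i * x_i."""
    contributions = {name: weights[name] * value for name, value in features.items()}
    score = bias + sum(contributions.values())
    return score, contributions


# Hypothetical weights and inputs for a "should the robot brake?" score.
weights = {"obstacle_proximity": 2.0, "speed": 0.5, "noise_level": -0.1}
features = {"obstacle_proximity": 0.9, "speed": 1.2, "noise_level": 3.0}

score, contributions = linear_contributions(weights, -1.0, features)

# Rank features by the magnitude of their contribution to this decision.
ranked = sorted(contributions, key=lambda name: abs(contributions[name]), reverse=True)
print(f"score={score:.2f}")
for name in ranked:
    print(f"{name}: {contributions[name]:+.2f}")
```

Because the score is an exact sum of the contributions plus the bias, the explanation is the model itself rather than an approximation of it, which is the property post-hoc methods try to recover for black-box models.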

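2. Post-hoc explanation methods

Techniques that explain complex black-box models after the fact:

- Feature importance analysis: identifies the features that most influence a decision
- Local Interpretable Model-agnostic Explanations (LIME): explains a prediction by fitting a simple model in its local neighborhood
- SHAP value analysis: attributes feature contributions using game theory (Shapley values)
- Counterfactual explanations: answer "what if ..." questions
- Attention visualization: visualizes the attention distribution of a neural network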
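The game-theoretic idea behind SHAP can be shown with an exact Shapley value computation on a toy model. This sketch enumerates every feature subset, which is only feasible for a handful of features; real SHAP implementations use approximations. The `brake_score` model and its numbers are hypothetical.

```python
from itertools import combinations
from math import factorial


def shapley_values(value_fn, features):
    """Exact Shapley values for a set function value_fn(frozenset) -> float."""
    n = len(features)
    result = {}
    for feature in features:
        others = [f for f in features if f != feature]
        total = 0.0
        for k in range(n):  # size of the coalition that excludes `feature`
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for subset in combinations(others, k):
                coalition = frozenset(subset)
                marginal = value_fn(coalition | {feature}) - value_fn(coalition)
                total += weight * marginal
        result[feature] = total
    return result


def brake_score(active):
    """Toy model (hypothetical): braking score given the available sensors."""
    score = 0.0
    if "camera" in active:
        score += 0.5
    if "lidar" in active:
        score += 0.3
    if "camera" in active and "lidar" in active:
        score += 0.2  # interaction term: both sensors agree
    return score


phi = shapley_values(brake_score, ["camera", "lidar", "microphone"])
for name, value in phi.items():
    print(f"{name}: {value:.3f}")
```

The interaction term is split equally between camera and lidar, the microphone receives zero because it never changes the output, and the values sum to the difference between the full-coalition score and the empty-coalition score.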

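3. Visual explanation techniques

Methods that turn complex explanations into intuitive visualizations:

- Activation heat maps: show how the input affects model activations
- Decision path diagrams: visualize the path and branches of a decision
- Causal graphs: represent causal relationships between variables
- State transition diagrams: show how the system moves between states
- Dimensionality-reduction visualization: maps a high-dimensional feature space into a low-dimensional space that can be plotted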

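4. Explainable multi-agent collaboration

Explanation mechanisms for multi-agent embodied AI systems:

- Intent communication: agents state their intentions and goals explicitly to one another
- Collaborative explanation frameworks: agents jointly produce a consistent explanation
- Conflict-resolution explanations: explain why decisions conflicted and how the conflict was resolved
- Hierarchical explanations: agents at different levels provide explanations at different granularities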

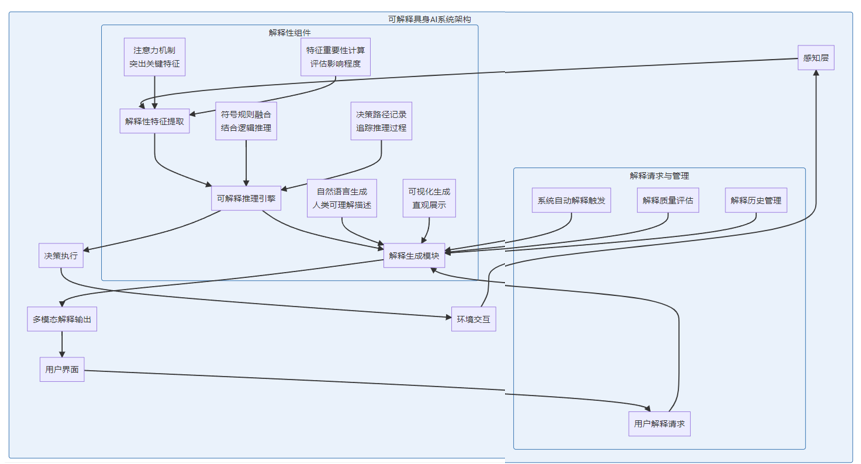

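An Implementation Framework for Embodied AI Explainability

1. Architectural design patterns

Architectural patterns for building explainable embodied AI systems:

2. Core algorithm implementation

An example implementation of the key explainability algorithms for embodied AI: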

```python
# Example multi-level explanation framework for an embodied AI system
from datetime import datetime


class EmbodiedAIExplanationSystem:
    def __init__(self, agent_model, environment_model):
        self.agent_model = agent_model
        self.environment_model = environment_model
        self.explanation_levels = {
            "high_level": {"target": "user", "complexity": "low"},
            "medium_level": {"target": "analyst", "complexity": "medium"},
            "detailed_level": {"target": "developer", "complexity": "high"},
        }
        self.explanation_history = []
        self.feature_importance_cache = {}

    def generate_decision_explanation(self, decision, context, level="high_level"):
        """
        Generate an explanation for a given decision.

        Args:
            decision: the decision made by the agent
            context: decision context, including environment state and history
            level: level of explanation detail

        Returns:
            A structured explanation object (dict)
        """
        if level not in self.explanation_levels:
            raise ValueError(f"Invalid explanation level: {level}")

        # Collect information about the decision
        decision_info = self._collect_decision_info(decision, context)

        # Generate the explanation for the requested level
        if level == "high_level":
            explanation = self._generate_high_level_explanation(decision_info)
        elif level == "medium_level":
            explanation = self._generate_medium_level_explanation(decision_info)
        else:  # detailed_level
            explanation = self._generate_detailed_level_explanation(decision_info)

        # Store the explanation in the history
        explanation_record = {
            "timestamp": datetime.now().isoformat(),
            "decision": decision,
            "context": context,
            "level": level,
            "explanation": explanation,
        }
        self.explanation_history.append(explanation_record)
        return explanation

    def _collect_decision_info(self, decision, context):
        """Collect the information related to a decision."""
        # Feature importance of the perception data
        sensor_data = context.get("sensor_data", {})
        feature_importance = self._compute_feature_importance(sensor_data, decision)
        # Decision path
        decision_path = self._analyze_decision_path(decision)
        # Confidence in the decision
        confidence = self._evaluate_decision_confidence(decision, context)
        # Counterfactual analysis
        counterfactual_analysis = self._generate_counterfactual_analysis(decision, context)
        return {
            "decision": decision,
            "sensor_data": sensor_data,
            "feature_importance": feature_importance,
            "decision_path": decision_path,
            "confidence": confidence,
            "counterfactual_analysis": counterfactual_analysis,
            "environmental_factors": self._identify_environmental_factors(context),
            "temporal_patterns": self._analyze_temporal_patterns(context),
        }

    def _compute_feature_importance(self, sensor_data, decision):
        """Compute how important each perception feature is to the decision."""
        # To keep the example simple, importance values are simulated and cached.
        # A real implementation might use techniques such as SHAP or LIME.
        # The cache key includes the sensor types so that the same decision made
        # with a different sensor set does not reuse a stale result.
        cache_key = (str(decision), tuple(sorted(sensor_data)))
        if cache_key in self.feature_importance_cache:
            return self.feature_importance_cache[cache_key]

        # Simulated importance based on the sensor type
        importance = {}
        for sensor_type in sensor_data:
            if sensor_type == "camera":
                importance[sensor_type] = 0.8  # visual data is usually important
            elif sensor_type == "lidar":
                importance[sensor_type] = 0.7
            elif sensor_type == "microphone":
                importance[sensor_type] = 0.4
            else:
                importance[sensor_type] = 0.3

        # Cache the result
        self.feature_importance_cache[cache_key] = importance
        return importance

    def _analyze_decision_path(self, decision):
        """Analyze the decision path."""
        # Simplified; a real system might trace network activations
        # or the sequence of applied rules.
        return {
            "initial_state": "perception data received",
            "processing_steps": ["feature extraction", "pattern recognition",
                                 "decision generation"],
            "final_state": decision,
        }

    def _evaluate_decision_confidence(self, decision, context):
        """Evaluate the confidence in the decision."""
        return 0.95  # simulated value: assume high confidence

    def _generate_counterfactual_analysis(self, decision, context):
        """Generate a (simplified) counterfactual analysis."""
        return {
            "alternatives": [
                {"action": "alternative_action_1", "probability": 0.03},
                {"action": "alternative_action_2", "probability": 0.02},
            ],
            "critical_factors": ["sensor_data_threshold_met",
                                 "confidence_above_threshold"],
        }

    def _identify_environmental_factors(self, context):
        """Identify the environmental factors that influence the decision."""
        return context.get("environmental_factors",
                           ["lighting_condition", "distance_to_obstacle"])

    def _analyze_temporal_patterns(self, context):
        """Analyze the temporal pattern of the decision (simplified)."""
        return {"reaction_time": 0.15, "preceding_actions": 3}

    def _generate_high_level_explanation(self, decision_info):
        """Generate a high-level explanation aimed at end users."""
        # Pick the most important feature
        sorted_features = sorted(decision_info["feature_importance"].items(),
                                 key=lambda x: x[1], reverse=True)
        primary_feature = sorted_features[0] if sorted_features else None
        return {
            "natural_language": self._generate_natural_language_explanation(
                decision_info["decision"], primary_feature, decision_info["confidence"]
            ),
            "visual_elements": self._generate_visual_elements(decision_info),
            "confidence_indicator": decision_info["confidence"],
            "key_factors": [primary_feature[0]] if primary_feature else [],
        }

    def _generate_medium_level_explanation(self, decision_info):
        """Generate a medium-level explanation aimed at analysts."""
        return {
            "natural_language": self._generate_natural_language_explanation(
                decision_info["decision"], None, decision_info["confidence"],
                detailed=True
            ),
            "feature_importance_chart": decision_info["feature_importance"],
            "decision_path": decision_info["decision_path"],
            "confidence_analysis": {
                "score": decision_info["confidence"],
                "factors": decision_info["counterfactual_analysis"]["critical_factors"],
            },
            "environmental_influences": decision_info["environmental_factors"],
        }

    def _generate_detailed_level_explanation(self, decision_info):
        """Generate a detailed explanation aimed at developers."""
        return {
            "natural_language": self._generate_natural_language_explanation(
                decision_info["decision"], None, decision_info["confidence"],
                technical=True
            ),
            "full_feature_importance": decision_info["feature_importance"],
            "detailed_decision_path": decision_info["decision_path"],
            "counterfactual_analysis": decision_info["counterfactual_analysis"],
            "confidence_breakdown": {
                "score": decision_info["confidence"],
                "components": {  # simulated values
                    "sensor_confidence": 0.9,
                    "model_confidence": 0.98,
                    "context_confidence": 0.96,
                },
            },
            "environmental_factors": decision_info["environmental_factors"],
            "temporal_analysis": decision_info["temporal_patterns"],
        }

    def _generate_natural_language_explanation(self, decision, primary_feature,
                                               confidence, detailed=False,
                                               technical=False):
        """Generate a (simplified) natural-language explanation."""
        base_explanation = (f"The system decided to perform '{decision}' "
                            f"with a confidence of {confidence:.1%}.")
        if primary_feature:
            base_explanation += (
                f" This is based mainly on data from the {primary_feature[0]} sensor, "
                f"whose influence weight on the decision is {primary_feature[1]:.1%}."
            )
        if detailed:
            base_explanation += (" The decision took environmental factors and "
                                 "historical patterns into account and weighed "
                                 "several possible courses of action.")
        if technical:
            base_explanation += (" Technically, the decision was produced by a "
                                 "multi-layer neural network and validated against "
                                 "a confidence threshold to ensure reliability.")
        return base_explanation

    def _generate_visual_elements(self, decision_info):
        """Describe the visual elements of the explanation."""
        # A real implementation would produce the data for the charts here.
        return {
            "type": "simplified_feature_importance",
            "data": decision_info["feature_importance"],
            "interactive": True,
        }

    def generate_counterfactual_query(self, decision, context, what_if_scenario):
        """
        Answer a counterfactual question: "What if ...?"

        Args:
            decision: the original decision
            context: the original context
            what_if_scenario: the hypothetical change, a dict with the keys
                "description" and "factor"

        Returns:
            An explanation of the expected change in the decision
        """
        # Simulated counterfactual analysis. A real implementation might re-run
        # the model or use a dedicated counterfactual reasoning algorithm.
        return {
            "original_decision": decision,
            "what_if_scenario": what_if_scenario,
            "predicted_decision_change": "may change to alternative_action_1",
            "confidence": 0.85,
            "explanation": (
                f"If {what_if_scenario['description']}, the system would very likely "
                f"change its decision, because {what_if_scenario['factor']} is a key "
                f"factor in the current decision."
            ),
        }

    def evaluate_explanation_quality(self, explanation, evaluation_criteria):
        """Evaluate explanation quality (simplified; returns simulated scores)."""
        return {
            "clarity": 0.9,
            "completeness": 0.85,
            "relevance": 0.95,
            "consistency": 0.92,
            "overall_score": 0.9,
        }


# Usage example
def example_explanation_system():
    # Create a mock model and environment
    agent_model = {"type": "embodied_ai_agent", "version": "2.5.0"}
    environment_model = {"type": "simulated_environment", "complexity": "high"}

    # Create the explanation system
    explainer = EmbodiedAIExplanationSystem(agent_model, environment_model)

    # Define a decision and its context
    decision = "Navigate to target point A, avoiding obstacles"
    context = {
        "sensor_data": {
            "camera": "Visual data shows an obstacle 5 meters ahead",
            "lidar": "Lidar detected the obstacle's position in 3D space",
            "microphone": "Ambient sound is normal",
            "gps": "Current position: (X:100, Y:200)",
        },
        "environmental_factors": ["good lighting", "no dynamic obstacles"],
        "historical_actions": ["start navigation", "scan environment", "plan path"],
    }

    # Generate an explanation at the chosen level
    high_level_explanation = explainer.generate_decision_explanation(
        decision, context, level="high_level")
    print("High-level explanation (for users):")
    print(high_level_explanation["natural_language"])

    # Ask a counterfactual question
    what_if_scenario = {
        "description": "the obstacle suddenly moves",
        "factor": "the obstacle's position and motion state",
    }
    counterfactual_response = explainer.generate_counterfactual_query(
        decision, context, what_if_scenario)
    print("\nCounterfactual query result:")
    print(counterfactual_response["explanation"])

    return {
        "high_level_explanation": high_level_explanation,
        "counterfactual_response": counterfactual_response,
    }


if __name__ == "__main__":
    example_explanation_system()
```

3. Multimodal explanation output

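Delivering explanations in several forms to meet different users' needs:

- Natural-language explanations: describe the decision process in plain language
- Visual charts: show the key factors graphically
- Interactive demonstrations: let users explore the decision factors interactively
- Tiered explanations: provide different levels of detail on request
- Contextualized explanations: adapt the explanation to the user's role and situation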

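4. User-adaptive explanations

Adapting the content and form of explanations to the characteristics of the user:

- User model: build a model of the user's knowledge and preferences
- Personalized explanations: adjust the use of technical terms to the user's background
- Feedback-driven optimization: improve explanation quality based on user feedback
- Adaptive complexity: match the complexity of the explanation to the user's level of understanding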
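A minimal sketch of feedback-driven, adaptive complexity follows. It assumes a single expertise score in [0, 1] that moves up or down with user feedback and maps onto the three explanation levels used in the framework above. The `UserModel` class, the feedback labels, and the step size are all hypothetical choices for illustration.

```python
from dataclasses import dataclass

# Explanation levels, ordered from simplest to most detailed.
LEVELS = ["high_level", "medium_level", "detailed_level"]


@dataclass
class UserModel:
    expertise: float = 0.0  # assumed scale: 0 = novice, 1 = expert

    def record_feedback(self, feedback, step=0.2):
        """Nudge the expertise estimate based on the user's feedback."""
        if feedback == "too_simple":
            self.expertise = min(1.0, self.expertise + step)
        elif feedback == "too_complex":
            self.expertise = max(0.0, self.expertise - step)
        elif feedback != "ok":
            raise ValueError(f"Unknown feedback: {feedback}")

    def preferred_level(self):
        """Map the expertise score onto one of the explanation levels."""
        index = min(int(self.expertise * len(LEVELS)), len(LEVELS) - 1)
        return LEVELS[index]


user = UserModel()
print(user.preferred_level())       # starts at the simplest level
user.record_feedback("too_simple")
user.record_feedback("too_simple")
print(user.preferred_level())       # moves to a more detailed level
```

A production user model would likely track more than one dimension (domain knowledge, role, preferred modality), but a single score is enough to show how feedback can steer the level passed to an explanation generator.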

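Transparency Mechanisms for Embodied AI

1. Model transparency

Ensuring an appropriate level of transparency about the model's structure and parameters:

- Model cards: summarize the model's basic information, capabilities, and limitations
- Technical documentation: describe the model architecture and how it works in detail
- Parameter accessibility: make key parameters and configuration accessible
- Training data information: document the source, distribution, and processing of the training data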

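2. Decision transparency

Ensuring that the decision process is transparent:

- Decision logs: record every key decision together with its context
- Decision rationale: state the reason for each important decision
- Uncertainty representation: express the uncertainty of a decision clearly
- Anomaly detection and explanation: automatically detect and explain anomalous decisions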
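The decision log, rationale, and uncertainty items above can be combined in a structured log record. The sketch below writes one JSON object per line and flags low-confidence decisions as anomalous. The field names and the `min_confidence` threshold are assumptions made for this example, and a confidence threshold is only the simplest possible stand-in for real anomaly detection.

```python
import io
import json
from datetime import datetime, timezone


def log_decision(stream, decision, rationale, confidence, context,
                 min_confidence=0.6):
    """Append one decision record to `stream` as a JSON line and return it."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "decision": decision,
        "rationale": rationale,
        "confidence": confidence,
        "uncertainty": round(1.0 - confidence, 6),
        # Simplistic anomaly flag: confidence below an assumed threshold.
        "anomalous": confidence < min_confidence,
        "context": context,
    }
    stream.write(json.dumps(record, ensure_ascii=False) + "\n")
    return record


# An in-memory buffer stands in for a log file in this example.
buffer = io.StringIO()
log_decision(buffer, "stop_and_wait", "dynamic obstacle detected", 0.55,
             {"lidar": "range unreliable"})
log_decision(buffer, "navigate_to_A", "path is clear", 0.95,
             {"camera": "no obstacle"})

entries = [json.loads(line) for line in buffer.getvalue().splitlines()]
for entry in entries:
    print(entry["decision"], entry["uncertainty"], entry["anomalous"])
```

One record per line keeps the log append-only and easy to process incrementally, which matters when decisions are logged at control-loop frequency.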

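3. Behavioral transparency

Ensuring that system behavior is predictable and transparent:

- Behavioral consistency: the system behaves consistently in similar situations
- Boundary statements: the limits of the system's capabilities are stated clearly
- Failure-mode explanations: possible failure modes and their causes are documented
- Transparent safety mechanisms: the safety mechanisms and how they work are disclosed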

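4. Balancing privacy and security

Striking a balance between transparency on one side and privacy and security on the other:

- Differential privacy: apply differential privacy to protect sensitive information in explanations
- Obfuscation: blur sensitive information where appropriate
- Security review: make sure explanations do not reveal system vulnerabilities
- Tiered access control: provide different levels of transparency depending on the user's permissions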
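Two of the items above, tiered access control and differential privacy, can be sketched in a few lines. The role-to-field mapping below is a hypothetical policy, and the Laplace mechanism shown adds independent noise to each score; it does not track a privacy budget across scores or repeated queries, so it should be read as an illustration of the mechanism rather than a complete differentially private release.

```python
import random

# Hypothetical policy: which explanation fields each role may see.
ACCESS_FIELDS = {
    "user": {"natural_language", "confidence_indicator"},
    "analyst": {"natural_language", "confidence_indicator", "feature_importance"},
    "developer": None,  # None means no filtering
}


def filter_explanation(explanation, role):
    """Return only the fields of `explanation` that `role` is allowed to see."""
    allowed = ACCESS_FIELDS[role]
    if allowed is None:
        return dict(explanation)
    return {key: value for key, value in explanation.items() if key in allowed}


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponentials."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def privatize_scores(scores, sensitivity, epsilon, rng=None):
    """Add Laplace noise with scale sensitivity / epsilon to each score."""
    if rng is None:
        rng = random.Random()
    scale = sensitivity / epsilon
    return {name: value + laplace_noise(scale, rng) for name, value in scores.items()}


explanation = {
    "natural_language": "The system decided to stop.",
    "confidence_indicator": 0.95,
    "feature_importance": {"camera": 0.8, "lidar": 0.7},
    "decision_path": ["feature extraction", "decision generation"],
}
print(sorted(filter_explanation(explanation, "user")))
print(privatize_scores(explanation["feature_importance"], sensitivity=1.0, epsilon=0.5))
```

Smaller values of `epsilon` give stronger privacy and noisier scores, so the choice directly trades explanation accuracy against protection of the underlying data.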

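Explainability and Transparency in Practice: Application Cases

1. Medical robot systems

Applications of explainability in medical robotics:

- Surgical decision explanations: explain the basis and risks of surgical-assistance decisions
- Patient-friendly explanations: explain to patients how the robot works and why it is safe
- Regulatory compliance: meet the transparency requirements of medical device regulation
- Medical error analysis: explain the causes of potential medical errors and how to prevent them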

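2. Autonomous driving systems

Implementing explainability in autonomous driving systems:

- Decision visualization: visualize the vehicle's perception and decision process in real time
- Accident analysis: explain the decisions made in accidents and near-misses
- Passenger trust: strengthen passengers' trust in the autonomous driving system
- Regulatory reporting: automatically generate transparency reports that meet regulatory requirements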

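3. Industrial robot systems

Transparency mechanisms for robot systems in industrial settings:

- Transparent operating procedures: explain the robot's operating procedures and safety measures
- Fault diagnosis explanations: automatically diagnose and explain equipment faults
- Efficiency recommendations: offer optimization suggestions, with explanations, based on operating data
- Safe human-robot collaboration: keep safety decisions transparent during human-robot collaboration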

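Evaluating and Validating Explainability

1. Automated evaluation metrics

Automated metrics for assessing the performance of an explainability system:

- Explanation accuracy: how closely the explanation matches the model's actual behavior
- Explanation consistency: whether similar cases receive similar explanations
- Explanation completeness: how fully the explanation covers the key factors
- Explanation conciseness: how brief and information-dense the explanation is
- Generation efficiency: how quickly the explanation is produced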
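As one concrete instance of the consistency metric above, the sketch below scores a group of explanations by the mean pairwise Jaccard similarity of their key-factor sets. Using Jaccard similarity over a `key_factors` field is an assumption made for this example; other similarity measures (for instance rank correlation of importance scores) would be equally valid.

```python
from itertools import combinations


def jaccard(a, b):
    """Jaccard similarity of two collections, treated as sets."""
    a, b = set(a), set(b)
    if not a and not b:
        return 1.0  # two empty sets are considered identical
    return len(a & b) / len(a | b)


def explanation_consistency(explanations, key="key_factors"):
    """Mean pairwise Jaccard similarity of the explanations' key factors."""
    pairs = list(combinations(explanations, 2))
    if not pairs:
        return 1.0  # a single explanation is trivially consistent
    return sum(jaccard(x[key], y[key]) for x, y in pairs) / len(pairs)


# Explanations generated for three similar cases (illustrative data).
similar_case_explanations = [
    {"key_factors": ["camera", "lidar"]},
    {"key_factors": ["camera"]},
    {"key_factors": ["camera", "lidar"]},
]
print(f"{explanation_consistency(similar_case_explanations):.3f}")
```

A score of 1.0 means every explanation in the group cites exactly the same factors, and lower scores indicate that similar cases are being explained differently.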

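2. Human evaluation methods

Evaluating an explainability system with human participants:

- User comprehension tests: assess how well users understand the explanations
- Trust measurement: measure how explanations affect user trust
- Decision-support effect: assess how much explanations improve decision quality
- Expert review: have domain experts review explanation quality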

3. A comprehensive evaluation framework

A framework for evaluating explainability comprehensively:

```python
# Comprehensive explainability evaluation framework for embodied AI
class ExplainabilityEvaluationFramework:
    def __init__(self, explanation_system, test_cases):
        self.explanation_system = explanation_system
        self.test_cases = test_cases
        self.evaluation_results = {}

    def run_comprehensive_evaluation(self):
        """Run the comprehensive evaluation."""
        # Run each kind of evaluation
        self._evaluate_explanation_accuracy()
        self._evaluate_explanation_consistency()
        self._evaluate_explanation_completeness()
        self._evaluate_explanation_understandability()
        self._evaluate_explanation_usefulness()
        # Produce the combined report
        return self._generate_evaluation_report()

    def _evaluate_explanation_accuracy(self):
        """Evaluate explanation accuracy (simplified)."""
        results = []
        for test_case in self.test_cases:
            # Generate the explanation
            explanation = self.explanation_system.generate_decision_explanation(
                test_case["decision"], test_case["context"])
            # Simulated score; in practice, compare against expert judgment
            accuracy_score = 0.92
            results.append({
                "test_case_id": test_case["id"],
                "accuracy_score": accuracy_score,
                "explanation": explanation,
            })
        # max(..., 1) avoids division by zero when there are no test cases
        avg_accuracy = sum(r["accuracy_score"] for r in results) / max(len(results), 1)
        self.evaluation_results["accuracy"] = {
            "average_score": avg_accuracy,
            "detailed_results": results,
        }

    def _evaluate_explanation_consistency(self):
        """Evaluate explanation consistency (simplified)."""
        consistency_results = []
        # Find groups of similar cases
        similar_case_groups = self._identify_similar_case_groups()
        for group in similar_case_groups:
            # Generate an explanation for every case in the group
            explanations = []
            for test_case in group:
                explanation = self.explanation_system.generate_decision_explanation(
                    test_case["decision"], test_case["context"])
                explanations.append(explanation)
            # Score the consistency between the explanations
            consistency_score = self._calculate_explanation_consistency(explanations)
            consistency_results.append({
                "group_size": len(group),
                "consistency_score": consistency_score,
            })
        avg_consistency = (sum(r["consistency_score"] for r in consistency_results)
                           / max(len(consistency_results), 1))
        self.evaluation_results["consistency"] = {
            "average_score": avg_consistency,
            "detailed_results": consistency_results,
        }

    def _identify_similar_case_groups(self):
        """Identify groups of similar test cases."""
        # Simplified; in practice, use a case-similarity algorithm
        if len(self.test_cases) >= 2:
            return [[self.test_cases[0], self.test_cases[1]]]
        return []

    def _calculate_explanation_consistency(self, explanations):
        """Compute the consistency between explanations."""
        # Simplified; in practice, compare the similarity of the explanation content
        return 0.87  # simulated value

    def _evaluate_explanation_completeness(self):
        """Evaluate explanation completeness (simplified)."""
        results = []
        for test_case in self.test_cases:
            explanation = self.explanation_system.generate_decision_explanation(
                test_case["decision"], test_case["context"], level="detailed_level")
            # Simulated score; in practice, check coverage of the key factors
            completeness_score = 0.85
            results.append({
                "test_case_id": test_case["id"],
                "completeness_score": completeness_score,
            })
        avg_completeness = (sum(r["completeness_score"] for r in results)
                            / max(len(results), 1))
        self.evaluation_results["completeness"] = {
            "average_score": avg_completeness,
            "detailed_results": results,
        }

    def _evaluate_explanation_understandability(self):
        """Evaluate explanation understandability."""
        # Simulated human-evaluation results
        self.evaluation_results["understandability"] = {
            "average_score": 0.91,
            "user_groups": [
                {"group": "experts", "score": 0.95},
                {"group": "novices", "score": 0.87},
            ],
        }

    def _evaluate_explanation_usefulness(self):
        """Evaluate explanation usefulness."""
        # Simulated results of a decision-support test
        self.evaluation_results["usefulness"] = {
            "decision_quality_improvement": 0.35,  # decision quality up 35%
            "trust_enhancement": 0.42,             # trust up 42%
            "adoption_increase": 0.28,             # system adoption up 28%
        }

    def _generate_evaluation_report(self):
        """Generate the evaluation report."""
        results = self.evaluation_results
        accuracy = results.get("accuracy", {}).get("average_score", 0)
        consistency = results.get("consistency", {}).get("average_score", 0)
        completeness = results.get("completeness", {}).get("average_score", 0)
        understandability = results.get("understandability", {}).get("average_score", 0)

        # Weighted average of the four component scores
        overall_score = (accuracy * 0.25 + consistency * 0.20 +
                         completeness * 0.25 + understandability * 0.30)

        return {
            "overall_score": overall_score,
            "component_scores": {
                "accuracy": accuracy,
                "consistency": consistency,
                "completeness": completeness,
                "understandability": understandability,
                "usefulness": results.get("usefulness", {}),
            },
            "detailed_results": results,
            "recommendations": self._generate_recommendations(overall_score),
        }

    def _generate_recommendations(self, overall_score):
        """Generate improvement recommendations."""
        if overall_score >= 0.9:
            return ["The system performs very well; fine-tune it to suit more user types"]
        elif overall_score >= 0.8:
            return [
                "Improve explanation consistency, especially in edge cases",
                "Make explanations easier for non-expert users to understand",
                "Speed up explanation generation, especially in real-time scenarios",
            ]
        else:
            return [
                "Redesign the explanation generation algorithm from the ground up",
                "Add more user testing and feedback rounds",
                "Improve the accuracy and completeness of explanation content",
            ]


# Usage example
def example_evaluation():
    # Create a mock explanation system
    class MockExplanationSystem:
        def generate_decision_explanation(self, decision, context, level="high_level"):
            return {"natural_language": f"The system decided: {decision}"}

    # Create the test cases
    test_cases = [
        {
            "id": "case_001",
            "decision": "Navigate to target point A",
            "context": {"sensor_data": {"camera": "normal",
                                        "lidar": "obstacle detected"}},
        },
        {
            "id": "case_002",
            "decision": "Avoid the obstacle",
            "context": {"sensor_data": {"camera": "obstacle detected",
                                        "lidar": "detailed obstacle data"}},
        },
        {
            "id": "case_003",
            "decision": "Stop and wait",
            "context": {"sensor_data": {"camera": "dynamic obstacle detected",
                                        "lidar": "cannot measure range precisely"}},
        },
    ]

    # Create the evaluation framework
    evaluator = ExplainabilityEvaluationFramework(MockExplanationSystem(), test_cases)

    # Run the evaluation
    report = evaluator.run_comprehensive_evaluation()
    print(f"Overall score: {report['overall_score']:.2f}")
    print("Recommendations:")
    for rec in report["recommendations"]:
        print(f"- {rec}")
    return report


if __name__ == "__main__":
    example_evaluation()
```

4. Validation challenges and solutions

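Common challenges in validating explainability, and what addressing them requires:

- Explanation faithfulness: make sure the explanation reflects the model's actual behavior
- User diversity: meet the needs of different user groups
- Domain adaptation: adapt to the specific requirements of each application domain
- Real-time requirements: provide explanations while preserving real-time performance
- Evaluation cost: reduce the time and resources a full evaluation takes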
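One way to probe the first challenge, whether an explanation reflects the model's actual behavior, is a deletion test: remove features in the order the explanation ranks them and check that the model output falls fastest for the features ranked highest. The sketch below uses a toy linear model and made-up importance scores; both are hypothetical, and a real test would run against the deployed model and its actual explanations.

```python
def deletion_curve(model_fn, inputs, importance, baseline=0.0):
    """Replace features with `baseline`, most important first, and record outputs."""
    order = sorted(importance, key=importance.get, reverse=True)
    current = dict(inputs)
    outputs = [model_fn(current)]
    for name in order:
        current[name] = baseline
        outputs.append(model_fn(current))
    return order, outputs


def toy_model(x):
    """Hypothetical model: a weighted sum of three sensor signals."""
    return 0.6 * x["camera"] + 0.3 * x["lidar"] + 0.1 * x["microphone"]


inputs = {"camera": 1.0, "lidar": 1.0, "microphone": 1.0}
# Importance scores as an explanation method might report them (illustrative).
importance = {"camera": 0.8, "lidar": 0.7, "microphone": 0.4}

order, outputs = deletion_curve(toy_model, inputs, importance)
drops = [outputs[i] - outputs[i + 1] for i in range(len(order))]

# A faithful ranking should produce the largest drops first.
is_faithful_ranking = all(drops[i] >= drops[i + 1] for i in range(len(drops) - 1))
print(order)
print([round(d, 2) for d in drops])
print(is_faithful_ranking)
```

If the ranking were unfaithful, for example if the explanation rated the microphone highest, the first deletion would barely change the output and the check would fail, which gives an automated signal that does not depend on human judgment.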

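Future Trends in Explainability and Transparency

1. Technical directions

Directions in which explainability techniques for embodied AI are likely to develop:

- Neuro-symbolic hybrid models: combine the efficiency of neural networks with the interpretability of symbolic systems
- Stronger causal reasoning: improved causal reasoning that supports deeper explanations
- Adaptive explanation generation: adjust the explanation strategy dynamically to the context and the user's needs
- Multimodal explanation fusion: integrate explanations across modalities for a fuller understanding
- Explainable reinforcement learning: make reinforcement learning algorithms more interpretable by design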

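2. Standards and regulation

Trends in explainability-related standards and regulation:

- Industry standards: explainability standards specific to each industry
- Stronger regulatory requirements: stricter rules on transparency and explainability
- Certification schemes: certification and rating systems for explainable systems
- International harmonization: coordination and alignment of explainability standards across countries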

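3. Ethical and social impact

How explainability affects society and ethics:

- Building trust: increases public trust in AI systems
- Accountability: supports the attribution of responsibility and accountability mechanisms
- Privacy balance: requires a balance between transparency and privacy protection
- Fairness: helps ensure that systems behave fairly
- Better human-machine collaboration: enables more effective collaboration between people and machines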

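4. Interdisciplinary research directions

Interdisciplinary research directions for embodied AI explainability:

- Cognitive science: draw inspiration from human cognitive processes
- Linguistics: develop language models that generate more natural and effective explanations
- Psychology: understand how people explain and make sense of complex systems
- Design: apply design thinking to improve the user experience of explanations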

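Conclusion

Explainability and transparency are key to building trustworthy embodied AI systems. As AI is applied more deeply in critical domains, the need to understand how these systems reach their decisions keeps growing. By 2025, a range of effective methods is available for embodied AI explainability, including intrinsically interpretable models, post-hoc explanation techniques, and visualization methods. With a multi-level explanation framework, a system can give each kind of user explanations at an appropriate granularity and in an appropriate form, which strengthens trust, supports decision-making, and helps meet regulatory requirements.

When designing an embodied AI system, treat explainability and transparency as core design principles: plan for them at the architectural level and keep improving them throughout the system's life cycle. As the technology advances and standards mature, embodied AI systems should be able to maintain high performance while offering decision processes that are more transparent, understandable, and trustworthy, and so deliver greater value to society.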

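This article is part of the Tencent Cloud self-media syndication program and is shared from the author's personal site/blog.

Originally published: 2025-10-16. In case of infringement, contact cloudcommunity@tencent.com for removal.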
