elasticsearch python bulk api (elasticsearch-py)

I'm confused about the py-elasticsearch bulk API. @Diolor's solution (https://stackoverflow.com/questions/20288770/how-to-use-bulk-api-to-store-the-keywords-in-es-by-using-python) works, but I would like to use plain es.bulk().

My code:

from elasticsearch import Elasticsearch

es = Elasticsearch()

doc = '''\n {"host":"logsqa","path":"/logs","message":"test test","@timestamp":"2014-10-02T10:11:25.980256","tags":["multiline","mydate_0.005"]} \n'''

result = es.bulk(index="logstash-test", doc_type="test", body=doc)

The error is:

No handlers could be found for logger "elasticsearch"

Traceback (most recent call last):

File "./log-parser-perf.py", line 55, in <module>

insertToES()

File "./log-parser-perf.py", line 46, in insertToES

res = es.bulk(index="logstash-test", doc_type="test", body=doc)

File "/usr/local/lib/python2.7/dist-packages/elasticsearch-1.0.0-py2.7.egg/elasticsearch/client/utils.py", line 70, in _wrapped

return func(*args, params=params, **kwargs)

File "/usr/local/lib/python2.7/dist-packages/elasticsearch-1.0.0-py2.7.egg/elasticsearch/client/__init__.py", line 570, in bulk

params=params, body=self._bulk_body(body))

File "/usr/local/lib/python2.7/dist-packages/elasticsearch-1.0.0-py2.7.egg/elasticsearch/transport.py", line 274, in perform_request

status, headers, data = connection.perform_request(method, url, params, body, ignore=ignore)

File "/usr/local/lib/python2.7/dist-packages/elasticsearch-1.0.0-py2.7.egg/elasticsearch/connection/http_urllib3.py", line 57, in perform_request

self._raise_error(response.status, raw_data)

File "/usr/local/lib/python2.7/dist-packages/elasticsearch-1.0.0-py2.7.egg/elasticsearch/connection/base.py", line 83, in _raise_error

raise HTTP_EXCEPTIONS.get(status_code, TransportError)(status_code, error_message, additional_info)

elasticsearch.exceptions.TransportError: TransportError(500, u'ActionRequestValidationException[Validation Failed: 1: no requests added;]')

The generated url for the POST call is

/logstash-test/test/_bulk

and the POST body is:

{"host":"logsqa","path":"/logs","message":"test test","@timestamp":"2014-10-02T10:11:25.980256","tags":["multiline","mydate_0.005"]}

So I ran the curl by hand. This curl does not work:

> curl -XPUT http://localhost:9200/logstash-test/test2/_bulk -d
> '{"host":"logsqa","path":"/logs","message":"test test","@timestamp":"2014-10-02T10:11:25.980256","tags":["multiline","mydate_0.005"]}
> '
>
> {"error":"ActionRequestValidationException[Validation Failed: 1: no requests added;]","status":500}

So the error is partially okay, but I did expect that elasticsearch.bulk() would manage the input args properly.

The python function is:

bulk(*args, **kwargs)

:arg body: The operation definition and data (action-data pairs), as

either a newline separated string, or a sequence of dicts to

serialize (one per row).

:arg index: Default index for items which don't provide one

:arg doc_type: Default document type for items which don't provide one

:arg consistency: Explicit write consistency setting for the operation

:arg refresh: Refresh the index after performing the operation

:arg routing: Specific routing value

:arg replication: Explicitly set the replication type (default: sync)

:arg timeout: Explicit operation timeout

2 Answers

Stack Overflow user

Posted on 2016-05-05 12:26:08

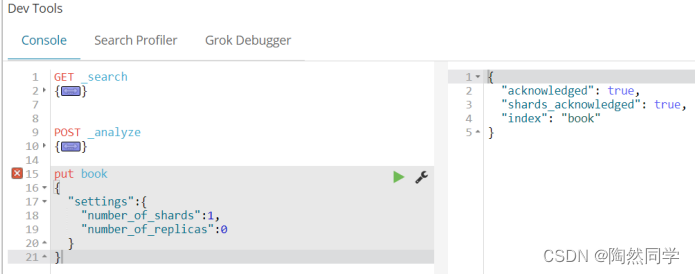

If anyone is currently trying to use the bulk api and wondering what the format should be, here's what worked for me:

doc = [

{

'index':{

'_index': index_name,

'_id' : <some_id>,

'_type':<doc_type>

}

},

{

'field_1': <value>,

'field_2': <value>

}

]

docs_as_string = json.dumps(doc[0]) + '\n' + json.dumps(doc[1]) + '\n'

client.bulk(body=docs_as_string)

Stack Overflow user

Posted on 2014-10-02 21:41:37

@HonzaKral on github

https://github.com/elasticsearch/elasticsearch-py/issues/135

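Hi sir,

the bulk api (like all the others) follows the bulk api format of elasticsearch itself very closely, so the body would have to be:

doc = '''{"index": {}}\n{"host":"logsqa","path":"/logs","message":"test test","@timestamp":"2014-10-02T10:11:25.980256","tags":["multiline","mydate_0.005"]}\n'''

for it to work. Alternatively, it could be a list of those two dicts.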
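To make the action/data pairing concrete, here is a minimal sketch that builds such a body for any number of documents. The helper name `build_bulk_body` is made up for illustration and is not part of elasticsearch-py; the empty `{"index": {}}` action line relies on the default `index` and `doc_type` passed to `es.bulk()`, as in the question.

```python
import json


def build_bulk_body(docs):
    """Return a newline-delimited bulk body: an action line, then a source line, per document."""
    lines = []
    for doc in docs:
        # Empty metadata: index and type fall back to the defaults given to es.bulk().
        lines.append(json.dumps({"index": {}}))
        lines.append(json.dumps(doc))
    # The bulk endpoint expects the body to end with a newline.
    return "\n".join(lines) + "\n"


doc = {
    "host": "logsqa",
    "path": "/logs",
    "message": "test test",
    "@timestamp": "2014-10-02T10:11:25.980256",
    "tags": ["multiline", "mydate_0.005"],
}

body = build_bulk_body([doc])
print(body)

# With a reachable cluster, the call from the question would then be
# (left commented out because it needs a running Elasticsearch node):
# from elasticsearch import Elasticsearch
# es = Elasticsearch()
# result = es.bulk(index="logstash-test", doc_type="test", body=body)
```

The original `doc` string failed because it contained only the source line, so the server found no action to execute ("no requests added").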

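This is a complicated and clumsy format to work with from python, which is why I tried to create a more convenient way to work with bulk in elasticsearch.helpers.bulk (0). It just accepts an iterator of documents, extracts any optional metadata from them (like _id, _type etc.), and constructs (and executes) the bulk request for you. For more information about the accepted format, see the docs for streaming_bulk on the same page, which is a helper that processes the stream iteratively (one document at a time from the user's point of view, batched in chunks in the background).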
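As a rough sketch of the helper approach described above: the generator below yields one action dict per document, with metadata under `_index` and `_type` and the document itself under `_source`. The name `generate_actions` and the sample values are assumptions for illustration. `_type` applies to the 1.x client used in the question, since later Elasticsearch versions dropped document types.

```python
def generate_actions(docs, index, doc_type):
    """Yield one action dict per document, in the shape elasticsearch.helpers.bulk accepts."""
    for doc in docs:
        yield {
            "_index": index,
            "_type": doc_type,  # only meaningful on older versions that still have document types
            "_source": doc,
        }


docs = [
    {
        "host": "logsqa",
        "path": "/logs",
        "message": "test test",
        "@timestamp": "2014-10-02T10:11:25.980256",
        "tags": ["multiline", "mydate_0.005"],
    }
]

actions = list(generate_actions(docs, "logstash-test", "test"))
print(actions)

# With a reachable cluster, the helper builds and sends the bulk request itself
# (left commented out because it needs a running Elasticsearch node):
# from elasticsearch import Elasticsearch, helpers
# es = Elasticsearch()
# success, errors = helpers.bulk(es, generate_actions(docs, "logstash-test", "test"))
```

Passing the generator rather than a list lets the helper consume documents lazily and send them in chunks.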

Hope this helps.

0- http://elasticsearch-py.readthedocs.org/en/master/helpers.html#elasticsearch.helpers.bulk

https://stackoverflow.com/questions/26159949
