大数据上使用模糊集的字符串相似度

我有一个文件,在该文件中,我将检查特定列中名称中的字符串相似性。我使用模糊令牌排序比算法,因为它是我的用例所需要的。这是代码,有什么方法可以加快速度吗?30000张唱片花了很多时间。

import csv

import itertools

import pandas as pd

from fuzzywuzzy import fuzz

data = pd.read_csv('D:\\Sim_Input.csv')

Dishes = data['Product_Name']

threshold_ratio = 85

with open('D:\\Sim_Score.csv', 'w') as f1:

writer = csv.writer(f1, delimiter='\t', lineterminator='\n', )

writer.writerow(['tag4'])

for str_1, str_2 in itertools.permutations(Dishes, 2):

list = []

ratio = (fuzz.token_sort_ratio(str_1, str_2))

if ratio > threshold_ratio:

row = (str_1, str_2, ratio)

list.append(row)

print(list)

writer.writerow(list)我需要一个csv文件的输出与名称的ItemA,ItemB和相似性评分,其中的相似性应该在85以上。

回答 2

Code Review用户

发布于 2018-05-04 07:31:15

为了避免对每一道菜进行多次处理,您可以使用它只处理一次:

dishes = ["pizza with bacon", "pizza with extra cheese", "vegetarian pizza", "cheese and bacon pizza", "bacon with pizza"]

processedDishes = []

for dish in dishes:

processedDishes.append(fuzz._process_and_sort(dish, True, True))

for dish1, dish2 in itertools.combinations(enumerate(processedDishes), 2):

if fuzz.ratio(dish1[1], dish2[1]) >= 85:

print(dishes[dish1[0]], dishes[dish2[0]])然后,可以将其与@scnerd解决方案结合起来,以添加多处理。

如果您知道您的数据都是相同类型的,则可以进一步优化它:

dishes = ["pizza with bacon", "pizza with extra cheese", "vegetarian pizza", "cheese and bacon pizza", "bacon with pizza"]

processedDishes = []

matchers = []

for dish in dishes:

if dish:

processedDish = fuzz._process_and_sort(dish, True, True)

processedDishes.append(processedDish)

matchers.append(fuzz.SequenceMatcher(None, processedDish))

for dish1, dish2 in itertools.combinations(enumerate(processedDishes), 2):

matcher = matchers[dish1[0]]

matcher.set_seq2(dish2[1])

ratio = int(round(100 * matcher.ratio()))

if ratio >= 85:

print(dishes[dish1[0]], dishes[dish2[0]])Update:检查了这些比率是如何计算的,这里有一个更有效的答案,它避免了对之间的大量检查:

dishes = ["pizza with bacon", "pizza with extra cheese", "vegetarian pizza", "cheese and bacon pizza", "bacon with pizza", "a"]

processedDishes = []

matchers = []

for dish in dishes:

if dish:

processedDish = fuzz._process_and_sort(dish, True, True)

processedDishes.append({"processed": processedDish, "dish": dish})

processedDishes.sort(key= lambda x: len(x["processed"]))

for idx, dish in enumerate(processedDishes):

length = len(dish["processed"])

matcher = fuzz.SequenceMatcher(None, dish["processed"])

for idx2 in range(idx + 1, len(processedDishes)):

dish2 = processedDishes[idx2]

if 2 * length / (length + len(dish2["processed"])) < 0.85: # upper bound

break

matcher.set_seq2(dish2["processed"])

if matcher.quick_ratio() >= 0.85 and matcher.ratio() >= 0.85: # should also try without quick_ratio() check

print(dish["dish"], dish2["dish"])Code Review用户

发布于 2018-05-03 09:56:25

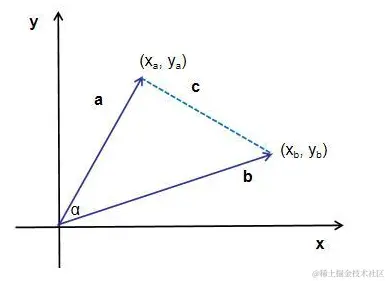

第一个算法建议是使用itertools.combinations而不是.permutations,因为您不关心订单。这假定为fuzz.token_sort_ratio(str_1, str_2) == fuzz.token_sort_ratio(str_2, str_1)。有一半的组合,因为有排列,所以这给你一个免费的2x加速比。

这段代码也很容易并行化。在i7 (8个虚拟核,4个物理核)上,您可能会期望这会给您带来4-8x的加速,但这取决于许多因素。要做到这一点,最不麻烦的方法是使用multiprocessing.Pool.map或.imap_unordered:

import multiprocessing as mp

def ratio(strings):

s1, s2 = strings

return s1, s2, fuzz.token_sort_ratio(s1, s2)

with open('D:\\Sim_Score.csv', 'w') as f1:

writer = csv.writer(f1, delimiter='\t', lineterminator='\n', )

writer.writerow(['tag4'])

with mp.Pool() as pool:

for s1, s2, r in pool.imap_unordered(ratio, itertools.combinations(Dishes, 2)):

if r > threshold_ratio:

writer.writerow([(s1, s2, r)])总之,我预计这些变化会给你5-10倍的加速,这在很大程度上取决于你可用的核心数量。

作为参考,生成器理解版本可能如下所示:

with mp.Pool() as pool:

writer.writerows((s1, s2, r)

for s1, s2, r in pool.imap_unordered(ratio, itertools.combinations(Dishes, 2))

if r > threshold_ratio)与非理解版本相比,该版本的性能有了一些提高,但几乎没有提高,而IMO更难阅读/维护。

我在测试代码时注意到的另一件小事是,fuzzywuzzy建议安装python-Levenshtein以便更快地运行;当我这样做时,它的运行速度比使用内置SequenceMatcher时慢20倍。当我卸载python-Levenshtein时,它又恢复了速度。这在我看来很奇怪,但这肯定是值得一试的。

最后,如果性能很重要,您可以考虑深入了解fuzz.token_sort_ratio所做的工作,看看是否可以删除一些重复的工作。例如,每次传入每个字符串时,它都会再次对其进行标记化和排序,因此您可能可以对字符串进行预标记/排序,并且只在主循环中运行比率逻辑。快速挖掘告诉我,token_sort_ratio是两个主要步骤:

- 使用

fuzz._process_and_sort对每个字符串进行预处理 - 对已处理的字符串运行

fuzz.partial_ratio

目前我无法让这个方法运行得更快,但是如果我能让这个方法运行得很好,我将编辑和更新这个答案。

https://codereview.stackexchange.com/questions/193567

复制