How do I reshape the (1006, 19) output of a Keras regression prediction into a (1006, 1) NumPy array?

Asked 2021-04-22 04:19:09

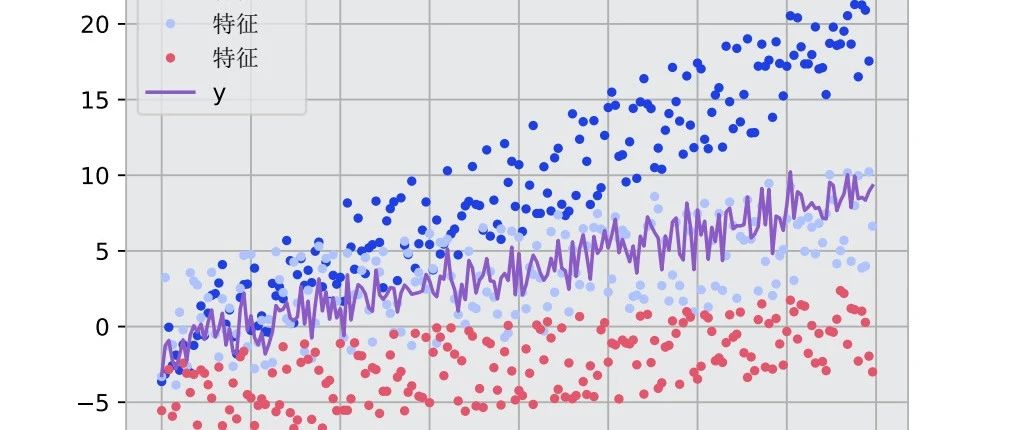

I am trying to create a stock prediction model in both PyTorch and Keras. I followed some online tutorials and modified them to fit my data, and that works well.

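Now I am converting that code into an equivalent Keras model. I have created the model and made predictions, but the problem is that the regressor.predict() function from Keras returns a (1006, 19) NumPy array, whereas predictions = model(x_test) returns a (1006, 1) array, which is what I need for the rest of my work so that I can plot the results.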

So far, this is my Keras code:

from keras.models import Sequential

from keras.layers import LSTM, Dense, Dropout

lookback = 20

x_train_keras, y_train_keras, x_test_keras, y_test_keras = split_data(price, lookback)

print('x_train.shape = ',x_train_keras.shape) # x_train.shape = (1006, 19, 1)

print('y_train.shape = ',y_train_keras.shape) # y_train.shape = (1006, 1)

print('x_test.shape = ',x_test_keras.shape) # x_test.shape = (252, 19, 1)

print('y_test.shape = ',y_test_keras.shape) # y_test.shape = (252, 1)

regression = Sequential()

regression.add(LSTM(units=50, return_sequences=True, kernel_initializer='glorot_uniform', input_shape=(x_train_keras.shape[1],1)))

regression.add(Dropout(0.2))

regression.add(LSTM(units=50,kernel_initializer='glorot_uniform',return_sequences=True))

regression.add(Dropout(0.2))

regression.add(LSTM(units=50,kernel_initializer='glorot_uniform',return_sequences=True))

regression.add(Dropout(0.2))

regression.add(LSTM(units=50,kernel_initializer='glorot_uniform',return_sequences=True))

regression.add(Dropout(0.2))

regression.add(Dense(units=1))

regression.compile(optimizer='adam', loss='mean_squared_error')

from keras.callbacks import History

history = History()

history = regression.fit(x_train_keras, y_train_keras, batch_size=30, epochs=100, callbacks=[history])

train_predict_keras = regression.predict(x_train_keras)

train_predict_keras = train_predict_keras.reshape((train_predict_keras.shape[0], train_predict_keras.shape[1]))

predict = pd.DataFrame(scaler.inverse_transform(train_predict_keras))

original = pd.DataFrame(scaler.inverse_transform(y_train_keras))

fig = plt.figure()

fig.subplots_adjust(hspace=0.2, wspace=0.2)

plt.subplot(1,2,1)

ax = sns.lineplot(x=original.index, y=original[0], label='Data', color='royalblue')

ax = sns.lineplot(x=predict.index, y=predict[0], label='Training Prediction', color='tomato')

ax.set_title('Stock Price', size=14, fontweight='bold')

ax.set_xlabel("Days", size = 14)

ax.set_ylabel("Cost (USD)", size = 14)

ax.set_xticklabels('', size=10)

plt.subplot(1,2,2)

ax = sns.lineplot(data=history.history.get('loss'), color='royalblue')

ax.set_xlabel("Epoch", size = 14)

ax.set_ylabel("Loss", size = 14)

ax.set_title("Training Loss", size = 14, fontweight='bold')

fig.set_figheight(6)

fig.set_figwidth(16)

# Make predictions

test_predict_keras = regression.predict(x_test_keras)

# Invert predictions

train_predict_keras = scaler.inverse_transform(train_predict_keras)

y_train_keras = scaler.inverse_transform(y_train_keras)

test_predict_keras = scaler.inverse_transform(test_predict_keras.reshape((test_predict_keras.shape[0], test_predict_keras.shape[1])))

y_test = scaler.inverse_transform(y_test_keras)

# Calculate root MSE

trainScore = math.sqrt(mean_squared_error(y_train[:,0], y_train_pred[:,0]))

print(f'Train score {trainScore:.2f} RMSE')

testScore = math.sqrt(mean_squared_error(y_test[:,0], y_test_pred[:,0]))

print(f'Test score {testScore:.2f} RMSE')

# shift train predictions for plotting

trainPredictPlot_keras = np.empty_like(price)

trainPredictPlot_keras[:, :] = np.nan

trainPredictPlot_keras[lookback:len(train_predict_keras)+lookback, :] = train_predict_keras

# shift test predictions for plotting

testPredictPlot_keras = np.empty_like(price)

testPredictPlot_keras[:, :] = np.nan

testPredictPlot_keras[len(train_predict_keras)+lookback-1:len(price)-1, :] = test_predict_keras

original = scaler.inverse_transform(price['Close'].values.reshape(-1,1))

predictions_keras = np.append(trainPredictPlot_keras, testPredictPlot_keras, axis=1)

predictions_keras = np.append(predictions_keras, original, axis=1)

result_keras = pd.DataFrame(predictions_keras)

The error occurs on the line trainPredictPlot_keras[lookback:len(train_predict_keras)+lookback, :] = train_predict_keras, with the message: could not broadcast input array from shape (1006,19) into shape (1006,1)
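The broadcast failure can be reproduced with NumPy alone. The sketch below is only an illustration: the array contents are random, the length of `price` is an assumed value, and the names `plot_buffer` and `last_step` are hypothetical stand-ins rather than names from the code above.

```python
import numpy as np

lookback = 20

# Hypothetical stand-ins for the arrays in the question (random values).
price = np.zeros((1278, 1))                 # assumed length, one column like the 'Close' series
train_predict = np.random.rand(1006, 19)    # one prediction per timestep, as reshaped in the question

# NaN-filled plotting buffer with the same shape as price.
plot_buffer = np.full(price.shape, np.nan)

try:
    # Target slice has shape (1006, 1); the source has shape (1006, 19).
    plot_buffer[lookback:len(train_predict) + lookback, :] = train_predict
    error_message = None
except ValueError as exc:
    error_message = str(exc)

print(error_message)

# An array with one value per sample, shape (1006, 1), fits the slice.
# Taking the last timestep here only demonstrates a compatible shape.
last_step = train_predict[:, -1:]
plot_buffer[lookback:len(last_step) + lookback, :] = last_step
print(last_step.shape)  # (1006, 1)
```

The assignment fails because NumPy can stretch an axis of length 1 to a longer one, but it cannot shrink 19 columns into 1. Whether the last timestep is a meaningful prediction depends on how the model was trained, so the slice should be read as a shape demonstration, not as a recommended fix.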

1 Answer

Stack Overflow user

Accepted answer

Answered 2021-04-22 04:25:54

Set return_sequences to False on the last LSTM layer. You need to do this:

....

....

regression.add(LSTM(units=50, kernel_initializer='glorot_uniform', return_sequences=False))

regression.add(Dropout(0.2))

regression.add(Dense(units=1))

regression.compile(optimizer='adam', loss='mean_squared_error')

Check the documentation:

return_sequences: Boolean. Whether to return the last output in the output sequence, or the full sequence. Default: False.
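To see why this flag changes the prediction shape, here is a minimal NumPy sketch. It assumes a simplified tanh recurrent layer in place of a real LSTM, and `toy_recurrent_layer` and `toy_dense` are hypothetical helpers, not Keras functions. It reproduces only the shape behaviour: a dense layer acts on the last axis, so a sequence output keeps its 19 timesteps.

```python
import numpy as np

def toy_recurrent_layer(x, units, return_sequences, seed=0):
    """Simplified tanh RNN (not an LSTM); used only to illustrate output shapes."""
    rng = np.random.default_rng(seed)
    batch, timesteps, features = x.shape
    w_in = rng.normal(size=(features, units)) * 0.1
    w_rec = rng.normal(size=(units, units)) * 0.1
    h = np.zeros((batch, units))
    outputs = []
    for t in range(timesteps):
        # Update the hidden state from the current input and the previous state.
        h = np.tanh(x[:, t, :] @ w_in + h @ w_rec)
        outputs.append(h)
    if return_sequences:
        return np.stack(outputs, axis=1)  # (batch, timesteps, units)
    return h                              # (batch, units): last timestep only

def toy_dense(x, units, seed=1):
    """Dense layer without bias, applied to the last axis only."""
    rng = np.random.default_rng(seed)
    w = rng.normal(size=(x.shape[-1], units))
    return x @ w

# Same input shape as x_train_keras in the question.
x = np.random.default_rng(42).normal(size=(1006, 19, 1))

seq_out = toy_dense(toy_recurrent_layer(x, 50, return_sequences=True), 1)
last_out = toy_dense(toy_recurrent_layer(x, 50, return_sequences=False), 1)

print(seq_out.shape)   # (1006, 19, 1)
print(last_out.shape)  # (1006, 1)
```

With the full sequence, the final dense layer emits one value per timestep, which is where the 19 comes from once the trailing axis is reshaped away. With only the last output, the result is one value per sample, matching the (1006, 1) shape of y_train_keras.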

Original page content provided by Stack Overflow.

Original link:

https://stackoverflow.com/questions/67213190
